Perceptual Benchmarks

1. Clarifications Regarding Exportability

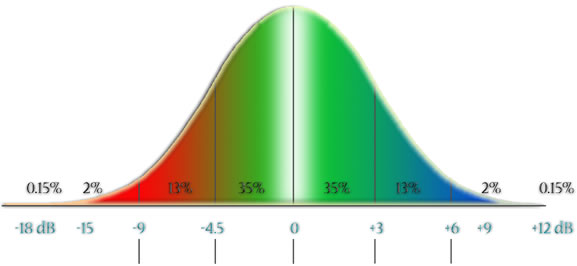

The entire validity of the work described in previous sections, as well as of the ideas developed in the present one, depends on the most reliable possible export of the mastered results to the outside world. For this reason, before continuing, it is important to describe the actual limitations of this transposition with more precision than in previously published documents (1), at the risk of causing disappointment. Today the exportability of the mastered product can be taken for granted, but only from a statistical point of view: there is never an absolute transposition between mastering studio A and the sound system of person B. To understand this crucial point, one can consult the classic distribution curve, here adapted to show the comparative fidelity of home sound systems:

For any given spectral region, about 70% of reproduction systems can be expected to fall within the “reasonable” range of fidelity, between -4.5 and +3 dB around the flat response position (0 dB). That said, for an indeterminate number of spectral regions, the vast majority of systems fall into the 13% or 2% ranges, which are characterized by heavy colouration. Such irregularities in frequency response are the norm even for high-quality systems. Only professional monitoring systems installed in acoustically controlled environments can claim to produce a response within ±2 dB of the centre at all the necessary frequencies. Because it is carried out on such systems, mastering works by virtue of a law of averages:

- it detects anomalies in the response within particular frequency ranges of the systems used for mixing;

- by compensating for these anomalies, it brings the response in those spectral ranges back to the normative position;

- these compensations, along with the general improvements in euphony and transparency, reach 70% of listeners with acceptable fidelity. More precisely, they reach the listener with acceptable fidelity over 70% of the spectral regions.
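The 70%, 13% and 2% figures match the proportions of a normal (Gaussian) distribution. About 68% of values lie within one standard deviation of the mean. About 13.6% lie between one and two standard deviations on each side, and about 2.1% between two and three. The sketch below shows where these figures come from. It assumes that, for a given spectral region, deviations from flat response are normally distributed across systems. This is an idealization: the asymmetric -4.5/+3 dB range indicates that the real curve is skewed.

```python
import math

def normal_cdf(z: float) -> float:
    """Cumulative probability of a standard normal variable at z (in sigmas)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def band_fraction(lo: float, hi: float) -> float:
    """Fraction of a standard normal population lying between lo and hi sigmas."""
    return normal_cdf(hi) - normal_cdf(lo)

# Central "reasonable" band: within one standard deviation of flat response.
central = band_fraction(-1.0, 1.0)
# One outer band: between one and two standard deviations, on one side only.
outer_1_2 = band_fraction(1.0, 2.0)
# One extreme band: between two and three standard deviations, on one side only.
outer_2_3 = band_fraction(2.0, 3.0)

for name, value in [("central", central), ("1-2 sigma", outer_1_2), ("2-3 sigma", outer_2_3)]:
    print(f"{name}: {value:.1%}")
```

Summing both tails outside the central band gives roughly 32%. This is in line with the approximately 30% of spectral regions that fall outside the reasonable range.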

On home reproduction systems, the law of averages also has a number of corollaries that translate into further limitations worth mentioning:

- a large proportion of the 30% of spectral regions that fail the test of exportability lies in the bass range, where the most serious acoustic problems are found in most acoustically uncontrolled rooms;

- this problem is aggravated as soon as anything is done with or to the sub-bass, which is very easily affected by subwoofers. When present at all, subwoofers are typically set either too loud for home-cinema use or too soft for fear of disturbing the neighbours;

- these are also the frequencies where the power shortfalls inherent to mini-systems, computer satellite speakers and iPods make themselves felt: certain full-level peaks below 50 Hz can cause distortion on such systems. Filtering to accommodate amplifiers of 25 W or less, however, penalizes listening on higher-performance systems. There is no solution other than compromise, one way or the other;

- even in the absence of discontinuities in the frequency response, power shortfalls can reduce clarity in full-spectrum passages containing many simultaneous layers. In such cases, even the transparency introduced by mastering cannot be reproduced on equipment of merely mediocre performance.
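The compromise around 50 Hz described in the list above can be made concrete. The article does not specify any filter, so this is a purely hypothetical illustration. It assumes a second-order Butterworth high-pass with a 50 Hz cutoff, values chosen only for the example. The sketch computes the filter's ideal analog magnitude response. The result shows how much sub-bass such a protective filter would also remove for listeners whose systems could reproduce it.

```python
import math

def butterworth_highpass_db(freq_hz: float, cutoff_hz: float, order: int) -> float:
    """Ideal analog Butterworth high-pass magnitude in dB: -10*log10(1 + (fc/f)^(2n))."""
    ratio = (cutoff_hz / freq_hz) ** (2 * order)
    return -10.0 * math.log10(1.0 + ratio)

# Hypothetical protective filter: 50 Hz cutoff, 2nd order (12 dB/octave slope).
for f in (25.0, 40.0, 50.0, 80.0, 100.0):
    print(f"{f:5.0f} Hz: {butterworth_highpass_db(f, 50.0, 2):6.1f} dB")
```

With these assumed settings, content at 25 Hz is attenuated by about 12 dB on every system, including those that could have reproduced it cleanly. Content at 100 Hz is barely affected.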

The other limit to universal exportability is not statistical but is tied to certain types of content. What happens with an æsthetic based on ambiguity, discomfort, assault or any other purely physical phenomenon experienced while listening? The word physical is important. It excludes all musical experiences that suggest ambiguity, discomfort or assault through æsthetic symbolism, since such experiences can still function within the euphonic domain. However, where there is a genuine, concrete attempt to exploit particular audio properties (frequency beating, intermodulation distortion, effects based on phase differences between channels, etc.), experience shows that exportability is almost nil. Except in the most extreme cases of frequency-response fluctuation, the brain seems capable of decoding most properly mastered euphonic propositions, even through a reasonable veil of colouration and distortion. It cannot do the same for projects based on very specific audio phenomena. Such projects will therefore continue to depend on a very high degree of precision in the transposition, i.e. almost flat playback systems, to which most consumers obviously do not have access. For such projects, mastering is of no use. The engineer will certainly perceive the intended effects of discomfort, but in a manner biased with respect to the intentions of the mix. Even if the mixing situation were flat, so that the original intention reached the mastering engineer intact, nothing could be done to ensure that such effects are transmitted to the average consumer.

2. The Principle of Euphony

Mastering is therefore limited to euphonic æsthetics. From an audio point of view, these approaches are defined by the attempt to find the most transparent possible path to the musical content. They therefore offer the listener a minimum of physical aggression and a minimum of ambiguities (related to the nature of the sound object, its use of space, etc.) foreign to the composer’s initial æsthetic intentions. As we saw earlier, the composer can unknowingly introduce most of these aggressions and ambiguities during the production stages of the work. We might add that, since telepathy remains an imperfect science, the bringing to light of the original æsthetic content, i.e. mastering, cannot be infallible. It is conceivable that certain manipulations produce results that are to some extent parallel, rather than identical, to the essential intentions. Some corrections may even occasionally present the content more effectively than the composer had foreseen… which, to date, has provoked no protest from composers.

The goal of this article is to suggest that such deviations will always be limited in number and importance. This is because a number of underlying, permanently operative criteria, which we have named “Perceptual Benchmarks”, guide the mastering operations and ensure that the results are relatively predictable and reliable. Within the limits of euphony, these benchmarks have a certain objective value precisely because they are perceptual rather than semantic, analytic or structural. Because of this approach, the artistic identity of the mastered work remains intact, even though the path to this identity is subjected to a thorough re-evaluation and cleaning.

3. Spectral Preferences

Nearly forty years of mastering practice have shown that once sonic aggressions and the greatest ambiguities are suppressed, the listener becomes more available to the music. The listener is also less prone to reactions triggered by the alarm signals of the body’s various protective systems. This new disposition generally goes hand in hand with the emergence of details or sound layers that were previously masked by the disturbing phenomena.

The objection typically raised at this point is as follows: how can the engineer be sure of isolating and correcting only the disturbing phenomena, while ensuring that his own cultural and spectral preferences have no impact on the work? The answer is simple: such a biased correction simply wouldn’t work! It is one thing to detest the frequency of 250 Hz, but quite another to arbitrarily filter it out of a piece where the booming is really centred at 160 Hz. This would only hide details around 250 Hz that were previously well balanced. Even if the engineer were insensitive enough to force the cut regardless of the cost, the composer would certainly have serious questions about the loss of these details. Further, it would then be difficult to apply the additional correction still needed at 160 Hz, and the likely result would be a severely amputated upper bass. In the end, the disc would sound bad and receive negative reviews, and the engineer’s reputation would suffer.

This answer applies equally to the fears expressed by some composers that mastering might tend to impose a uniform spectral profile on all works, following some fleeting æsthetic or sonic trend. This is possible in pop, insofar as the programme material lends itself to the “flavour of the month”. In electroacoustics, however, the disparity of audio materials makes this hypothesis very unlikely. Even if some audio guru were to decree that 400 Hz must predominate in all works this spring, a given work might well have nothing in this region to work with!

4. Pre-æsthetic Perception

Now that we have defined what falls outside the limits of his power, we can begin to explain how the mastering engineer can effectively do what his job entails: detect incongruities with certainty and correct them efficiently. The explanation may seem disappointing, or reassuringly simple. The engineer is concerned with phenomena on the pre-æsthetic level, which are communicated and interpreted according to a common instinctual base. These phenomena provoke pre-established associative reactions of defense, rejection, pleasure, excitement, etc. Could “instinctual” be replaced by “genetic” here? Should we rather speak of archetypes? As far as mastering is concerned, these questions are secondary and will be left for others to explore. In any case, for the detection of these phenomena to remain restricted to the pre-æsthetic domain, it must obviously be done by someone who already has broad experience of the æsthetic possibilities of the musical genre being mastered.

We should clarify that the perceptual benchmarks described below are nothing more than an attempt to illustrate practical mechanisms used within a specific technique. They can be understood as a collection of tools, a checklist, aids or the like, but should not be taken as a general theory of audio correction.

Although not exhaustive, the list is already quite long. Here we will broadly classify the benchmarks as “benchmarks of rejection” and “positive benchmarks”. A mastering session usually begins with the detection and correction of the most urgent problems. If any time remains (time being another, unfortunately common, limitation), the more subtle problems can be addressed before moving on to the stage of euphonic optimization. This is the order in which the perceptual benchmarks will be presented.

4.1 Benchmarks of Rejection

Benchmarks of rejection can be understood as alarm bells that signal problems to the mastering engineer. The engineer’s job is to evaluate whether intervention is possible and appropriate, select a relevant processing treatment, and anticipate any possible consequences.

- A strongly resonant sound indicates a confined space, potentially suffocating.

- Short or heavily coloured reverberations are also indicators of a confined space (it is telling that the machines and programmes producing this type of reverb are generally considered low-end).

- Resonances and swelling in the bass frequencies — the infamous boominess — are signals of major crises: earthquakes, volcanic eruptions, big cat attacks, etc. Is the anxiety experienced coherent with the content?

- Imprecise or ambiguous spatialization of a source generates tension, or at least an instinctual questioning which may be out of tune with the æsthetic intentions of a given musical phrase.

- Similarly, a poorly defined space evokes situations beyond control, hidden dangers, wild stampedes.

- Distortion is also a sign of crisis situations: attacks, screaming, emergency calls, angry mobs.

- Erratic or unpredictable dynamic curves carry a heightened potential for damage to the auditory mechanism. The protective contraction of the ear’s mechanisms diminishes the precision of auditory perception.

- High frequencies emitted in a raucous and discontinuous manner evoke suffocation, sickness and even agony.

- Tension in the human voice is easily discernible: breaks in the dynamic curve, an upward shift of the formant profile, and general thinning. Taut voices restrict the overall dynamic range because constriction of the throat thins out the spectral curve. The parallel with the problems generally associated with full-band compressors is compelling. The same characteristics can be found in non-vocal audio events and can provoke the same uneasiness.

One can reasonably object that such manifestations may be entirely deliberate on the part of the composer. In that case, systematic corrections would be prejudicial and would cause irreparable damage to the identity of the work. This raises the issue of semantic ambiguity between the latent message of the sound object and its actual manifestation. Do the manifestations reach the ear unequivocally, completely consistent with, or at least clearly related to, the content? Is distortion apparent? A phase problem? A questionable colouration? Anything else that might suggest to the engineer that “something is not quite right”? Is this perhaps another case where exportation fails for technical reasons?

A simplified example would be the appearance of sound “X”, with a resonant bump indicating proximity, at the calmest moment of a section that suggests great distance. Even if the resonance is not disturbing in itself and the sensation of proximity is precise, the mastering engineer will suspect a problem and look into it. If the phenomenon is intentional, this could be confirmed by the presence elsewhere in the piece of a sound “Y”, identical to “X” but without the bump. Other perceptual or stylistic indicators may confirm the interpretation, thereby eliminating the possibility of error. By virtue of the expressive talent generally associated with the title of Composer, a deliberate ambiguity should be obvious. After all, is a musical indicator not typically announced, accompanied or confirmed by other stylistic symbols?

Of course it may be that the ambiguity is not evident to the composer because it is masked by deficiencies in his monitoring system. This deficiency could be confirmed by the systematic reappearance of the same resonant bump for several sound objects in the same frequency range, in both similar and varied contexts.

In the rare case where indicators are absent or contradictory, it remains possible to test one or more interpretations by making trial corrections. One of them may produce a result that clearly stands out from the other attempts and, at the same time, clarifies the entire musical context. If this last approach proves inconclusive, the mastering engineer will simply not risk modifying the sound object.

4.2 Positive Benchmarks

This set of indicators can function in two ways. First, they can be the anticipated goal of a correction prompted by the detection of a benchmark of rejection. In this context they also serve to verify that the correction actually worked. But they can also function completely independently of any benchmark of rejection. In that case they help to detect a positive sensation, embryonic or already perceptible, that would be worth reinforcing. This second type of action would not necessarily produce results more foreign to the composer’s intentions than the corrective actions. Certain tools available to the mastering engineer have a level of quality and efficiency the composer would have wished for but did not have access to. Examples include high-end reverb and stereo widening tools. In other cases, the monitoring system used during the creation of the work may have hindered the quest for euphony. The composer may then have resigned himself to a certain level of sound quality even though the audio offers still more potential for development.

- A wide stereo image composed of uncoloured sounds generates a sensation of exterior spaces, of height: transcendence, control, but also liberty, adventure and discovery.

- Precise spatialization of a source is reassuring: the source is more likely to transmit its own æsthetic message. Multiple layers have an even greater need for transparency and differentiation with regard to their localization, so that their nature can be comfortably interpreted.

- An extremely close sound is by nature uncoloured. Properly spatialized, it generates an impression of reassuring intimacy. It also represents a situation of extreme dynamics, potential yet unused, in which transients are best perceived.

- Some types of controlled resonances in the low-mid range can evoke a range of nuances related to a feeling of security, such as that experienced by the fetus.

- The appeal of extreme high frequencies, provided their flow remains supple and regular, could be associated with the spectral content of the sounds made by babbling babies and by adults during sexual relations: murmurs, sighs.

- Soft dynamic curves send a message of security to the auditory mechanism. As it no longer needs to protect itself, its sensitivity increases.

- A voice perceived as pleasing has identifiable audio characteristics. It has an open sound, with a frequency content that is more spread out and more evenly distributed, and a discreet but notable presence of extremely high frequencies. These frequencies are clearly perceptible without any apparent addition of energy. The dynamic flow is regular and seems to require no control. As with the taut voices described in the previous section, all these characteristics are transposable to non-vocal sonorities, which can generate similar reactions.

An interesting confirmation of the influence of these positive benchmarks is found in the attraction to (or should we say the hypnotic obsession with) dynamic compression in commercial production. Compression makes it possible to combine two of these benchmarks, in principle incompatible, into a single euphoric whole. On the one hand, a limited dynamic range reassures the ear as such, while also confirming that the source of aggression is at a safe distance. On the other hand, the transients let through at the onset of the attack produce a proximity effect. The strong dynamics of the transients thus create a false sense of danger, which is immediately contradicted by the drop in level that follows the attack time. It is a kind of amusement-park pleasure: we get all the goosebumps of a dangerous adventure, but in a completely controlled environment.
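The attack-time mechanism described above can be sketched with a minimal feed-forward compressor. The function name, threshold, ratio and attack/release values are hypothetical choices for illustration, not settings from any particular product. The input is a simplified level step (a quiet passage followed by a sudden loud one) rather than real audio. Because gain reduction is smoothed with an attack time constant, the first loud samples pass almost untouched before the level drops.

```python
import math

def compress(samples, sample_rate, threshold_db=-20.0, ratio=4.0,
             attack_ms=10.0, release_ms=100.0):
    """Hypothetical feed-forward compressor with smoothed gain reduction."""
    # One-pole smoothing coefficients derived from the time constants.
    attack_coef = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release_coef = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    gain_db = 0.0  # current gain; 0 dB means no reduction
    out = []
    for x in samples:
        level_db = 20.0 * math.log10(max(abs(x), 1e-9))
        over_db = level_db - threshold_db
        # Static curve: above threshold, output rises only 1/ratio dB per input dB.
        target_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0 else 0.0
        # Increasing reduction follows the attack time; recovery follows the release time.
        coef = attack_coef if target_db < gain_db else release_coef
        gain_db = coef * gain_db + (1.0 - coef) * target_db
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out

# Simplified level step at 1 kHz: 50 ms quiet (about -26 dB), then 200 ms loud (0 dB).
sample_rate = 1000
signal = [0.05] * 50 + [1.0] * 200
result = compress(signal, sample_rate)
print(f"onset of loud passage: {result[50]:.2f}")
print(f"sustained loud passage: {result[-1]:.2f}")
```

With these settings, the first loud sample comes out at roughly 0.85 of its input level. The sustained portion settles near 0.18, about 15 dB of gain reduction. The transient survives, while the body of the sound is held back.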

5. Mechanisms for Identifying Audio Quality

The cardinal idea, then, is that receptivity to the work is maximized by optimal perceptual conditions at the instinctive level. This transparency is obtained once all the alarm signals provoked by the benchmarks of rejection have been silenced, except those consistent with the content. The æsthetic identity inherent to the work must also be fully supported by the appropriate positive benchmarks.

Obviously, it would be easy to invoke contemporary experiments, particularly in the field of eLearning, to support this notion of receptivity. Such experiments explain how the use of high-definition visual or sound objects has improved learning in users. But it would also be unfair. Research results in this area, like those obtained in the psychology of perception, measure differences in reaction to existing objects. Mastering, by contrast, must invent for itself the entire mechanism that transforms low- or medium-quality sound objects into high-definition objects. No scientific “proof” has been cited to back up the ideas proposed in this publication. This is because mastering engineers simply do not have the luxury of waiting for researchers to arrive at a conclusion, or even a simple definition, that could be implemented in practice and that everyone involved would accept as the final word on what constitutes audio quality.

5.1 Audiophile Vocabulary

It is worth recalling that the ideas developed in this article tend to show that the mechanisms for identifying audio quality are obscure only in appearance: they always relate to perceiving the content in as transparent a manner as possible. Obviously one must be capable of identifying this transparency. The proliferation of biased listening situations has provoked so many misunderstandings that an entire descriptive vocabulary, used by “Audiophiles”, has developed that is as esoteric as it is pedantic. From there it was only a small step to conclude that audio quality itself is essentially subjective. This step was taken by the great majority not only of non-audiophile listeners but also of people working in every audio field.

Amongst professionals, this apparent modesty and “subjective” stance are nonetheless surprising. A significant part of their competence lies in a capacity, which colleagues can confirm, to recognize audio quality immediately and consistently. Furthermore, proof that some people clearly know what they are doing lies in the immense progress made in equipment as well as in the musical products themselves. This strange and almost universal self-denial of competence translates into a marked reluctance among professionals to analyse and write about a practice that is, after all, commonplace. We might hazard a few explanations for this phenomenon:

- the will to distance oneself from the preciousness of audiophile vocabulary;

- the fact that the greater the transparency, the more the content is exposed and the production hidden. To judge the audio quality of a transparent product, one must be capable of speaking about the audio content itself, which calls for a discourse of a completely different nature. It may once have sufficed to report an excess of bass or too much background noise. Today, however, criticism of contemporary audio faces such an immense variety of content that any musical scholar can do no more than shrug. It may still be possible, to a very limited extent, to identify the larger tendencies in classical instrumentation. The proliferation of equipment and commercial production procedures is an entirely different beast: brands, models, microphones, preamps, studios, engineers, producers, processors, software, pressing… All of these can affect the final sound of the product, and they can be extremely difficult to summarize pertinently. Many generalizations, blunders and improvisations have resulted from this change;

- the precise recognition of the sources’ starting points, the interpretation of resonances and of most other perceptual benchmarks… all of these take place in the instinctual domain, without the benefit of any scientific support. In such conditions, few professionals will risk clearly setting out their own ideas on such a primordial aspect of their work. Stranger still, the working and critical listening sessions involving these same professionals are usually very active verbally, and unanimity of opinion is almost systematic…

5.2 Transparency in Electroacoustics

The absence of a credible descriptive discourse, the confusion due to monitoring systems, the resulting apprehensions: none of this seems to affect the clandestine unanimity of professionals on the nature of audio quality and its relation to transparency. This group seems able to detect quality and transparency without a doubt after a single hearing, without the least information about the nature of the sources or their production conditions. A precise mechanism is clearly at work here, and this very precision prevents the incursion of any sort of structural, cultural or semantic analysis.

But can these perceptual benchmarks (our provisional explanation) function in the case of music produced by “unnatural” means? Is it still possible to recognize transparency once spaces, spectral contents and timbres have been heavily transformed or are entirely artificial? Not just electroacoustics, but all types of productions employing synthesis and transformations to varying degrees come to mind. Oddly enough, we note that the positive and negative audio aspects are of the same nature, and just as easy to identify, as in productions involving recorded instruments. It would seem that the universe of abstract sound is also a three-dimensional universe. Its transposition to a fixed medium must therefore meet the same criteria of spatialization and euphony, since it invokes the entire gamut of perceptual benchmarks just as compellingly.

The mastering of these productions will therefore make extensive use of the messages emanating from these benchmarks, both positive and of rejection, in the same pre-æsthetic manner as for other musical genres, to decide the path to take in each individual context. In electroacoustics, this precision can easily be applied to a passage of a few seconds or, when delivery is in stems, to each of the internal components of the passage. We will see that with such a high degree of focus, the composer’s global æsthetic is far from being called into question.

A pre-æsthetic analytical approach, perceptual benchmarks, extreme localization of problems and solutions: all of these concepts are still in development and will need to be refined through further practice. This practice will evolve more effectively if composers look beyond their present community of listeners, which is small but quite tolerant of shortcomings in audio quality. A much larger public, eager for new sonic and musical experiences, awaits electroacoustic composers. But this public, increasingly familiar with transparency in other musical genres, has no reason to accept anachronistic sonic aggressions and discomforts that mastering can only partially correct. The electroacoustic milieu needs to take the measures necessary to achieve a transparency relevant to the genre, so that the public is more receptive to the sonic journeys, explorations and discoveries it offers. The survival of the genre is at stake.

References

- See Dominique Bassal, “La pratique du mastering en électroacoustique,” eContact! 6.3: Questions en électroacoustique / Issues in Electroacoustics (2003), pp. 49–50, section 2.3.4.
