New Behaviours and Strategies for the Performance Practice of Real-Time Notation

This article was originally published as “Performance Practice of Real-Time Notation” in the Proceedings of the 2016 International Conference on Technologies for Music Notation and Representation (TENOR 2016), and was subsequently expanded for publication in eContact! 18.4.

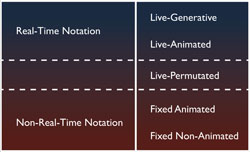

The issue of permanency in notation evokes a continuum delimited by pre-determined and immutable paper or digital scores at one end, and free improvisation at the other. Gerhard Winkler suggests that between these two extremes lies a “Third Way” that is made possible by recent technologies that support various types of real-time notation (Winkler 2004). This emerging practice of using computer screens to display music notation goes by many names: animated notation, automatically generated notation, live-generative notation, live notation or on-screen notation. These new notational paradigms can be separated into two categories: real-time notation and non-real-time notation (Fig. 1).

Real-time notation encompasses scores containing material that is subject to some degree of change during the performance of the piece. Many works fit this definition, from those that reorder pre-determined musical segments in performance to those that are notated entirely in the moment of performance. Non-real-time notation accounts for all, or at least the large majority, of other uses of the computer display as a notational medium; both static (fixed-notation) and animated scores occupy this category. The boundary between these two primary approaches to on-screen notation is not rigid, and examples of the live-permutated score can be found in both categories.

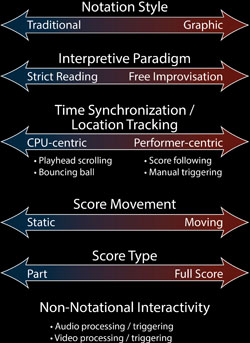

In order to better understand how and where a specific on-screen work sits along this continuum, it will be helpful to further categorize it according to its various attributes (Fig. 2). In both real-time and non-real-time scores, attributes commonly encountered include:

- Notation style;

- Interpretive paradigm;

- Time synchronization and location tracking management;

- Degree of on-screen movement;

- Whether the performer reads from a part or a score;

- Whether there is non-notational interactivity.

Notation style refers to the spectrum between traditional symbol-based notation and graphic notation. Many real-time notation scores, for example, use graphic notation or a combination of traditional symbols and abstract graphics.

The interpretive paradigm of a piece determines whether the performer is expected to perform the music “exactly as written” in the score, or is encouraged — or even obliged — to incorporate some degree of improvisation to interpret the notation.

The methods of time synchronization and location tracking, and the degree of on-screen movement, can be important in solo and ensemble pieces in which musicians read from a computer screen. Drawing on eye-movement research, Lindsay Vickery (2014) and Richard Picking (1997) conclude that a playhead cursor or a scrolling score discourages the rapid eye repositioning associated with expert reading of fixed notation, and instead promotes a detrimental perceptual fixation. By contrast, a bouncing-ball-type tracker conveys expressive and anticipatory tempo information by drawing on the performer’s skill in following a conductor (Shafer 2015b).

Whether the performer reads from a part or a score has implications for ensemble coordination, as well as for the appropriate visual size of the music on the computer screen.

Works using real-time notation often incorporate non-notational interaction, such as audio or video processing that may be independent of, or generated from, what the performer is expected to play. Beyond the challenges of performing real-time notation itself, the performer of such works must also grapple with the issues associated with musique mixte and interactive electroacoustic music. For example, depending on the programming, the performer may not always know what the live electronic component of the work will process or play back in a given performance. An excerpt from the performance instructions for the author’s 2015 work Law of Fives illustrates the kinds of demands this situation can place on performers:

The notation is generated in the moment of performance and requires the performers to sight-read the notation in front of an audience. … The goal of a performance of the piece, therefore, is not about perfect adherence to the demands of the score, but about the interaction between human and artificial intelligence. The performers should attempt to both read the music as accurately as possible and respond to and influence the computer’s musical decisions. (Shafer 2015b)

Absence of Performance Protocols for Real-Time Notation

The performance practice issues of real-time notation share connections with open form music, indeterminacy, complexity, free improvisation and interactivity. These issues, in combination with larger political and educational problems in the musical world, pose a formidable hurdle for many would-be performers (chippewa 2013). Over the past decade or so, a growing number of composers have incorporated real-time notation in their practice despite the inherent difficulties: recent or nascent software, lack of standardization of notational approaches and performance practice, and more. Some have written extensively on real-time notation in an effort to document new software in the field or to explain the technological or theoretical underpinnings of a new work (e.g., McClelland and Alcorn 2008; Lee and Freeman 2013). However, with some notable exceptions, few have presented the problems and newfound freedoms that performers face in such works. The final portion of Jason Freeman’s 2008 article, “Extreme Sight-Reading, Mediated Expression and Audience Participation: Real-time music notation in live performance,” contains an excellent first attempt at a comprehensive guide for the performer. Following his argument that real-time notation can dynamically connect audiences to live performers, Freeman describes the difficulties involved in designing a score suitable for the sight-reading musician and the rehearsal time needed to become familiar with the types of notation a piece is likely to produce. However, he does not discuss performer psychology in any detail, whether in rehearsal or in performance. In addition, his discussion of real-time notation focuses on synchronized ensemble improvisation and audience participation; he does not address real-time scores that employ traditional notation symbols.

Composers of real-time notation works tend to include brief reports on performance practice in their research, often treated as an ancillary issue. Such remarks are sometimes vague and too general to describe a non-standard, emerging performance practice effectively: “The best way to approach the playing of a Real-Time-Score seems to be that of a relaxed, playful ‘testing’ of the system” (Winkler 2010). While benevolent and potentially helpful to some performers, anecdotes and superficial suggestions ignore serious barriers facing performers who approach real-time notation. The trust required among a composer, a performer and a work that exhibits notational agency should not be taken lightly, and with a significant and growing body of works using real-time notation now in existence, we can begin to examine these and other aspects of the practice in more depth.

New Freedoms for Musical Expression

Freedom From Replication

The composer or performer considering whether to engage in the practice of real-time notation might understandably wonder how the added challenges can ultimately benefit a composition. Real-time notation affords both composer and performer new freedoms in live performance and new means of musical expression.

One such freedom is release from the obligation to replicate previous performances of a work. Since the advent of the phonograph, recorded performances have placed an increasingly burdensome tradition on the shoulders of each generation of performers. This problem is not confined to the hallowed ranks of common practice music. Recordings of contemporary compositions, particularly composer-endorsed recordings and recordings by esteemed new music performers, become authoritative in a way that was perhaps unintended. Such documents become a type of Urtext (an Urklang, perhaps) and an immediate arbiter of what counts as an “authentic” performance of a piece.

Bruce Haynes’s remarks about authenticity and values in common practice music are increasingly applicable to new music:

The shortlist of “Masterpieces” that it plays over and over, repeatability and ritualized performance, active discouragement of improvisation, genius-personality and the pedestal mentality, the egotistical sublime, music as transcendent revelation, Absolute Tonkunst… ceremonial concert behavior, and pedagogical lineage. (Haynes 2007, 223)

Those ideals are in contrast to those that Haynes asserts ruled musical events before the nineteenth century:

That pieces were recently composed and for contemporary events, that they were unlikely to be heard again (or if they were, not in quite the same way), that surface details were left to performers, that composers were performers and valued as craftsmen rather than celebrities… and that audiences behaved in a relaxed and natural way. (Ibid.)

Perhaps a concerted effort should be made to return, at least in part, to these ideals today. Among other things, they reveal how little the contemporary performer is expected to act as a necessary creative agent in the music-making process. Paul Thom affirms this line of thinking when he says, “An ideology of replication leaves no room for interpretation; and yet interpretation is a necessity… in performance” (Thom 2011, 97). Real-time notation offers freedom from the shackles of so-called “authenticity” and from the burden of being measured against recordings. It does so through works that defy singular documentation, because the notation changes from one performance to the next. The performer is therefore freed from the burden of replicating something that cannot be fully documented, authorized or deemed “authentic”, and is instead charged with engaging their own creative energies to make music.

Improvisational Freedom

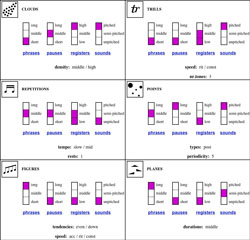

With the performer reinstated as a valued musical interpreter and the obligation of replication lifted, works that use real-time notation also grant the performer a degree of creative licence directly through improvisation. This can range from works with designated aleatoric moments or choices left to the performer, to works that use abstract graphic notation to guide the performer through improvisation, to works that provide a contextual framework with varying degrees of interpretive freedom. Karlheinz Essl’s Champ d’Action (1998), for example, uses a combination of on-screen text and graphic symbols to elicit group improvisation (Fig. 3). Written for an unspecified ensemble of three to seven soloists, the piece asks the musicians to respond to live-generated instructions that couple easily and relatively universally understood parameters (within Western music performance practice, at least), such as phrase length, pause length, pitch register and noisiness, with descriptive classes such as “clouds”, “points” and “planes”. Each performer must first translate these textual instructions to their specific instrument before attempting the loftier goal, “to create relationships by listening and reacting to the sounds that are produced by the other players which could lead to dramatic and extremely intense situations” (Essl 1998). The composer describes the piece as a “real-time composition environment for computer-controlled ensemble” (Ibid.), indicating both the open-form nature of the work and his relinquishing of compositional agency to computer spontaneity and performer creativity.

Interactive Freedom

The interaction between score and performer is one of the most apparent benefits of real-time notation. Some works allow the performer to assume direct control over the content of their own notation or the notation of another performer. This is the case in Jason Freeman’s SGLC (2011) for computers and acoustic instruments, in which an ensemble of laptop performers choose and modify pre-composed musical fragments for an instrumental ensemble to perform in real time (Freeman 2011). While Freeman urges each performer to familiarize themselves with the pre-composed material, he gives complete freedom to the laptop “re-composers” to create loops, add or subtract notes, change dynamics, transpose or otherwise alter the notation. In SGLC, the relationship between the two ensembles might appear adversarial, as the instrumentalists are at the notational mercy of the laptop performers. Freeman attempts to make this hierarchy more egalitarian by encouraging pairs of laptop and instrumental performers to rehearse as a duet, becoming familiar with each other’s behaviours and abilities, before rehearsing as an ensemble: “This unusual setup encourages all of the musicians to share their musical ideas with each other, developing an improvisational conversation over time” (Ibid.).

Freeman’s approach to notational improvisation is representative of the new interactions made possible by real-time notation. One might call this type of interaction permutative interaction, because pre-composed segments are reordered through some mediated process. Such practices suggest a range of new categories of interaction between performers and their notation. These categories include, but are not limited to:

- Formal interaction. The performer can influence aspects of the large-scale structure of a piece.

- Temporal interaction. Rhythmic augmentation, diminution or tempo modulation can change dynamically.

- Local interaction. Surface details of a piece like pitches, rhythms, dynamics, articulations and other expressive elements become dependent on performer input.

In presenting models of interactive performance in computer music, Todd Winkler acknowledges the limitations inherent in the undertaking: “No traditional model is either complete or entirely appropriate for the computer…. Simulation of real-world models is only a stepping stone for original designs idiomatic to the digital medium” (Winkler 2001, 23). Perhaps these new types of interaction using real-time notation move closer toward Winkler’s goal of original, idiomatic designs for interactive computer music.

Ephemerality and Multiplicity

In an age of affordable multi-track audio recorders, digital video cameras and social media hosting platforms such as YouTube and SoundCloud, artists are building (and are indeed expected to build) ever larger personal online portfolios offering glossy documentation of their work. Such documentation often comes to serve as a “litmus test” of an individual work’s existence, against which future performances are judged. In addition, the societal pressure to package, brand and sell a finished artwork can choke out the ephemeral moment of actual music-making, since creating and producing the documentation often takes a significant amount of time, in some cases longer than the creative act itself! Works using real-time notation can address and exploit the beauty of impermanence so often absent in such approaches to creation, and offer a solution to this philosophical and moral problem in the form of multiplicity: each performance presents only one possible version of a work that exists in plurality. To witness one performance is to know only part of a much larger whole. From the performer’s standpoint, each performance is unique, free from any historical burden and from any future comparative critique. The music exists only as it is performed, in that particular moment. Any documentation of an individual performance will inherently fail to represent the work fully.

Computer art pioneers Stephen Todd and William Latham are often credited with the analogy of the artist as a gardener in works that display emergent behaviour: “The artist first creates the systems of the virtual world… then becomes a gardener within this world he has created” (Todd and Latham 1992, 12). The image is well suited to musical works that exhibit multiplicity. Gerhard Winkler, for instance, uses the analogy, describing the composer as someone “who plants ‘nuclei’ or germs, and watches them grow, depending on influences from the environment, in this or that way. All versions are welcome” (Winkler 2004, 5). John Cage made similar remarks about multiplicity regarding his Concert for Piano and Orchestra (1957–58), saying that every performance contributes to a holistic understanding of the piece: “I intend never to consider [the work] as in a final state, although I find each performance definitive” (Cage cited in Kostelanetz 1970, 131). Richard Hoadley uses yet another analogy, asserting that the process of composing a real-time work is similar to mapping the landscape of a geographic territory without describing every rock, tree and bush (Hoadley 2012). In this way, the composer acts as a cartographer, designing a landscape and releasing the performer to explore its details.

Problems in Rehearsal and Performance

Traditional Purposes of Practice and Rehearsal

Alongside the new freedoms of interaction and improvisation, and the release from replication that follows from the ephemerality and multiplicity of works using real-time notation, come practical issues that musicians face in rehearsal and performance. Before considering new approaches, the purposes of practice and rehearsal for traditional, fixed-notation works should be explored. The most self-evident purpose of individual practice is to learn the details of a piece needed for its performance. Some performers describe their practice trajectory as a process in which they first translate notational language into physical gestures, and then gradually link larger and larger musical units together, culminating in a coherent large-scale interpretation (McNutt 2005). Other performers may follow the opposite path, beginning from a theoretical understanding of the entire work and moving towards mastery of the details of each moment. In either case, what is necessary is an understanding of both the specific and the general, the micro and the macro.

Except in the case of solo works, the rehearsal process involves interaction with other players. Each individual’s micro-macro knowledge of the piece, gained during their own private learning and practice, contributes to a cohesive understanding of ensemble interaction relevant to that particular musical work or context. Rehearsal with a computer-mediated audio component, such as sample playback or interactive digital signal processing, adds further complications. In works exploring computer interactivity, a significant part of the available rehearsal time is often spent navigating the technological prosthetics involved: microphones, loudspeakers, pedals, sensors and other devices manipulated, directly or indirectly, by the performer. Further time is then spent, individually and as a group, on problem-solving to ensure that all the devices are compatible and functioning properly. Rehearsal time is also needed to determine the temporal modalities employed in the piece, a crucial aspect that defines how time is controlled and by whom. A fixed temporal modality means that the performer should ensure that their performance conforms to the computer’s model of time, whereas a fluid modality gives the computer and performer independent command. Some pieces use a kind of interactive accompaniment, in which the computer attempts to conform to the performer’s model of time. Finally, rehearsal time is spent discovering and exploring the behaviours of the computer agent. These can take many forms, including traditional score-following, coordinated live-input processing and active human-computer joint improvisation, to name a few (McNutt 2003). The challenges of negotiating an interactive computer part in rehearsal become even more complex when the work involves real-time notation.

New Purposes of and Approaches to Rehearsal with Real-Time Notation

Many of the traditional purposes for practice and rehearsal become irrelevant with works using real-time notation. One of the primary problems for newcomers and veterans alike is the unfamiliar process of rehearsing a piece with a dynamic score. One might ask, “Why rehearse when the notation changes in the moment of performance?” The following is an attempt to answer that question.

While performers of real-time-notated works may still be expected to incorporate more “traditional” aspects of performance practice (translating symbolic notation, surface details and annotations into an informed interpretation of the whole), they must now also engage with the score itself, attending to behaviours that may differ greatly from, or be altogether absent in, more familiar performance contexts such as performing solely on acoustic instruments. Just as a performer rehearsing with an interactive computer part must investigate the behaviours of the computer agent, a performer of real-time notation must discover how the score behaves, and it might behave in a number of ways. The score might, for example, have specific responses to performer input. Alternatively, a tempo-based clock or real-time clock might drive an event schedule that in turn generates notation. Temporal and behavioural correlations may take a myriad of forms, but they can be studied in two broad ways: with an eye for local detail and with an eye for large-scale form. The local detail can be as simple as discovering a set of pre-composed fragments, or as complex as deducing the frequency of rhythmic figures, the probability of pitches or the variety of graphic indications.
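The clock-driven case can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the implementation of any work discussed here: the function names, the (position, label) event format and the event labels are all assumptions. It shows why the distinction matters in rehearsal. Under a tempo-based clock, events are placed in beats, so their timing in seconds shifts whenever the tempo changes. Under a real-time clock, they stay fixed in seconds regardless of tempo.

```python
import heapq


def to_seconds(position, clock, bpm):
    """Convert an event position to seconds for the given clock type (hypothetical helper)."""
    if clock == "tempo":   # position is in beats; timing depends on the tempo
        return position * 60.0 / bpm
    if clock == "real":    # position is already in seconds; tempo is irrelevant
        return float(position)
    raise ValueError(f"unknown clock type: {clock!r}")


def event_times(events, clock, bpm=60.0):
    """Return (seconds, label) pairs in chronological order.

    `events` is an iterable of (position, label) tuples, possibly unsorted.
    """
    queue = [(to_seconds(pos, clock, bpm), label) for pos, label in events]
    heapq.heapify(queue)  # a priority queue, as a live scheduler might use
    return [heapq.heappop(queue) for _ in range(len(queue))]


if __name__ == "__main__":
    # Hypothetical events: positions in beats (tempo clock) or seconds (real clock).
    score_events = [(4, "refresh page"), (0, "show bar 1"), (2, "thin texture")]
    print(event_times(score_events, "tempo", bpm=120))
    # [(0.0, 'show bar 1'), (1.0, 'thin texture'), (2.0, 'refresh page')]
    print(event_times(score_events, "real"))
    # [(0.0, 'show bar 1'), (2.0, 'thin texture'), (4.0, 'refresh page')]
```

In this toy model, the same event list produces different landmarks in time depending on the clock. This is the kind of behaviour a performer might try to identify by running through a piece at different tempi in rehearsal.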

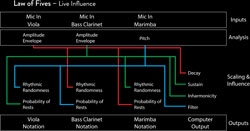

In my quartet for viola, bass clarinet, marimba and computer, Law of Fives (2015), a limited number of predetermined pitches are selected using a probability table and merged with rhythms designed using a permutation algorithm (Video 1). In this piece, the pitches are predictable while their order and associated rhythms are variable. In addition, the probability tables and rhythmic algorithm are modified in real time by control signals coming from microphones near the performers. The amplitude envelope of the viola, for example, influences both the probability of rests in the marimba part and a random permutation factor in the bass clarinet’s rhythms. Each acoustic instrument simultaneously influences the other players’ notation and the parameters driving a synthesizer played by the computer. Since local details depend on multiple performers’ input, the rehearsal process involves understanding how the ensemble’s collective performance affects each individual’s notational output (Fig. 4).
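The kind of coupling described for Law of Fives can be illustrated with a small Python sketch. It is a hypothetical model, not the piece’s actual code. The pitch table and its weights, the linear amplitude-to-rest mapping and the adjacent-swap permutation are all assumptions chosen for clarity. In the model, one player’s normalized amplitude raises another part’s rest probability, and the same reading can serve as a permutation factor for a third part’s rhythm. Because the permutation only reorders durations, bar lengths are preserved.

```python
import random

# Hypothetical pitch set and relative weights; the actual tables in Law of Fives are not given here.
PITCH_TABLE = {"C4": 4, "E4": 2, "G4": 2, "Bb4": 1, "D5": 1}


def rest_probability(amplitude, low=0.0, high=0.5):
    """Map one player's normalized amplitude (0..1) onto another part's rest probability."""
    a = min(max(amplitude, 0.0), 1.0)  # clamp noisy microphone readings to 0..1
    return low + (high - low) * a


def permute_rhythm(cell, factor, rng):
    """Swap adjacent durations; `factor` (0..1) sets how many swaps are made.

    Swapping only reorders durations, so the total length of the cell is preserved.
    """
    durations = list(cell)
    if len(durations) < 2:
        return durations
    for _ in range(round(factor * len(durations))):
        i = rng.randrange(len(durations) - 1)
        durations[i], durations[i + 1] = durations[i + 1], durations[i]
    return durations


def generate_bar(rng, cell, p_rest, perm_factor):
    """Pair a permuted rhythm with pitches drawn from the weighted table, or with rests."""
    pitches = list(PITCH_TABLE)
    weights = list(PITCH_TABLE.values())
    bar = []
    for duration in permute_rhythm(cell, perm_factor, rng):
        if rng.random() < p_rest:
            bar.append(("rest", duration))
        else:
            bar.append((rng.choices(pitches, weights=weights)[0], duration))
    return bar


if __name__ == "__main__":
    rng = random.Random(5)   # fixed seed so a rehearsal run can be repeated
    viola_amp = 0.8          # e.g. a normalized envelope reading from the viola microphone
    cell = [1.0, 0.5, 0.5, 2.0]
    # The viola's amplitude raises the marimba's rest probability...
    print(generate_bar(rng, cell, rest_probability(viola_amp), perm_factor=0.0))
    # ...and serves as the bass clarinet's rhythmic permutation factor.
    print(generate_bar(rng, cell, p_rest=0.0, perm_factor=viola_amp))
```

In a model like this, the pitch collection stays stable while rests and rhythmic order depend on the ensemble. This mirrors the distinction drawn above between what is predictable and what is variable.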

If local detail defies the predictability just described, the performer might benefit from studying the large-scale form. Rehearsal should afford the performer an opportunity to play the piece multiple times in order to gain a sense of pre-planned or emergent forms. One possibility is that the notational behaviour changes significantly at certain time points. This is a strategy employed in Law of Fives, where large-scale changes in tempo, texture, orchestration and tessitura are predictable throughout the course of the piece and are repeatable from one performance to the next. In other works, one might find that a certain behaviour x always follows a different behaviour y, or some more sophisticated arrangement. Another attribute that one can study is the general difficulty level and the modulation of that difficulty throughout the work. Perhaps the piece begins simply and moves in a trajectory toward complexity in a process of accumulation, or moves conversely from complexity toward simplicity in a process of filtering.

Some behaviours lie outside of either composer or performer control. A work like Nick Didkovsky’s Zero Waste (2001) involves a sight-reading pianist whose performance is recorded by a computer and re-notated for the performer to play again. The piece creates a performer-computer-notation feedback loop that highlights inaccuracies in human performance and errors in the computer analysis of the performance, not to mention inadequacies in symbolic notation. The composer describes the outcomes of this process:

Zero Waste amplifies the resonances of a system which is characterized by the limitations of human performance and common music notation. If the performer were perfect, and if music transcription and notation were both theoretically and practically perfect, then Zero Waste would consist of identical repetitions of the first two measures. Of course, no sight reader is perfect, and notation must strike a balance between readability and absolute accuracy, so each new pair of measures diverges and evolves a bit more from the last. (Didkovsky 2001, 1)

The notation mechanism in Zero Waste creates the possibility for a chaotically deterministic form in which certain long-term behaviours can emerge. One behaviour is an increased number of rests near the beginning of each system due to the small size of the analysis window and performer hesitation. Another is the oscillating accumulation and dissolution of chord clusters regulated by rhythmic quantization error, at one limit, and the difficulty of playing such chords, at the other.
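Didkovsky’s description suggests a simple way to reason about the loop: perform, transcribe, re-notate and repeat. The Python sketch below is a hypothetical toy, not the Zero Waste software. The quantization grid, the two-bar analysis window and the performer model (a fixed hesitation plus Gaussian timing error) are assumptions, and the sketch tracks only note onsets, not pitches or chords. With a perfect performer and on-grid material, it reproduces the initial notation unchanged, as Didkovsky notes. With hesitation, notes drift later, leaving rests at the start of the window and eventually falling out of it.

```python
import random

GRID = 0.25     # hypothetical quantization step, in beats
WINDOW = 8.0    # hypothetical analysis window: two bars of 4/4


def transcribe(onsets, grid=GRID, window=WINDOW):
    """Quantize played onsets to the grid and keep only those inside the window."""
    snapped = {round(t / grid) * grid for t in onsets}  # round() sends ties to the even grid index
    return sorted(t for t in snapped if 0.0 <= t < window)


def sight_read(onsets, rng, jitter=0.0, hesitation=0.0):
    """A crude sight-reader: each onset is delayed by `hesitation` plus Gaussian timing error."""
    return [t + hesitation + (rng.gauss(0.0, jitter) if jitter else 0.0) for t in onsets]


def feedback_loop(initial, generations, rng, **performer):
    """Play, transcribe, re-notate, repeat; return every generation of the notation."""
    history = [sorted(initial)]
    for _ in range(generations):
        history.append(transcribe(sight_read(history[-1], rng, **performer)))
    return history


if __name__ == "__main__":
    rng = random.Random(7)
    for generation in feedback_loop([0.0, 1.0, 1.5, 3.0], 4, rng, jitter=0.08, hesitation=0.1):
        print(generation)
```

Even this crude model shows how small, systematic performer tendencies can accumulate into audible long-term behaviour when the notation is fed back to the performer.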

Another situation that evades composer and performer control is audience participation. Works like Kevin Baird’s No Clergy (2005) and Jason Freeman’s Graph Theory (2005) crowdsource certain compositional decisions, which makes them difficult to rehearse. In this case, simulating audience feedback in rehearsal, while far from optimal, can at least help clarify which parameters can be anticipated and which are more likely to be subject to chance. Whatever strategy the composer employs, a major purpose of rehearsal is deducing notational behaviour.

A common experience in the performance of real-time notation is that some amount of sight-reading is necessary. Rehearsal gives the performer time to practise sight-reading the system’s notational output. Even performers confident in their abilities can balk at the prospect of sight-reading live in front of an audience. Substantial time must therefore be dedicated to this task, perhaps more than is needed for “traditional” performance from fixed-notation scores, to aid both the behavioural analysis described previously and the development of quick music-reading skills. Another important consideration in rehearsal (and indeed in the eventual performance) is the strong likelihood that the performer’s interpretation will deviate, expectedly or not, to some degree from what is actually notated in the score. Some pieces, particularly those with graphic elements, may require a great deal of improvisation from performers. These performers must be prepared not only for unexpected notation appearing in the moment of performance, but also for making spontaneous interpretive decisions in a constantly changing notational or performance context. Other pieces might use traditional musical symbols that leave little or no room for improvisation.

Whatever the case, the performer should be prepared for situations where some deviation from the score and from the preparations made in rehearsal will be necessary, whether as a direct result of the notational design or of the pressures and human limits of fast music reading. Flutist Elizabeth McNutt refers to this “carefully practiced reaction to a potentially overwhelming set of concerns” as triage, invoking the strict hierarchy of degrees of urgency used to assign and schedule emergency medical care (McNutt 2017). In the heat of performance, “mistakes” will occur (again, this may be by design), and the musician must triage the various problems presented in order to assess which musical elements take priority. While this might result in a degree of creative improvisation, it could also involve listening instead of playing, skipping ahead several beats in the music, repeating several beats, sacrificing pitch or rhythmic accuracy, or other strategies. Familiarity with the composer’s style and the nature of the piece can guide the performer through this learning, performance and decision-making process. If the overall effect of the work is of prime importance, triage is clearly preferable to a defeated silence when the performer’s sight-reading skills falter. Conversely, in some cases formal connections may need to be sacrificed to meet the demands of local detail. These realities must be faced directly, ideally with the composer’s guidance, so that the performer knows which options are most appropriate when an “ideal” rendering is unavailable.

Performers should be encouraged to become as familiar as possible with the work’s on-screen graphical user interface (GUI). Every piece is different in this respect, and the performer must become acclimated to the GUI in order to glean every useful bit of information possible. The notational display might follow one of several design approaches. The notation might have some degree of on-screen movement: constant horizontal or vertical motion in the style of the so-called “scrolling score” is very common. Alternatively, the notation might slide periodically (every beat, bar, system or pre-determined span of time) so that the current time point remains on-screen. Or the notation might remain stationary, divided into virtual “pages” that turn or refresh periodically (similar to page turns in print scores). Every on-screen notation design should address how the performer can see what lies just ahead. The method by which a performer can read ahead is highly variable and may be related to the degree of on-screen motion. The time synchronization and location tracking system can also behave in a number of ways; the most common methods include a smooth scrolling tracker, a tempo-quantized tracker and a bouncing-ball-type tracker. Familiarizing oneself with these on-screen designs will undoubtedly increase the efficiency and accuracy of score reading.
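The three tracker types can be compared as functions of elapsed time. In this minimal Python sketch, the function names and the parabolic arc of the bouncing ball are assumptions; actual score players may use other curves or easing functions. A smooth tracker moves continuously, and a tempo-quantized tracker jumps once per beat. The bouncing ball lands exactly on each beat, so its descent offers the kind of anticipatory cue a performer reads in a conductor’s gesture.

```python
import math


def smooth_position(t, bpm):
    """Smooth scrolling tracker: continuous position in beats after t seconds."""
    return t * bpm / 60.0


def quantized_position(t, bpm):
    """Tempo-quantized tracker: jumps once per beat to the current beat."""
    return float(math.floor(smooth_position(t, bpm)))


def bouncing_ball(t, bpm, max_height=1.0):
    """Bouncing-ball tracker: horizontal position plus a (hypothetical) parabolic arc.

    Height is 0 exactly on each beat and peaks halfway between beats, so the
    descent toward the next landing previews when the next beat will arrive.
    """
    beats = smooth_position(t, bpm)
    phase = beats - math.floor(beats)  # 0.0 on the beat, approaching 1.0 just before the next
    height = 4.0 * max_height * phase * (1.0 - phase)
    return beats, height


if __name__ == "__main__":
    for t in (0.25, 0.5, 1.0):
        print(t, smooth_position(t, 60), quantized_position(t, 60), bouncing_ball(t, 60))
    # 0.25 0.25 0.0 (0.25, 0.75)
    # 0.5 0.5 0.0 (0.5, 1.0)
    # 1.0 1.0 1.0 (1.0, 0.0)
```

Only the bouncing ball carries information about where the performer is within the beat and how soon the next beat will land. The quantized tracker discards that information, and the smooth tracker conveys it only as steady motion.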

Finally, the performer must be able to read the notation comfortably from their desired playing position, adjusting the music size and screen distance accordingly. A number of solutions for positioning laptops at sitting or standing height are widely available. External display mounts make it possible to install a screen on an adjustable-height stand with a repositionable boom arm. In addition, as the power of mobile processors increases and software options proliferate, the display of real-time notation will increasingly move to conveniently mountable mobile devices and tablets. One example of mobile software written specifically for real-time notation is the Decibel ScorePlayer (Hope, Wyatt and Vickery 2015). Remaining practicalities, such as who or what triggers the start of the piece, how the piece ends and whether the performer interacts with the screen or software in any unusual ways, must also be addressed in rehearsal.

Performer-Composer Trust in Performance

A successful performance of a work using real-time notation hinges on the trust a performer places in the composer and in the computer-mediated notation. While there is no formula for building such confidence, the following factors can help create better conditions for both performer and composer.

Many factors that lead to an ideal real-time notation experience for the performer revolve around the difficulty of the score and the sufficiency of information about the piece provided by the composer. Ideally, the notation should strike a balance between several competing factors:

- The general difficulty of the mechanical instructions, such as pitches, rhythms, dynamics and articulations;

- The visual layout of the score, including the size of the notation font, the use of non-standard symbols and whether the performer reads from a part or a score;

- The clarity of the timekeeping mechanism and how tempo modulations are implemented;

- The amount of expressive interpretation desired by the composer;

- The amount of improvisation;

- The difficulty of ensemble coordination.

As the complexity of one parameter increases, the remaining parameters should correspondingly decrease in complexity so that the performer can devote maximum effort to the most difficult elements. The performer is best prepared when the composer provides clear and ample information about hardware and software requirements, the GUI, notational conventions, a formal behavioural outline, sample scores and, where available, documentation of past performances.

The composer may purposefully overwhelm the performer if failure is a conceptual component of the work. Failure in performance is a theme explored by many composers working in what some have termed the “post-digital” æsthetic (Cascone 2000). Any performer might understandably be alarmed at such a prospect; the optimal experience for a performer in that situation is one that does not make them appear foolish. In Zero Waste, Didkovsky creates a performance situation that requires failure in a way that is uncritical of the pianist. In that piece, true failure would be a performance by someone able to sight-read perfectly, which would undermine the fundamental concept of the work and result in an uninteresting musical experience.

Performer failure is just one of many unusual demands that real-time notation can place on musicians. Many real-time scores benefit from performers who develop trust in the composer and in the notation system. Real-time notation works are enhanced by performers who are willing to risk sight-reading on stage, who make mistakes and continue to engage, and who know that some performance errors are apparent to the audience while others are not. Above all, the successful musician will attempt to transcend the high demands of real-time notation and ultimately make music.

The Complex Score and the Future of Notation

A brief examination of the complex score and the associated musical movement known as New Complexity provides some historical and æsthetic perspective on the issues presented here. The complex score shares some striking similarities with real-time notation. Music by composers such as Iannis Xenakis, Brian Ferneyhough and Richard Barrett (among others) asks players to perform at the limit of what is possible. This is often accomplished by presenting the performer with conflicting instructions or goals, represented in a meticulously detailed, high-density fixed score. The result is a collision of actions whose sonic outcome varies from one performance to the next.[1. Elizabeth McNutt’s concept of performance triage, discussed above, is also clearly relevant to these performance contexts.]

In a similar way, real-time notation presents the player with a variety of somewhat conflicting goals:

- Relinquish the security of a fixed score while embracing new performance freedoms;

- Sight-read in front of an audience while performing musically;

- Expose the limits of ability while performing confidently.

Real-time notation also celebrates the beauty of ephemerality and multiplicity. Both the complex score and the real-time score present ensemble coordination issues, albeit perhaps for very different reasons, and both complicate rehearsal strategies. In some ways, the real-time score is a logical extension of the complex score, one in which Barrett’s concepts of notation as freedom and improvisation as a method of composition can be fully realized (Barrett 2013).

Conclusion

As with any emerging practice, the performance practice of real-time notation continues to expand and evolve rapidly, and the articulation of its performance praxis closely follows the creation and performance of new works. The preceding discussion aims to build upon the foundation already established by Winkler, Didkovsky, Freeman and many others by offering insight into the new freedoms available to the performer. This nascent field will also continue to rely on the ongoing work of many practitioners as research and development of real-time notation systems progresses. Just as the proliferation of fixed, paper notation was the product of incremental advances in printing technology over the last few centuries, real-time notation is a natural outcome of current technology. As technology becomes more powerful and accessible, the body of real-time notation works and their associated approaches will continue to grow and diversify. It is the author’s hope that this text provides a platform for further exploration by seasoned performers of such works.

Bibliography

Baird, Kevin. “Real-Time Generation of Music Notation Via Audience Interaction Using Python and GNU Lilypond.” NIME 2005. Proceedings of the 5th International Conference on New Interfaces for Musical Expression (Vancouver: University of British Columbia, 26–28 May 2005). http://www.nime.org/2005

Barrett, Richard. “Notation as Liberation.” Tempo 68/268 (April 2014), pp. 61–72.

Cascone, Kim. “‘Post-Digital’ Tendencies in Contemporary Computer Music.” Computer Music Journal 24/4 (Winter 2000) “Encounters with Electronica,” pp. 12–18.

chippewa, jef. “Practicalities of a Socio-Musical Utopia.” Originally published (in Swedish) in Nutida Musik 3–4 (2012–2013). Available on the author’s website at http://newmusicnotation.com/chippewa/texts/chippewa_spahlinger_2013-06.pdf [Last accessed 10 January 2017]

Didkovsky, Nick. “Recent Compositions and Performance Instruments Realized in Java Music Specification Language.” ICMC 2004. Proceedings of the 30th International Computer Music Conference (Miami FL, USA: University of Miami, 1–6 November 2004).

Essl, Karlheinz. Champ d’Action (1998), real-time composition environment for computer-controlled ensemble. Available on the composer’s website at http://www.essl.at/works/champ.html [Last accessed 28 October 2016]

Freeman, Jason. “Extreme Sight-Reading, Mediated Expression and Audience Participation: Real-time music notation in live performance.” Computer Music Journal 32/3 (Fall 2008) “Synthesis, Spatialization, Transcription, Transformation,” pp. 25–41.

_____. SGLC (2011), for laptop and instrumental ensemble. Available on the composer’s website at http://distributedmusic.gatech.edu/jason/music/sglc-2011-for-laptop-and [Last accessed 28 October 2016]

Haynes, Bruce. The End of Early Music. New York: Oxford University Press, 2007.

Hoadley, Richard. “Notating Algorithms.” SPEEC 2012. Symposium for Performance of Electronic and Experimental Composition: “Building an Instrument” (Oxford, UK: Faculty of Music, Oxford University, 6 January 2012). Available on the author’s website at http://rhoadley.net/presentations/SPEEC002s.pdf [Last accessed 28 October 2016]

Hope, Cat, Aaron Wyatt and Lindsay Vickery. “The Decibel ScorePlayer: New Developments and Improved Functionality.” ICMC 2015: “Looking Back, Looking Forward”. Proceedings of the 41st International Computer Music Conference (Denton TX, USA: University of North Texas, 25 September – 1 October 2015). http://icmc2015.unt.edu

Kostelanetz, Richard (Ed.). John Cage. New York: Praeger, 1970.

Lee, Sang Won and Jason Freeman. “Real-Time Music Notation in Mixed Laptop-Acoustic Ensembles.” Computer Music Journal 37/4 (Winter 2013) “Analyzing Jazz Chord Progressions,” pp. 24–36.

McClelland, Christopher and Michael Alcorn. “Exploring New Composer/Performer Interactions Using Real-Time Notation.” ICMC 2008: “Roots/Routes”. Proceedings of the 34th International Computer Music Conference (Belfast, Northern Ireland: SARC — Sonic Arts Research Centre, Queen’s University Belfast, 24–29 August 2008).

McNutt, Elizabeth. “A Postscript on Process.” Music Theory Online 11/1 (March 2005), “Special Issue on Performance and Analysis: Views from theory, musicology and performance.” Available at http://www.mtosmt.org/issues/mto.05.11.1/mto.05.11.1.mcnutt.html [Last accessed 28 October 2016]

_____. “Performing Electroacoustic Music: A Wider view of interactivity.” Organised Sound 8/3 (December 2003), pp. 297–304.

_____. Interview by the author. Denton TX. 10 January 2017.

Picking, Richard. “Reading Music from Screens vs Paper.” Behaviour and Information Technology 16/2 (1997), pp. 72–78.

Shafer, Seth. Law of Fives (2015), for viola, bass clarinet, marimba and computer-generated notation. http://sethshafer.com/law_of_fives.html [Last accessed 28 October 2016]

_____. “VizScore: An On-Screen Notation Delivery System for Live Performance.” ICMC 2015: “Looking Back, Looking Forward”. Proceedings of the 41st International Computer Music Conference (Denton TX, USA: University of North Texas, 25 September – 1 October 2015). http://icmc2015.unt.edu

Thom, Paul. “Authentic Performance Practice.” In The Routledge Companion to Philosophy and Music. Edited by Theodore Gracyk and Andrew Kania. New York: Routledge, 2011, pp. 91–100.

Todd, Stephen and William Latham. Evolutionary Art and Computers. Cambridge, MA: Academic Press, 1992.

Vickery, Lindsay. “The Limitations of Representing Sound and Notation on Screen.” Organised Sound 19/3 (December 2014) “Mediation: Notation and Communication in Electroacoustic Music Performance,” pp. 215–227.

Winkler, Gerhard. “The Realtime-Score: A missing link in computer music performance.” SMC 2004. Proceedings of the 1st Sound and Music Computing Conference (Paris, France: IRCAM, 20–22 October 2004). http://smcnetwork.org/resources/smc2004

_____. “The Real-Time-Score: Nucleus and fluid opus.” Contemporary Music Review 29/1 (2010) “Virtual Scores and Real-Time Playing,” pp. 89–100.

Winkler, Todd. Composing Interactive Music: Techniques and Ideas Using Max. Cambridge, MA: MIT Press, 2001.
