Doodling in the Posthuman Corpus

Wherefore scoring in the minds of machines?

Data mining and machine learning constitute the backdrop of everyday cognition, while mechanized performance bypasses scoring. Human-to-other-human notation becomes increasingly obsolescent. Poetic notation practices, such as composers’ working sketches and small communities’ idiosyncratic scoring dialects, create more resilient artistic corpuses which, if eventually mapped by artificial intelligences, will yield smarter, subtler machines.

However much I have been coerced to notate, trained to notate and rewarded for notating, I have resisted writing down precise musical “recipes” for recent works, because for me the most crucial aspects of contemporary music occur in performance, in sound, in the interaction between audience, spectacle, “auricle”. Exact notation does not serve the goals of an experimental tradition valuing language’s minute-by-minute reinvention. Lachenmann and others left no form-bearing dimension safe from displacement, until the map had swelled to cover the territory, to the point that it seems the gatekeepers of the academic-æsthetic complex care little for the map save as a nostalgic ode to tradition’s presence. “I went to school,” says a score on a judge’s desk, while “I make impressive work” intones the CD player, a now anachronistic throwback to the days when mechanical reproduction had less completely circumvented sheet music’s raison d’être.

Electronic music is the clef’s existential nemesis, and as acoustic music aims toward electronic documentation, first audio and then video (and then in the future, multi-channel video? 3D projection?) mediate encounters with experimental works where they count, gradually making redundant — dispensable — the modernist illusion of notational precision. Exacting scores parallel the discourses of road signs, advertising slogans and indeed sub-threshold messages in seeking to provoke and control behaviours. Sight-reading performers have practiced their responses to specific symbols in order to make those responses faster and less deliberative — to bring them below the threshold of consciousness, until notehead and fingering act as one, and the idiom is the instrument, not the human that plays it. But electronic computers needn’t practice, since they replicate as a matter of instinct. In the lingo of the contemporary Digital Audio Workstation, to make “mistakes” — to inject randomness into an otherwise quantized process — is to “humanize”. Loudspeakers and robots realize musical modernism’s fundamental aspirations by omitting ambiguity from language. In a MIDI file, there exists no question as to the meaning, in context, of a given dynamic marking. As in a PCM (Pulse Code Modulation) audio encoding, representation corresponds one-to-one with an ideal acoustic event.
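To make the point about MIDI concrete: once a dynamic marking has been converted to a velocity, it is a single integer from 0 to 127 inside a three-byte Note On message, and a PCM sample is likewise one fixed integer per instant. The following Python sketch assumes a hypothetical marking-to-velocity table (the numbers are illustrative only; DAWs and notation programs each choose their own) and 16-bit little-endian PCM.

```python
import struct

# Hypothetical marking-to-velocity table: illustrative values, not a standard.
DYNAMIC_TO_VELOCITY = {"pp": 33, "p": 49, "mp": 64, "mf": 80, "f": 96, "ff": 112}

def note_on(channel: int, note: int, dynamic: str) -> bytes:
    """Build a MIDI Note On message: status 0x90 | channel, then key number and velocity."""
    if not 0 <= channel <= 15 or not 0 <= note <= 127:
        raise ValueError("channel must be 0-15 and note 0-127")
    velocity = DYNAMIC_TO_VELOCITY[dynamic]  # the marking becomes one fixed byte
    return bytes([0x90 | channel, note, velocity])

def pcm16(sample: float) -> bytes:
    """Quantize one sample in [-1.0, 1.0] to a little-endian signed 16-bit PCM value."""
    clipped = max(-1.0, min(1.0, sample))
    return struct.pack("<h", round(clipped * 32767))

# "mf" on middle C (note 60), first channel (index 0): always the same three bytes.
print(note_on(0, 60, "mf").hex())  # 903c50
print(pcm16(0.5).hex())            # 0040
```

Because velocity admits only 128 values, every MIDI dynamic is one step on a fixed staircase: a digital version of “terraced” dynamics, with no room for contextual interpretation.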

Loudspeaker arrays and other music-playing automata (some of the latter will be discussed below) create an environment in which acoustic and electroacoustic composers seeking particular sonic effects — including those involving traditional instruments — can circumvent the hindrances of human performance. Indeed, composers today can, with the right equipment, renounce writing for players. 1[1. I must mention, before those who have worked with musical automata rightfully complain, that at present using mechatronic instruments involves its own set of hindrances. I write now under the assumption that these hindrances (calibration, repair, fragility, noisy cooling systems, unusual components, etc.) will continue to subside as technology in the field continues to progress in an operator-amenable direction. I think that when traditional scoring does occur, it does so increasingly in an ornamental capacity, and that, moreover, composers use these ornaments because of their colloquially recognizable function from the past. Eventually these, too, will become vague of etymology, as the most spectacular ensembles whose musical needs they are bound up with — symphony orchestras — will be seen to withdraw (willingly or not) first from the practice and performance of contemporary music, then from Classical music, and then from physical presence altogether, until their sentimental strains resound most loudly in the form of Vienna Strings soundtracks to Young Adult fiction movie adaptations that themselves circumvent the practice of reading. Meanwhile, alternative ensembles, whether due to lower overhead or otherwise flexible workflows and anatomies, will deviate more than ever from the leadership of the orchestral lineage. (See chippewa [2013] for a discussion of notation for that most controversial of ensembles, the conductorless orchestra.)
In the final section of the present text, I discuss some notational affordances for electronic music that occur when ensembles become extremely small.]

The academic-æsthetic complex loves to fetishize extremely detailed notation for its unicity, for its conspicuous manifestation of labour, and for its demonstration of wealth (behold all the time I have found!) and power (behold my ability to write down what other entities will do!). In its worst-humoured manifestations, academia clings to scores less as recipes for playing than as repositories of prestige. And maybe that’s a good thing, because the calligraphies of academic æsthesia, having staked their claims in an arcane bastion of virtuosity-for-its-own-sake, can resist, can interpenetrate, the onslaught of post-human functionality.

In the meantime, technologies built for and oriented towards sonic creation continue to develop, making new approaches to mediated creative practice in sound and performance increasingly accessible.

Humans Delegate Their Art Habits

Post-human: the workings of corporations, digital networks, runaway climatic phenomena and other complicated emergences that in this post-enlightened era operate with the focus and intention of an organism transcending its components.

Some of the components within a multi-agent system might be humans — or they might not (Wikipedia 2016). In musical artificial intelligence, research on multi-agent systems is booming. Among the questions more specifically related to live, musical performance in this complex and fascinating field are: How can software programmes “jam” together? How can they represent, to each other, the sounds they are about to make? Research in multi-agent systems parallels the proliferation found in fields of user-interface (UI) and user-experience (UX) design, in that it tries to find a place for human users amid a growing variety of other intelligences. 2[2. In the long term, I think UI/UX design will come across as a quixotic attempt to grant human “users” selective (and therefore manageable, for the human but also for the system) access to the black-box thinking of other things, presumably the “used”. Multi-agent systems research does not draw static distinctions between used and user — all are just “agents”, communicating for varied purposes that may include use.] Multi-agent systems can occur in recursive hierarchies; a system composed of multiple agents can itself function as a single agent in relation to other such systems. The data passed between agents in a given system consists in notes-to-self from the perspective of that system, but notes-to-other from the perspective of the sending and receiving agents. A string quartet is a multi-agent system (Kloreman 2016), a group of improvisers is a multi-agent system, a composer with performers is a multi-agent system to the extent that the former acknowledges influence from the latter. But presently the most virtuosic multi-agent systems (besides, perhaps, the brain itself — though not for long) are artificial neural networks, computer programmes consisting of large numbers of subroutines (called “neurons”) relating to each other in reconfigurable ways and themselves with reconfigurable behaviours. 
Artificial neural networks learn, and when they learn from the right data “corpuses”, they efficiently satisfy humans’ desires for æsthetic pleasure.
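The recursive structure described above, in which a system of agents can itself act as a single agent, and in which one message is a note-to-other for its sender but a note-to-self for the enclosing system, can be sketched in a few lines of Python. The class names (`Agent`, `System`) and the string messages are hypothetical illustrations, not drawn from any particular multi-agent framework.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    """A minimal agent that keeps every message it receives."""
    name: str
    inbox: list = field(default_factory=list)

    def receive(self, sender: str, content: str) -> None:
        self.inbox.append((sender, content))

@dataclass
class System(Agent):
    """A group of agents that can itself be treated as one agent (hypothetical sketch)."""
    members: list = field(default_factory=list)
    log: list = field(default_factory=list)  # the system's record of its own internal traffic

    def send(self, sender: Agent, recipient: Agent, content: str) -> None:
        # For sender and recipient, this is a note-to-other...
        recipient.receive(sender.name, content)
        # ...but for the enclosing system, it is a note-to-self.
        self.log.append((sender.name, recipient.name, content))

    def receive(self, sender: str, content: str) -> None:
        # A message from outside reaches the system as a whole, which relays it to every member.
        super().receive(sender, content)
        for member in self.members:
            member.receive(self.name, content)

# A string quartet is a system of four agents; a festival treats the quartet as one agent.
violin1, violin2, viola, cello = (Agent(n) for n in ("vn1", "vn2", "va", "vc"))
quartet = System("quartet", members=[violin1, violin2, viola, cello])
audience = Agent("audience")
festival = System("festival", members=[quartet, audience])

quartet.send(violin1, cello, "breathe in before bar 12")
festival.send(audience, quartet, "applause")
print(cello.inbox)
```

The same `send` call is simultaneously communication between two parties and an entry in a larger entity's memory, which is the duality the paragraph describes.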

At Simon Fraser University (SFU), where I studied from 2014 to 2016, Professor Arne Eigenfeldt’s small but gradually growing collection of music-playing automata includes a Disklavier, as well as two machines commissioned from the interdisciplinary engineering company KarmetiK: a marimba-playing machine called the Modulatron and a Notomotion (Figs. 1 and 2), whose eighteen arms can strike various configurations of drums and idiophones. KarmetiK’s catalogue also includes fifteen other instruments, among them a drum-playing Breakbot and a glockenspiel-playing Glockenbot (KarmetiK 2017). 3[3. Mechatronic ensembles occur increasingly widely. Particularly well-known instances include the Orchestrion built by Eric Singer for Pat Metheny (see Metheny 2009 and 2013) and the robotic orchestra built by Singer for the Lido nightclub in Paris (see Singer 2015).] Collectively and individually, automata “perform” better than humans at many tasks, chiefly those involving fast passagework, wide pitch ambits, complex cross-rhythms and fine — but not continuous — distinctions between loudness levels. 4[4. Digital technology marks a philosophical return to the “terraced”, or discontinuous, dynamics with which I was taught to interpret Baroque keyboard music.] Eigenfeldt co-directs SFU’s Metacreation Lab, whose participants — myself included — attempt, among other tasks, to programme art-making algorithms capable of autonomously generating music (Eigenfeldt, Bown and Carey 2015). The human becomes the meta to the machine’s creation, the nominal distinction between machine worker and human overseer becoming increasingly arbitrary, the relation one of gazing down at an agentic progeny from further and further distance until it becomes unclear who is crafted in whose image.
When the Metacreation Lab’s software meets Eigenfeldt’s hardware, as it did for a wide community at ISEA 2015’s Metacreation concert, the messages from composers’ MacBooks bypass human-read notation on the way to the performing mechanical menagerie. The only human-read notes involved are those used by the composer-programmers to represent (meta)musical ideas to themselves (Fig. 9, below), while training the robots to play the tunes.

Multi-agent systems that improvise in real time, such as the Metacreation Lab’s Musebot framework, need communication methods that allow agents to describe their activities selectively to others (Ibid.). The Musebot framework uses Open Sound Control (OSC) for its flexibility. Individual Musebots come from various human contributors, and each sends and receives messages in a set of OSC namespaces determined by its programmer. The most commonly used messages are:

- notepool. Followed by a series of pitch classes usually indicating the sending Bot’s present harmonic reservoir.

- density. Followed by a number from 0 to 1, indicating the relative amount of activity taking place in the present musical texture.
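To make the two message types concrete, here is a minimal sketch of how they could be encoded as OSC 1.0 packets by hand, using only Python’s standard library. The address patterns `/example/notepool` and `/example/density` are placeholders of my own; the Musebot framework’s actual namespaces are set by each bot’s programmer, and a working bot would more likely rely on an existing OSC library.

```python
import struct

def osc_string(s: str) -> bytes:
    """OSC-string: ASCII bytes plus a null terminator, zero-padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32 ('i') and float32 ('f') arguments, big-endian."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(type_tags) + payload

# Placeholder addresses, not the Musebot framework's actual namespace.
notepool = osc_message("/example/notepool", 0, 4, 7)  # pitch classes C, E, G
density = osc_message("/example/density", 0.25)       # a fairly sparse texture
print(len(notepool), density.hex())
```

The resulting bytes could be sent as a UDP datagram (for instance with `socket.sendto`). Note that nothing in the encoding itself says what `notepool` or `density` mean: that agreement lives entirely with the bots’ programmers.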

The Musebots’ lack of a standardized message vocabulary means they struggle to synchronize long-duration compositional structures; the “plan” message, for instance, gets little use.

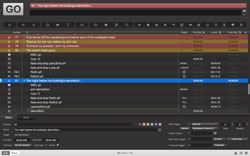

Indeed, the three-way trade-off among standardization and efficiency (at whose optimum one finds an impoverished vocabulary), flexibility and capacity for innovation (occurring in extreme form as chaos), and human readability (maximized in the absence of non-human intellects) comes into sharp focus when working with the Musebots. Functionally, generative sound lends itself to very simple human-oriented notation, at least as far as human observers encounter it, since machine-oriented data transfers and processing routines handle much musical interpretation out of sight. For my soundscape-accompanied spoken word piece Flatness (2016), I used a “high-level” text-based notation scheme modelled after a theatre workflow, implemented in QLab, software by the company Figure 53 (Fig. 3). 5[5. QLab falls into a burgeoning genre of performance control software programmes used to coordinate technical cues, including sound, lights and other software, in performance.] Triggering transitions from one quasi-generative soundscape to another according to lines of text hides the computer system’s moment-to-moment decision-making process, allowing it to improvise within efficiently human-controllable temporal bounds (Audio 1). 6[6. In the early days of free atonal music, Schoenberg famously turned to natural language (in this case Otto Erich Hartleben’s German translations of Albert Giraud’s French poems) to supply Pierrot Lunaire with the long-term structure his aprogrammatic music didn’t yield. Serialism, that most machine-friendly of artistic tools, eventually made up the difference, at least for the purpose of reducing composers’ cognitive load.]
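The division of labour described above, in which a human advances high-level cues while the machine improvises inside each cue’s bounds, can be sketched as follows. This is not QLab’s API or the actual Flatness setup: the `Cue` fields, the placeholder cue texts, the parameter ranges and the `next_events` helper are all hypothetical, and Python’s `random` module stands in for whatever generative process a soundscape might use.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Cue:
    """A hypothetical cue: a line of text that opens a bounded soundscape state."""
    text_line: str
    pitch_range: tuple       # (lowest, highest) MIDI note the generator may choose
    events_per_second: tuple # (min, max) event rate

# Placeholder cue texts, not lines from any actual piece.
CUES = [
    Cue("[spoken line A]", (36, 48), (0.5, 1.0)),
    Cue("[spoken line B]", (60, 84), (3.0, 6.0)),
]

def next_events(cue: Cue, seconds: float, rng: random.Random) -> list:
    """Improvise (onset time, pitch) events within the cue's bounds; the choices stay hidden."""
    rate = rng.uniform(*cue.events_per_second)
    count = int(rate * seconds)
    return [(round(rng.uniform(0, seconds), 2), rng.randint(*cue.pitch_range))
            for _ in range(count)]

rng = random.Random(2016)  # fixed seed so a run can be reproduced
for cue in CUES:  # in performance, a human would advance these on the spoken line
    events = sorted(next_events(cue, seconds=4.0, rng=rng))
    print(cue.text_line, "->", len(events), "events")
```

The human-facing notation is just the list of cue lines; everything inside `next_events` is the machine’s own business, which is the efficiency the paragraph attributes to this kind of scheme.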


Machine learning provides one way of harnessing computational efficiency without reducing creativity. Machine-learning algorithms determine relevant features of an existing corpus of data and then generate new expressions bearing similar properties. Taking my inspiration from Brian Ferneyhough’s Sieben Sterne (1970), I have, in my own compositions, explored a process of learning by human performers that is modelled on machine learning (Fig. 4). My goal was to find an efficient way of extending textural passages without involving literal repeats (Audio 2).
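The general idea of learning features from a corpus and then generating new material with similar properties can be illustrated with one of the simplest machine-learning models, a first-order Markov chain over pitches. This is a stand-in chosen for brevity, not a model of the process I used with performers, nor of Ferneyhough’s; the pitch sequence is an arbitrary example. The generator rejects any output that copies a run of the source verbatim, mirroring the goal of extension without literal repeats.

```python
import random
from collections import defaultdict

def learn_transitions(sequence):
    """Record which element follows which: the 'features' this toy model learns."""
    table = defaultdict(list)
    for current, following in zip(sequence, sequence[1:]):
        table[current].append(following)
    return table

def generate(table, start, length, rng):
    """Walk the learned transitions; repeated entries make frequent moves more likely."""
    out = [start]
    while len(out) < length:
        options = table.get(out[-1])
        if not options:  # dead end: jump to any known state
            options = list(table)
        out.append(rng.choice(options))
    return out

def contains_literal_repeat(candidate, source, span=4):
    """True if any run of `span` consecutive elements is copied verbatim from the source."""
    source_runs = {tuple(source[i:i + span]) for i in range(len(source) - span + 1)}
    return any(tuple(candidate[i:i + span]) in source_runs
               for i in range(len(candidate) - span + 1))

def extend_without_repeats(source, length, rng, attempts=1000):
    """Sample until an output avoids literal repeats of the source; None if none is found."""
    table = learn_transitions(source)
    for _ in range(attempts):
        candidate = generate(table, source[-1], length, rng)
        if not contains_literal_repeat(candidate, source):
            return candidate
    return None

passage = [60, 62, 63, 62, 60, 67, 63, 65, 62, 60, 63, 67]  # arbitrary example pitches
print(extend_without_repeats(passage, 12, random.Random(7)))
```

Every interval in the output already occurs somewhere in the source, yet no four-note run is copied, which is one crude way of being “similar” without repeating.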

Many composers build improvisation into their work by means of novel notation schemes. In the UK, Alex Harker’s techniques include indicating composed versions of a given rhythm and then providing license to improvise on the notated figures. Pieces like Harker’s The Kinetics of Resonance (2007), for solo drumset, avoid over-prescribing by providing template passages and then asking performers to (simply?) do their thing. Harker’s most detailed notes resonate most clearly only in the imaginations of one reading the score — an ideal version of the piece, for eventual discarding by musicians in the heat of performance.

Diaries Hack Perception

Computer networks optimize communication for the goals of the computers involved, gradually removing human consultation from all but the input. Although the niche of music writing on the assembly line between composition and performance shrinks in fields of artistic practice where computers and machines are integrated into the process of creation and production, I believe its continued use in such practices emerges from its traditional role in the deliberative process of composition itself.

I have already brought up two categories of notes: notes-to-other and notes-to-self. These categories exist in a symbiotic relationship, in that a note-to-other from a specific perspective is a note-to-self from a general perspective. Douglas Hofstadter’s Gödel, Escher, Bach (first published 1979) illustrates the notion in a dialogue featuring an anteater and an anthill. The anteater explains that, while it preys on individual ants, it does a service to the anthill. The ants’ communications to each other about the anteater’s imposing presence collectively constitute a behaviour in the anthill’s emergent consciousness. Hofstadter’s dialogue puts a quaint spin on the eminently important ideas of distribution and emergence, topics which appear in discourse under various terms: emergent consciousness, distributed consciousness, collective consciousness, distributed communication, distributed creativity, distributed cognition. I consider note-to-self and note-to-other as a duality akin to that of wave and particle. What we normally refer to as individuals appear in other contexts as dividuals. Therefore, I consider all notes, whether directed to other or to self, as manipulable instruments of societal cognition — analogues to synapses, the foundations of thought, perception, ethics. All notes are notes to some self.

To flesh my taxonomy of notes out into a handy grid I must also reference the binary of prescription and description, wherein description tells one what happened and prescription tells one what to do. From the perspective of an individual music theorist, traditional examples of descriptive note-to-other include: Roman numeral analyses; Schenkerian graphs; Formenlehre mark-ups indicating periods, sentences, sequences and so forth; spectrograms; set class annotations; selective transcriptions; Klumpenhouwer network diagrams; and other data visualizations tailored to highlight different features of some music (in addition, of course, to academic essays). From the perspective of an individual composer, scores and parts (and programme notes and occasional manifestoes) are the stereotypical examples of prescriptive notes-to-other, with figured bass, unrealized canons, jazz charts and other shorthands constituting an intermediary type of notation that can target self or other depending on the production sequence in which one encounters them. For example: Is the orchestrator a different human than the composer? Must those singing the round all read off the same staff? And so forth. Traditional genres of composer prescription-to-self include particell scores (with or without orchestration indications), serial matrices and sometimes waveform-like blobs, indicating spectromorphological shapes and designs. These provide mnemonic help and, both consequently and additionally, alter their corresponding composition’s development as composers’ hands and eyes think together with their imaginations.

Transmediation is the process of reading description as prescription. I hear a bird call and write it down. I find a description of a Classical Greek flute and try to make music in a similar tuning. I import an audio file into my DAW and click “audio-to-MIDI”. In the academic-æsthetic complex, which places a premium on virtuosic scoring, the degree of apparent correspondence between musical score and musical event (or its documentation) provides one measure of composers’ and performers’ control over their output. Since, in the eyes of governing bodies, we as musicians compete for favourable evaluation of our deliberate action, the act of corresponding score with (documented) performance takes on imperative force — whether score dictates performance or composer fixes score post hoc, scores prescribe, project and describe all while crossing desks as cultural currency. In my mind, careerism constitutes one motivation for prioritizing score-based music. Another more honourable motivation is the influence of notational arcana on creativity.
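The DAW’s “audio-to-MIDI” command is itself a small act of transmediation: a description (a recorded waveform) is read back as a prescription (note numbers). Commercial implementations are proprietary and far more elaborate; the Python sketch below assumes a single clean monophonic tone, estimates its pitch with a naive autocorrelation search, and then applies the standard equal-tempered conversion n = 69 + 12 log2(f / 440).

```python
import math

def autocorrelation_pitch(samples, sample_rate, f_min=50.0, f_max=2000.0):
    """Estimate the fundamental as the lag with the highest raw (unnormalized) autocorrelation."""
    min_lag = int(sample_rate / f_max)
    max_lag = int(sample_rate / f_min)
    best_lag, best_score = None, float("-inf")
    for lag in range(max(1, min_lag), min(max_lag, len(samples) - 1) + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

def frequency_to_midi(freq_hz):
    """Equal-tempered conversion with A4 = 440 Hz = MIDI note 69, rounded to the nearest key."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))

sr = 8000
tone = [math.sin(2 * math.pi * 440.0 * t / sr) for t in range(2048)]  # a synthetic A4
f0 = autocorrelation_pitch(tone, sr)
print(round(f0, 1), frequency_to_midi(f0))  # 444.4 69
```

The integer lag limits precision (the 440 Hz tone is heard as roughly 444 Hz), but rounding to the nearest key absorbs the error: the conversion decides, without ambiguity, which prescription a description becomes.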

In her 2004 opus The Creative Mind, Margaret Boden distinguishes between psychological creativity and historical creativity, where a psychologically creative event yields a phenomenon new to an individual and a historically creative event yields a discovery new to a collective. In Boden’s mind, that collective is all of humanity, ever, at least to the extent that one knows. She makes a two-by-three terminological grid by drawing distinctions on another dimension, between: exploratory creativity, which consists in creating new possibilities within a conceptual space; combinational creativity, which consists in combining aspects from multiple conceptual spaces to create a new hybrid; and transformational creativity, which consists in extending a conceptual space beyond prior boundaries.

Transformational creativity, the signature product of so-hailed “geniuses”, doesn’t materialize out of nothing — hence the adage that the tip of the pyramid must rest on a wide base. Likewise, historical creativity begins as psychological (represented to self before meeting a wider community) and combinational (represented to self as a convergence yielding a surprising sum) creativity. Now, creative artificial multi-agent systems can handle all of the above. We can discuss artificial creativities as forming part of our creative milieu, or we can discuss ourselves as forming part of their creative milieu. We can’t tell the most complex of machines what to think about. We can only influence how they think, what they say to themselves, what notions they mull over and combine.


Changes in notational attitudes reflect changes in compositional attitudes (the reverse is certainly also true, albeit perhaps to a much lesser degree). Where economic conditions support it, avant-gardism incentivizes the creation of new lexicons, at its extreme granulating language to the point that its conventions no longer work. In dissolving communicative conventions, experimentalism’s products resemble (my experience of) quotidian thought, which abounds in frivolities. Unwarranted attentions and spontaneous ideations often do not serve explicit goals. Instead, they can form future goals not necessarily subservient to the present ones. Given the liberality with which we now distribute creative tasks to artificial computers, will machines come to appreciate our prized, frivolous thought processes, or will they ultimately concern themselves exclusively with optimizations? Most research into artificial intelligence targets problems with quantitatively evaluable solutions. 7[7. Such solutions in commercial design are very often first and foremost profit-oriented. For example, the Apple design ethic eliminates whatever “frivolous” features it can, and then makes a killing.] On the other hand, if we resist taking an economy-of-means approach and instead produce a complex corpus replete with fanciful music notation, we can provide more challenging informational fuel for machines whose insights may exceed their assignments. Consider the kind of artificial intelligence that a computer would need in order to compose scores as detailed as those of Barry Guy. 8[8. Several of his whimsically notated scores are presented and discussed in his 2012 article “Graphic Scores,” published in Point of Departure 38.] Moreover, the liminal status of overnotated music positions it, more than any other art form, as a complex holdout, a polyglot, ideally suited for use as an intellectual toy, and therefore as an intellectual stimulant.

At the 2013 Musical Metacreation workshop, Andie Sigler brought up the notion of Open AI — artificial intelligence that humans stay one step ahead of, whose inner workings (some) humans can always comprehend (Sigler 2013). The state of the metacreative arts had already moved on, however, with complex neural networks capable of simulating subtle expressions (handwriting, for example) that their human programmers had not otherwise studied (Graves 2014). The symbiotic practices of data mining and machine learning had come into style, with programmers channelling the affordances of black-box networks to create new instances of any art form for which they could find a large enough set of specimens. Data mining knows no limits save those of the data min(e)d. The problem, then, is that machine-learning algorithms trained on boring corpuses will output boring products. The most popular datasets presently available for music AI training are repositories of MIDI files (Raffel 2016) and of audio features and metadata (Bertin-Mahieux et al. 2011). Poetic graphic scores have not registered as commercially prominent because they appeal only to the relatively rare demographic that can read them, as opposed to the wider demographic that consumes music as audio. But since we can’t have Open AI (any more than we can have Open HI, Human Intelligence) and we want computational environments with a grasp of human æsthetic experience beyond reduction to monetization, we must have chaotic corpuses, fed on the kind of absurd, prettyish-for-no-obvious-reason expressive saturation that panders to the inefficiencies of a human audience lauding transformational psychological novelty. This matters because standardization, while facilitating communication for known goals, censors communication for the kind of accidental purpose that still lingers in human activities despite not having been captured in current veins of dataset representation. Prestigious intricacy can submit to systematic standards, or push back.

In the next neighbourhood over from Simon Fraser University’s downtown art school, Maren Lisac and Alanna Ho’s Vancouver Performance Scorebook Project (Lisac and Ho 2016) collects unusual new visual expressions of music, emerging from the same community that birthed the now-defunct Dada- and Fluxus-influenced performance art band Dissonant Disco. Dissonant Disco’s love of absurdist humour challenged contemporary Classical music conventions. For example, the group sang in a kiddie pool of water for a work called Copious Flow, and hissed a wordless requiem for the CBC Radio Orchestra in Vancouver’s CBC studios. The Scorebook project, while less exuberantly idiosyncratic than Dissonant Disco, nevertheless prizes individuality in parameters outside of conventional production lines (Ibid.).

My own contribution to the Scorebook implements algorithmic composition for live performers (Fig. 5). Memory Management Algorithm, like the Scorebook Project generally, as well as historical cases like Satie’s ironic text notes to players of his piano music, makes the most sense when appreciated from within a small community. The Scorebook Project invites contributors to express relatively private whims. These scores are not notes-to-self from my perspective, although they may be considered as such from the perspective of the national scene and its attendant evaluating organizations. Comparatively “outsider” art is — to the (“outsider”) audience it targets — insider art, even if the audience consists of only one person. It follows that contracting spheres of increasingly private absurdism should culminate in an epitome: the diary of the individual composer (or perhaps even the “automatic writing” of a specific part of the brain of an individual composer), which contains elements susceptible to learning by others, alongside expressions which may remain mysterious forever — perhaps even mysterious to the composer who wrote them down, who, having moved on to other thoughts, loses them to the past moment and cannot remember what they were originally intended to signify. The inscrutable privacy of an instant past constitutes the extreme opposite of communicative success, and the most extreme form of resistance to standardized writing and thinking. 9[9. I’m reminded of composer and calligrapher Martin Reisle’s account (during a 2016 interview I conducted with him) of ghosts as records or documents detected by the subconscious but not by the conscious.]

Robots Should Scrawl Notes to Themselves

As part of my project to decamp from efficient, conventional, functional notation for humans, I have increasingly centred my artistic practice within the realm of noting-to-self. Notes-to-self are not subject to the same degree of exposure to the audience as scores or performances. The examples of notes-to-self that follow are taken from my own work and the work of others; this small collection should not be considered to represent, in any complete form, the vast array of personal notation practices used by composers. A more methodical survey of semi-private scoring practices will have to await future work!

As far as concerns my personal trajectory through idiosyncratic notation, I began by leaving performers to write their own cues to the sounds of tape parts. In trumpeter Duncan Campbell’s performance score for A Prophecy (2013), I scrawled onomatopoeias — “boom!”, “tweet!”, etc. (Fig. 6) — resembling Catherine Berberian’s indications in Stripsody (1966), except here they are describing the output of loudspeakers rather than prescribing output for the performer. Duncan and I added additional onomatopoeias as the rehearsal process progressed. During the performance (Audio 3), he supplemented the paper score’s rudimentary time cues by watching an iMac screen with Logic Pro scrolling through the tape part’s waveform. For 2015’s Kyrie, I didn’t bother writing onomatopoetic cues early in the process, but Dorothea Hayley annotated her score in a similar manner, writing her own preferred events from a tape part featuring many referential sound objects onto a score whose “tape notation” featured only a few pitch and text indications (Fig. 7).

I also avail myself of commercial music software’s seductive efficiency, sketching music for orchestral and other traditional forces in Ableton Live’s “piano roll” MIDI editor (Fig. 8) and exploiting the interface’s intuitive display of chromatic relationships, before exporting my acoustic compositions to Finale for more detailed notating. I am not alone in using Live as an aid for instrumental composition. For Lépidoptères (Lepidoptera), her collaboration with Monty Adkins, Terri Hron composed and read from automation curves in Ableton’s Arrange view as cues to the behaviour of the piece’s electronic component. Hron thus used the interface of a digital composition tool to synchronize with an electronic soundscape, making double use of a score, both as a method of controlling computerized sound and as a human-readable performance score.
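Reading an automation curve as a cue amounts to reading a breakpoint envelope. The sketch below assumes a simple list of (time, value) breakpoints with linear interpolation between them (Live also offers curved segments, which are ignored here) and renders the same data twice: as numbers a sound process could consume, and as a coarse text plot a performer could glance at. The breakpoint values are invented for illustration and are not taken from Lépidoptères.

```python
from bisect import bisect_right

# Hypothetical breakpoints: (time in seconds, parameter value from 0.0 to 1.0).
BREAKPOINTS = [(0.0, 0.0), (8.0, 0.8), (12.0, 0.8), (20.0, 0.1)]

def value_at(points, t):
    """Linearly interpolate the envelope at time t, holding the end values outside it."""
    times = [time for time, _ in points]
    if t <= times[0]:
        return points[0][1]
    if t >= times[-1]:
        return points[-1][1]
    i = bisect_right(times, t)  # points[i - 1] is at or before t, points[i] after it
    (t0, v0), (t1, v1) = points[i - 1], points[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def text_plot(points, start, end, step, width=20):
    """Render the same envelope as rows of bars, one per time step, for a human reader."""
    lines = []
    t = start
    while t <= end:
        level = value_at(points, t)
        lines.append(f"{t:5.1f}s |{'#' * round(level * width)}")
        t += step
    return "\n".join(lines)

print(value_at(BREAKPOINTS, 4.0))              # machine-readable: 0.4
print(text_plot(BREAKPOINTS, 0.0, 20.0, 4.0))  # human-readable cue sheet
```

One data structure serves both readers, which is the double use of a score described above.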

What onomatopoeias seek to resemble in utterance, and DAWs seek to parameterize, spectromorphological drawings mimic in visual figures. The scores for James O’Callaghan’s Reasons (2012) and Trevor Wishart’s Vox cycle (1980) go beyond the expediencies of performance synchronization to provide windows into the composers’ visual-metaphoric milieus. I surmise that both scores’ drawings of what Manuella Blackburn labels “the sound-shapes of spectromorphology” (Blackburn 2011) bridge the divide between note-to-self and note-to-other, and that these scores stem from a tendency toward integrating the worlds of composers and instrumentalists. Performance, with such scores, becomes a meeting of the minds, across a range not possible where precisely constrained communication channels prevail. Composition, as practiced by those who write it down, is a distributive art form with a tradition of poetic insight grounded in the ambiguities of visual representation itself, rather than the pure production of sound.

That notation figures in thought as well as performance makes it less surprising when it occurs in the production of acousmatic music. Sketches host wild notational poetics. Pierre-Alexandre Tremblay at the University of Huddersfield advocates keeping spare paper and a writing implement handy while sitting at a computer composing audio, to doodle formal ideas in any free-associated form that comes to mind (Tremblay 2016). His own doodles look like a convergence of Blackburn’s sound-shapes and the mountain-shaped plot diagrams often used to explain the tensions of introduction, crisis, climax and dénouement to high school creative-writing students. 10[10. Analogously, although in reference to interpretation rather than composition, Ottawa-based piano instructor Jenny Regehr once handed me a pipe cleaner twisted into a helix as a metaphor for a particular phrase of music, stating that students occasionally find such tactile-visual stimuli helpful when conceptualizing the pieces they practice.] My own notes-to-self mix icons with contours, using comic strip storyboard-inspired “panes” which I link together with arrows (Fig. 9).

In the cases of solitary composition and close performer-composer collaboration, I opine that the mechanism of connection between extramusical object and artistic performance — whether encapsulated by a certain parameter or dispersed throughout some intangible affect — should be grown more than trimmed, and loosed more than refined, because the prop is supposed to help in a way only it — specific to a medium all its own — can. Artists seek and conjure inter-medium connections as a private rearrangement whose experiments yield new phenomena. Many such inter-medium connections have thus far evaded colonization by the post-human, which has focussed on the commercially optimizable, widely public versions of notated discourse. Machine vision has only just become human-competitive when it comes to recognizing quotidian objects (as witnessed by the profusion of hybrid murals brought about by Google DeepDream [Mordvintsev 2015]). It does not yet possess a corpus of trajectory sketches in the manner of, for example, Tremblay or Blackburn. And indeed, I estimate that machine reading will take much longer to process the purposely near-inscrutable transmissions that relatively unrecognized or self-consciously avant-garde composers have fashioned to suggest the bizarre (or bizarrely suggest the ordinary) in the thrall of an æsthetic that abhors the obvious. When the machines inevitably do learn our metaphors, I want them to understand those metaphors to a high degree of complexity and variation. The avant-garde flirtation with chaos — redefining convention so constantly that language skims randomness — insists that when computers read us, they should read us with the intricacy, subtlety and rhetorical proficiency wrought of an overwhelmingly multilingual upbringing. We can’t stop the post-human, but we can ensure that the corpus it learns from is rich, in the hope that the perceptions it fashions will be likewise.

Bibliography

Adkins, Monty. “Lepidoptera.” Lepidoptera. Performed by Terri Hron. Montreal: empreintes DIGITALes IMED 16136, 2016.

Berberian, Catherine. Stripsody (1966). Performed by Teresa García Villuendas. YouTube video “Stripsody” (3:33) posted by “Teresa García” on 31 January 2013. http://youtu.be/ljlncO4c89g [Last accessed 29 October 2016]

Bertin-Mahieux, Thierry, Daniel P.W. Ellis, Brian Whitman and Paul Lamere. “The Million Song Dataset.” ISMIR 2011. Proceedings of the 12th International Society for Music Information Retrieval Conference (Miami FL, USA: University of Miami, October 24–28, 2011).

Blackburn, Manuella. “The Visual Sound-Shapes of Spectromorphology.” Organised Sound 16/1 (April 2011) “Denis Smalley: His influence on the theory and practice of electroacoustic music,” pp. 5–13.

Boden, Margaret. The Creative Mind: Myths and mechanisms. 2nd edition. Hove, UK: Psychology Press, 2004.

Dissonant Disco. Copious Flow (2013). Performed by Dissonant Disco at Western Front in Vancouver on 16 November 2013. Vimeo video “Event Score: Dissonant Disco — [performing Copious Flow] (2013)” (3:11) posted by “Western Front” on 29 January 2014. http://vimeo.com/85391979 [Last accessed 30 October 2016]

_____. Requiem for the CBC Radio Orchestra (2013). Performed by Dissonant Disco at CBC Vancouver. Vimeo video “Requiem for CBC Radio Orchestra (Dissonant Disco)” (14:13) posted by “Remy Siu” on 21 May 2014. http://vimeo.com/95949446 [Last accessed 30 October 2016]

chippewa, jef. “Practicalities of a Socio-Musical Utopia: Degrees of ‘freedom’ in mathias spahlinger’s ‘doppelt bejaht’ (studies for orchestra without conductor).” Nutida Musik 3–4 / 2012–13 (June 2013). Trans. into Swedish by Andreas Engström.

Eigenfeldt, Arne, Oliver Bown and Benjamin Carey. “Collaborative Composition with Creative Systems: Reflections on the first Musebot ensemble.” ICCC 2015. Proceedings of the 6th International Conference on Computational Creativity (Park City UT, USA: 29 June – 2 July 2015). http://computationalcreativity.net/iccc2015

Eigenfeldt, Arne, Oliver Bown, Philippe Pasquier and Aengus Martin. “Towards a Taxonomy of Musical Metacreation: Reflections on the first musical metacreation weekend.” AIIDE’12. Proceedings of the 8th Conference on Artificial Intelligence and Interactive Digital Entertainment (Palo Alto CA, USA: Stanford University, 8–12 October 2012). http://aiide12.gatech.edu

Ferneyhough, Brian. Sieben Sterne (1970). Programme note. Edition Peters. http://www.editionpeters.com/resources/0001/stock/pdf/sieben_sterne.pdf [Last accessed 30 October 2016]

Graves, Alex. “Generating Sequences with Recurrent Neural Networks.” Preprint submission to Neural and Evolutionary Computing. 5 June 2014 (v.5). http://arxiv.org/abs/1308.0850 [Last accessed 30 October 2016]

Guy, Barry. “Graphic Scores.” Point of Departure 38 (March 2012). http://www.pointofdeparture.org/PoD38/PoD38Guy.html [Last accessed 29 October 2016]

Harker, Alexander. The Kinetics of Resonance (2007). Audio file “TKOR” (16:37). http://www.alexanderjharker.co.uk/music/TKOR.mp3 [Last accessed 29 October 2016]

Hofstadter, Douglas R. and Daniel C. Dennett. “Chapter 11: Prelude… Ant Fugue” from The Mind’s I. 2007. http://themindi.blogspot.ca/2007/02/chapter-11-prelude-ant-fugue.html [Last accessed 30 October 2016]

Horrigan, Matthew. A Prophecy (2013), for trumpet and tape. http://soundcloud.com/matt_horrigan/a-prophecy [Last accessed 30 October 2016]

_____. Kyrie (2015), for voice and tape. Performed by Dorothea Hayley. http://soundcloud.com/matt_horrigan/kyrie [Last accessed 30 October 2016]

_____. Flatness (2016). Digital album released by “Matthew Reid Horrigan” on 14 October 2016. http://matthewreidhorrigan.bandcamp.com/releases [Last accessed 30 October 2016]

_____. Return Main() (2016). Performed by Jacob Armstrong, Julia Lin, Sarah Albu and Sara Constant at 918 Bathurst, Toronto on 24 June 2016.

KarmetiK. “Musical Robots.” http://karmetik.com/labs/robots [Last accessed 18 January 2017]

Klorman, Edward. “Multiple Agency in Mozart’s Music.” Unpublished doctoral thesis, City University of New York, 2016.

Lisac, Maren and Alanna Ho. “Vancouver Performance Scorebook Project: Call For Scores.” Posted 29 March 2016. http://vanscorebookproject.wordpress.com/2016/03/29/vancouver-performance-scorebook-project-call-for-scores [Last accessed 30 October 2016]

Metheny, Pat. “About Orchestrion.” November 2009. http://www.patmetheny.com/orchestrioninfo [Last accessed 18 January 2017]

_____. Excerpt from the Orchestrion Suite (2010). Performed by Pat Metheny at the former St. Elias Church in Greenpoint, Brooklyn in November 2010. YouTube video “Orchestrion — An Excerpt from the Orchestrion Project” (5:33) posted by “Pat Metheny” on 14 August 2013. http://youtu.be/evHVh4bqaOQ [Last accessed 18 January 2017]

Mordvintsev, Alexander, Christopher Olah and Mike Tyka. “DeepDream — A Code example for visualizing Neural Networks.” Google Research Blog. Posted 1 July 2015. http://web.archive.org/web/20150708233542/http://googleresearch.blogspot.co.uk/2015/07/deepdream-code-example-for-visualizing.html [Last accessed 30 October 2016]

O’Callaghan, James. Reasons (2012), for amplified books and electronics. Performed by Ryan Packard during “The Library in Resonance” in the Marvin Duchow Music Library, McGill University in Montréal on 27 April 2012. YouTube video “James O’Callaghan — Reasons — for amplified books and electronics — Packard @ Duchow” (7:45) posted by “James O’Callaghan” on 30 April 2012. http://youtu.be/aelXA6JoQj8 [Last accessed 29 October 2016]

Raffel, Colin. “Learning-Based Methods for Comparing Sequences, with Applications to Audio-to-MIDI Alignment and Matching.” Unpublished doctoral thesis, Columbia University, 2016. http://www.colinraffel.com/projects/lmd/#license [Last accessed 30 October 2016]

Reisle, Martin. Personal interview. Broadcast on “re:composition”, Vancouver Co-op Radio on 27 September 2016. http://www.coopradio.org/content/recomposition-49 [Last accessed 30 October 2016]

Sigler, Andie. “Musical Meta-Creation: The discourse of process.” MUME 2013. Proceedings of the 2nd International Workshop on Musical Metacreation (Boston MA, USA: Northeastern University, 14–15 October 2013). http://musicalmetacreation.org/proceedings/proceedings-2

Singer, Eric. “Leading Robotic Musical Instrument Creator Eric Singer Creates Robotic Orchestra for Paris’ Lido Night Club.” Press release, 27 May 2015. http://www.singerbots.com/press [Last accessed 18 January 2017]

Tremblay, Pierre-Alexandre. Personal interview. 10 March 2016.

Wishart, Trevor. Vox I (1980). Musical score posted by Electric Phoenix. http://www.electricphoenix.darylrunswick.net/gallery/pictures/page/5/count/20 [Last accessed 29 October 2016]

_____. “The Vox Cycle.” The Vox Cycle. Electric Phoenix. UK: Virgin Classics VC 7 91108-2, 1990.

Wikipedia contributors. “Multi-agent system.” Wikipedia: The Free Encyclopedia. Wikimedia Foundation Inc. https://en.wikipedia.org/wiki/Multi-agent_system [Last accessed 29 October 2016]