Designing and Playing the Strophonion

Extending vocal art performance using a custom digital musical instrument

The pathways of designing and performing a digital musical instrument (DMI) in the context of vocal art performance are elucidated in two parts. The first part discusses the musico-functional approach and the mapping of keys and sensors, the legacy of the predecessor instrument (The Hands), pitfalls along the way and possible approaches to notation for performance on the instrument. The second part addresses the practice of integrating the DMI in vocal art performance, æsthetics of vocal plurality, concepts of embodying and disembodying the voice, as well as choreographic aspects, each of which expands the field of vocal art performance.

Have a vision, be fearless, experiment, play!

—Michel Waisvisz 1[1. Cited in Dykstra-Erickson and Arnowitz 2005, 63.]

Instrument Design

Defining the Strophonion

The Strophonion is a gesture-controlled, wireless digital musical instrument (DMI). It belongs to the family of live electronic instruments that use various types of sensors to measure the movements of the hands and arms; these movements, captured as momentary and continuous data, are translated via MIDI messages into sonic and musical parameters. The sensors are primarily built into the cases of two hand controllers of different shapes. In addition to 13 push keys (12 for the fingers, 1 for the thumb), the right-hand controller features a three-axis accelerometer and a pressure sensor, whereas the left-hand controller offers 8 push keys, a miniature joystick operated by the thumb on two axes, and the receiver of an ultrasonic distance sensor whose complementary part, the transmitter, is mounted on a hip belt (Fig. 1).

Through the physical movement of his body, in combination with the fingers pressing and releasing the round-shaped keys on both hand controllers, the musician operates the instrument’s functionality and thereby steers the sonic and musical process. Just as with any traditional, acoustic instrument, the Strophonion allows the user to dynamically control pitch, rhythm and timbre. Additionally, it can be used as a sampling instrument in live performance, to record and play back the recorded material treated with various manipulations in real time. Taking into account all these possibilities for the control of sound, the Strophonion is a highly dynamic and versatile hybrid instrument.

The Idea Behind the Name

The name “Strophonion” is composed of three syllables derived from Greek to represent the three main components that define how I perform using the instrument: strophé + phoné + ión.

The first syllable of the name, from strophé, designates a turn, bend or twist 2[2. In ancient Greek theatre strophé usually indicates the turn of the chorus onstage.], which hints at the vocabulary of movement the right hand articulates: bending, rotating, turning, spinning and twisting. Parameters such as pitch, (attack) volume, frequency filter, sample length, sample position and many others are controlled by the right hand using the three-axis accelerometer. The Strophonion, however, uses only the x- and y-axes, as the z-axis is a combination of the x- and y-axes, which makes it very difficult to control in the kind of live performance for which I conceived the instrument.

The second, middle part of the name comes from the Greek phoné, meaning voice and/or sound, and refers to the fact that I sample my own voice while performing live with the instrument. I am first and foremost a vocal performer and usually play the Strophonion in combination with my voice, as opposed to using it exclusively as a solo instrument. 3[3. However, in order to learn to play the instrument properly and also for demonstration purposes, I composed a few pieces for Strophonion solo, such as the 2015 work, Eine Raumvermessung (Video 1, below).]

The ending syllable of the neologism “Strophonion” is ión, the meaning of which is twofold. The literal translation is going or wandering and, in the fields of chemistry and physics, it designates an atom or molecule that carries an electrical charge. More importantly, however, it calls attention to how the left-hand controller is played, in a manner that recalls the left hand of the musician opening and closing the bellows of an accordion. The distance between the performer’s hip and the Strophonion’s left-hand controller is measured by the ultrasonic distance sensor, which combines a transmitter on the hip with a receiver on the left-hand controller (Fig. 2). The two parts must be connected by a cable at all times, but this is the only cable used in the Strophonion’s controller system when it is used in its “wireless” mode. 4[4. Here, “wireless” refers to the fact that the performer and instrument are not “wired” to the computer system.] In contrast to the rotating and turning nature of the right-hand movements, the use of ultrasound to measure left-hand movements (i.e. the distance from the transmitter) allows the musician to control various parameters, such as overall volume, frequency filter or pitch, all on a linear scale.

The Legacy of “The Hands”

“One of the most cited and most famous works in the DMI literature” (Torre, Andersen and Baldé 2016, 22) is The Hands 5[5. See Michel Waisvisz’s website for more information on The Hands.], created and developed by Michel Waisvisz (1949–2008), who from 1981–2008 was the Artistic Director of the STudio of Electro-Instrumental Music (STEIM) in Amsterdam. The Hands provided a role model for the Strophonion. I’m still fascinated by Waisvisz’s instrument and intrigued by how he played it. Compared, as an electronic instrument, to any traditional, acoustic instrument, the potential of his creation appears to be unlimited. It is capable of combining numerous different sampling and sound morphing techniques in addition to more conventional ways of playing traditional instruments, that is, the control of pitch, rhythm and timbre. In this way, and also with regard to the design of the right-hand controller, The Hands has certainly informed the development of the Strophonion. However, in order that the instrument respond to my own needs as a performer, which are different from those of Waisvisz, I created the Strophonion’s controller system from scratch over a period of two years (2010–11), with immense support offered by STEIM throughout the process.

In order to have some workable models to better define what my specific needs were, I first molded mock-ups of the hand controllers out of clay. Then the visual artist Florian Goettke, who used to be a violin maker, helped me create various prototypes carved out of wood. That’s when Byungjun Kwon, who at that time was the engineer at STEIM, came in and put all the electronics together and installed them inside the newly built cases. 6[6. For more information on the development of the Strophonion see Nowitz 2011.] Finally, it was Frank Baldé, long-standing companion of Waisvisz and developer of the Strophonion’s software components, LiSa and junXion, who was of tremendous help, great inspiration along the path and, most importantly, the person who constructed the sound-æsthetic structure of the Strophonion. Because the design directly impacts how the instrument sounds, his contribution was more than that of a software designer; the nature of the instrument’s structure defines the sonic-æsthetic potential to a large degree. Seen from this perspective, it is hardly surprising that the comprehensive knowledge collected over almost a quarter of a century of researching and developing The Hands has strongly influenced the development of the Strophonion.

At first glance, The Hands and the Strophonion may look similar in terms of physical design, but there are many differences hidden in the details. They differ fundamentally with regard to the technology used to connect the control devices with the host computer, the conceptual approach to the playing of the DMI, and the overall musico-functional design. These three important differences between the two instruments give rise to some basic questions and issues that accompany the process of conceptualizing, designing, building, maintaining and refining a DMI.

Basic Questions When Designing a DMI

Instead of simply comparing the two instruments, I would rather like to shed some light on the underlying questions concerning what actually motivates the development of such an instrument, and about the consequences and implications of the various decisions made along the way.

The first issue that needs to be addressed is of a purely technological nature and is particularly relevant at the present time: should the DMI be wired or wireless? Both approaches should be thoroughly investigated and evaluated before even starting the process of building any DMI, due to, on the one hand, the idiosyncratic implications of each approach as well as the limitations of the visual æsthetics of the instrument, and on the other, the technical requirements and support that are defined, or even limited, by the specific conditions of individual performance venues.

Second is the question of how the playing of the instrument is approached. Will the DMI be used purely as a solo instrument, or should it rather be conceived of as an accompaniment device? Or should its function be to “extend” the performative nature and capacity of an existing instrument, in this case the voice?

Finally, the third question, which concerns the overall musico-functional concept, is whether the control devices should work — and therefore be designed — in a symmetrical or asymmetrical manner.

Following these questions concerning the function of the instrument, there remains the issue of what the æsthetic preferences and goals regarding the sound production might be, and related to this issue, how do we make the playing of the instrument and the related gestures and movements comprehensible to the audience so that an intriguing and persuasive performance that is both ear- and eye-catching can unfold?

Wired or Wireless?

The Strophonion was developed to be wireless, whereas Waisvisz’s Hands is a wired instrument. A wireless version of The Hands would have been possible to implement (as early as 2000), but this is something that Waisvisz never pursued:

The dominant reason lies most likely in that Waisvisz used, from time to time, to interface the Hands with his old synthesizers which he appreciated for their quality of sounds. Furthermore, all technical developments stopped with version three of the Hands. Waisvisz was satisfied of the technology in use. He was now mastering his DMI in its finest details from the hardware to the software. (Torre, Andersen and Baldé 2016, 32)

Yet, to be accurate, there is in fact one cable used within the Strophonion system: providing continuous and linear data from the ultrasonic distance sensor, it connects the receiver on the left-hand controller with the transmitter mounted on the hip belt. The connection of the hand-held interface to the host computer can, if desired by the performer, be completely wireless.

The main reason for striving to develop a wireless instrument was the need to be able to move about freely onstage. As a vocal performer, I’m used to playing instruments in order to “accompany” myself. Before developing the Strophonion, I played piano as well as bass guitar while performing with the voice. However, during solo vocal performances that I did (for the sake of change and diversity), I missed this aspect of performance, and this is the reason I set out to develop a DMI that I could use with my hands and that simultaneously offers me the control over sonic and musical parameters to the highest possible degree. Also, I really wasn’t keen on being constrained by cables onstage. Instead, the goal was to further develop the idea of what I call Klangtanz, or “sound dance”, a live, performative approach during which “sound would be created by dancing; the performer would dance the sound” (Nowitz 2008). My first explorations in Klangtanz were made using the Stimmflieger 7[7. The Stimmflieger, or “voice kite”, was mainly designed in collaboration with composer and sound artist Daniel Schorno who, from 2001–04, was Guest Artistic Director at STEIM and responsible for the configurations of the Stimmflieger’s software components, LiSa and junXion. The Stimmflieger is discussed more in detail in the author’s article, “Voice and Live-Electronics using Remotes as Gestural Controllers,” published in eContact! 10.4 — Live Electronics, Improvisation and Interactivity in Electroacoustics (October 2008).], an instrument I developed using two Wii remotes (Fig. 3).

Pitfalls of Wireless Connection and Backing-Up: An Excursion

On the 5th of November 2011, during the first week of Sound Triangle 8[8. A festival of Experimental Music Exchange featuring Korean and STEIM-related artists and musicians, co-produced by LIG Art Hall (Seoul) and STEIM (Amsterdam), with one-week long programmes in each city.] in Seoul, I premiered the first version of the Strophonion (constructed of wood) and have since had a number of opportunities to perform with it in public. Most of the time, the instrument worked fine, but at some venues I had serious issues due to signal instability. I eventually discovered that the reason for this was that numerous Wi-Fi networks were open and running at the same time. This was most likely causing conflicts with the Strophonion system, whose radio signal transmits over the 2.4 GHz band, a frequency band also used by other short-range transmitting devices such as Bluetooth, mobile phones, microwave ovens and car alarms. The reliability and responsiveness of the instrument were seriously compromised and, on top of that, significant latency was introduced into the system. The other discovery that I made was that even when only very few Wi-Fi networks were detectable, the Strophonion still reacted inconsistently. Apparently, this was due to the quality of the walls at the venue: if their surface is very smooth, the signals are more likely to bounce back and forth wildly, so that conflicts inevitably arise.

When using wireless connections, we actually never have any proof of how the signal flows unless we measure it with appropriate measurement devices, which of course most musicians do not have in their performance kit. What helped me find the solution was the fact that for a period of almost two years I was using the Shells, the prototype of the Strophonion, in combination with a wireless headset system that worked on the 800 MHz band. Every time I used both systems simultaneously, I realized that the responsiveness of the Shells became irregular, which resulted in unwanted and unexpected behaviour. This was apparently due to the fact that when the wireless headset system’s transmitting frequency is multiplied by three, it corresponds to the Strophonion’s band of 2.4 GHz. In order to be able to detect signal flow issues, the musician needs to have in-depth knowledge of the instrument’s mode of action and its internal construction, as well as, to an extent, its circuits. For the musician who is “only performing” with a custom-built instrument built by someone else, without the assistance of a technician it can sometimes be difficult or even impossible to know what is going wrong, or why something is not working, and to then be able to figure out which problem is causing what. In this particular case, my familiarity with the design and circuitry of both instruments helped me localize and resolve the problem.
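The harmonic relationship described above can be checked with a few lines of arithmetic. The following is only a sketch: the function name and the search limit are hypothetical, the band edges (2400 to 2483.5 MHz) are the commonly cited limits of the 2.4 GHz ISM band, and the headset's exact carrier frequency is not given in this text, so 800 MHz is used as a nominal value.

```python
# Commonly cited edges of the 2.4 GHz ISM band, in MHz (an assumption of
# this sketch; the article only speaks of "the 2.4 GHz band").
ISM_BAND_MHZ = (2400.0, 2483.5)


def harmonics_in_band(fundamental_mhz, band=ISM_BAND_MHZ, max_order=5):
    """Return the harmonic orders n for which n * fundamental lies in the band."""
    low, high = band
    return [
        order
        for order in range(1, max_order + 1)
        if low <= order * fundamental_mhz <= high
    ]


if __name__ == "__main__":
    # Nominal 800 MHz headset carrier: only the third harmonic (2400 MHz)
    # lands in the band used by the controllers.
    print(harmonics_in_band(800.0))
```

A check of this kind cannot replace a measurement, but it helps decide which pieces of wireless equipment are worth testing in isolation before a performance.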

Taking all my experiences with wireless signal flow into account, it became obvious that the Strophonion circuitry needed to be expanded so that a wired performance using the instrument was possible in addition to the wireless mode. During another residency at STEIM in August 2014 (thanks to a grant by the Brandenburg State Ministry of Science, Research and Culture), Berlin-based electronic engineer, sound artist and guitar player Sukandar Kartadinata implemented the new circuitry. Since then I have been able to perform using the instrument regardless of where the venue is located or how the performance space is designed. In order to improve the instrument’s performance, Kartadinata also refined some parts of the electronics. Additionally, Chi-ha-ucciso-Il-Conte?, Italian product designer and engineer at that time at STEIM, manufactured a backup version of the hand controllers, so that if the original wooden ones get broken I’m still able to perform. Based on Goettke’s original wooden controllers, Chi-ha-ucciso-Il-Conte? designed a new version of the devices consisting of an assemblage of 3D prints in black (Fig. 4).

Summarizing the pitfalls of wireless connection, I can now assume that typical theatre venues usually don’t have much Wi-Fi traffic emanating from exterior networks and therefore few, if any, network-related conflicts should arise in such venues. If, in addition, the venue walls are equipped with curtains, I’m most likely able to perform using the wireless version of the Strophonion. At open-air festivals and bigger concert halls, though, I might have to switch to the wired one. This is why, at each venue, sufficient time to do a proper sound check is crucial to ensure a successful and convincing performance. On the one hand, I need to scrutinize the signal flow of the Wi-Fi connection and, on the other, I need to get familiar with the acoustic conditions of the venue, since the Strophonion, due to the microphones used, is a highly dynamic instrument, allowing the user to record and play back the voice in real time.

Solo Instrument or Extension of the Voice: A Thought on the Conceptual Approach

Looking at the differences between The Hands and the Strophonion, a second question arises concerning the performative approach to the instrument. Waisvisz played The Hands as a solo instrument, most of the time. In contrast, as a vocal performer, I consider the Strophonion to be both an instrument and an extension of the voice. On the one hand, in order to master the complexity of its musico-functional architecture, the Strophonion needs to be practiced just as any other traditional, acoustic instrument. On the other hand, as the Strophonion was developed as a device to extend the voice, it is intended to be used in combination with the natural live voice of the performer. One of a number of different practices that I’m interested in is the process of extracting the live voice and inserting it again into the current sonic or musical process. Because this is usually done at an extremely high velocity, it can be very difficult to differentiate between the natural acoustic voice and the digitally reproduced voice. In fact, due to the way I integrate them in performance, the boundary between the two can become quite blurred. The human and the machine-driven approach intermingle and a new form of vocal virtuosity and expressiveness thereby emerges. Pieter Verstraete, a scholar from Belgium currently lecturing in American Culture and Literature at Hacettepe University Ankara, describes this particular process:

Nowitz accustoms the listener to his amplified voice, which he feeds into the LiSa software…. He gradually begins to hold the remote controls in both hands in such a way that the recorded sounds start playing back. He then quickly manipulates the sounds through various hand gestures, giving one the sense that he is in control. This play with instant acousmatised sound through interface control produces an interactive space that is both fascinating to listen to and to watch, as we assess the sounds and the creator’s gestures. It produces a highly focused space that calls attention to the performer’s body as well as the disembodiment of his voice in the feedback system, as it “feeds back” to its originating body. (Verstraete 2011) 9[9. Verstraete's essay is part of a limited edition vinyl production documenting performances of Study for a Self-Portrait, No. 2 (Nowitz), for amplified voice and live electronics (using Wiis and the Stimmflieger, respectively), and How Marquis Yi of Zeng Miraculously Escaped Death (Xiao He), both recorded on 29 May 2011 as part of Who’s Afraid of the Modern Opera, a collaboration between De Player (Rotterdam) and Operadagen Rotterdam Festival.]

Symmetrical or Asymmetrical: The Musico-Functional Design

Closely connected to the conceptual idea of how to approach the instrument in a performative sense is a third question relating to the design of the devices. Comparing the Strophonion with The Hands, we see that the shape of the left-hand controllers of the two instruments is dissimilar; as a consequence, the overall musico-functional concept of the instruments is different as well. The Hands has a symmetrical design, whereas the Strophonion is an asymmetrical instrument, comparable to the notion of playing the violin. In this respect, the musician needs both hands to produce a single sound event. The creation of a crescendo using the Strophonion can serve as an example of this approach: the right-hand fingers trigger up to twelve playback events at a time of a chosen sample 10[10. The actual number depends on how many pitch keys the musician is able to press simultaneously.] while the left hand, using the ultrasonic distance sensor, raises the overall volume from zero up to the desired level. This is achieved in the following way: first, the left-hand controller is close to the hip, corresponding to zero volume; gradually, the left-hand controller is moved away from the hip, creating the crescendo effect; the loudest degree is attained once the left arm is completely outstretched (see Video 1 at 0:28).
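The crescendo gesture can be pictured as a linear mapping from hip-to-hand distance onto a volume value. The sketch below is illustrative only and is not taken from the junXion or LiSa configuration: the function name and the near and far distances (10 cm and 70 cm) are hypothetical, and the 0 to 127 output range simply reflects the 7-bit value range of a MIDI controller message.

```python
def distance_to_volume(distance_cm, near_cm=10.0, far_cm=70.0):
    """Map a hip-to-hand distance linearly onto a MIDI-style value (0-127).

    near_cm: distance treated as "controller at the hip" (volume zero).
    far_cm:  distance treated as "arm fully outstretched" (full volume).
    Both defaults are hypothetical calibration values.
    """
    position = (distance_cm - near_cm) / (far_cm - near_cm)
    position = min(1.0, max(0.0, position))  # clamp readings outside the range
    return round(position * 127)


if __name__ == "__main__":
    # Moving the left hand away from the hip raises the volume step by step.
    for distance in (10, 25, 40, 55, 70):
        print(distance, distance_to_volume(distance))
```

In practice the two end points would be calibrated to the performer's arm length, so that the fully outstretched arm corresponds to the loudest level.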

The musician needs both hands to shape one sonic or musical event. In contrast, The Hands follows a symmetrical concept corresponding to playing the piano: each hand produces one sound entity and both hands can therefore act independently from each other. One reason for me to design the Strophonion in an asymmetrical way goes back to my experiences playing bass guitar in former days. 11[11. In the late 1980s and 90s, in addition to keyboards, guitar and vocals, I also played bass guitar in a few bands, such as Volvox (in Landshut/Munich, Germany) and Tony Buck’s Astro-Peril (Berlin).] Another reason is that since 2008 I have been playing the Stimmflieger, an electronic instrument whose controllers — two Wii remotes — are used symmetrically in counter rotation.

The Idea of the Flying Piano: The Key Pad on the Right-Hand Controller

The main reason why I was aiming for touch control by means of the fingers in order to start and stop sonic or musical events lies in the fact that I have a background as a pianist in addition to my major studies as a singer. Being right-handed, I decided that the pianistic and virtuosic demands of playing the instrument should go into the design of the right-hand controller. I did quite a bit of research into how to realize a key pad that would allow me to play in a virtuosic manner without having to learn new skills or, in other words, using the skills that I already have at my disposal. Another reason for the extensive and meticulous research that visual artist and former violin maker, Florian Goettke, and I pursued, was to design the shape of the instrument ergonomically in order to avoid problems in my wrist such as I had developed using the Wii remotes. In fact, I’m extremely happy with the final shape that we elaborated 12[12. See Nowitz 2011 for documentation of the development of the instrument.], since neither my muscles nor my joints have so far gotten strained at all. Furthermore, I was fascinated by the idea of creating a controller as some sort of a flying piano. In the end, there are not so many different ways to construct a device that sits perfectly in the hand and allows for fast and unbridled playing. This is the main reason why the right-hand controller of the Strophonion somewhat resembles the data gloves of The Hands.

Right-Hand Controller Pitch Mapping and Notation

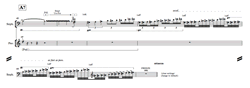

On the top of the right-hand controller, there are twelve pitch keys that are programmed to provide the equivalent number of tones, grouped as three rows having four tones each (Fig. 5). The pitches are spread within an octave in intervals corresponding to the 12-tone, equal-tempered scale of Western harmony. The tones of every row are activated by (from left to right) the index, middle, ring and little finger.

The original and therefore only untransposed sample is assigned to and always triggered by the middle finger on the corresponding key, second from left in the middle row. The lowest transposition of the sample is triggered by the index finger on the leftmost key of the upper row, whereas the highest transposition is triggered by the little finger on the rightmost key of the lower row. So, to play a downward semitone “scale” starting with the original, the right hand starts pressing keys in the following order: middle row keys pressed by the middle then index finger, then the top row keys are pressed by the little, ring, middle and, finally, index finger. Accordingly, movement in semitone steps upwards works in the opposite direction. In this manner, the original sample key has a transposition range of a perfect fourth downwards and a tritone upwards (Fig. 6).
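The key layout described above can be summarized as a small lookup, sketched here under stated assumptions. The row and finger names and both function names are hypothetical, and the equal-tempered ratio is the textbook formula for a semitone transposition, not a description of how LiSa transposes samples internally.

```python
ROWS = ("upper", "middle", "lower")            # rows of the right-hand key pad
FINGERS = ("index", "middle", "ring", "little")  # left to right within a row
LOWEST_OFFSET = -5  # upper row, index finger: a perfect fourth below the original


def semitone_offset(row, finger):
    """Semitone transposition triggered by the key at (row, finger)."""
    return LOWEST_OFFSET + ROWS.index(row) * len(FINGERS) + FINGERS.index(finger)


def equal_tempered_ratio(offset):
    """Frequency ratio of a transposition by `offset` equal-tempered semitones."""
    return 2.0 ** (offset / 12.0)


if __name__ == "__main__":
    for row in ROWS:
        print(row, [semitone_offset(row, finger) for finger in FINGERS])
```

Read row by row, the offsets run from -5 (upper row, index finger) through 0 (middle row, middle finger) to +6 (lower row, little finger), which matches the stated range of a perfect fourth downwards and a tritone upwards.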

I generally notate the Strophonion on a single staff with conventional Western music symbols and with the original sample on middle C (C4). This not only indicates the keys that need to be pressed on the right-hand keypad but also emphasizes that the resulting sound is pitch-related. When the sounding result is rather timbre-related noise, I use diamond-shaped noteheads to indicate the keys to be pressed (Fig. 7). Since the sounding result depends on the actual sample loaded, the score for the Strophonion is always transposing, unless the original sample key happens to trigger a sound having the pitch of middle C.
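As a rough illustration of this transposing notation, the written pitch for each key can be derived from its semitone offset relative to the original sample. This is a sketch only: the function name is hypothetical, sharps are used throughout for simplicity (an actual score may well spell some notes with flats), and middle C is taken as MIDI note 60 under the C4 convention.

```python
NOTE_NAMES = ("C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B")
WRITTEN_ORIGINAL = 60  # middle C (C4) as a MIDI note number; a convention, not a sounding pitch


def written_pitch(offset):
    """Written note name for a key `offset` semitones from the original sample key."""
    midi = WRITTEN_ORIGINAL + offset
    octave = midi // 12 - 1
    return f"{NOTE_NAMES[midi % 12]}{octave}"


if __name__ == "__main__":
    # The twelve pitch keys span offsets -5 (lowest) to +6 (highest).
    print([written_pitch(offset) for offset in range(-5, 7)])
```

The written range of the twelve keys therefore runs from G3 to F#4, whatever the loaded sample actually sounds like.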

Alternate Mode: Changing Functionality

A pressure sensor and a shift key are available on the back of the right-hand controller (Fig. 8). The shift key modifies the functionality of the instrument and changes the mode of action of the keys on the front of the right-hand controller, as well as those on the left-hand controller. When the shift key is pressed in combination with one of the twelve keys on the top, the right-hand controller turns into an operational tool that can be used to call up a variety of functions and modes that don’t need to be called up on the fly, unlike those triggered by the keys of the left-hand controller. The right-hand controller comprises the following functions and modes when the shift key is used:

- Three keys to access three sets (channels 1–2, buffer zone A; channel 3, buffer zone B) of stored sound manipulations;

- Two keys to load pre-recorded samples (ten shared by channel one and two, and ten for channel three);

- One key to call up sample bank 1 (if sample bank 2 is active);

- Two keys to increase or decrease the degree of responsiveness of the sensors;

- One key to change the modality of the pressure sensor;

- One key to return to the default octave (original sample octave);

- One key to recall the default state of the current selected channel;

- One “panic” key to recall default states on all three channels.

Combining Both Hand Controllers in Action

Transposition

To play back a sample above or below the default octave, the performer uses a combination of keys on the right-hand controller and the joystick on the back of the left-hand controller (Fig. 9). The joystick is pushed to the right or to the left in order to transpose the pitches up or down an octave, respectively. Pushing the joystick to the left twice in rapid succession transposes the sample down two octaves. For upward transposition, there are three octaves available by pushing the joystick three times to the right in rapid succession. The total range available using only the right-hand keys and the left-hand joystick is six octaves. The ultrasonic distance sensor can also be used to transpose by an octave, so the operation of the left arm when using the Strophonion increases the range available to the user by a further two octaves: one downward and one upward. Therefore, the total range of the Strophonion covers eight octaves.
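The octave arithmetic in this paragraph can be written out as a short sketch. The constant and function names are hypothetical; the shift ranges (two octaves down and three up for the joystick, one octave either way for the distance sensor) are taken from the description above.

```python
JOYSTICK_SHIFT = (-2, 3)  # octaves reachable with the joystick: two down, three up
DISTANCE_SHIFT = (-1, 1)  # further octave down or up via the ultrasonic sensor


def octaves_covered(*shift_ranges):
    """Number of octaves reachable when the given shift ranges are combined.

    The key pad itself covers one octave; every octave of shift, down or up,
    adds one more octave to the total.
    """
    lowest = sum(low for low, _ in shift_ranges)
    highest = sum(high for _, high in shift_ranges)
    return highest - lowest + 1  # +1 for the default, untransposed octave


if __name__ == "__main__":
    print(octaves_covered(JOYSTICK_SHIFT))                  # keys and joystick
    print(octaves_covered(JOYSTICK_SHIFT, DISTANCE_SHIFT))  # plus distance sensor
```

With the joystick alone the result is six octaves, and adding the distance sensor gives eight, in agreement with the figures stated in the text.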

Sustain

The joystick, operated by the thumb, not only provides a means to transpose by one or more octaves, but also provides the option to activate and deactivate the sustain mode of playback and recording events. Pushing the joystick downwards disables the sustain mode, while the upward direction enables it.

Double-Shift Mode

Combining the shift key of the right-hand controller with the left-hand shift key brings the musician to another level of functionality that I call the “double-shift mode”. Via the same right-hand buttons as those used either for normal playing (with or without octave transposition) or in alternate mode (with the single-shift key on the right-hand controller), this realm of the instrument provides access to several additional modes and functions:

- Two keys for enabling or disabling the mode to define where the recording is supposed to start from in the sample buffer (by default, or when turned off, the recording starts from the beginning of the sample buffer; activating this mode allows the performer to choose the starting point of the recording by using the x-axis of the right-hand controller);

- Two keys for enabling or disabling octave transpositions controlled by the joystick;

- Two keys for enabling or disabling octave transpositions controlled by the ultrasonic distance sensor;

- Two keys for turning the reverberation on or off in the overall output;

- Three keys for clearing three sample buffer zones (one for channel one and two, one for channel three, one for the entire sample buffer);

- One key to select the second sample bank, providing another set of twenty pre-recorded samples.
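The layering of functions on the same twelve right-hand keys can be modelled as a small table, sketched below. All names are hypothetical labels for the functions listed in this article, and the behaviour of the right-hand keys when only the left-hand shift key is held is an assumption of this sketch (they are treated as ordinary pitch keys).

```python
# (function label, number of right-hand keys it occupies), per shift layer.
ALTERNATE_LAYER = [
    ("select stored sound-manipulation set", 3),
    ("load pre-recorded samples", 2),
    ("call up sample bank 1", 1),
    ("increase / decrease sensor responsiveness", 2),
    ("change pressure-sensor modality", 1),
    ("return to default octave", 1),
    ("recall default state of current channel", 1),
    ("panic: recall default states on all channels", 1),
]

DOUBLE_SHIFT_LAYER = [
    ("enable / disable recording start-point mode", 2),
    ("enable / disable joystick octave transposition", 2),
    ("enable / disable distance-sensor octave transposition", 2),
    ("reverberation on / off", 2),
    ("clear sample buffer zones", 3),
    ("select sample bank 2", 1),
]


def keys_used(layer):
    """Total number of right-hand keys occupied by a layer's functions."""
    return sum(count for _, count in layer)


def right_hand_layer(right_shift, left_shift):
    """Layer addressed by the twelve right-hand keys for a given shift state."""
    if right_shift and left_shift:
        return "double-shift"
    if right_shift:
        return "alternate"
    return "normal"  # assumption: left shift alone leaves the pitch keys unchanged


if __name__ == "__main__":
    print(keys_used(ALTERNATE_LAYER), keys_used(DOUBLE_SHIFT_LAYER))
```

Both layers account for exactly twelve keys, so every key on the top of the right-hand controller carries one function in each of the three layers.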

Pitch Bend

The front of the left-hand controller also has six buttons that can be used to “bend” the pitch (glissando) of the playback. After pressing the right-hand shift key, the six left-hand buttons become available and allow for six glissando intervals — quarter tone, semitone, whole tone, minor third, tritone and octave — in either direction (upward or downward), controlled by the x-axis of the accelerometer housed in the right-hand controller.
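One way to picture the six glissando intervals is as bend depths in semitones, scaled by the tilt of the right hand. The following is a sketch under explicit assumptions: the normalized tilt value (from -1 for a full downward bend to +1 for a full upward bend), the linear scaling and the function name are all hypothetical, and the article does not describe how junXion or LiSa actually scale the accelerometer data.

```python
# Bend depth of each glissando interval, in equal-tempered semitones.
GLISSANDO_SEMITONES = {
    "quarter tone": 0.5,
    "semitone": 1.0,
    "whole tone": 2.0,
    "minor third": 3.0,
    "tritone": 6.0,
    "octave": 12.0,
}


def bend_ratio(interval, tilt):
    """Frequency ratio for a bend of `interval`, scaled by a normalized tilt.

    tilt: hypothetical normalized x-axis reading in [-1.0, 1.0];
          0.0 leaves the pitch unchanged.
    """
    tilt = max(-1.0, min(1.0, tilt))  # clamp out-of-range sensor readings
    semitones = GLISSANDO_SEMITONES[interval] * tilt
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    for name in GLISSANDO_SEMITONES:
        print(name, round(bend_ratio(name, 1.0), 4))
```

Choosing a small interval gives fine control over a narrow bend, whereas the octave setting turns the same hand movement into a wide glissando.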

Assigning Functions and Modes to the Left-hand Controller Keys

The left-hand device is gripped in the hand with the thumb positioned to be ready to control the joystick and shift key; the little finger is inserted in a hole and the index finger further supports the device via a cork-lined extrusion, giving the user enough stability while playing. In addition to the shift (or modifier) key on the back and the six keys on the front, there is an overall reset or “panic” button that is positioned on the outside of the finger ring in order to avoid it getting triggered accidentally (Fig. 10).

The functionality of the instrument, its recording modalities and the enabling of the corresponding sensors, all of which need to be controlled on the fly during performance, are triggered by the six keys on the front of the left-hand controller. These keys offer control of the overall volume and frequency filtering (both via the ultrasonic distance sensor), as well as two recording modes (one for the voice, and one for the overall output of both the computer and the voice), a slow “scratching” mode via the y-axis of the accelerometer of the right-hand controller, and pitch bending via the x-axis of the accelerometer. By pressing the left-hand shift key, a further set of five different modes is retrievable:

- The mixing in and out of an additional voice, via the x-axis;

- A fast “scratching” mode that allows the performer to move rapidly through the entire sample buffer, via the x-axis;

- Control of the length of the sample chosen, via the x-axis;

- Activation and deactivation of the pressure sensor as an additional volume booster;

- Release time behaviour of each note event, allowing the differentiation between three different release times (zero, short and long release, the latter by pressing two keys simultaneously).
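The two layers of left-hand functions described above can be summarised as a simple lookup table. The Python sketch below is hypothetical: the order of the keys, the names `BASE_LAYER`, `SHIFT_LAYER`, `lookup` and `release_time`, and the idea that two dedicated keys select the release time are assumptions made for illustration, not a description of the actual software.

```python
from typing import NamedTuple, Optional


class Mode(NamedTuple):
    name: str
    sensor: Optional[str]  # sensor that drives the mode, if the text names one


# Modes on the six front keys (key order is an assumption).
BASE_LAYER = (
    Mode("overall volume", "ultrasonic distance"),
    Mode("frequency filtering", "ultrasonic distance"),
    Mode("record voice", None),
    Mode("record overall output", None),
    Mode("slow scratching", "accelerometer y-axis"),
    Mode("pitch bend", "accelerometer x-axis"),
)

# Modes retrieved while the left-hand shift key is held (order assumed).
SHIFT_LAYER = (
    Mode("mix additional voice", "accelerometer x-axis"),
    Mode("fast scratching", "accelerometer x-axis"),
    Mode("sample length", "accelerometer x-axis"),
    Mode("pressure volume boost on/off", "pressure sensor"),
    Mode("release time", None),
)


def lookup(key_index, shift_held):
    """Return the mode for a front key, or None if the key is unassigned."""
    layer = SHIFT_LAYER if shift_held else BASE_LAYER
    if 0 <= key_index < len(layer):
        return layer[key_index]
    return None


def release_time(zero_key_down, short_key_down):
    """Pick one of three release times from two hypothetical keys.

    Pressing both keys simultaneously selects the long release.
    """
    if zero_key_down and short_key_down:
        return "long"
    if short_key_down:
        return "short"
    if zero_key_down:
        return "zero"
    return None
```

Keeping the layers as plain data rather than branching logic makes it easy to see at a glance which sensor each mode relies on.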

Performance

Integrating the Strophonion in Vocal Art Performance

Three playing modes ought to be considered and distinguished when using the Strophonion in vocal art performance. The first is the most obvious one: the Strophonion is used as a solo instrument to accompany the vocalist, comparable to the use of a piano or guitar. However, it is more interesting to explore the integration and merging of the live voice with the sonic potential that the Strophonion itself offers. In this second mode, the performer either uses pre-recorded samples or records the voice during the performance and employs this material in real time, which is definitely a much riskier venture than the first approach. Of course, the two methods, using pre-recorded material and sampling live, can intermingle.

As the Strophonion is designed to be able to play back pre-recorded samples, it also allows for a third mode: the elaboration of fixed media compositions that are always repeatable and can be presented with the highest sound quality. The composer can, for example, go into the studio and record the voice with high-end microphones in the best possible circumstances, i.e. without the kinds of undesirable extraneous noises that are typical of live performance. Then, after mixing and editing the recorded material, the final result can be imported into the Strophonion setup to be played with live. This approach is obviously much more time- and labour-intensive than the previous modes, but it allows for the composition of works of a very high sound quality that can be presented in multiple “identical” performances. Although each live performance of a work composed in this mode may differ somewhat in the timing or balance of its parts (as with the performance of virtually any chamber music work!), the whole reflects the same sounding essence each time it is performed. In 2015, I composed Playing with Panache in this manner.

Playing with Panache starts off with the author simultaneously performing a live vocal part and controlling a recording of his voice sampled from another piece called Panache (Nowitz 2015b). After approximately 24 seconds, the oneness of both voices gradually dissipates as if they were trying to gain independence from each other. During a sequence where the live voice has a short “solo” part, the performer is recording this solo in real time and immediately playing back its result in combination with ongoing new material generated by the live voice. During the course of the work, the performer constantly overwrites the sample content in the sample buffer such that the initial, pre-recorded sample of Panache vanishes completely. Since it is stored as a pre-recorded sample, the performer can always reload it and come back to it.

What actually happens during the performance of this piece, however, is that the performer generates new sounds by recording alternately the live voice and the overall output of the computer, which is the sum of the recorded live voice and the manipulations applied in LiSa, the live sampling programme of the Strophonion. Because the re-sampling technique is employed repeatedly, the material is subject to constant change, is never the same, and therefore creates an aura of freshness, an æsthetic of continuous stimulation. In fact, Playing with Panache is one of those pieces during which the performer has to be highly attentive to the newly generated sound and, in this respect, has to become some sort of a momentary sound researcher who steps into what Pieter Verstraete (2011) calls “the slippery zone of playing and being played with”. 13[13. This also recalls Hans-Georg Gadamer’s reflection that “all playing is a being-played. The attraction of a game, the fascination it exerts, consists precisely in the fact that the game masters the players. Even in the case of games in which one tries to perform tasks that one has set oneself, there is a risk that they will not ‘work,’ ‘succeed,’ or ‘succeed again,’ which is the attraction of the game. Whoever ‘tries’ is in fact the one who is tried. The real subject of the game (this is shown in precisely those experiences in which there is only a single player) is not the player but instead the game itself. What holds the player in its spell, draws him into play, and keeps him there is the game itself” (Gadamer 2004, 106).] For the performer, it is intriguing to constantly feed the computer with new vocal material and, at the same time, to be informed by hearing oneself through the playback manipulations applied by the audio software and amplified through the loudspeaker system. The vocal vocabulary grows continuously during the actual act of performance. 
This process reveals, in both audible and visual ways, how vocal sounds can be inspired and influenced during the performance by the gesture-controlled computer system. Within this context, the performer has the task of making such processes comprehensible to the audience. Eventually, the whole system, consisting of the live voice and the Strophonion, constitutes a sonic and musical apparatus that is self-inducing and self-containing on a permanent basis. Pieter Verstraete describes this performance aspect in the following way:

The feedback system seems to allow for a fair amount of play, where its responses seem to be not just manipulated by the gestures, but also affect both the performer and listener. Here, the acousmatised and immediately de-acousmatised voice of the performer reconfigures a sense of acousmêtre, which constantly negotiates a sense of control over the sound production of his voice. In a reciprocal way, Nowitz constantly tests the distribution of his voice as auditory self-image in relation to its virtual counterpart, while over-layering it with new disembodied sounds. (Verstraete 2011)

From Embodying Through Disembodying to Re-embodying the Voice: The Æsthetics of Vocal Plurality

Once I have extracted a fragment or passage of a live, extended vocal performance and have stored, processed or otherwise manipulated it using technological means, such as gestural controllers, the vocal material is, of course, no longer controlled and directed by my voice, but rather by the touch of my fingers and the movements of my hands and arms. In fact, as with Klangtanz, my entire body is involved in the process of steering the sounds of the extracted voice. The play with the “source” vocal sounds continues (is “extended” further) using the physical movements of the extremities of the body, with the help of computer processing. When the remote- and gesture-controlled machine is constantly fed with new voice material, and the results are instantly manipulated and played back in real time, novel sound properties, hitherto unknown to the performer, arise. Comparable to the act of chiselling a sculpture, abstract vocal soundscapes are moulded out of concrete vocal material during this process. Both sound areas, that of the natural, live voice and that of the abstract, extracted voice, intermingle and merge. The rapidity with which this method of sound creation and processing is applied can easily seduce the listener. At the same time, it can also be confusing, because the audience is no longer able to unequivocally determine the origin of what has just been heard: is what we hear the live voice performed using extended vocal techniques, the recorded voice alienated via computational manipulations, or the sum of both? A process of disembodiment takes place: even though the vocal material is still controlled continuously via the extremities of the body, the voice is pulled out of its actual self, is extracted. This disembodied voice could well be called an acousmatic voice since it can no longer be attributed to its origins; the actual producer of the sound that is heard at the end of this process is now invisible. 14[14. 
The term “acousmatic” dates back to the Greek akousmatikoi, identifying the disciples of Pythagoras. During his lectures, the master was hidden behind a curtain so that only the sound of his voice could be heard. This, so he believed, would ensure that they could listen more attentively and follow his lectures without being distracted by his visual presence. Only at a later point in time, when the disciples grew more mature, would Pythagoras allow them to also see him while he was lecturing. See also “Acousmatic, adjective: referring to a sound that one hears without seeing the causes behind it,” in Schaeffer 1966, 91.] In the context of a live performance with the musician in view on stage, the audience can of course trace the heard sound back to its origins in the natural voice of the vocal performer. In reality, however, the listener hears only the manipulations of the virtual voice, itself consisting of residual components of a recording of a voice that was initially produced acoustically. What this listener cannot do is perceive the actual producer or production in the moment during which the sound is (re)produced, unless the performer persuades the audience by making the process transparent, demonstrating clearly, for example, that the current computational voice is generated and controlled by the extremities of the body: fingers, hands and arms. At this point, the disembodied voice is, in a way, re-embodied. Hence, the task for the performer is to resolve the paradox of the unseen sound by making it manifestly evident that the acousmatic voice is controlled by and mediated through body actions. Or, as Alexander Schubert explained it:

Ein maßgebliches Ziel ist die Nachvollziehbarkeit und Verkörperlichung (Embodiment) von elektroakustischen Klängen durch den Interpreten, so dass die Elektronik als Instrument körperlich interpretierbar und für das Publikum erfahrbar gemacht wird. (Schubert 2012, 24) 15[15. A key aim is the traceability and embodiment of electroacoustic sounds that the interpreter implements in such a way that the electronics as an instrument can be interpreted corporally and thus experienced by the audience.]

In addition, the processes that take place as the vocal material is transformed from embodied to disembodied and to re-embodied, and so forth, establish a performance situation of augmented perception, a perceptive awareness that brings forth and amplifies, as Pieter Verstraete calls it, “a space for listening to listening,” which both the performer and the audience are involved in and affected by:

[T]he technological display of the interface and Nowitz’s vocal and gestural gymnastics never produce a totalitarian space. Instead, the control situation opens up a space for listening to listening, a highly self-aware and self-regulating perception of the listening self as performer. The listener can also feel invited to relate the disembodied sounds back into the performer’s bodily gestures, whilst making sense of the soundscape thus created. (Verstraete 2011)

Questions of Representation Raised by the Performance Concept of the Multivocal Voice

One question that always remains for the vocal performer is whether we represent anybody or anything and, if so, who is it that we represent on stage, especially when using the potential of the voice to its full extent and, moreover, in combination with technological means. Even though we might strongly reject the idea of “representation” — in its outdated meaning we know from the bourgeois opera — the performance voice presented live still always represents someone or something. But who or what is in fact represented if the voice components are splintered and fragmented, and reconfigured in new ways? Certainly new types of unimaginable sonorities occur in the course of such a performance. But to whom are we listening? Whom do we watch during the performance of excess? What do we appreciate in an asemantic display of the limits of vocal expression? It was Aristotle who (in De Anima, chapter 8) said that the “voice is a particular sound made by something with a soul.” I’d like to take this thought a little further by asserting that the human voice is always already a bearer of emotional information that, as such, we are trained to listen to — and this is most certainly the case in the realm of vocal performances. And, further, that the “voice is a particular sound” that touches us simply due to its sound waves. But what happens then when the voice presents itself in a disjointed and multiple manner? The practice of vocal plurality — that is, multiple vocal elements executed by one single performer — exposes no singular character anymore but rather a vast number of different, distinct personas and figures, or even ghosts, shades and spectres of dreams. They all appear on stage while competing with each other. 
In works like Playing with Panache (Video 2) or Untitled (Video 3), the performance practice reaches a degree of plurality, via the occurrence of machine-inspired and animal (bird-like whistling) sounds, all of which I subsume under the legacy of the non-human, that seemingly aims to reflect the whole world of vocal expression potential through the presence of one single body and its technological extensions.

Another question that is raised in this context is what happens when the extracted voice (or the result of all the extracted voices collected as a result of the processes described above) is confronted again by the natural voice. Due to the disparity between the concrete live voice — the bearer of emotional content — and the abstract, computationally reproduced voice, tensions of a sonic-æsthetic nature build up and can unleash a power within that both realms nourish and inform, but they can also eliminate each other. In any event, due to the merging of both voices, in one way or the other, a new voice entity arises which, taking into account all its manifold appearances, establishes a complex and multiple stage persona or stage-self (this is as literal a translation as possible from the German komplexes Bühnen-Ich). 16[16. An early version of this thought appears within the essay Über das Erweitern und Extrahieren der Stimme. Ein Versuch zur Ergründung des Wesens der Kunststimme im Kontext zeitgenössischer Vokalperformance published online on the occasion of the solo performance Extended and Extracted (2014), for a vocal performer and live electronics, composed and performed by the author during the VO1CES festival in Signalraum Munich on 9 April 2014. See Nowitz 2014.] The vocal performer of today must be more than one in order to be able to be one. Having said this, I happily concede that this concept is by no means new, having been explored extensively by a vast number of vocal performers 17[17. 
To name but a few: Tomomi Adachi, Laurie Anderson, Tone Åse, Franziska Baumann, Jaap Blonk, Audrey Chen, Paul Dutton, Michael Edward Edgerton, Nicholas Isherwood, Salome Kammer, Joan La Barbara, Phil Minton, Fátima Miranda, Meredith Monk, David Moss, Sainkho Namtchylak, Mike Patton, Maja Ratkje, Dorothea Schürch, Demetrio Stratos, Mark van Tongeren, Jennifer Walshe, Ute Wassermann, Trevor Wishart, Pamela Z and many others…], although it has not yet been extensively formulated except by a very few authors (Åse 2014; Weber-Lucks 2008; Young 2015). In any case, I’d like to outline and designate this concept, at least for now, as the multivocal voice. 18[18. The author is currently working on an artistic PhD project, under the supervision of Sten Sandell and Rolf Hughes, with the preliminary title “The Multivocal Voice: Mapping the terrain for the contemporary performance voice,” College of Opera, Stockholm University of the Arts.]

Body Work and Choreographic Interventions: Mediating Technologically Equipped Vocal Art Performances

I would venture a guess that such intricate explorations of vocal art performance need to be mediated somehow. One way to do so would be to let the performer’s body dissolve these tensions by expanding the vocabulary of movement of the arms and legs, on the one hand, and filling the performance space, on the other. As mentioned above, this idea for me dates back to 2008 when I coined the term Klangtanz.

In order to better explain this, let me draw attention to normal, everyday life situations in which gestures executed by the hands (assuming they are free to do so!) are typically meant to convey supplementary meaning that is perhaps not fully articulated through the spoken words alone; in other words, the physical gestures provide some between-the-lines information that underpins and further explains the words as they are spoken. When I am performing with the Strophonion, however, these kinds of gestures are no longer possible, as both hands are occupied with holding and manipulating the controllers. In fact, when the performer’s hands are responsible for triggering and shaping sound, the resultant gestures are very concrete, and attentively concerned with determining the sonic and musical outcome. Therefore, instead of using everyday hand gestures, other methods of gestural mediation need to be explored in order to help a vocal art performance act unfold convincingly. I figured that this task could actually be completed by the rest of the body, so that the whole body is then used in performance. This is in fact a very foreign idea for a musician, but the overall performance gains tremendously in quality when the actions of the entire body are embedded into the vocal performance act, as opposed to exclusively focussing on the sonic result of playing the Strophonion and remaining in a static performance position, or state.

So, in order to enhance the performer’s presence and, in consequence, the performance itself with regard to both the resulting sound or music and its visual appearance, I decided to start a collaboration with a dancer working on the corporeality of body movements. Hence, I extended an invitation to Florencia Lamarca, a dancer who has integrated into her artistic approach a fascinating movement technique called “Gaga”. 19[19. Lamarca and the author know each other from performing together in more than seventy performances of The Summernight’s Dream (2006–2010), a theatre production at the Schaubühne Berlin under the direction of Thomas Ostermeier and Constanza Macras. Lamarca presents solo shows and is also a permanent member of the Sasha Waltz & Guests dance company in Berlin.] Gaga is an intriguing movement language that was developed by Ohad Naharin, the choreographer and Artistic Director of the Batsheva Dance Company; Lamarca has previously collaborated with Naharin and his company in Tel Aviv. 20[20. See the Batsheva website for more information on Gaga.] The experiences of Gaga that Lamarca brought into the project helped me learn how to open up my whole body, especially my chest and shoulders, while playing the instrument. At the same time, the quality of my movements became more fluid and natural, as opposed to modes of action that had sometimes been a little more robotic. This collaboration also resulted in bigger and more space-consuming movements that seemed to make the overall performance more gripping and appealing.

All in all, the increasing inclusion of whole-body movements in performance practice seems to me the most natural step to take. And maybe, by applying this newly gained practice to my ongoing vocal art performance, a doorway to new possibilities will open up that may even supply some answers to the compelling question that French philosopher Jean-Luc Nancy raised in his essay “À l'écoute” almost fifteen years ago:

Although it seems simple enough to evoke a form — even a vision — that is sonorous, under what conditions, by contrast, can one talk about a visual sound? (Nancy 2007, 3)

Acknowledgements

Frank Baldé (software designer at STEIM, Amsterdam), Florian Goettke (visual artist, Amsterdam), Byungjun Kwon (sound artist and electronic engineer, Seoul), Marije Baalman (electronic engineer, Amsterdam), Sukandar Kartadinata (sound artist and electronic engineer, Berlin), Chi-ha-ucciso-Il-Conte? (product designer, Amsterdam), shirling & neueweise (proofreading, Berlin), Florencia Lamarca (dancer, Berlin), Roy Carroll (sound artist and audio recording, Berlin), Oscar Loeser (video artist and video recording, Berlin), Stockholm University of the Arts, Sten Sandell (pianist and supervisor of the author’s PhD, Stockholm), Rolf Hughes (prose poet and supervisor of the author’s PhD project, Stockholm), Camilla Damkjaer (handstand artist and artistic research manager, Stockholm), Sven Till (Artistic Director at fabrik, Potsdam), Susanne Martin (dancer, Berlin), Janina Janke (stage designer, Berlin), Joachim Liebe (photographer, Potsdam).

Bibliography

Aristotle. “Book II: Chapter 8.” In De Anima: Books II and III (with passages from Book I). Edited by J.L. Ackrill and Lindsay Judson. Trans. D.W. Hamlyn. Oxford: Clarendon/Oxford University Press, 2002, pp. 29–34.

Åse, Tone. “The Voice and the Machine — And the Voice in the Machine — Now You See Me, Now You Don't.” Research Exposition on the Research Catalogue 2014. Posted 19 November 2014. http://www.researchcatalogue.net/view/108003/108004 [Last accessed 22 October 2016]

Dykstra-Erickson, Elizabeth and Jonathan Arnowitz. “Michel Waisvisz: The Man and The Hands.” Interactions — HCI & Higher Education 12/5 (September-October 2005), pp. 63–67. Available online at http://steim.org/media/papers/Michel Waisvisz The man and the Hands — E. Dykstra-Erickson and J. Arnowitz.pdf [Last accessed 19 October 2016]

Gadamer, Hans-Georg. Truth and Method. Second edition. Trans. revised by Joel Weinsheimer and Donald G. Marshall. London: Continuum, 2004.

Nancy, Jean-Luc. Listening. Trans. Charlotte Mandell. New York: Fordham University Press, 2007.

Nowitz, Alex. “Voice and Live-Electronics using Remotes as Gestural Controllers.” eContact! 10.4 — Temps réel, improvisation et interactivité en électroacoustique / Live Electronics, Improvisation and Interactivity in Electroacoustics (October 2008). https://econtact.ca/10_4/nowitz_voicelive.html

_____. “The Strophonion — Instrument Development (2010–2011).” STEIM Project Blog. 2011. Posted 2 January 2012. http://steim.org/projectblog/2012/01/02/alex-nowitz-the-Strophonion-instrument-development-2010-2011 [Last accessed 4 October 2016]

_____. A Few Euphemisms (2012) for voice, strophonion, sound objects, flute, violin, violoncello, piano and conductor. YouTube video “Nowitz ‘A Few Euphemisms’” (14:24) posted by “bvnmpotsdam” on 13 August 2012. Performed by Ensemble Curious Chamber Players and Alex Nowitz at Intersonanzen 2012 at the Friedenssaal in Potsdam (Germany). Conducted by Rei Munakata. http://youtu.be/gACD-b4z1FU [Last accessed 7 October 2016]

_____. “Über das Erweitern und Extrahieren der Stimme. Ein Versuch zur Ergründung des Wesens der Kunststimme im Kontext zeitgenössischer Vokalperformance.” Signalraum für Klang und Kunst, 2014. Posted on 20 February 2014. http://www.signalraum.de/sig/nowitz.html [Last accessed 22 October 2016]

_____. Eine Raumvermessung (2015). Vimeo video “EINE RAUMVERMESSUNG by Alex Nowitz” (10:24) posted by Alex Nowitz on 18 August 2016. http://vimeo.com/179308709 [Last accessed 22 October 2016]

_____. Panache (2015). Vimeo video “PANACHE by Alex Nowitz” (1:34) posted by Alex Nowitz on 31 May 2016. http://vimeo.com/168750034 [Last accessed 22 October 2016]

_____. Playing with Panache (2015). Vimeo video “PLAYING WITH PANACHE by Alex Nowitz” (2:02) posted by Alex Nowitz on 16 October 2016. http://vimeo.com/187540317 [Last accessed 22 October 2016]

_____. Untitled (2016) for voice, strophonion and one chair to be ignored. Vimeo video “UNTITLED by Alex Nowitz” (3:29) posted by Alex Nowitz on 16 October 2016. http://vimeo.com/187541243 [Last accessed 22 October 2016]

Schaeffer, Pierre. Traité des objets musicaux. Paris: Éditions du Seuil, 1966.

Schubert, Alexander. “Die Technik der Bewegung: Gesten, Sensorik und virtuelle Visualisierung.” Positionen 91 (May 2012) “Film Video Sensorik,” pp. 22–25.

STEIM. “What’s Steim?” http://steim.org/what-is-steim [Last accessed 7 October 2016]

Torre, Giuseppe, Kristina Andersen and Frank Baldé. “The Hands: Making of a digital musical instrument.” Computer Music Journal 40/2 (Summer 2016) “The Metamorphoses of a Pioneering Controller,” pp. 22–34.

Verstraete, Pieter. “Vocal Extensions: Disembodied voices in contemporary music theatre and performance.” In Public Sound #2: Vocal Extensions. [Vinyl limited edition.] Rotterdam: De Player, 2011.

Waisvisz, Michel. “The Hands.” 2006. http://www.crackle.org/TheHands.htm [Last accessed 7 October 2016]

Weber-Lucks, Theda. “Körperstimmen: Vokale Performancekunst als neue musikalische Gattung.” Doctoral thesis, Technische Universität Berlin, 2008. Available online at http://dx.doi.org/10.14279/depositonce-2011 [Last accessed 17 October 2016]

Wikipedia contributors. “Michel Waisvisz.” Wikipedia: The Free Encyclopedia. Wikimedia Foundation Inc. http://en.wikipedia.org/wiki/Michel_Waisvisz [Last accessed 7 October 2016]

Wikipedia contributors. “STEIM.” Wikipedia: The Free Encyclopedia. Wikimedia Foundation Inc. http://en.wikipedia.org/wiki/STEIM [Last accessed 7 October 2016]

Young, Miriama. Singing the Body Electric: The human voice and sound technology. New York: Routledge, 2015.
