Turning the Tables

The audience, the engineer and the virtual string orchestra

Project Goals

Audience participation in musical performance is an area that has enormous creative potential for expanding the range of concert hall musical experience and attracting a new group of listeners. However, it remains underexplored in the Classical music world. While there are significant precedents for the use of digital tools, either to gather information and feedback from audience members or to involve the audience indirectly in music making, we believe that direct audience participation in shaping musical material is essentially an open frontier (Oh and Wang 2011; Rasamimanana et al. 2012).

Many of the issues surrounding audience participation have been addressed in research on the laptop orchestra. This line of work is now evolving toward an Internet of Things (IoT) or Bring Your Own Anything (BYOA) model. With a few outstanding exceptions, however, audience participation is usually excluded from digital ensemble performances (Bartolomeo 2014; McAdams et al. 2004).

Preserving and extending traditional human interaction with sounding objects — musical instruments or otherwise — is the underlying motivation for our work in audience participation. We identified three main goals for our audience outreach and interaction project:

- Create a user interface that is flexible, nimble and intuitive;

- Audience members should be able to grasp immediately — and without training — the outcome of their control input;

- The experience is scalable to multiple users and input mappings (Lee and Freeman 2013).

What is an intuitive user interface? Most audio hardware and software consists of user interfaces that allow manipulation of parameters to yield a desired outcome. Knobs, faders and labels, while precise and efficient for audio experts, are far from ideal musical interfaces, since they typically require some degree of background in audio production to understand. In designing our audio tools and their associated tablet controllers, we tried to avoid “cold” numerical input parameters in favour of intuitive, immediately graspable visual representations. In addition, we have matched our digital signal processing choices to specific repertoire in the hopes of generating outcomes that, as it were, cannot go wrong — even a “bad” performance by an audience member should sound pretty good.

The way that people relate to music has been profoundly impacted by the Internet and digital media in general. Easy digital access to musical performances and Classical records presents a dizzying array of possibilities, and listeners are unprecedentedly restless and distracted. Accordingly, traditional concert hall experiences may be too passive for digitally connected audiences. We believe that one antidote is to provide an interactive experience in the concert hall.

In addition, we strongly believe that the use of live electronics in a Classical musical context is just plain cool. It has been a thriving creative-research endeavour since the dawn of computer music in the 1960s, yet very few people outside the field of music technology associate digital media with Classical music. We hope to raise public awareness of this rich area of musical activity.

Finally, the project has a curatorial component, which involves reimagining and re-contextualizing 20th-century Classical repertoire. Our signal processing and control surfaces were designed with Bartók, Prokofiev and Stravinsky in mind.

Mobile Apps

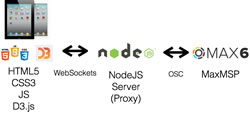

The project required an agile, scalable and reliable way to connect the tablets to the sound processing engine (Cycling ’74 Max). After considering alternatives such as the c74 mobile app, Lemur hardware and mobile apps, we decided to develop our own mobile web apps using open web technologies: HTML5, JavaScript and CSS3. One of the main advantages of JavaScript web apps is the ability to scale to many users without requiring them to pre-install a native app from an app store, which lets participants use their own devices. There is also no cost associated with installing an app. Figure 1 shows the architecture of our solution.

The next challenge was connecting multiple tablets reliably to the sound processing engine. We could have connected the devices directly to Max via WiFi over UDP (User Datagram Protocol), but we quickly found that network issues arose more often than expected on either end of the connection between Max and the tablets. Instead, we developed a lightweight application running a NodeJS web server with support for Web Sockets. The tablets connect to the NodeJS server over WiFi to load the JavaScript web apps, then use Web Sockets to send OSC (Open Sound Control) messages to the server. Several measures allow tablets to disconnect gracefully and reconnect automatically to the NodeJS server.
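To make the message format concrete, here is a minimal sketch of how a tablet-side script might pack a control value into a binary message following the OSC 1.0 specification (Wright 2002). It handles float arguments only. The address `/tablet/1/x` and the function names are hypothetical, and the article does not say whether the project sent OSC in binary or in some other encoding over the Web Socket.

```javascript
// OSC strings are ASCII, null-terminated and zero-padded to a multiple of 4 bytes.
function oscString(text) {
  const paddedLength = (Math.floor(text.length / 4) + 1) * 4;
  const bytes = new Uint8Array(paddedLength); // starts zero-filled
  for (let i = 0; i < text.length; i++) {
    bytes[i] = text.charCodeAt(i) & 0x7f;
  }
  return bytes;
}

// Build address + type tag string + big-endian float32 arguments.
function encodeOscMessage(address, floats) {
  const addressBytes = oscString(address);
  const typeTagBytes = oscString(',' + 'f'.repeat(floats.length));
  const out = new Uint8Array(
    addressBytes.length + typeTagBytes.length + 4 * floats.length
  );
  out.set(addressBytes, 0);
  out.set(typeTagBytes, addressBytes.length);

  const view = new DataView(out.buffer);
  let offset = addressBytes.length + typeTagBytes.length;
  for (const value of floats) {
    view.setFloat32(offset, value, false); // false = big-endian, as OSC requires
    offset += 4;
  }
  return out;
}

// Hypothetical example: tablet 1 reports a normalized x position of 0.5.
const packet = encodeOscMessage('/tablet/1/x', [0.5]);
```

A typed array like `packet` can be passed directly to a browser `WebSocket` object's `send()` method, which transmits it as a binary frame.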

The NodeJS server acts as a two-way channel between Max and the tablets. It multiplexes incoming OSC messages from all the tablets onto a single connection to Max and broadcasts OSC messages from Max to any tablets subscribed to that class of message. This makes it possible to send messages from Max to a single tablet or to several. Careful integration of NodeJS with Max allows either side to fail and then reconnect gracefully and automatically, for example after a Max crash (Wright 2002).
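The routing role described here can be sketched as a small in-memory hub. This is an illustration under assumptions rather than the project's code: the class `OscHub`, its method names, the `/tablet/<id>` address prefix and the idea of matching subscriptions by address prefix are all hypothetical. Network transport is replaced by plain callback functions so the sketch stays self-contained.

```javascript
class OscHub {
  constructor(sendToMax) {
    this.sendToMax = sendToMax;     // the single outgoing channel to Max
    this.tablets = new Map();       // tablet id -> send function
    this.subscriptions = new Map(); // address prefix -> Set of tablet ids
  }

  addTablet(id, send) {
    this.tablets.set(id, send);
  }

  // Called when a tablet disconnects; it can re-register later under the same id.
  removeTablet(id) {
    this.tablets.delete(id);
    for (const ids of this.subscriptions.values()) ids.delete(id);
  }

  subscribe(id, prefix) {
    if (!this.subscriptions.has(prefix)) this.subscriptions.set(prefix, new Set());
    this.subscriptions.get(prefix).add(id);
  }

  // Tablet -> Max: tag the message with its source and funnel it into one channel.
  fromTablet(id, address, args) {
    this.sendToMax({ address: `/tablet/${id}${address}`, args });
  }

  // Max -> tablets: deliver once to every tablet subscribed to a matching prefix.
  fromMax(address, args) {
    const recipients = new Set();
    for (const [prefix, ids] of this.subscriptions) {
      if (address.startsWith(prefix)) ids.forEach((id) => recipients.add(id));
    }
    let delivered = 0;
    for (const id of recipients) {
      const send = this.tablets.get(id);
      if (send) {
        send({ address, args });
        delivered++;
      }
    }
    return delivered;
  }
}

// Usage with two simulated tablets.
const toMax = [];
const inboxA = [];
const inboxB = [];
const hub = new OscHub((message) => toMax.push(message));
hub.addTablet('a', (message) => inboxA.push(message));
hub.addTablet('b', (message) => inboxB.push(message));
hub.subscribe('a', '/granulator');
hub.subscribe('b', '/freezer');
hub.fromTablet('a', '/granulator/pitch', [3]);
const deliveredCount = hub.fromMax('/granulator/state', [1]);
```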

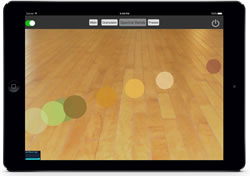

The user interface for the mobile apps was designed around intuitive controls. We implemented a physics engine based on D3.js, the library created by Mike Bostock, to drive multi-touch interactions governed by elastic forces. Other controls are simple dials, knobs and control objects with human-readable labels such as “orchestral” and “solo” (Bostock and Heer 2009).
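As an illustration of what an elastic force means for an on-screen control, the sketch below advances a one-dimensional damped spring that pulls a released control point back toward its anchor. It is not the D3.js API and not the project's engine. The function name and the stiffness, damping and time-step values are assumptions chosen only to give a stable, visibly springy motion.

```javascript
// One simulation step for a unit-mass point on a damped spring.
function springStep(state, anchor, { stiffness = 40, damping = 6, dt = 1 / 60 } = {}) {
  // Hooke's law plus velocity-proportional damping.
  const force = -stiffness * (state.position - anchor) - damping * state.velocity;
  // Semi-implicit Euler: update velocity first, then position with the new velocity.
  const velocity = state.velocity + force * dt;
  const position = state.position + velocity * dt;
  return { position, velocity };
}

// A control released one unit away from its anchor settles back over time.
const afterOneFrame = springStep({ position: 1, velocity: 0 }, 0);
let point = { position: 1, velocity: 0 };
for (let frame = 0; frame < 600; frame++) {
  point = springStep(point, 0); // 600 frames at 60 fps = 10 seconds
}
```

In a touch interface, the finger position would override `state.position` while the control is held, and the spring would take over on release.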

We decided to broadcast the screen of the iPads during the performance to a large screen visible to the entire audience. This was a critical part of the experience! As the tablets exchanged hands among the audience members, broadcasting their interactions to the wider group generated a strong feedback loop. Audience members thus felt a greater level of agency and interaction compared to the previous experience. During one of the early exploratory performances we gave, we noticed that by the 11th and 12th iterations, the audience as a collective had evolved from a simple behaviour on the front end to specific patterns and gestures learned during the performance.

Given the flexibility of the user interface, each performance becomes unique to that audience and each audience member can find their “fingerprint” in the outcome of the performance.

Sound Processing

DSP techniques were chosen to produce powerful sonic transformations that clearly derive — even to an untrained ear — from the live source. In designing the Max patches and their associated tablet interfaces, we tried to hide as much complexity from the user as possible, while providing a reliably exciting musical experience.

The Granulator uses Dan Trueman’s munger~ object as its engine. Two munger~ objects are combined to produce about 150 voices, giving rise to mass orchestral effects (Bukvic et al. 2007).

The interface limits the user in a variety of ways. The idea is to ensure that even a “terrible” performance by an audience member will still result in a decent sonic result. Therefore, we limit the number of user-accessible parameters and place careful boundaries on those parameters to keep the result within a fairly predictable range of possible outcomes.

We programmed the granulators’ pitch shift parameters to correspond to diatonic pitch levels, but provided a limited random pitch variation parameter for the adventurous (which we affectionately call the “Penderecki slider”). The live signal may contain harmonically complex material, but forcing transposition to diatonic relationships provides a certain degree of stability to the sonic result, even when combined with random pitch variation. It also reinforces the sense that the sound mass is “orchestral”, since the transposition levels force the granulation streams into familiar registral relationships.
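The mapping described above can be sketched as follows: a slider position is clamped, snapped to a diatonic scale degree, converted to semitones, and then given a small bounded random detune. The major scale, the two-octave range, the half-semitone detune cap and all function names are assumptions for illustration. The article does not specify the scale, the ranges or the internal parameter format of the granulator.

```javascript
const MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]; // semitone offsets (scale choice is assumed)

function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Signed diatonic steps from the source pitch: 0 = unison, 7 = octave up, -7 = octave down.
function diatonicSemitones(degree) {
  const octave = Math.floor(degree / 7);
  const step = ((degree % 7) + 7) % 7; // wrap negative degrees into 0..6
  return 12 * octave + MAJOR_SCALE[step];
}

// Slider in [0, 1] -> whole scale degree in [-maxDegree, +maxDegree].
function sliderToDegree(slider, maxDegree = 14) {
  return Math.round((clamp(slider, 0, 1) * 2 - 1) * maxDegree);
}

// randomness in [0, 1] scales a detune capped at maxDetune semitones.
// The random source is injectable so the behaviour can be checked deterministically.
function transpositionRatio(slider, randomness, random = Math.random, maxDetune = 0.5) {
  const base = diatonicSemitones(sliderToDegree(slider));
  const spread = clamp(randomness, 0, 1) * maxDetune;
  const detune = (random() * 2 - 1) * spread;
  return Math.pow(2, (base + detune) / 12); // equal-tempered frequency ratio
}

const unison = transpositionRatio(0.5, 0);   // centre of slider, no randomness
const twoOctavesUp = transpositionRatio(1, 0); // top of slider, no randomness
```

Because out-of-range input is clamped and the detune is capped, no combination of gestures can push the transposition outside the range the designer has chosen.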

In order to keep the sound rich and varied, even when the user stumbles or loses attention, we derived a layer of constant variation from real-time spectral analysis of the live signal.

The Freezer is a set of four phase vocoders that can grab a short segment of sound and then scrub the phase vocoder frames so as to produce fairly organic sounding drones. This allows the user to produce sustained four-note chords (or more complex textures, depending on what is sampled) by grabbing and freezing the violinist’s live material. We tuned the relationship of sample size to scrub rate to get the most naturalistic result, and to avoid familiar artifacts of time-domain phase vocoder processing.

The drones can be made to pulse lightly by tapping the screen at the desired pulse rate. Here again, we made a special effort to minimize the artificiality of the pulsing itself. The result is intended to sound as if it were produced by bowing, not by amplitude modulation (AM).
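One simple way to turn screen taps into a pulse rate is to average the intervals between the most recent taps. The sketch below shows only that estimation step. The function name, the four-tap window and the use of millisecond timestamps are assumptions, and the shaping of the pulse itself, which the authors tuned to resemble bowing, is not modelled here.

```javascript
// Estimate a pulse rate in Hz from tap timestamps given in milliseconds.
// Returns null until there are at least two usable taps.
function pulseRateFromTaps(tapTimesMs, maxTaps = 4) {
  const recent = tapTimesMs.slice(-maxTaps); // only the latest taps count
  if (recent.length < 2) return null;
  const span = recent[recent.length - 1] - recent[0];
  if (span <= 0) return null;
  const meanIntervalMs = span / (recent.length - 1);
  return 1000 / meanIntervalMs;
}

// Taps every half second give two pulses per second.
const rate = pulseRateFromTaps([0, 500, 1000, 1500]);
```

Limiting the window to the latest few taps lets the estimate follow the user when they speed up or slow down.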

The patch called Spectral Bands uses real-time FFT analysis-resynthesis to manipulate eight groups of frequency bands with both frequency transposition and spatial displacement. The user manipulates the disposition of eight circles in a simulated three-dimensional space: movement to the left transposes a band down and movement to the right transposes it up; movement toward the bottom brings the band “closer” and movement toward the top moves it “away”.
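The position-to-parameter mapping for one circle might look like the sketch below. Coordinates are assumed to be normalized to 0..1 with the origin at the top left, as is usual for touch screens. The one-octave transposition range, the reduction of distance to a simple gain and the function name are illustrative assumptions. The actual patch may well use other ranges and a richer spatial model.

```javascript
// Map a circle's normalized screen position to band parameters.
function circleToBandParams(x, y, { maxSemitones = 12, minGain = 0.1 } = {}) {
  const cx = Math.min(1, Math.max(0, x));
  const cy = Math.min(1, Math.max(0, y));
  // Horizontal axis: left edge = maximum downward shift, right edge = maximum upward shift.
  const semitones = (cx * 2 - 1) * maxSemitones;
  const ratio = Math.pow(2, semitones / 12);
  // Vertical axis: bottom of the screen (cy = 1) is "closer", modelled here as louder.
  const gain = minGain + (1 - minGain) * cy;
  return { semitones, ratio, gain };
}

const centreBottom = circleToBandParams(0.5, 1); // untransposed and close
const rightTop = circleToBandParams(1, 0);       // an octave up and far away
```

Eight such circles, one per group of frequency bands, would each yield their own transposition ratio and gain.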

The Spectral Bands interface lets the user “reach into” the live sound and change its frequency distribution, while mapping those spectral transformations onto a virtual space for maximum differentiation of the changing spectral components.

At the same time a new accompaniment is produced, since the spectral bands tend to break into flute- and other woodwind-like textures when pushed to extremes of transposition.

Aesthetics and Outreach

Our intention is to appeal specifically to Classical music audiences. This presents a dilemma: some less recent music may sound alien in the context of live electronics. Contemporary experimental music, on the other hand, although well suited to the æsthetics of digital signal processing, presents special challenges to even the most knowledgeable listener. Therefore, we chose a middle path: music by major figures from the first half of the 20th century, such as Bartók, Prokofiev and Stravinsky. Such music can still be perceived as “modern sounding” to some Classical music listeners and is, in our opinion, better suited to a live electronics treatment than, say, Mozart or Bach. [Note: This point is, of course, arguable. See, for example, Recomposed by Max Richter: Vivaldi – The Four Seasons, published by Deutsche Grammophon.]

Therefore, we are neither creating “alienated” versions of beloved classics, nor are we confronting audiences with the inherent difficulty of cutting-edge contemporary music. Instead, we root our processing and control surfaces in a modernist sound world whose harmonic and melodic features have long since been assimilated into film music and the ongoing neo-Romantic repertoire. These sounds are, in other words, both new and familiar at the same time. The addition of live electronics will not clash with or distort the live source, and the listener will not be distracted by the juxtaposition.

Bibliography

Bartolomeo, Mark. “Internet of Things: Science Fiction or Business Fact?” Harvard Business Review (2014). Available online at https://hbr.org/resources/pdfs/comm/verizon/18980_HBR_Verizon_IoT_Nov_14.pdf [Last accessed 3 April 2016]

Bostock, Michael and Jeffrey Heer. “Protovis: A graphical toolkit for visualization.” IEEE Transactions on Visualization and Computer Graphics 15/6 (November 2009), pp. 1121–1128.

Bukvic, Ivica Ico, Ji-Sun Kim, Dan Trueman and Thomas Grill. “munger1~: Towards a cross-platform Swiss Army Knife of real-time granular synthesis.” ICMC 2007: “Immersed Music”. Proceedings of the 33rd International Computer Music Conference (Copenhagen, Denmark, 27–31 August 2007).

Lee, Sang Won and Jason Freeman. “echobo: A mobile music instrument designed for audience to play.” NIME 2013. Proceedings of the 13th International Conference on New Interfaces for Musical Expression (KAIST [Korea Advanced Institute of Science and Technology], Daejeon and Seoul, South Korea, 27–30 May 2013). Available online at http://nime.org/proceedings/2013/nime2013_291.pdf [Last accessed 3 April 2016]

McAdams, Stephen, Bradley W. Vines, Sandrine Vieillard, Bennett K. Smith and Roger Reynolds. “Influences of Large-Scale Form on Continuous Ratings in Response to a Contemporary Piece in a Live Concert Setting.” Music Perception 22 (Winter 2004), pp. 297–350.

Oh, Jieun and Ge Wang. “Audience-Participation Techniques Based on Social Mobile Computing.” ICMC 2011: “innovation : interaction : imagination”. Proceedings of the 37th International Computer Music Conference (Huddersfield, UK: CeReNeM — Centre for Research in New Music at the University of Huddersfield, 31 July – 5 August 2011).

Rasamimanana, Nicolas, Frédéric Bevilacqua, Julien Bloit, Norbert Schnell, Emmanuel Fléty, Andrea Cera, Uros Petrevski and Jean-Louis Frechin. “The Urban Musical Game: Using sport balls as musical interfaces.” CHI 2012. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, pp. 1027–1030, (Austin TX: University of Texas, 5–10 May 2012).

Wright, Matt. “Open Sound Control 1.0 Specification.” 2002. http://opensoundcontrol.org/spec-1_0
