Performing the Listener

Utilizing auditory distortion products in the compositional process

In comparison to research into acoustics, evidence of the application of psychoacoustic studies within music composition is relatively rare. Furthermore, the texts within this modest output hardly begin to explain the application of such material in a compositional setting and do not attempt to address the scope of all distortion products across a variety of contexts within electronic music. Investigations into bandwidth phenomena (which primarily include monaural and binaural beating, and consonance and dissonance studies) have resulted in a sizeable amount of research published by psychoacousticians and psychologists, yet these studies are carried out in the context of gaining a further understanding of sound perception rather than the musical application of sonic materials.

Culminating in discussions of some of the author’s own compositions that directly consider the listener’s ears as instruments in the works’ realization, we seek to form a theoretical foundation upon which further creative practices can be based. While possible routes of exploration for composers are offered here, they are by no means considered to be definitive modes of practice. Furthermore, the author’s proposition of these methodologies is in no way a denial of the effectiveness of pre-existing approaches to electroacoustic composition.

Electroacoustic Music: The Ideal Platform?

A rapid growth of digital technology in recent years has facilitated a much greater level of research output in the field of psychoacoustics than was previously the case. Consequently, composers using digital audio tools in their work now have an opportunity to engage with their listener’s ears more than ever before. A relatively small number of composers have begun directly considering the various applications for the listener’s ears as instruments in their work. Christopher Haworth postulates that:

Perhaps moreso than in any other discipline, it is the artist who creates electronic music that must grapple with the science of perception in order to realise his work. (Haworth 2011, 342)

This highlights a deeper connection between electronic music and psychoacoustics research, as it references the advantages for composers of understanding perception-based studies in their own creative work.

The listening system is theoretically simple in terms of its structural behaviour. The ear’s physical construction results in it behaving as a three-stage energy converter, primarily acting in a “cause and effect” manner. As the behaviour of the ear is somewhat predictable in terms of reaction to acoustic stimuli, the level of control over sonic material that is evident in electronic music, due to its relationship with the computer, makes it a well-suited platform in which the listener’s ears can be employed as a musical instrument. According to John Chowning:

computers require us to manage detail to accomplish even the most basic steps. It is in the detail that we find control of the sonic, theoretical and creative forms. (Chowning 2008, 1)

Chowning’s observation underlines the level of accuracy that digital media offers the creator. While acoustic instruments can prove quite successful as source stimuli in music that directly considers psychoacoustic phenomena, the precision of digital instruments is second to none. It is by no means a coincidence that the influx of digital sound technology has coincided with increased levels of research in acoustics and psychoacoustics. Albert Bregman has stated that just a “trickle of studies” existed in auditory research prior to the 1970s (Bregman 1999, xi). The primary explanation for this is that the technology required to conduct such experimentation had not been available. Electroacoustic practices would seem to be particularly well suited for such exploration to take place within a musical paradigm, as a result of the heavy reliance on digital tools in their creation in the modern age.

Auditory Distortion Products

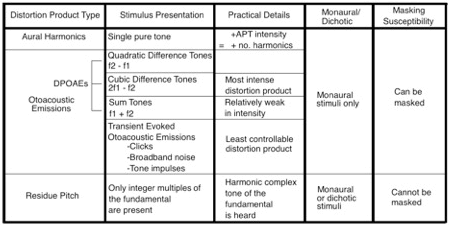

There are a number of methods in which acoustic energy (input) can provoke a non-linear acoustic response (output) from the inner ear (Fig. 1). As Harvey and Donald White have discussed:

any nonlinearity lies within the neural system of the cochlea itself, and… [is] related in a complicated way to the frequency distribution of vibrational energy in or around the hairlike cilia or neurons. (White and White 2014, 188)

The intensity and frequency levels at which the ear begins to behave non-linearly, and hearing moves from linear to non-linear, are as follows (White and White 2014):

- 30 dB / 350 Hz

- 50 dB / 1000 Hz

- 55 dB / 5000 Hz

Auditory distortion products are acoustic energies that are output from the inner ear at a lower level than the input stimulus, which can be heard by the listener. The frequencies being output by the inner ear are not present in the input signal. These phenomena allow for composers to directly consider their listeners’ ears as instruments that create their own frequency content independent of the external, free-field acoustic environment.

Aural Harmonics

A single pure tone in one ear at a “high enough” frequency level can produce one of the simplest auditory distortion products, the aural harmonic (White and White 2014, 220). With this phenomenon, frequency components that are integer multiples of the stimulus tone are emitted from the ear, with intensity levels decreasing as frequency increases (Ibid.).

White and White have demonstrated that a fundamental frequency of 250 Hz produces aural harmonics at multiples of the fundamental that have decreasing intensity: 500 Hz at ca. 91 dB, 750 Hz at ca. 86 dB, 1000 Hz at ca. 83 dB, 1250 Hz at ca. 81 dB, 1500 Hz at ca. 78 dB… (Ibid.).

In order to demonstrate, Audio 1 contains a 300 Hz sine wave whose intensity increases from zero and peaks in the middle of the recording before it returns to zero. While listening [best done using headphones], it is important to direct one’s attention to any additional harmonic frequency components that can be heard as the intensity increases, and their disappearance as it lessens.

It should be noted that aural harmonics are susceptible to masking and should be employed with some consideration to frequencies in close proximity. Furthermore, a correlation is evident between an increase in the intensity level of the stimulus tone and the number of aural harmonics (Ibid.). There has been much debate concerning the existing research and proposals for the construction of an effective model for aural harmonics, and for the relationship between the intensity of the stimulus tone and the intensity/number of aural harmonics (Schubert 1978, 513–514; Hartmann 2005, 55).

Due to the low intensity level of aural harmonics, combined with their susceptibility to masking, Murray Campbell and Clive Greated believe that aural harmonics “are at such a low level in comparison with the original sound that they are of no musical significance” (Campbell and Greated 1987, 64). Despite the subjective nature of this statement, it does highlight the reduced effectiveness of such a distortion product within composition in comparison to others. Furthermore, the required 95 dB as referenced in White and White’s research may prove too loud for some listeners. Prolonged and regular exposure to such intensity can result in irreparable hearing damage, so such levels should be handled carefully.

Otoacoustic Emissions

Fluctuations in the outer hair cells within the cochlea of the inner ear cause otoacoustic emissions (OAEs). This is acoustic energy that travels back out of the ear and can be detected by an in-ear microphone as well as perceived by the listener (Kemp 2002, 223).

There are two main types that have been discerned: distortion product otoacoustic emissions and transient-evoked otoacoustic emissions.

DPOAE — Distortion Product Otoacoustic Emissions

Quadratic difference tones (QDTs) and cubic difference tones (CDTs) are the two most accessible distortion product otoacoustic emissions (DPOAEs) for composers. DPOAEs are also often referred to as “combination tones” as they rely on the existence of two or more acoustic primary tones (APTs) reaching the ear simultaneously (Campbell and Greated 1987, 64). The most basic DPOAE is the QDT, which is the result of the difference between two distinct frequencies:

f2-f1

In other words, two stimulus tones entering the ear at 1000 Hz (f1) and 1200 Hz (f2) will produce a QDT at 200 Hz, which will have similar sonic properties to those of a square wave, or a low-intensity buzzing (Audio 2).

The simultaneous presentation of 1000 Hz and 1200 Hz stimulus tones can also generate a sum tone (ST) of 2200 Hz (f1+f2). Sum tones are generally quite weak in intensity (particularly lower in intensity in relative terms to other DPOAEs) and, consequently, are not always audible (Gelfand 2010, 220). These stimulus tones force the natural behaviour of the cochlear amplifier — “without which the auditory system is effectively deaf” (Ashmore 2008, 174) — to be “rendered audible to the listener” (Haworth 2012, 61).
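The arithmetic behind the 1000 Hz and 1200 Hz example can be sketched in a few lines of Python. This is only an illustration of the two formulas quoted in the text; the function names are hypothetical, and the code says nothing about whether a given listener will actually hear the resulting tones.

```python
def quadratic_difference_tone(f1: float, f2: float) -> float:
    """Return the QDT frequency in Hz, f2 - f1, where f2 is the higher tone."""
    return f2 - f1


def sum_tone(f1: float, f2: float) -> float:
    """Return the sum tone frequency in Hz, f1 + f2."""
    return f1 + f2


# Stimulus tones from the example in the text.
f1, f2 = 1000.0, 1200.0
print(quadratic_difference_tone(f1, f2))  # 200.0
print(sum_tone(f1, f2))                   # 2200.0
```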

David Kemp has expressed that “more powerful excitation is practical with continuous tones” (Kemp 2002, 226). From this point alone, it is clear that prolonged sine waves are the ideal (and most reliable) source of stimulation for such a phenomenon in electroacoustic music. Furthermore, the most powerful DPOAEs are generated when the two stimulus tones are within half an octave of each other and when f1 has an intensity that is greater than f2 by 10−15 dB (Wilbur Hall 2000, 22). Alex Chechile proposes that the approximate range of 84−95 dB sound pressure level (SPL) will provide the most audible distortion products in the ears of the listener in the context of a free-field loudspeaker environment (Chechile 2015).
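The two conditions cited above (stimulus tones within half an octave of each other, and f1 louder than f2 by 10 to 15 dB) can be checked with a small helper before a pair of tones is committed to a score. The following Python sketch is an assumption-laden illustration: the function names and the decision to treat both bounds as inclusive are hypothetical, and the thresholds are simply those quoted in the text.

```python
import math


def within_half_octave(f1_hz: float, f2_hz: float) -> bool:
    """True if the two tones are no more than half an octave apart."""
    low, high = sorted((f1_hz, f2_hz))
    return math.log2(high / low) <= 0.5


def level_offset_ok(l1_db: float, l2_db: float,
                    min_db: float = 10.0, max_db: float = 15.0) -> bool:
    """True if f1 is louder than f2 by between min_db and max_db (inclusive)."""
    return min_db <= (l1_db - l2_db) <= max_db


# 1000 Hz and 1200 Hz are roughly a quarter of an octave apart.
print(within_half_octave(1000.0, 1200.0))  # True
# A perfect fifth (ratio 3:2) is wider than half an octave.
print(within_half_octave(1000.0, 1500.0))  # False
# f1 at 90 dB and f2 at 78 dB gives a 12 dB offset.
print(level_offset_ok(90.0, 78.0))         # True
```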

The cochlear amplifier is capable of producing OAEs from -10 dB to +30 dB SPL in the ears of individuals with healthy hearing (Ramos, Kristensen and Beck 2013, 33). In relation to the lowest effective SPL of acoustic primary tones, an SPL of at least 60 dB must be provided (Gelfand 2010, 225). A steady-state distortion product can also be created when the stimulus tones are modulating in frequency, as long as the frequency difference between the two tones remains consistent. Distortion products with modulating frequencies can also be generated easily through these methods, as the frequency distance between the APTs over time requires simple consideration. The formulaic nature of provoking QDTs {f2-f1} and CDTs {2f1-f2} allows for simple inclusion of distortion products within composition as only basic calculations are required. Simply put, when the distance in frequency remains constant, modulating APTs produce a static DPOAE (Audio 3), whereas when the frequency distance between APTs varies, a modulating DPOAE is produced (Audio 4).
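The distinction between a static and a modulating QDT can be sketched by tracking f2 - f1 over a sequence of time steps. In the Python illustration below, the frequency values are hypothetical and are not taken from Audio 3 or Audio 4; the helper name `qdt_track` is likewise an assumption.

```python
def qdt_track(f1_track, f2_track):
    """Return the QDT (f2 - f1) at each time step of two frequency tracks."""
    return [f2 - f1 for f1, f2 in zip(f1_track, f2_track)]


# Lower primary tone gliding upward (hypothetical values in Hz).
f1_glide = [1000, 1100, 1200, 1300]

# Case 1: the upper tone moves in parallel, always 200 Hz above f1.
parallel = [f + 200 for f in f1_glide]
print(qdt_track(f1_glide, parallel))   # [200, 200, 200, 200]

# Case 2: the upper tone rises faster, so the distance keeps growing.
diverging = [1200, 1350, 1500, 1650]
print(qdt_track(f1_glide, diverging))  # [200, 250, 300, 350]
```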

Multi-channel loudspeaker arrangements are particularly useful for the demonstration of complex distortion product arrays that can, if applicable, allow the listener to experience a multiplicity of timbral colours within an immersive sphere that can be described as microscopic and macroscopic elements (Chechile 2015, 53). Furthermore, an inverse relationship is evident between QDTs and CDTs regarding the intervallic distances of their APTs.

While DPOAEs are often created in test procedures through pure tones, due to their simplicity and accuracy, that is not to say that all stimuli used in the provocation of DPOAEs must be pure tones. Quadratic difference tones were known as “Tartini Tones” in the mid-1700s, after the violinist Giuseppe Tartini, who was among the first to discover that two tones played simultaneously on the violin could provoke a third tone. [1. Tartini’s contemporary Georg Andreas Sorge may have been the first to have noted this phenomenon.] With regard to complex non-periodic sound sources, stimulus tones can also be generated with simple equalization procedures, wherein a bandwidth of up to approximately 20−30 Hz around a peak centre frequency is amplified. This method is effective, as the peak frequency will not be subject to masking and it can behave as an independent stimulus tone, provided the second stimulus tone is not present within close proximity (30−40 Hz).

TEOAE — Transient-Evoked Otoacoustic Emissions

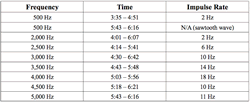

Transient-evoked otoacoustic emissions (TEOAEs) are often referred to as click-evoked otoacoustic emissions (CEOAEs) or delayed otoacoustic emissions (DEOAEs), and can be generated with stimuli of very short duration. These transient sounds, which can be short bursts of broadband noise, clicks or tone impulses (Audio 5), activate an extensive area of the basilar membrane (Ramos, Kristensen and Beck 2013, 33). Kemp has described the nature of TEOAEs as being a cochlear “echo” (Kemp 1991).

Due to the effectiveness of TEOAEs in inducing a response from the listener’s ears, they are used by the medical profession in hearing testing procedures. Most commonly used are click-based stimuli at 80−85 dB SPL (Campbell 1987). It has been found that latency is inversely correlated to frequency: lower frequencies have a slower response than higher frequencies. However, delay times of signals between 500 Hz and 5 kHz sit predominantly in the range of 5−15 ms (Ramos, Kristensen and Beck 2013, 33; Fastl and Zwicker 2007, 44).

While research findings remain somewhat inconclusive in regard to the exact frequency components that can be evoked through click-like stimuli, TEOAE amplitude levels can reach up to just 5−10 dB below the stimulus level (Fastl and Zwicker 2007, 42). Research from Jessica Arrue Ramos, Sinnet Greve Bjerge Kristensen and Douglas Beck has shown that TEOAE frequencies are generally between 1 and 4 kHz. They have also highlighted that TEOAE amplitudes are larger in infants than in adults (Ramos, Kristensen and Beck 2013, 33). These differences in TEOAE behaviour across varying ages have also been addressed by Graeme Yates of the University of Western Australia’s Department of Physiology, who found that responses of up to 6−7 kHz can be experienced in young ears (Kemp 2002, 225). These upper frequency limits were later found to be incorrect, as both TEOAEs and DPOAEs have been recorded as high as 16 kHz through improved testing procedures, which have addressed limitations in pre-existing measurement procedures (Goodman et al. 2009, 1014). Nonetheless, the aforementioned findings in relation to diminished TEOAE sensitivity as age increases remain unchanged.

In relation to the evoking of TEOAEs, research conducted on TEOAEs in the range of 1−5 Hz has found that the signal-to-noise ratio continually decreases beyond a stimulus duration threshold of 300 microseconds (Kaushlendrakumar et al. 2011, 33). Consequently, it could be suggested that more prominent TEOAEs might be produced with longer duration stimuli. TEOAEs are in stark contrast to DPOAEs, in that the resulting frequency content, especially when measured across multiple simultaneous listeners, is almost entirely unforeseeable; however, the effect of bursts of thick, high-frequency spectral content in between clicks and noise bursts, etc., is very much predictable. Sutton’s broad description of the spectral characteristics of TEOAEs describes them as presenting several dominant frequency bands that are separated by weaker and narrower bands of frequency content (Hurley and Musiek 1994, 196). This uncertain nature is only emphasized by the fact that while TEOAE amplitude and frequency values will differ between listeners, they will also vary between the ears of a single listener (Ibid.).

TEOAEs are quite a peculiar auditory distortion product. While QDTs and CDTs present OAEs that are predominantly present while the stimulus is active (some backward masking methods have proven effective), the perception of TEOAEs does not require the simultaneous presence of the stimuli that provoke them, and they can thus be heard unaccompanied. Furthermore, the listener is also very likely to physically feel their ears beating or pulsating in response to these transient stimuli.

Residue Pitch

Residue pitch, also known as the “missing fundamental phenomenon” or “virtual pitch”, is encountered when the listener is presented with a complex harmonic signal in which the fundamental tone is absent. The listener perceives a harmonic fundamental tone (or something very close to it) of the signal despite only being exposed to a selection of its overtones.

This can be simplified by stating that the listener can perceive a tone that is equivalent to the common frequency distance between a presented stack of partials (Fig. 2). To demonstrate this, Audio 6 begins with just a 100 Hz tone, to which a stack of tones is added one at a time, each an integer multiple of the first (i.e. each 100 Hz higher than the previous) until eight tones are present (100 through 800 Hz). Following on from this, each frequency is gradually removed, starting with the lowest tone and rising. The listener will notice no change in the perceived 100 Hz tone other than changes in colour, until the final 800 Hz tone remains unaccompanied. Angelique Sharine and Tomasz Letowski have described residue pitch as being “an indication that our listening system responds to the overall periodicity of the incoming sound wave” (Sharine and Letowski 2009, 583). Stanley Gelfand explains that:

This would occur because the auditory system is responding to the period of the complex periodic tone (5 ms or 0.005 s), which corresponds to the period of 200 Hz (1/0.005s = 200 Hz). (Gelfand 2010, 225)
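As a rough numerical sketch of the periodicity idea, the residue pitch of a stack of harmonically related partials can be approximated by their greatest common divisor. This is a deliberate simplification and not a model of pitch perception: the function name is hypothetical, the partials are assumed to be exact integer frequencies, and the 800, 1000 and 1200 Hz stack is an assumed example chosen only to match the 200 Hz value in Gelfand's quote.

```python
from functools import reduce
from math import gcd


def residue_pitch_hz(partials_hz):
    """Approximate the residue pitch as the GCD of integer partial frequencies."""
    return reduce(gcd, partials_hz)


# Audio 6 as described in the text: eight partials, 100 Hz to 800 Hz,
# removed one at a time starting from the lowest.
stack = list(range(100, 900, 100))
for start in range(len(stack)):
    remaining = stack[start:]
    # Prints 100 at every step until only the 800 Hz tone is left,
    # at which point the "residue" is just that tone (800).
    print(remaining[0], residue_pitch_hz(remaining))

# Assumed stack whose common periodicity is 200 Hz (period of 5 ms).
pitch = residue_pitch_hz([800, 1000, 1200])
print(pitch, 1.0 / pitch)  # 200 0.005
```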

Residue pitch is quite a durable source of frequency content that can be heard with the SPLs of stimulus tones as low as 20 dB; the upper limit for its detection among listeners has been posited by auditory researchers Plomp and Moore as being approximately 1400 Hz (Moore 2012, 224). A clear benefit of this mechanism relates to issues concerning the speed of energy transfer and the positioning of the base and apex in relation to the flow of energy through the inner ear. Roy Patterson, Robert Peters and Robert Milroy have suggested that:

The residue pitch mechanism… enables the listener to extract the low pitch associated with the fundamental of a sound much faster than it would be possible if the information had to be extracted from the fundamental alone. (Patterson, Peters and Milroy 1983, 321)

Furthermore, residue pitch can be created despite multiple lower overtones not being present and, unlike combination tones, it cannot be masked by an incoming acoustic signal, thus making it a more robust compositional device in certain contexts (Kendall, Haworth and Cádiz 2014, 7). This aspect of the phenomenon also results in residue pitches being unable to behave as primary tones in monaural beating techniques and so it could be argued that compositional methods employing this phenomenon have an accessible level of control over frequency content that methods utilizing OAEs cannot offer (Gelfand 2010, 225). The primary tones for this mechanism may also be presented dichotically and remain effective, which Juan Roederer believes indicates that residue pitch is a result of higher-level neural processing (Roederer 2008, 51). In relation to a practical application of this phenomenon, the greater the number of partials present, the greater the intensity of the produced residue pitch. The perception of residue pitch could easily be mistaken for a DPOAE due to its illusory nature, as the perceived tone is not present in the acoustic signal. The perceived tone corresponds to the common mathematical difference between the partials that are present, which gives it a particular resemblance to DPOAE perception.

Gelfand has also outlined that while various theories attempt to explain the exact biomechanical processes taking place in the perception of residue pitch, none can provide a “comprehensive account” of this perceptual behaviour (Gelfand 2010, 225). As can be seen in the existence of a number of place theories, as well as temporal theories and a combined theory of pitch perception, such inconclusive data is both common and to be expected in this area of research. Furthermore, he has addressed pitch ambiguity relating to the pitch-extracting mechanism, citing the comparison of a peak of one cycle in a complex waveform with an inequivalent peak in the next cycle as one example of the uncertainty that can arise (Ibid.). Nevertheless, the primary aspects of residue pitch are much clearer in relation to the essential details of the relationship between the input stimuli and the biomechanical “output”; therefore, its reliability as a compositional tool remains strong.

Using the Ear to Play the Ear

Existing work in the field would suggest that the acoustic stimuli used in the provocation of these phenomena must generally come from loudspeaker or headphone sources; however, the inner ear can also be used to produce the required primary tones. In the right conditions (wherein masking, spectral saturation and insufficient intensity are not factors), the sonic product of these non-linear inner-ear phenomena (created from loudspeaker or headphone primary tones) can be used as stimuli to provoke other psychoacoustic phenomena. Gelfand has described the characteristics of the “stimulus-like nature” of certain DPOAEs by stating that:

The combination tones themselves interact with primary (stimulus) tones as well as with other combination tones to generate beats and higher-order (secondary) combination tones, such as 3f1-2f2 and 4f1-3f2. (Gelfand 2010, 221)

The employment of such an approach in composition could be seen as using the ear to play itself. For example, DPOAEs and residue pitches can be used as primary tones to stimulate binaural beating, as is demonstrated in the following examples.

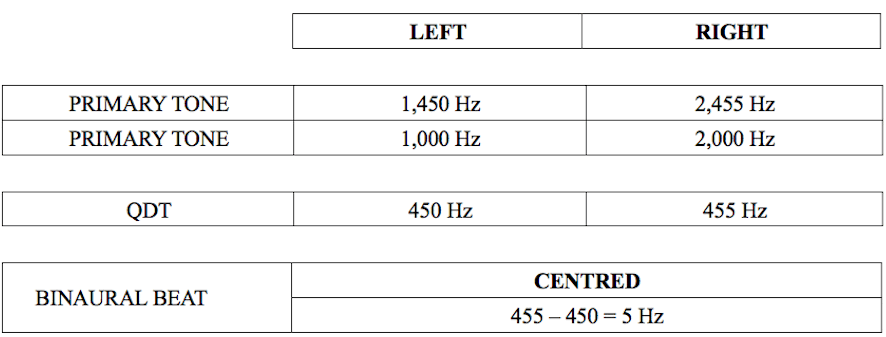

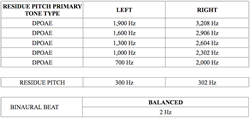

When exposed to two dichotically separated QDTs — 450 Hz (1450-1000 Hz) in the left ear and 455 Hz (2455-2000 Hz) in the right ear — the listener will hear a centred binaural beat of 5 Hz, the mathematical difference between f1 and f2 (Fig. 3, Audio 7).
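The calculation behind this example can be sketched in Python as follows. The function names are hypothetical and the code only reproduces the arithmetic of the text; it makes no claim about how strongly the beat will be perceived.

```python
def qdt(f1_hz: float, f2_hz: float) -> float:
    """Quadratic difference tone of two primary tones, in Hz."""
    return abs(f2_hz - f1_hz)


def binaural_beat(left_hz: float, right_hz: float) -> float:
    """Beat rate produced by slightly different tones in each ear, in Hz."""
    return abs(right_hz - left_hz)


left_qdt = qdt(1000, 1450)   # primary tones presented to the left ear
right_qdt = qdt(2000, 2455)  # primary tones presented to the right ear

print(left_qdt, right_qdt)                 # 450 455
print(binaural_beat(left_qdt, right_qdt))  # 5
```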

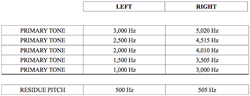

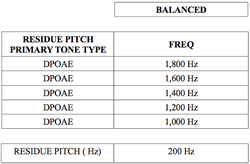

A higher number of stimulus tones are required to create residue pitch; however, the same methodology as in the preceding example can be employed, such that the residue pitches resulting in the left and right ear (f1 and f2, respectively) cause the same 5 Hz binaural beat to appear (Fig. 4, Audio 8).

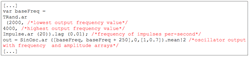

While sine tones were used in the previous examples to create residue pitches, it is also possible to use DPOAEs for their creation (Fig. 5; Audio 9). In contrast, however, the resulting residue pitch will be significantly rougher in texture than the somewhat smooth and clear texture of a residue pitch created by sine tones.

Due to the high number of primary tones required for this method, it is recommended that a random number generator be used in conjunction with an impulse oscillator that outputs two sinusoidal components at any given time, as demonstrated in the SuperCollider code in Figure 6. The individual outputs can then be mixed together. By having control over the set differences in frequency between the two random output tones, the user has control over QDT output. Increasing the frequency value of the impulse oscillator’s output ensures movement in the upper regions of the frequency spectrum, which allows for the acoustic primary tones to become somewhat sonically removed from the sustained QDTs. This method has the advantage of helping reduce the number of sustained tones that are required within the process.
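The frequency-selection logic described above can be approximated in Python. This sketch is not the author's SuperCollider code from Figure 6, which is not reproduced here: it only generates pairs of frequencies (no audio), and the function name, parameter names, frequency range and seed are all assumptions made for illustration.

```python
import random


def random_apt_pairs(count, qdt_hz, low_hz, high_hz, seed=None):
    """Return `count` random (f1, f2) pairs whose difference is always qdt_hz.

    Each pair stands in for one impulse of two sinusoidal components;
    integer frequencies keep the difference exact.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(count):
        f1 = rng.randint(low_hz, high_hz)  # random lower primary tone
        pairs.append((f1, f1 + qdt_hz))    # upper tone fixed at f1 + QDT
    return pairs


# Eight impulses in an assumed upper register, all implying a 100 Hz QDT.
for f1, f2 in random_apt_pairs(8, qdt_hz=100, low_hz=2000, high_hz=6000, seed=1):
    print(f1, f2, f2 - f1)
```

Raising `low_hz` and `high_hz` corresponds to moving the primary tones into the upper regions of the spectrum, while `qdt_hz` fixes the sustained difference tone.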

Residue pitch cannot offer viable primary tones for DPOAEs. A perceptual conflict will occur as a result of both phenomena requiring monophonic stimulation with a substantial number of APTs in each ear. When combined, a complex spectrum with varying distances between partials is presented, thus causing multiple DPOAEs and residue pitches. This approach will therefore significantly reduce both the likelihood of success and the efficiency of methods employing residue pitches as primary tones for the stimulation of DPOAEs.

It should be noted, however, that DPOAEs can be used to create residue pitches that, in turn, will provoke binaural beating (Fig. 7). When employing such methods, the effects of the desired phenomenon will often become diluted and the theoretical attraction may be greater than its practical potential. Therefore, employment of this method should be considered carefully within compositional practice.

Discussions on the Author’s Own Compositional Material

Invisibilia (2014)

One of the author’s earliest works exploring the phenomena discussed here was Invisibilia (2014), for fixed medium (enhanced stereo using two pairs of loudspeakers). Distortion product otoacoustic emissions (DPOAE) resulting from quadratic (QDT) and cubic difference tones (CDT), as well as sum tones (ST), were used as compositional material. Invisibilia presents stimulus signals within simple and complex harmonic structures, and inharmonic structures. The source material includes sine and sawtooth waves, glass, bells, and violin and cello tones. Within the inharmonic material (glass and bell sounds), equalization techniques were used to generate formant regions that created stimulus tones at the peak frequencies, whenever that particular frequency was also present. In terms of the periodic source material (violin and cello), by removing a substantial number of its harmonics and leaving a narrow bandwidth around the fundamental frequency, an auditory product could be created that was more similar in colour to a pure tone, but had slightly more timbral detail. Such a method was also applied in the creation of stimulus tones. These equalization methods facilitated similar effects to those arising through the use of pure tones, while offering a significantly increased degree of variety in relation to timbre.

A technique used often in this piece is the delicate fading out of a frequency before subtly replacing it with a distortion product in the listener’s ears. An example of this method is evident at 1:37−2:23, wherein a 100 Hz sine wave is faded in at 2:05 that slowly masks and eventually replaces the 100 Hz QDT that was present as a result of the 200 Hz and 300 Hz acoustic primary tones (APTs) from 1:50−2:05 (Audio 10). This method of replacing DPOAEs with loudspeaker tones is also frequently used from 3:45 until the end of the piece, in this case using a 101.88 Hz tone, the frequency representing the mathematical difference between a G4 (392 Hz) and B4 (493.88 Hz), the two predominant pitches characterizing the second half of the work. Any low intensity buzz-like material experienced during the performance of this piece is almost certainly being generated within the listener’s ears.

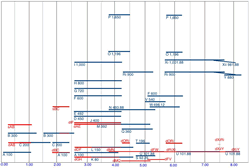

One of the most intriguing aspects of the application of DPOAEs as a compositional device in Invisibilia is that it contains 36 acoustic primary tone (APT) events that are played through the octophonic loudspeaker arrangement. As a result of the application of DPOAEs in this composition, if one were to analyze the spectrogram of Invisibilia, the range of sonic materials experienced by the listener would not be accurately reflected. A calculation of the primary distortion products that are provoked in this piece shows that they add at least 16 further significant auditory products, which are key to the sound world that is created in this work. For example, the coincidence of tones emitted from the loudspeakers (indicated in blue in Fig. 8) produces sum tones “s” and difference tones “d” (both indicated in red): sAB is the sum tone product of A and B (100+300=400 Hz), while dAB is the difference tone product of A and B (300-100=200 Hz).
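The labelling scheme used for Figure 8 lends itself to a simple tabulation. The Python sketch below computes the sum and difference tone for every pair of simultaneous loudspeaker tones, using the A and B values given in the text; the function name and the dictionary layout are assumptions, and the code is not the procedure the author used to count the distortion products.

```python
from itertools import combinations


def combination_tones(tones):
    """Map labels such as 'sAB' and 'dAB' to sum and difference tones in Hz.

    `tones` maps a label (e.g. 'A') to a loudspeaker tone frequency in Hz.
    """
    products = {}
    for (name_a, fa), (name_b, fb) in combinations(tones.items(), 2):
        products["s" + name_a + name_b] = fa + fb       # sum tone
        products["d" + name_a + name_b] = abs(fb - fa)  # difference tone
    return products


print(combination_tones({"A": 100, "B": 300}))  # {'sAB': 400, 'dAB': 200}
```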

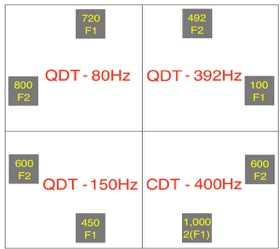

The octophonic loudspeaker arrangement is utilized in the building of a complex DPOAE stack from 2:40−4:18. The loudspeaker arrangement is divided into quadrants, each occupied by a pair of loudspeakers, and each of eight individual APTs is sent to a distinct loudspeaker. Consequently, four DPOAEs appear as the result of the APTs paired in each quadrant (Fig. 9).

As a means of further blurring the lines between distortion products and loudspeaker emissions, from 3:10−4:30 pure tones with frequency values equivalent to those of the DPOAEs in a given quadrant are presented from loudspeakers on the opposing side of the configuration. For example, a 150 Hz pure tone, which matches the DPOAE frequency in the bottom left quarter, is played through the loudspeakers in the upper right quarter, and so forth.

Thus, each audience member will hear in many respects their own version of Invisibilia, as these “phantom” tones will differ in type and intensity from listener to listener. These DPOAEs will sound something similar to a soft buzzing or ringing when present. The listener’s own inner ears will therefore determine the final dimension of the realization of this piece.

Transcape (2015)

The transient-evoked otoacoustic emissions (TEOAEs) that are generated in the listeners’ ears when listening to the author’s 2015 work for stereo headphones, Transcape, become the cornerstones of the work itself. After extensive research in the area, it would seem that Transcape is the first electroacoustic music composition that directly employs TEOAEs as sonic material. These TEOAEs are provoked in the listeners’ inner ears through the use of noise bursts and tone impulses that, at times, sound as though they are reflecting back from their own ears. As the stimulus material is most frequently abrupt and indelicate in nature, and TEOAEs are generally presented with a soft and shimmering quality, Transcape seeks to combine these two significantly contrasting textures as a means of exploring the potential for utilizing TEOAEs in composition as a predominant or primary objective. These TEOAEs allow listeners to hear their own ears, thus assisting in the performance of the piece at moments where the waveform activity of the stimulus is significantly decreased, or absent. TEOAEs can be experienced by listeners right from the beginning of this piece. Many will also physically feel their ears rhythmically pulsating in response to these repetitive stimuli.

As TEOAEs are a phenomenon in which auditory distortion products can be heard for prolonged periods after the stimulus has been presented (rather than simultaneously), an opportunity is opened up for the composer to present listeners with a clear demonstration of their own ears performing. Transcape applies this nature of TEOAEs in a very direct manner as it considers that the duration of exposure to impulsive stimuli correlates to the duration of the TEOAEs (Audio 11). Although the middle section of Transcape contains a 32-second section that has no acoustic signal (Fig. 10), listeners nevertheless continue to perceive sound throughout it. This is due to a temporary threshold shift in their hearing sensitivities resulting from prolonged exposure to the transient material, in this case a duration of 6:15 since the start of the work. This form of auditory fatigue is responsible for any temporary ringing listeners may “hear” at this point. During this section, listeners are invited to pay particular attention to any frequencies that they can hear coming from within their ears. Thus, they are presented with a direct demonstration of the capability of their ears as musical instruments — they will in fact hear their ears continue to respond to the earlier acoustic stimuli, as opposed to hearing virtually nothing, which the output waveform would suggest. The aural experience of this section could be described as the listeners being able to hear their own ears “singing”.

In experimental works such as this, where a minimal level of material is exploited, more conventional understandings of “musicality” are called into question. In terms of the spectral content that is output from the headphones, this work consists primarily of thick broadband content and there is little diversity in that regard. Listeners will, however, experience an exciting level of variation in the rate at which this transient stimulus material is presented, while also hearing their ears respond, with an even greater degree of variation, to said stimuli. Furthermore, the repetitive and somewhat monotonous nature of the stimuli allows them to shift their focus toward the changing distortion products in their inner ears and to increase their awareness of the experience of their ears pulsating during the slower moments in the piece. The ears of the listeners are indeed the instruments in Transcape and it is entirely within their response to the acoustic stimuli that much of the musical variation occurs.

Maeple (2015)

A variety of psychoacoustic phenomena, including residue pitch, spectral masking and binaural beating, are employed as vital compositional devices in the realization of the author’s fixed-medium, octophonic work, Maeple (2015).

In the closing section of Maeple, stacked sawtooth waves at 2000, 2500, 3000, 3500, 4000, 4500 and 5000 Hz are used to generate an intense residue pitch at 500 Hz. As with any combination of APTs, other residue pitches may occur at times; in this section of the piece, however, only the residue pitch of 500 Hz is explored. At the start of the section, a 500 Hz sawtooth wave appears before the aforementioned partials are delicately introduced over it (Fig. 11; Audio 12 at 5:43). The 500 Hz sawtooth wave is then removed from the loudspeaker output, yet the frequency remains clearly audible and increases in intensity as additional stimulus tones are layered. It is likely that listeners will remain unaware of the fact that their own ears are generating the most prominent frequency that they hear. The level of variation in the impulse rates results in a greater sense of movement and turbulence than characterizes earlier parts of the work. Consequently, listeners are expected to be drawn inward by the various impulses while the strength of the 500 Hz residue pitch intensifies. By the time the 500 Hz tone is removed from the loudspeaker output (4:51), they will be entirely unaware of the fact that the 500 Hz tone that they are hearing is no longer coming from the loudspeakers.

Although there is no 500 Hz signal emitted from the loudspeakers between 4:51 and 5:43, a very prominent residue pitch of 500 Hz should nevertheless be perceived by listeners in this section. Furthermore, the stimulus tones are presented with ever-increasing rates of impulses (consequently, the tones often appear to be darting through the listening space) and, combined with rapid panning procedures, this results in a highly immersive and physical listening experience for the audience.
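
The arithmetic behind the 500 Hz residue pitch can be illustrated with a minimal sketch. It assumes, as a deliberate simplification, that the implied fundamental of a harmonic stack can be estimated as the greatest common divisor of its partial frequencies; it considers only the fundamental of each sawtooth wave and is not a model of pitch perception. The function name `residue_pitch_hz` is hypothetical and does not come from the author's own tools.

```python
from functools import reduce
from math import gcd


def residue_pitch_hz(partials_hz):
    """Estimate the implied (missing) fundamental of a harmonic stack.

    Simplifying assumption: the residue pitch is taken to be the greatest
    common divisor of the integer partial frequencies, in Hz.
    """
    return reduce(gcd, partials_hz)


# Stimulus frequencies named in the text for the closing section of Maeple.
partials = [2000, 2500, 3000, 3500, 4000, 4500, 5000]

fundamental = residue_pitch_hz(partials)
# Each stimulus tone expressed as a harmonic number of the implied fundamental.
harmonic_numbers = [f // fundamental for f in partials]

print(fundamental)       # 500
print(harmonic_numbers)  # [4, 5, 6, 7, 8, 9, 10]
```

Under this assumption the seven stimulus tones correspond to harmonics 4 through 10 of a 500 Hz fundamental that is itself absent from the stack.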

Swarm (2016)

A combination of free-field and distortion product primary tones is exploited in the creation of binaural beats and residue pitches for the author’s Swarm (2016), a fixed-medium, octophonic work (Audio 13).

In the third section (ca. 5:40 to 8:10) of Swarm, DPOAEs are used as the stimulus tones to create binaural beats. Residue pitch is applied here in the building of stacks of sawtooth waves, which are then used as APTs to generate the DPOAEs. APTs containing the frequency pairs 880/1320 Hz and 1760/2202 Hz are sent to the left and right sides of the 8-channel setup, resulting in DPOAEs at 440 Hz and 442 Hz, respectively. Consequently, listeners will hear a 2 Hz binaural beat because each of the two DPOAEs will be present in only one of their ears, due to the hard-panning of the APTs that produce them (e.g., at 6:08). This section draws to a close with the DPOAEs remaining static, while their APTs simultaneously modulate in frequency from 1320/880 Hz to 880/440 Hz on the left and from 2202/1760 Hz to 1760/1318 Hz on the right (7:34).
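
The frequency relationships in this section can be checked with a short sketch. It assumes that the DPOAE figures quoted in the text correspond to the simple difference tone f2 − f1 of each APT pair; other distortion products, such as the cubic difference tone 2f1 − f2, are not modelled here. The function names are hypothetical and serve only to illustrate the arithmetic.

```python
def difference_tone_hz(f1_hz, f2_hz):
    """Return the simple difference tone |f2 - f1| of a primary-tone pair, in Hz."""
    return abs(f2_hz - f1_hz)


def binaural_beat_hz(left_pair, right_pair):
    """Return (left tone, right tone, beat rate) for two hard-panned tone pairs.

    Assumption: each ear receives only the distortion product generated by
    the pair panned to its own side, so the beat rate is the difference
    between the two distortion-product frequencies.
    """
    left = difference_tone_hz(*left_pair)
    right = difference_tone_hz(*right_pair)
    return left, right, abs(right - left)


# APT pairs at the start of the section (e.g., at 6:08).
opening = binaural_beat_hz((880, 1320), (1760, 2202))
# APT pairs after the modulation at the end of the section (7:34).
closing = binaural_beat_hz((440, 880), (1318, 1760))

print(opening)  # (440, 442, 2)
print(closing)  # (440, 442, 2)
```

Both states yield the same pair of difference tones, which is consistent with the description of the DPOAEs remaining static while their APTs move.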

Conclusion

The non-linear nature of the inner ear’s response to specific types of acoustic signals has significant potential within electroacoustic music composition and encourages a reconsideration of the role of the listener’s ears in the performance process. Over the past seven years, the author has presented his research findings through concerts, installations and papers at various conferences, festivals and symposia around the world. One of the most encouraging aspects of presenting this material has been to witness the positive and intrigued response of audience members, most of whom had rarely heard of, let alone experienced, these psychoacoustic phenomena, or considered the potential of the inner ear as a musical instrument.

Over the course of researching in detail the materials described here, it has become increasingly clear that there is not only significant potential for further dissemination of these explorations within musicological repositories, but also a blossoming interest in their findings among musical practitioners. The author hopes to see this research, and further work conducted in the area, open up exciting new avenues of æsthetic experimentation for composers of electroacoustic music.

Bibliography

Ashmore, Jonathan. “Cochlear Outer Hair Cell Motility.” Physiological Reviews 88/1 (January 2008), pp. 173–210. http://doi.org/10.1152/physrev.00044.2006 [Accessed 29 December 2019]

Bregman, Albert. Auditory Scene Analysis: The perceptual organisation of sound. Cambridge MA: MIT Press, 1999.

Campbell, Murray and Clive Greated. The Musician’s Guide to Acoustics. London: J.M. Dent & Sons Ltd., 1987.

Chechile, Alex. “Creating Spatial Depth Using Distortion Product Otoacoustic Emissions in Music.” ICAD 2015: “ICAD in Space: Interactive spatial sonification.” Proceedings of ICAD 2015 (Graz, Styria, Austria, Institute of Electronic Music and Acoustics, 6–10 July 2015). http://hdl.handle.net/1853/54100 [Accessed 29 December 2019]

Chowning, John. “Fifty Years of Computer Music: Ideas from the past speak to the future.” In Computer Music Modeling and Retrieval. Edited by Richard Kronland-Martinet, Sølvi Ystad and Kristoffer Jensen. Berlin Heidelberg: Springer, 2008, pp. 1–10. http://ccrma.stanford.edu/sites/default/files/user/jc/SpringerPub3_0.pdf [Accessed 29 December 2019]

Fastl, Hugo and Eberhard Zwicker. Psychoacoustics: Facts and models. Berlin: Springer-Verlag, 2007.

Gelfand, Stanley A. Hearing. 5th edition. Essex: Informa Healthcare, 2010.

Goodman, Shawn S., Denis F. Fitzpatrick, John C. Ellison, Walt Jesteadt and Douglas H. Keefe. “High Frequency Click-Evoked Otoacoustic Emissions and Behavioral Thresholds in Humans.” Journal of the Acoustical Society of America 125/2 (February 2009), pp. 1014–1032. http://doi.org/10.1121/1.3056566

Hartmann, William M. Signals, Sound and Sensation. New York: Springer-Verlag, 2005.

Haworth, Christopher. “Composing with Absent Sound.” ICMC 2011: “innovation : interaction : imagination.” Proceedings of the 37th International Computer Music Conference (Huddersfield, UK: CeReNeM — Centre for Research in New Music at the University of Huddersfield, 31 July – 5 August 2011). http://pdfs.semanticscholar.org/62a9/7ebf633adfa9e7e3c12ef1dc4c5c454c2016.pdf [Accessed 29 December 2019]

_____. “Vertical Listening: Musical subjectivity and the suspended present.” Unpublished doctoral dissertation, Queen’s University Belfast, 2012.

Hurley, Raymond M. and Frank E. Musiek. “Effectiveness of Transient-Evoked Otoacoustic Emissions (TEOAEs) in Predicting Hearing Level.” Journal of the American Academy of Audiology 5/3 (May 1994), pp. 195–203.

Kaushlendra, Kumar, Jayashree S. Bhat, Pearl E. D’costa and Christina Jean Vivarthini. “Effect of Different Click Durations on Transient-Evoked Otoacoustic Emission.” International Journal of Computational Intelligence and Healthcare Informatics 4 (2011), pp. 31–33.

Kemp, David T. “What are Otoacoustic Emissions?” International Symposium on Otoacoustic Emissions (Kansas City, Missouri, 1991).

_____. “Otoacoustic Emissions, Their Origin in Cochlear Function and Use.” British Medical Bulletin 63/1 (October 2002) “New developments in hearing and balance,” pp. 223–241. http://doi.org/10.1093/bmb/63.1.223 [Accessed 29 December 2019]

Kendall, Gary S., Christopher P. Haworth and Rodrigo F. Cádiz. “Sound Synthesis with Auditory Distortion Products.” Computer Music Journal 38/4 (Winter 2014) “Different Angles on Sound Synthesis,” pp. 5–23. http://doi.org/10.1162/COMJ_a_00265 [Accessed 29 December 2019]

Moore, Brian C.J. An Introduction to the Psychology of Hearing. Sixth edition. Bingley: Emerald, 2012.

Patterson, Roy D., Robert W. Peters and Robert Milroy. “Threshold Duration for Melodic Pitch.” In Hearing: Physiological bases and psychophysics. Edited by Rainer Klinke and Rainer Hartmann. Berlin, Heidelberg, New York, Tokyo: Springer-Verlag, 1983. http://doi.org/10.1007/978-3-642-69257-4_47 [Accessed 29 December 2019]

Ramos, Jessica Arrue, Sinnet Greve Bjerge Kristensen and Douglas L. Beck. “An Overview of OAEs and Normative Data for DPOAEs.” Hearing Review 20/11 (October 2013), pp. 30–33. http://www.hearingreview.com/2013/10/an-overview-of-oaes-and-normative-data-for-dpoaes [Accessed 29 December 2019]

Roederer, Juan G. The Physics and Psychophysics of Music: An Introduction. Fourth edition. New York: Springer, 2008.

Schubert, Earl D. “History of Research on Hearing.” In Hearing: Handbook of Perception, Vol. 4. Edited by Edward Carterette and Morton P. Friedman. New York, San Francisco, London: Academic Press, 1978, pp. 3–39.

Sharine, Angelique A. and Tomasz R. Letowski. “Auditory Conflicts and Illusions.” In Helmet-Mounted Displays: Sensation, perception and cognition issues. Edited by Clarence E. Rash. Alabama: US Army Aeromedical Research Laboratory, 2009, pp. 579–598. http://doi.org/10.13140/2.1.3684.4804 [Accessed 29 December 2019]

White, Harvey E. and Donald E. White. Physics and Music: The science of musical sound. New York: Dover Publications Inc., 2014.

Wilbur Hall, James. Handbook of Otoacoustic Emissions. San Diego: Cengage Learning, 2000.
