Musebots

Collaborative composition with creative systems

Generative music offers the opportunity for the continual reinterpretation of a musical composition through the design and interaction of complex processes that can be rerun to produce new artworks each time. While generative art has a long history (Galanter 2003), the application of artificial intelligence, evolutionary algorithms and cognitive science has created a contemporary approach to generative art, known as “metacreation” (Whitelaw 2004). Musical metacreation (MuMe) looks at all aspects of the creative process and their potential for systematic exploration through software (Pasquier et al. 2016).

One useful model borrowed from artificial intelligence is that of agents, specifically multi-agent systems. Agents have been defined as autonomous, social, reactive and proactive (Wooldridge and Jennings 1995), attributes similar to those required of performers in improvisation ensembles. Musebots (Bown, Carey and Eigenfeldt 2015) offer a structure for the design of musical agents, allowing for a communal compositional approach (Eigenfeldt, Bown and Carey 2015) as well as a unified model.

Musebot ensembles, consisting of a variety of agents reflecting the varying musical æsthetics of their creators, have been successfully presented in North America, Europe and Australia. In these ongoing installations, each ensemble has been limited to five-minute performances, after which the ensemble exits and the next ensemble begins. While this limitation can be seen as an opportunity to feature as wide a variety of musebots as possible — as of this writing, there are over seventy-five unique musebots — it raises some questions.

If the musebot ensemble is a proof-of-concept, then it is certainly successful: musebots interact and can self-organize, producing novel output that can be surprising and arguably valued, thus attaining a mark of computational creativity (Boden 2009). However, after listening to any one ensemble for longer than a few minutes, one recognizes musical limitations: the interactions between musebots remain at the musical surface (e.g. harmony, rhythm, density). Beyond this duration, a successful outcome no longer depends upon interaction alone, but also upon structure. It is for this reason that many MuMe practitioners have remained part of the creative process, whether as musicians interacting with an interactive system or as operators triggering global changes when the surface has become too predictable or static (Eigenfeldt et al. 2016).

I have used musebots in a variety of artworks in the past three years (Eigenfeldt 2016a; Eigenfeldt et al. 2017) and have become their main evangelist. Although one of the main desires of the musebot project was to provide a collaborative framework that allowed sharing of ideas and code, I have found the need to move forward independently. In doing so, I hope to not only create interesting and valuable artworks, but also, through their documentation, to entice others to join me in the development and extension of the musebot paradigm.

Musical Agents and Musebots

The potential of agent-based musical creation was explored early in the history of computer music (Wulfhorst, Nakayama and Vicari 2003; Murray-Rust, Smaill and Edwards 2006), specifically for its potential to emulate human-performer interaction. The author’s own initial investigation into multi-agent systems is described elsewhere (Eigenfeldt 2007). Within MuMe, the established practice of creating autonomous software agents for free improvised performance (Lewis 1999) has involved idiosyncratic, non-idiomatic systems, created by artist-programmers (Yee-King 2007; Rowe 1992). While musical results from these systems can be appreciated by other composers, sharing research has been difficult due to the lack of common approaches (Bown et al. 2013).

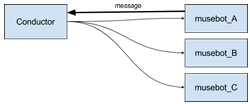

Musebots are pieces of software that autonomously create music collaboratively with other musebots. They were designed to alleviate some of these issues, as well as to provide a straightforward infrastructure for development (Bown, Carey and Eigenfeldt 2015). A defining goal of the musebot project is to establish a creative platform for experimenting with musical autonomy, open to people who are developing cutting-edge musical intelligence or simply exploring the creative potential of generative processes in music. The musebot protocol is, at its heart, a method of communicating states and intentions: musebots send networked messages via OSC (Wright 1997), in a format established through a collaboratively written document. A Conductor serves as a timekeeper, generating the running time of the performance, as well as a hub through which all messages pass. The Conductor also launches individual musebots via curated ensembles.
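Because the protocol rests on OSC messages relayed through a Conductor, a small sketch of that message layer may help. It encodes and decodes OSC 1.0 messages by hand (padded address, type tag string, big-endian int32/float32 and padded strings) and relays them through an in-memory hub. The address `/broadcast/density`, the musebot ID `BeatBot_1` and the `Conductor` class are hypothetical illustrations, not the actual musebot specification; a real ensemble sends these packets over the network rather than appending them to lists.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return data + b"\x00" * (4 - len(data) % 4)


def encode_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def _read_string(data: bytes, offset: int):
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    offset = end + 1
    offset += (-offset) % 4  # skip padding up to the next 4-byte boundary
    return text, offset


def decode_message(data: bytes):
    """Return (address, [arguments]) for a message produced by encode_message."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    args = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag}")
    return address, args


class Conductor:
    """Hypothetical hub: every packet passes through here and is relayed to all other musebots."""

    def __init__(self):
        self.inboxes = {}

    def register(self, musebot_id: str):
        self.inboxes[musebot_id] = []

    def send(self, sender_id: str, packet: bytes):
        for musebot_id, inbox in self.inboxes.items():
            if musebot_id != sender_id:
                inbox.append(packet)
```

For example, `encode_message("/broadcast/density", "BeatBot_1", 0.5)` yields a 40-byte packet that any receiving musebot can decode back to its address and arguments. Routing everything through one hub, as the text describes, means a musebot never needs to know which other musebots are present.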

Musebot ensembles have been presented as continuous installations at a variety of festivals and conferences, the results of which have been described elsewhere (Bown, Carey and Eigenfeldt 2015). These ensembles have been modelled after improvisational explorations, albeit with the potential for generative harmonic progressions. Ensembles have been curated that combine different types of musebots based upon their musical function (e.g. a beat musebot, a bass musebot, a pad musebot, a melody musebot and a harmony-generating musebot). However, an ensemble can be constructed from any combination of different types of sound-generating musebots (Table 1).

| Type | Number |
|---|---|
| Bass Generators | 5 |
| Beat Generators | 13 |
| Harmony Generators | 5 |
| Keys/Pads Generators | 6 |
| Melody Generators | 19 |
| Noise/Texture Generators | 16 |

Table 1. List of the generative musebots currently available within the repository of working musebots (available from the Musical Metacreation website).
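Curating an ensemble by musical function can be sketched as picking one musebot per requested role from a repository organized like Table 1. Only the per-type counts come from the table; the placeholder names (`bass_bot_1`, and so on), the role keys and the random choice are assumptions for illustration, not the way the Conductor actually curates ensembles.

```python
import random

# Counts per type taken from Table 1; the generated musebot names are placeholders.
REPOSITORY_COUNTS = {
    "bass": 5,
    "beat": 13,
    "harmony": 5,
    "keys/pads": 6,
    "melody": 19,
    "noise/texture": 16,
}


def build_repository(counts):
    """Map each musebot type to a list of placeholder musebot names."""
    return {kind: [f"{kind}_bot_{i + 1}" for i in range(n)] for kind, n in counts.items()}


def curate_ensemble(repository, functions, rng):
    """Pick one musebot per requested musical function, never choosing the same musebot twice."""
    ensemble = []
    for kind in functions:
        candidates = [bot for bot in repository[kind] if bot not in ensemble]
        if not candidates:
            raise ValueError(f"no unused musebot left for function {kind!r}")
        ensemble.append(rng.choice(candidates))
    return ensemble
```

Calling `curate_ensemble(build_repository(REPOSITORY_COUNTS), ["beat", "bass", "keys/pads", "melody", "harmony"], random.Random(1))` returns a five-member ensemble matching the functional line-up described above; repeating a role (for instance two melody musebots) is also possible.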

The information shared between musebots has tended to be limited to surface details such as a current pool of pitches, density of onsets and volume. Although having virtual performers audibly agree upon such parameters suggests musically successful machine listening, these levels of interaction become mundane surprisingly quickly; the more subtle interactions that occur in human improvisation ensembles, and their development over time, have not yet been successfully modelled within musebot ensembles.
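To make the surface-level exchange concrete, here is a minimal sketch of the kind of state described above (pitch pool, onset density, volume) and two simple reactions to it. The `SurfaceState` fields and both adaptation rules are hypothetical; individual musebots implement their own responses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SurfaceState:
    sender: str
    pitch_pool: frozenset  # pitch classes 0-11 currently in use
    density: float         # onsets per beat
    volume: float          # 0.0-1.0


def adapt(own_density, others, rate=0.5):
    """Move own density part of the way toward the mean density of the other musebots."""
    if not others:
        return own_density
    mean = sum(s.density for s in others) / len(others)
    return own_density + rate * (mean - own_density)


def shared_pitches(candidate_pitches, others):
    """Keep only the MIDI pitches whose pitch class appears in every other musebot's pool."""
    common = frozenset(range(12))
    for s in others:
        common &= s.pitch_pool
    return [p for p in candidate_pitches if p % 12 in common]
```

Repeatedly applying a rule like `adapt` drives every density toward a common value, so the ensemble audibly agrees; the same property suggests why interaction limited to such parameters soon settles into a static surface.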

Several musebot developers participated in ProcJam 2015, where they devoted a week to exploring the potential for musebots to broadcast their intentions for the immediate future. While the results heightened the perception of machine listening, the musebots were not, in this particular context, able to move beyond the generation of musical surface.
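Broadcasting an intention for the immediate future could look like the following sketch: a musebot announces where a parameter is heading and over what time span, and listeners estimate the ensemble's value at a future moment. The `Intention` fields, the linear trajectory and the averaging in `anticipate` are assumptions for illustration, not the messages used at ProcJam 2015.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intention:
    sender: str
    parameter: str   # e.g. "density"
    current: float
    target: float
    horizon: float   # seconds until the target should be reached

    def expected(self, t: float) -> float:
        """Linear interpolation of the announced trajectory, t seconds from now."""
        if self.horizon <= 0 or t >= self.horizon:
            return self.target
        t = max(t, 0.0)
        return self.current + (self.target - self.current) * (t / self.horizon)


def anticipate(intentions, parameter, t):
    """Average expected value of one parameter across all announced intentions, or None."""
    values = [i.expected(t) for i in intentions if i.parameter == parameter]
    return sum(values) / len(values) if values else None
```

A beat musebot announcing `Intention("beat", "density", 1.0, 3.0, 8.0)` tells the others to expect a density of 2.0 four seconds later, letting them prepare rather than merely react.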

From Self-Organization to Composition

My own hesitation to embrace improvisational approaches more deeply has to do with my training as a composer: compositional thought suggests a top-down approach, a pre-performance organization in which musical structure elicits large-scale change. Traditional narrative and dramatic musical forms, based upon tension and release, result in hierarchical structures that are seemingly impossible to negotiate or self-organize. For example, getting agents to agree upon a central section (e.g. a chorus) and to decide how to progress towards that section through an adequate build of tension, without a top-down approach, is beyond my comprehension. While I have explored the use of pre-generated structural templates (Eigenfeldt and Pasquier 2013), I found this unnecessarily restrictive outside of the exact model considered (in this case, electronic dance music).

A brief look at four recent projects that not only made use of musebots, but also used them as collaborative partners, provides some insight into the potential of musebot ensembles.

Ambient Landscapes (2018)

The Ambient Landscapes series is a collaboration between video artist James Bizzocchi and me, and uses musebots to accompany a generative video installation. The overall æsthetic of the work is ambient, in which minute-long static video images of naturescapes slowly dissolve into one another. The work is structured as an audiovisual environment that models and depicts our natural environment across the span of a year. Each new season triggers a new musebot ensemble, allowing for a wide variety of music generation.

The video sequencing engine selects several related videos based upon hand-coded metatags that describe the shots — for example, “spring, river, rocks” — and this information is sent to the musebots. A soundscape musebot selects from a database of over 100 soundscape recordings that potentially match the metatags. Furthermore, the musebots were developed to react to valence and arousal, and these parameters are used within the entire system to supplement the metatags sent by the video sequencer, resulting in a better affective relationship between video and music (Eigenfeldt et al. 2015).
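One way to combine metatag matching with valence and arousal is sketched below: candidates must share at least one tag with the shot, and are ranked by tag overlap (Jaccard similarity) minus a weighted distance in the valence/arousal plane. The `Recording` fields, the scoring formula, the weight and the example database are all hypothetical; the text does not specify how the soundscape musebot ranks its recordings.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Recording:
    name: str
    tags: frozenset
    valence: float   # -1.0 (negative) to 1.0 (positive)
    arousal: float   # -1.0 (calm) to 1.0 (excited)


def score(rec, tags, valence, arousal, affect_weight=0.5):
    """Tag overlap (Jaccard) penalized by distance from the requested valence/arousal."""
    union = rec.tags | tags
    overlap = len(rec.tags & tags) / len(union) if union else 0.0
    distance = math.hypot(rec.valence - valence, rec.arousal - arousal)
    return overlap - affect_weight * distance


def select_recording(database, tags, valence, arousal):
    """Best-scoring recording that shares at least one metatag with the shot, or None."""
    matches = [r for r in database if r.tags & tags]
    if not matches:
        return None
    return max(matches, key=lambda r: score(r, tags, valence, arousal))
```

Given the shot tags `{"spring", "river", "rocks"}`, a recording tagged `{"spring", "river", "birds"}` with a similar affect outranks one that shares only `"river"`, while recordings sharing no tags are never considered.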

Imaginary Miles (2018)

This project reimagines the Miles Davis groups of the late 1960s. Davis’ classic album Filles de Kilimanjaro was recorded in 1968 and is a landmark transition album between Miles’ second quintet and his electric period. It featured members of his second quintet playing electric instruments (Herbie Hancock on Fender Rhodes, Ron Carter on electric bass), as well as new members (Chick Corea, piano). The following year he released In a Silent Way, one of his most influential albums. The latter is considered his first electric album and included a young English guitarist, John McLaughlin.

Imaginary Miles models individual musebots after these musicians: Miles (non-electric, muted trumpet), Wayne Shorter (tenor sax), John McLaughlin (electric guitar, but more in the style of his Mahavishnu Orchestra work than with Miles), Chick Corea (mini-Moog, but modelled after his work a few years later with Return to Forever), Herbie Hancock (electric piano), Ron Carter (electric bass) and Tony Williams (drums).

A musebot generates an overall structure (intro, head, solos, head, outro) in the same way the musicians might agree upon such a structure before they begin. A 16-bar chord progression is generated by another musebot, based upon selected Miles Davis and Wayne Shorter compositions from that period. A head (melody) is generated from these works as well.
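A form-generating musebot of this kind can be sketched as producing a list of sections with bar counts built around the 16-bar progression, plus a lookup that tells any musebot where a given bar falls. The intro and outro lengths, the range of solo choruses and the function names are assumptions made for illustration.

```python
import random

CHORUS_BARS = 16  # the generated chord progression is 16 bars long


def generate_form(rng, min_solo_choruses=2, max_solo_choruses=6):
    """Return (section, bars) pairs for an intro-head-solos-head-outro form (lengths hypothetical)."""
    intro = rng.choice([4, 8])
    outro = rng.choice([4, 8])
    solo_choruses = rng.randint(min_solo_choruses, max_solo_choruses)
    return [
        ("intro", intro),
        ("head", CHORUS_BARS),
        ("solos", solo_choruses * CHORUS_BARS),
        ("head", CHORUS_BARS),
        ("outro", outro),
    ]


def section_at(form, bar):
    """Return (section, bar within section) for a 0-based bar, or None after the form ends."""
    start = 0
    for name, length in form:
        if bar < start + length:
            return name, bar - start
        start += length
    return None
```

Since the form is broadcast before playing begins, every musebot can call something like `section_at` to know, for instance, that it has reached the first bar of the head, much as the musicians would after agreeing on the structure.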

As always, musebots “listen” to each other, communicating what they are doing through messages and thus not requiring other musebots to analyze audio. They have individual desires and intentions — for example, how active they want to be and become — but balance these in relation to other musebots. The soloists take turns soloing, patiently and politely waiting for an opening; each musebot has a personality attribute of patience/impatience (how long to wait before wanting to solo), politeness/rudeness (how willing it is to interrupt another soloing musebot) and ego (how long it wants to continue soloing).
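The three personality attributes can be turned into a simple bar-by-bar turn-taking simulation. In this sketch patience and ego are measured in bars and politeness is the probability of not interrupting; these units, the update order, the tie-break (longest-waiting soloist first) and the names `MilesBOT`, `WayneBOT` and `JohnBOT` are hypothetical choices, not the rules Imaginary Miles actually uses.

```python
import random
from dataclasses import dataclass


@dataclass
class Soloist:
    name: str
    patience: int      # bars to wait before wanting to solo
    politeness: float  # 0.0-1.0: chance of NOT interrupting a current soloist
    ego: int           # bars it wants to keep soloing
    waited: int = 0


def run_solos(soloists, bars, rng):
    """Return, for each bar, the name of the soloing musebot (or None if nobody solos)."""
    current, remaining, log = None, 0, []
    for _ in range(bars):
        if current is not None:
            remaining -= 1
            if remaining <= 0:
                current = None  # the soloist's ego is satisfied
        eager = [s for s in soloists if s is not current and s.waited >= s.patience]
        for s in sorted(eager, key=lambda s: -s.waited):
            # Take an open slot, or interrupt with probability (1 - politeness).
            if current is None or rng.random() > s.politeness:
                current, remaining = s, s.ego
                break
        for s in soloists:
            s.waited = 0 if s is current else s.waited + 1
        log.append(current.name if current is not None else None)
    return log
```

With `MilesBOT` (patience 2), `WayneBOT` (patience 4) and `JohnBOT` (patience 6), the most patient-limited musebot always claims the first opening at bar 3, while a rude soloist with low politeness can cut a long-winded one short.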

The HerbieBOT on piano listens to the soloist and responds by either filling in holes through chordal comping or echoing individual notes it just heard.
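The two responses can be illustrated with a per-beat rule: where the soloist left a gap, comp the current chord; where the soloist played, occasionally echo the note just heard. Treating the soloist's last bar as a beat-to-pitch map, applying the response to the next bar and the `echo_prob` value are all assumptions of this sketch rather than HerbieBOT's actual logic.

```python
import random


def herbie_response(solo_onsets, chord, rng, beats_per_bar=4, echo_prob=0.3):
    """Plan a bar of comping from the soloist's last bar.

    solo_onsets maps beat -> MIDI pitch heard from the soloist.
    Returns beat -> list of MIDI pitches for the piano (hypothetical rule).
    """
    response = {}
    for beat in range(beats_per_bar):
        if beat not in solo_onsets:
            response[beat] = list(chord)          # fill the hole with a chordal voicing
        elif rng.random() < echo_prob:
            response[beat] = [solo_onsets[beat]]  # echo the individual note just heard
    return response
```

Leaving the soloist's beats mostly empty keeps the piano out of the way, so the response reads as listening rather than doubling.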

Moments: Polychromatic (2017)

I have proposed an alternative to traditional narrative structures for musical generation (Eigenfeldt 2016b), specifically what Stockhausen called Moment-Form (Stockhausen 1963). Kramer suggests that such non-teleological forms had already been used by composers such as Stravinsky and Debussy (Kramer 1978), while I have described the use of moment form in ambient electronic music (Eigenfeldt 2016b). Moment form offers several attractive possibilities for generative music, including the notion that individual moments can function as parametric containers. Just as Stockhausen obsessively categorized and organized his material (Smalley 1974), the parameterization of musical features by applying constraints upon the generative methods can delineate the moments themselves.

Moments is the author’s first work to explore moment form in generative music through the use of musebots. As of 2018, Moments exists in several versions: the original for two Disklavier pianos, in which musebots send MIDI data to the mechanical pianos; Moments: Polychromatic, in which the musebots generate all audio through Ableton Live, potentially using any of Ableton’s 1200+ software synthesizers; and Moments: Monochromatic, for a live performer and 12 unified musebot performers (described below).

Musebots have demonstrated the potential to self-organize. However, Moments operates compositionally, in that it generates an entire musical form prior to each performance. This allows for an important benefit: a precognition by all agents of the upcoming structure. Knowing that a section is, for example, two minutes in duration, allows musebots to plan their activity within that time.
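Knowing a section's length in advance makes planning straightforward. The sketch below assumes a musebot plans an activity envelope that ramps up, holds at its target and ramps down so that it arrives at the section boundary on time; the envelope shape, the one-second step and the ramp fraction are hypothetical.

```python
def plan_activity(duration, target, step=1.0, ramp_fraction=0.2):
    """Activity level per step for a section of known duration (seconds).

    Ramps up over the first ramp_fraction of the section, holds at target,
    then ramps down over the last ramp_fraction (hypothetical plan).
    """
    n = int(duration / step)
    ramp = max(1, int(n * ramp_fraction))
    plan = []
    for i in range(n):
        if i < ramp:
            level = target * (i + 1) / ramp
        elif i >= n - ramp:
            level = target * (n - i) / ramp
        else:
            level = target
        plan.append(level)
    return plan
```

For a two-minute section, `plan_activity(120, 0.8)` spends 24 seconds reaching full activity and begins winding down 24 seconds before the end. Without advance knowledge of the duration, a musebot could only react after the section had already changed.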

Moments: Polychromatic uses up to twelve musebots, each serving a musical function:

- ParamBot. Generates the overall structure, including the number of moments (sections) and the parameter levels within each. These parameters include speed, activityLevel, voiceDensity, complexity, consistency, volume and timbre.

- PCsetBot. Given the complexity for a section, generates a pitch-class set for the entire section.

- OrchestratorBot. Selects the ensemble, or active musebots, for the composition.

- GenoaBot. Granular synthesis agents (three are used) that select their own timbre based upon the timbral region negotiated with the other musebots.

- KitsilanoBot. Resonant soundscape synthesis (two are used), whose filter bands are determined by the current pitch-class set.

- LondynBot. Drones and long notes, trained upon Ableton Live’s 1200+ available patches (two are used).

- SienaBot. Short sounds, also sharing the Live database and selecting its own timbres.

- Lastly, a visual musebot was developed by Simon Lysander Overstall, in order to interpret the messages being sent by the musebots, including compositional parameters, as well as performance parameters.
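The first links in this chain can be sketched as follows: a ParamBot-like function generates moments as parameter containers using the parameter names listed above, a PCsetBot-like function maps complexity to the size of a pitch-class set, and a KitsilanoBot-like function converts that set to filter centre frequencies. The moment count, duration range, the complexity-to-size mapping, the chosen octave and the equal-tempered tuning (A4 = 440 Hz) are all assumptions of this sketch.

```python
import random

PARAMETERS = ("speed", "activityLevel", "voiceDensity", "complexity",
              "consistency", "volume", "timbre")


def generate_moments(rng, min_moments=3, max_moments=8):
    """ParamBot sketch: each moment is a container of parameter levels in [0, 1)."""
    count = rng.randint(min_moments, max_moments)
    return [
        {"duration": rng.uniform(30.0, 120.0),  # seconds; range is an assumption
         **{p: rng.random() for p in PARAMETERS}}
        for _ in range(count)
    ]


def pitch_class_set(complexity, rng):
    """PCsetBot sketch: higher complexity yields a larger set (3 to 12 pitch classes)."""
    size = 3 + round(complexity * 9)
    return sorted(rng.sample(range(12), size))


def filter_bands(pcs, octave=4):
    """KitsilanoBot sketch: equal-tempered centre frequencies (Hz) for a pitch-class set."""
    return [440.0 * 2 ** ((12 * (octave + 1) + pc - 69) / 12) for pc in pcs]
```

Because all three steps read from the same moment, a change in one parameter (complexity) propagates to harmony and timbre together, which is what allows moments to be heard as distinct containers.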

Moments: Monochromatic (2017)

This version of Moments, unlike its cousins, is intended for performance with a live musician rather than as an installation. Monochromatic explores how virtual musical agents can interact with a live musician, as well as with each other, within a unique structure generated for each performance. Because the structure is generated at the beginning of the performance, musebot actions can be considered more compositional than improvisational, as structural goals are known beforehand.

Important aspects (sectional points, harmonies, states) are communicated visually to the performer via standard musical notation. Additionally, the performer’s live audio is analyzed by a ListenerBot and translated for the virtual musebots, who can, in turn, attempt to avoid the performer’s spectral regions or become attracted to them.
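A ListenerBot could summarize the performer's spectral region with a spectral centroid, which a musebot then uses to pick a band to avoid or approach. This sketch computes the centroid with a naive DFT (adequate for short frames, using only the standard library) and compares candidate bands on a logarithmic frequency scale; the choice of centroid as the feature and the `choose_band` rule are assumptions, as the text does not say which analysis the ListenerBot performs.

```python
import cmath
import math


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency (Hz) of one audio frame, via a naive DFT."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        mags.append(abs(acc))
    total = sum(mags)
    if total == 0:
        return 0.0
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total


def choose_band(centroid, bands, attract):
    """Pick the band centre nearest to (attract) or farthest from (avoid) the performer."""
    if centroid <= 0:
        raise ValueError("no spectral energy to react to")
    distance = lambda f: abs(math.log2(f) - math.log2(centroid))
    return min(bands, key=distance) if attract else max(bands, key=distance)
```

A 1000 Hz tone sampled at 8000 Hz yields a centroid of about 1000 Hz; an attracted musebot choosing among 200, 1100 and 3000 Hz would then move to 1100 Hz, and an avoiding one to 200 Hz. Measuring distance in octaves rather than hertz roughly matches how pitch distance is heard.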

Conclusion

Because musebots lack direct interactivity, I am required to listen to the complete compositions that they produce; rather than immediately altering surface features as I hear them, I listen to their overall evolution and interaction, and react to the resulting musical structure, perhaps much as a director might take notes on a complete run of a show rather than stopping to fix problems in individual scenes. Like human improvisers, musebots are independent and autonomous; I can suggest ideas to them and possibly provoke musical changes, but, unlike with chamber musicians reading fixed scores, I cannot force them to perform specific actions. Like many metacreative systems, the output of musebots cannot be predicted; however, my relationship with them is not one in which I simply “let them run” on their own. Instead, I find that their musical decisions continually stimulate me to develop new ensembles and even new musebots, much as a theatrical director might decide to switch actors or a musical director might decide to alter the makeup of her ensemble. In these latter examples, such decisions can only be made when one trusts the other performers/creators to produce something new and unexpected, in the same way that I trust my musebots to produce successful compositions, even when I add new musebots to the ensemble.

Bibliography

Boden, Margaret. “Computer Models of Creativity.” AI Magazine 30/3 (Fall 2009).

Bown, Oliver, Arne Eigenfeldt, Aengus Martin, Benjamin Carey and Philippe Pasquier. “The Musical Metacreation Weekend: Challenges arising from the live presentation of musically metacreative systems.” MUME 2013. Proceedings of the 2nd International Workshop on Musical Metacreation (Boston MA, USA: Northeastern University, 14–15 October 2013). http://musicalmetacreation.org/index.php/mume-2013

Bown, Oliver, Benjamin Carey and Arne Eigenfeldt. “Manifesto for a Musebot Ensemble: A Platform for live interactive performance between multiple autonomous musical agents.” ISEA 2015. Proceedings of the 21st International Symposium of Electronic Arts (Vancouver, Canada: Simon Fraser University, 14–19 August 2015). http://isea2015.org

Chadabe, Joel. “Interactive Composing: An Overview.” Computer Music Journal 8/1 (Spring 1984), pp. 22–27.

Eigenfeldt, Arne. “The Creation of Evolutionary Rhythms Within a Multi-Agent Networked Drum Ensemble.” ICMC 2007: “Immersed Music”. Proceedings of the 33rd International Computer Music Conference (Copenhagen, Denmark, 27–31 August 2007).

_____. “Musebots at One Year: A Review.” MUME 2016. Proceedings of the 4th International Workshop on Musical Metacreation (Paris, France: Université Pierre et Marie Curie, 27 June 2016). http://musicalmetacreation.org/index.php/mume-2016

_____. “Exploring Moment-Form in Generative Music.” SMC 2016: “STREAM: Sound—Technology—Room—Emotion—Aesthetics—Music.” Proceedings of the 13th Sound and Music Computing Conference (Hamburg, Germany: Hochschule für Musik und Theater Hamburg, 31 August – 03 September 2016). http://smc2016.hfmt-hamburg.de

Eigenfeldt, Arne and Philippe Pasquier. “Evolving Structures for Electronic Dance Music.” GECCO 2013. Proceedings of the 15th Genetic and Evolutionary Computation Conference (Amsterdam, Netherlands: 6–10 July 2013). http://www.sigevo.org/gecco-2013

Eigenfeldt, Arne, Jim Bizzocchi, Miles Thorogood and Justine Bizzocchi. “Applying Valence and Arousal Values to a Unified Video, Music and Sound Generative Multimedia Work.” GA 2015. Proceedings of the 18th Generative Art Conference (Venice, Italy: Fondazione Bevilacqua La Masa, 9–11 December 2015). http://www.generativeart.com

Eigenfeldt, Arne, Oliver Bown, Andrew R. Brown and Toby Gifford. “Flexible Generation of Musical Form: Beyond mere generation.” ICCC 2016. Proceedings of the 7th International Conference on Computational Creativity (Paris, France: Université Pierre et Marie Curie, 27 June – 1 July 2016). http://www.computationalcreativity.net/iccc2016

_____. “Distributed Musical Decision-Making in an Ensemble of Musebots: Dramatic changes and endings.” ICCC 2017. Proceedings of the 8th International Conference on Computational Creativity (Atlanta GA, USA: Georgia Institute of Technology, 19–23 June 2017). http://computationalcreativity.net/iccc2017

Eigenfeldt, Arne, Oliver Bown and Benjamin Carey. “Collaborative Composition with Creative Systems: Reflections on the first musebot ensemble.” ICCC 2015. Proceedings of the 6th International Conference on Computational Creativity (Park City UT, USA: Treasure Mountain Inn, 29 June – 2 July 2015). http://computationalcreativity.net/iccc2015

Galanter, Philip. “What is Generative Art? Complexity theory as a context for art theory.” GA 2003. Proceedings of the 6th Generative Art Conference (Milan, Italy: Politecnico di Milano, 2003). http://www.generativeart.com

Kramer, Jonathan D. “Moment Form in Twentieth-Century Music.” The Musical Quarterly 64/2 (April 1978), pp. 177–194.

Lewis, George. “Interacting with Latter-Day Musical Automata.” Contemporary Music Review 18/3 (1999) “Aesthetics of Live Electronic Music,” pp. 99–112.

Murray-Rust, David, Alan Smaill and Michael Edwards. “MAMA: An architecture for interactive musical agents.” Frontiers in Artificial Intelligence and Applications 141 (IOS Press, 2006), pp. 36–40.

Pasquier, Philippe, Arne Eigenfeldt, Oliver Bown and Shlomo Dubnov. “An Introduction to Musical Metacreation.” Computers in Entertainment (CIE) 14/2 (Summer 2016) “Special Issue on Musical Metacreation, Part I.”

Rowe, Robert. “Machine Composing and Listening with Cypher.” Computer Music Journal 16/1 (Spring 1992) “Advances in AI for Music (1),” pp. 43–63.

Smalley, Roger. “‘Momente’: Material for the Listener and Composer — 1.” The Musical Times 115/1571 (January 1974), pp. 23–28.

Stockhausen, Karlheinz. “Momentform: Neue Beziehungen zwischen Aufführungsdauer, Werkdauer und Moment.” Texte zur Musik 1 (1963), pp. 189–210.

Whitelaw, Mitchell. Metacreation: Art and artificial life. Cambridge MA: The MIT Press, 2004.

Wooldridge, Michael and Nicholas Jennings. “Intelligent Agents: Theory and practice.” Knowledge Engineering Review 10/2 (June 1995), pp. 115–152.

Wright, Matthew. “Open Sound Control: A new protocol for communicating with sound synthesizers.” ICMC 1997. Proceedings of the 23rd International Computer Music Conference (Aristotle University, Thessaloniki, Greece, 25–30 September 1997).

Wulfhorst, Rodolfo, Lauro Nakayama and Rosa Maria Vicari. “A Multiagent Approach for Musical Interactive Systems.” AAMAS 2003. Proceedings of the 2nd International Conference on Autonomous Agents and Multiagent Systems (Melbourne, Australia, 14–18 July 2003).

Yee-King, Matthew. “An automated music improviser using a genetic algorithm driven synthesis engine.” In Applications of Evolutionary Computing. Proceedings of the EvoWorkshops 2007 (Valencia, Spain, 11–13 April 2007). Heidelberg: Springer-Verlag, 2007.
