Creating an Autopoietic Improvisation Environment Using Modular Synthesis

The “no-source mixing desk” is a sound mixer with outputs wired to inputs, so that the self-noise of the circuitry is the only sonic material it contains. The No Input Software Environment (NISE) is an implementation of such a system using modular synthesis software, designed to encourage rapid prototyping and musical experimentation. NISE creates a non-linear, dynamic system that self-produces and self-defines its own ongoing processes. The result is analogous to an ecosystem of living creatures, each sonic entity defining its place in relationship to the others. The human agent is encouraged to reconsider their role in this changing milieu, to see the process of improvisation as one integral to organic growth and structure formation. An overview of cybernetic music precedes a tracing of the conceptual roots of this project from von Foerster’s second-order cybernetics to Maturana and Varela’s autopoiesis.

Historical Background

As a general principle, feedback describes a circuit, whether electronic, social, biological or otherwise, in which the output (or result) influences the input (or cause). In 1948 Norbert Wiener established cybernetics as the study of regulatory systems (Wiener 1961). Ever since, such circuits have been investigated in many contexts, not least the musical. Around 1950 Louis and Bebe Barron, directly inspired by Wiener, built audio feedback circuits that burnt themselves up as they played (Stone 2005). They recorded the lifespan of each circuit, using what might have been the first tape recorder in North America. In a painstaking process, they selected the most musical of these experiments and subjected them to home-built reverberation, progressive slowing and looping techniques (Brockman 1992, 12). Several original compositions, beginning with Heavenly Menagerie (1950), led to their famed work on the Forbidden Planet soundtrack (1956). Their explicit goal was to “design and construct electronic circuits which function electronically in a manner remarkably similar to the way that lower life-forms function” (liner notes to Barron and Barron 1976).

John Cage and David Tudor worked with the Barrons on Williams Mix (1951–53), though that composition was created using different methods than those just outlined. Tudor later returned to the electrical circuit method, building constructs from “amplifiers (fixed or variable gain, fixed or variable phase-shift, tuned, saturating types), attenuators, filters (several types), switches and modulators with variable side-band capability.” Connecting these in various combinations, he experimented from about 1970 with “generating electronic sound without the use of oscillators, tone generators, or recorded natural sound materials” (Tudor 1996). Untitled (1972) is the only extant example of a “finished” composition by Tudor, although installations like Virtual Focus, which consists of a table covered in “home-brewed” devices, appear to be functionally similar (Rogalsky 1999).

Since that time, a great many artists have adopted the principles of cybernetics in their works. Specifically, certain performers have worked with the “no-source mixing desk.” This is an audio mixer with outputs wired to inputs, thus creating feedback loops of various permutations and sonic characteristics. The self-noise of the circuitry itself is the only original sonic material; this accumulates from silence to audible signal due to progressive amplification. This process is controlled by the player, who modifies the circuit through patching, equalisation, amplitude potentiometers, and so on. Delay units, limiters and other effects are commonly utilised to augment the sonic possibilities. Toshimaru Nakamura is the name most commonly associated with this practice, but artists have been pursuing this line of inquiry for some time. For example, David Myers (working as Arcane Device) released feedback music created with guitar effect pedals as early as 1988 (Silent Records 2011).

The author’s own cybernetic audio experiments began in the 1980s. Ouroboros (1991), the first of these to be documented, was performed live over FM radio, the feedback circuit being that between broadcaster and receiver. This instantiated a fused listening space, bridging the expected physical separation inherent in radio. The aim was to challenge normative ideas of how and where such boundaries are drawn and policed. Significantly, the performer’s body was itself part of the “instrument”, modulating the sound circuit through induction. Recent incarnations of this system (in 2008 and 2009) have incorporated live audiences in public spaces (an art gallery and café, respectively).

Design Goals and Research Questions

The No Input Software Environment (NISE) designed by the author builds on this prior work in cybernetic audio. The project began by considering which qualities might be particular to previous implementations and which might more generally be applicable to all “no source” configurations. It was observed that existing systems were built on electronic circuitry in the analogue domain. Would a software circuit in the digital domain generate similar results? Discussions with other practitioners raised the possibility that the process might fail to initiate, due to the lack of noise in a digital circuit. From the outset, the author judged this opinion to be indicative of a generalised misunderstanding of digital audio. Certainly a digital circuit has a noise floor, similar to its analogue counterpart. What would this sound like when activated? Would æsthetically interesting sounds be attainable? How might this system encourage new improvisational possibilities? These were the research questions.
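
Whether a feedback loop can "initiate" from a near-silent floor is, at bottom, a matter of arithmetic. The sketch below is a hypothetical model, not part of NISE: it assumes an idealised loop with a constant gain per pass (in dB) and a hard ceiling at 0 dBFS, and it borrows the textbook quantisation figure for an ideal N-bit converter (6.02N + 1.76 dB below a full-scale sine) purely as an illustrative seed level. The internal format of any particular software environment may differ, so the numbers are indicative only. Any non-zero residue is driven to full scale once the loop gain exceeds 0 dB; an exactly silent seed, or a gain of 0 dB or less, never gets there.

```python
import math
from typing import Optional


def quantisation_floor_dbfs(bits: int) -> float:
    """Ideal N-bit quantisation noise relative to a full-scale sine: -(6.02N + 1.76) dB."""
    return -(6.02 * bits + 1.76)


def passes_to_full_scale(seed_dbfs: float, loop_gain_db: float,
                         ceiling_dbfs: float = 0.0) -> Optional[int]:
    """Count trips round an idealised feedback loop until the seed reaches the ceiling.

    Returns None when the seed can never get there: a loop gain of 0 dB or less,
    or an exactly silent seed (minus infinity dBFS, i.e. amplitude 0.0).
    """
    amplitude = 10 ** (seed_dbfs / 20)   # dBFS -> linear amplitude (full scale = 1.0)
    gain = 10 ** (loop_gain_db / 20)     # dB per pass -> linear multiplier
    ceiling = 10 ** (ceiling_dbfs / 20)
    if amplitude <= 0.0 or gain <= 1.0:
        return None
    passes = 0
    while amplitude < ceiling:
        amplitude = min(amplitude * gain, ceiling)  # the ceiling behaves like a limiter
        passes += 1
    return passes


floor16 = quantisation_floor_dbfs(16)
print(round(floor16, 2))                          # -98.08
print(passes_to_full_scale(floor16, 1.0))        # 99
print(passes_to_full_scale(float("-inf"), 1.0))  # None
```

With an assumed +1 dB per pass, a residue near the 16-bit floor reaches full scale in under a hundred trips round the loop; the loop delay then sets how long that takes in seconds.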

An important constraint on existing “no source” mixer systems is their bulk and fixed topology. Even with a patch bay, changes to the circuit are relatively constrained. From the outset, NISE was designed to circumvent these limitations in order to provide a compact, mobile and reconfigurable performance platform. The implementation comprises a laptop, a small MIDI controller and suitable software. 1[1. A tangential benefit for those concerned about renewable resources is the substantial reduction in power consumption. The entire rig can run off the laptop battery, which may even be solar powered.] This modest setup is not simply a matter of convenience. Ease of structural modification was considered a requirement for this project in order to facilitate fluid improvisation on various scales. A hardware mixer allows improvising within a given circuit; NISE facilitates rapid comparison of one such circuit with another, improvising across structures.

Besides the practical and æsthetic goals, NISE was designed to investigate practical implementations of second-order cybernetics and autopoiesis, concepts explained below.

First, a brief word about terminology. The term “no source” has often been used to describe the systems under consideration, though this is technically inaccurate. There is indeed a source for the sounds, this being the inherent circuit noise. Nakamura prefers No Input Mixing Board (NIMB) and it is in line with this precedent that the name No Input Software Environment was adopted. As a bonus, the acronym can be pronounced “nice.”

Cybernetics and Autopoiesis

Cybernetics is the study of regulatory systems, both biotic and abiotic. It takes as axiomatic the presence of circular causal relationships: feedback networks, in other words. It was initiated by the publication in 1948 of Norbert Wiener’s Cybernetics: Or Control and Communication in the Animal and the Machine. Though radical and far-reaching when initially proposed, the concepts of cybernetics were rapidly adopted into many disciplines, so much so that in the second edition (1961) of Cybernetics, Wiener could write: “… the chief danger against which I must guard is that the book may seem trite and commonplace.”

In particular, cybernetics has been important to the practice of electroacoustics. Consider Jeff Pressing’s survey of extended, reconfigured and interactive instruments. He defines the last of these categories as “one that directly and variably influences the production of music by a performer” (Pressing 1990, 20). After describing computers in the roles of “variation generators”, “synthetic accompanist” and “musical director”, he reserves a fourth, catch-all category, “multifunctional environments”, for “learning, parallel processing, neural nets” and other systems that might introduce unpredictability (Ibid., 23). This catalogue demonstrates that the principles of first-order cybernetics, those concerning simple feedback loops between agents such as performer and machine, have long been integrated into electronic musical practice.

The same is not true of second-order cybernetics, which applied the existing models of control and communication to the problem of cognition itself. The genesis of this “cybernetics of cybernetics” is dated by Bruce Clarke and Mark Hansen (2009) to Heinz von Foerster’s 1974 talk, “On Constructing a Reality.” Starting with a logical model of nerves and networks of nerves, von Foerster derives some unexpected results concerning “the postulate of cognitive homeostasis”:

The nervous system is organized (or organizes itself) so that it computes a stable reality.

This postulate stipulates “autonomy,” that is, “self-regulation,” for every living organism. Since the semantic structure of nouns with the prefix self- becomes more transparent when this prefix is replaced by the noun, autonomy becomes synonymous with regulation of regulation. (von Foerster 2003a, 225–226)

To illustrate this point, he presents a drawing of a hat-wearing man who carries in his mind a representation of his world. This representation includes himself and other entities he acknowledges as similar to himself; some are even hat-wearing men. These entities have inside themselves similar descriptions of their world. Accordingly, von Foerster posits that reality is based on the overlapping environment variously computed by its inhabitants. 2[2. Here, computation and cognition are considered synonymous.] This is encapsulated in the pithy phrase: “I am the observed relation between myself and observing myself” (von Foerster 2003b, 257).

A parallel line of thought was developed in biology by Humberto Maturana and Francisco Varela (1973), who were dissatisfied with the normative definitions of life, stated in terms of genetic reproduction and evolutionary competition. Their radical revision defines life as a homeostatic system that self-produces and self-defines its own ongoing processes in a cybernetic cycle. This “auto-self-creation” they termed autopoiesis (Maturana 1980, xvii). The canonical example is the eukaryotic cell. Through a series of autocatalytic reactions, the nucleus, organelles and other structures that comprise this entity produce the very components needed to regenerate those same structures. Contrast this with an aircraft factory, a typical allopoietic system, which takes in raw materials to produce structures of one type (aircraft) using structures of radically different types: assembly line workers, lathes, production quotas… but not aircraft.

Extending this model to the relationships between entities, Maturana explains societal space as being generated between autopoietic machines, in exactly the same way that von Foerster’s hat-wearing men generate their communities. According to Maturana, a “collection of autopoietic systems” interacting with each other in an integrated system “is indistinguishable from a natural social system” (Ibid., xxiv).

Correct application of second-order cybernetics to music is rare in the literature. Christina Dunbar-Hester attempted this when she stated that “most of the applications of cybernetics by musicians, composers, and so on, are concerned with self-making and the interplay of agency between composer, audience, machine, and the musical piece or performance itself and thus resemble Hayles’ second-wave cybernetics” (Dunbar-Hester 2010, 116). But this conclusion is based on a basic misunderstanding of Maturana (Hayles 1994). If “composer, audience, machine” are the productive components of the system and music is the result, the system, at least at this scale of observation, is not autopoietic but allopoietic.

David Borgo is a notable exception. He has usefully described a musical collective as a relationship of autonomous structures generating order out of chaos, increasing local complexity and structure within a consensual domain.

From an autopoietic perspective, intelligence is manifest in the richness and flexibility of an organism’s structural coupling. Maturana and Varela have also broadly re-envisioned communication, not as a transmission of information, but as a coordination of behavior that is determined not by any specific or external meaning but by the dynamics of structural coupling. (Borgo 2002, 15)

This formulation captures emergent properties of an improvising group without recourse to causal explanations or hierarchical models, both of which tend to reduce improvisation to a mechanistic process that is quite unrecognisable to actual practitioners. A more detailed discussion of this point, along with a more extensive consideration of improvisation in general, is provided elsewhere (Parmar 2011a).

Implementation

NISE could well have been implemented in any application that follows the paradigm of the modular synthesizer, in which different building blocks, each with a simple, contained function, can be connected together to form structures of ever-increasing complexity. Reaktor 5, a software package developed by Native Instruments of Berlin, was chosen for this research. Reaktor has certain advantages when compared to alternatives: a large library of pre-built components, ease of reconfiguring these dynamically (in real time, as the circuit continues operating), a customisable user interface, straightforward MIDI and OSC assignments (allowing connection to performance hardware) and a strong developer community.
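
The modular paradigm can be illustrated in a few lines: simple blocks, each with one contained function, wired together into larger units that can themselves be reused as blocks. This is a toy sketch in Python, not Reaktor's architecture; the names `Block`, `chain`, `gain` and `clip` are hypothetical, and real modules also carry state, multiple ports and control inputs.

```python
from typing import Callable

# Hypothetical toy model: a "block" takes one audio sample and returns one.
Block = Callable[[float], float]


def chain(*blocks: Block) -> Block:
    """Wire blocks in series. The result is itself a Block, so chains nest like macros."""
    def run(x: float) -> float:
        for block in blocks:
            x = block(x)
        return x
    return run


def gain(g: float) -> Block:
    return lambda x: g * x


def clip(limit: float) -> Block:
    return lambda x: max(-limit, min(limit, x))


inner = chain(gain(2.0), clip(1.0))  # a small "macro"
patch = chain(inner, gain(0.5))      # the macro nested inside a larger structure
print(patch(0.2))  # 0.2
print(patch(0.8))  # 0.5
```

Because a chain of blocks is indistinguishable from a single block, nesting can continue to any depth, which is the property the modular paradigm relies on.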

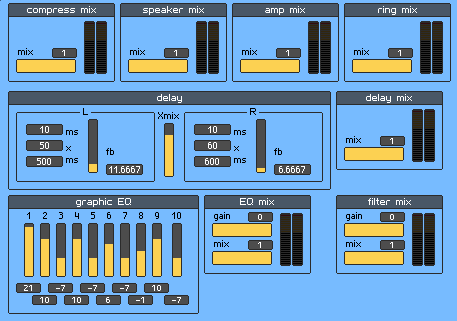

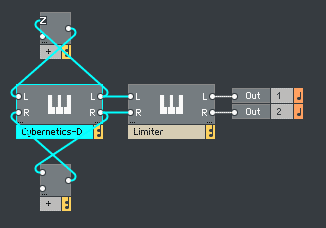

In Reaktor, a complete circuit is an “instrument”. This is displayed with an interface panel that may be simple and functional (Fig. 1) or made as pretty and obscure as one might wish, using various bitmaps and other graphical niceties. The structure view reveals the individual building blocks (“macros”) of an instrument, which can be nested to an arbitrary level. Figure 2 shows the top-level structure for the same NISE implementation. The main workings are contained within the Cybernetics-D macro. The outputs from this are routed to the inputs, forming the main feedback loop. The audio result exits the system to stereo outputs, preceded by a limiter. This final component is critically important as hearing protection.
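
The top-level topology (a processing macro whose output returns to its input, with a limiter on the outbound signal only) can be sketched as a per-sample loop. This is an assumption-laden illustration, not the NISE patch: the macro is replaced by a hypothetical `process` function (1.5x gain into tanh saturation), the loop runs through an assumed 64-sample delay, a tiny random signal stands in for the circuit's noise floor, and the limiter is reduced to a hard clip, whereas a production limiter applies gain reduction with attack and release.

```python
import math
import random
from collections import deque
from typing import Callable, List


def run_feedback_loop(process: Callable[[float], float], n_samples: int,
                      delay: int = 64, noise_level: float = 1e-5,
                      ceiling: float = 0.5, seed: int = 1) -> List[float]:
    """Route a processor's output back to its input through a delay line.

    The loop starts in digital silence; a tiny random signal stands in for the
    noise floor. The output limiter (here a hard clip) sits outside the loop,
    on the signal sent to the speakers.
    """
    rng = random.Random(seed)
    loop = deque([0.0] * delay, maxlen=delay)  # loop[0] is the output from `delay` samples ago
    out = []
    for _ in range(n_samples):
        x = loop[0] + rng.uniform(-noise_level, noise_level)
        y = process(x)
        loop.append(y)  # a full deque drops loop[0] as the new sample enters
        out.append(max(-ceiling, min(ceiling, y)))  # hearing protection on the way out
    return out


# Hypothetical stand-in for the Cybernetics-D macro: 1.5x gain into tanh saturation.
signal = run_feedback_loop(lambda x: math.tanh(1.5 * x), n_samples=20000)
print(max(abs(s) for s in signal[:64]) < 1e-4)  # True: still near silence
print(abs(signal[-1]))                          # 0.5: grown to the limiter ceiling
```

Inside the loop the saturation, not the limiter, keeps the signal bounded; the limiter only guarantees that whatever the loop does, the listener never hears more than the ceiling.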

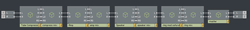

Inside the Cybernetics-D macro is a chain of effects from input to output. These include a compressor, delay line, filter, graphic equaliser and amplifier saturation, among others. Each macro is followed by a mixer to allow control over both the direct, unmodified signal and the signal modified by that particular component; in other words, the dry/wet balance. A simplified view (with many components omitted for reasons of space) is shown in Figure 3. Such a circuit is only a starting point. Through practice it was discovered that additional feedback paths internal to each macro produced increasingly varied soundscapes. Successful experiments involved cross-fed delay lines (left to right channel and vice versa), filters and multiple output channels, enabling spatial distribution.
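
Two of the building blocks mentioned here, the dry/wet mixer that follows each macro and a cross-fed stereo delay, are small enough to sketch directly. These are generic textbook forms written for illustration, not extracted from the Reaktor patch; `dry_wet` and `CrossFedDelay` are hypothetical names, and the delay length and feedback amount in the usage example are arbitrary.

```python
from collections import deque
from typing import Callable, List, Tuple


def dry_wet(effect: Callable[[float], float], mix: float) -> Callable[[float], float]:
    """Blend the unmodified (dry) signal with the effect's output (wet).
    mix = 0.0 is fully dry, mix = 1.0 fully wet."""
    return lambda x: (1.0 - mix) * x + mix * effect(x)


class CrossFedDelay:
    """Stereo delay whose left output is fed back into the right line and vice versa."""

    def __init__(self, delay_samples: int, feedback: float) -> None:
        self.left = deque([0.0] * delay_samples, maxlen=delay_samples)
        self.right = deque([0.0] * delay_samples, maxlen=delay_samples)
        self.feedback = feedback

    def process(self, in_l: float, in_r: float) -> Tuple[float, float]:
        out_l, out_r = self.left[0], self.right[0]  # samples written `delay_samples` ago
        self.left.append(in_l + self.feedback * out_r)   # right output crosses to the left line
        self.right.append(in_r + self.feedback * out_l)  # left output crosses to the right line
        return out_l, out_r


blended = dry_wet(lambda x: -x, mix=0.25)  # a quarter of an inverted copy mixed in
print(blended(1.0))  # 0.5

# A single impulse in the left channel bounces between channels, halving each time.
delay = CrossFedDelay(delay_samples=3, feedback=0.5)
outs: List[Tuple[float, float]] = [delay.process(1.0 if n == 0 else 0.0, 0.0) for n in range(10)]
print(outs[3], outs[6], outs[9])  # (1.0, 0.0) (0.0, 0.5) (0.25, 0.0)
```

With a feedback amount below 1.0 the echoes die away; it is the surrounding loop gain in the full circuit that keeps such patterns alive.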

Each circuit has its own personality, based on the emergent properties of the components operating in combination. The sonic result of NISE can indeed be “nice”: full of slow, pulsing exchanges of rhythmic information, like grasshoppers stridulating at night. Or it can be “nasty”, as frequencies compete in the limited phase space, combining into beat frequencies and modulating each other to create ever-increasing sound densities. These possibilities demonstrate different admixtures of negative and positive feedback, of destructive and constructive interference. 3[3. For sound examples, refer to the author’s CD Avoidance Strategies (Parmar 2011b), which contains compositions created from live improvisations with NISE. The publisher, Stolen Mirror, provides for barrier-free listening through Bandcamp.]

The output of NISE is sensitive to initial conditions. Before activating the processor, the player can “seed” the patch by boosting particular frequency bands on the EQ or by setting certain initial delay times. During playback, small changes to the parameters might well result in unpredictable sonic output. This sensitivity to initial conditions and to precise parameter values is characteristic of non-linear dynamical systems; in NISE, non-linearity enters through components such as compression, amplifier saturation and limiting.
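
The defining property a non-linear component lacks is superposition: for a linear system, the response to two inputs added together equals the sum of the separate responses. The sketch below checks that property for a plain gain stage and for a saturation stage; the tanh curve and the drive of 2.0 are assumptions chosen for illustration, not the curve used in the NISE saturation macro. A single passing pair does not prove linearity, but a single failing pair does disprove it.

```python
import math
from typing import Callable


def obeys_superposition(f: Callable[[float], float], a: float, b: float,
                        tol: float = 1e-9) -> bool:
    """Check f(a + b) == f(a) + f(b) for one pair of inputs."""
    return abs(f(a + b) - (f(a) + f(b))) < tol


def fixed_gain(x: float) -> float:
    return 0.7 * x  # a plain amplifier: linear


def saturation(x: float) -> float:
    return math.tanh(2.0 * x)  # soft clipping (assumed tanh curve): non-linear


print(obeys_superposition(fixed_gain, 0.3, 0.4))  # True
print(obeys_superposition(saturation, 0.3, 0.4))  # False
```

Once such a stage sits inside a feedback loop, its output is fed back as input on every pass, so the departure from linearity compounds rather than staying a fixed distortion.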

Given the complexity of some of the NISE circuits, the question arises as to how “playable” the system is and how it compares in this regard to electronic circuits. This concern can be addressed by looking at the control hardware, expressiveness of the system and technical limitations such as latency.

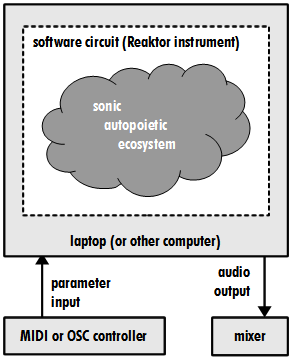

A typical hardware configuration utilised the Novation Nocturn, a compact MIDI controller with eight potentiometers (Fig. 4). These can be accessed in multiple banks (pages), each with their own mappings to the software. This allows quick access to a multitude of different parameters, all from a small form factor. Best results were achieved with subtle, incremental parameter changes. The ability to map the controls to suit each NISE implementation resulted in a responsive interface, with ergonomics considerably improved over those of a motley collection of physical mixers and outboard gear.
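
Banked mapping of a few physical controls onto many software parameters can be sketched as a lookup from (page, knob) to a parameter and its range. Everything specific here is hypothetical: the parameter names and ranges are illustrative rather than taken from NISE, and the controls are assumed to send absolute 7-bit MIDI controller values (0 to 127); the source does not say which MIDI messages the Nocturn was configured to send.

```python
from typing import List, Tuple

# Hypothetical layout: parameter names and ranges are illustrative, not taken from NISE.
Knob = Tuple[str, float, float]  # (parameter, minimum, maximum)
BANKS: List[List[Knob]] = [
    [("delay_time_ms", 1.0, 2000.0), ("delay_feedback", 0.0, 0.95), ("eq_2k_gain_db", -12.0, 12.0)],
    [("compressor_ratio", 1.0, 20.0), ("saturation_drive", 0.0, 1.0)],
]


def scale_cc(value: int, lo: float, hi: float) -> float:
    """Map an absolute 7-bit MIDI controller value (0 to 127) linearly onto [lo, hi]."""
    if not 0 <= value <= 127:
        raise ValueError("MIDI controller values run from 0 to 127")
    return lo + (hi - lo) * value / 127


class BankedController:
    """Eight physical knobs re-used across pages, each page with its own mapping."""

    KNOBS = 8

    def __init__(self, banks: List[List[Knob]]) -> None:
        if any(len(bank) > self.KNOBS for bank in banks):
            raise ValueError("each bank can map at most eight knobs")
        self.banks = banks
        self.page = 0

    def select_page(self, page: int) -> None:
        if not 0 <= page < len(self.banks):
            raise IndexError("no such page")
        self.page = page

    def turn(self, knob: int, value: int) -> Tuple[str, float]:
        name, lo, hi = self.banks[self.page][knob]
        return name, scale_cc(value, lo, hi)


ctrl = BankedController(BANKS)
print(ctrl.turn(0, 127))  # ('delay_time_ms', 2000.0)
ctrl.select_page(1)
print(ctrl.turn(0, 0))    # ('compressor_ratio', 1.0)
```

With 128 steps across a wide range such as 1 to 2000 ms, each step is roughly 16 ms, which is one practical reason subtle, incremental movements matter; narrower ranges per knob give finer control.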

Despite any ergonomic advantages, it should be noted that perfect musical control was not the goal of NISE. Were that so, it could have been implemented algorithmically, with inputs precisely selectable by numeric value (hence also being repeatable). Instead, the large number of parameters (three dozen or so) thwarted any attempt at complete control. It was physically impossible to manipulate that many potentiometers simultaneously, or even in rapid succession. Besides which, it was usually unclear how a change to a particular controller value might alter the resulting soundscape.

Rather than being in a position of control in relationship to their environment, the NISE performer is instead subject to a sonic ecosystem (Fig. 5) that evolves from the given circuit, initial parameters and any previous actions. Performer and instrument respond to each other in a state of improvisation. This situation corresponds to Pressing’s suggestion that unpredictability might be generated by “unrememberable complexity, rapid and complex changes in processing by another agent” (Pressing 1990, 23). NISE explicitly encompasses non-deterministic sound generation, refuting reductionist formulations of improvisation, as further described elsewhere (Parmar 2011a).

NISE as Cybernetic System

NISE is a family of circuits that share important traits with autocatalytic loops. Some of the important characteristics of this system can be described in relation to autopoietics. A fundamental aspect of the architecture must first be clarified. The system as a whole (computer, controller, software) is no different in structure from the interactive computer systems surveyed by Dunbar-Hester and Pressing and is thus a first-order cybernetic implementation. However, the sonic ecosystem that evolves within the software itself creates an autopoietic arena. In this milieu, sound generates sound, as will be explained.

Like Tudor’s Untitled, NISE implements a closed system with no explicit audio source, whether from samples, microphones, oscillators or other generators. All information that might be used to generate sound is embedded in the circuit design and its constraints; this information is constant within the scope of a particular NISE ensemble. Various parameters, including the admixtures of negative and positive feedback at different points, are under the control of the performer, opening NISE to improvisatory effects that may be conceptualised as energy transfers into the system. Hence, this model conforms to autopoietic systems that are “both environmentally open to energic exchange and operationally closed to informatic transfer” (Clarke and Hansen 2009, 9).

The internal state of the system is in flux. This state is defined by three dimensions (amplitude, frequency and time) under specific constraints. Frequency is bounded above by half the internal sampling rate (the Nyquist frequency) and, for listening purposes, lies within the idealised audible limits of 20 Hz and 20 kHz. Amplitudes are defined on a decibel (logarithmic) scale from minus infinity to 0 dB, an arbitrary maximum constrained by an output limiter. Time is limited on the macro scale by the duration of the performance and inflected on smaller scales by the periodicity of controller movements and delay times. The phase space defined by the combination of these dimensions possesses certain attractors, to borrow a term from chaos theory. These are regions in the phase space that the system tends to occupy, corresponding to recurring timbres or rhythmic properties of the output.
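
The amplitude and frequency bounds of this state space can be made concrete with two standard conversions: peak amplitude to decibels relative to full scale (where silence maps to minus infinity and full scale to 0 dBFS), and the Nyquist limit of half the sampling rate. The sample rates below are examples only, not the settings used in NISE, and `audible_band` is a hypothetical helper.

```python
import math
from typing import Tuple


def to_dbfs(amplitude: float) -> float:
    """Peak amplitude relative to full scale (1.0) in dBFS; silence is minus infinity."""
    a = abs(amplitude)
    return 20 * math.log10(a) if a > 0 else float("-inf")


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent: half the sampling rate."""
    return sample_rate_hz / 2


def audible_band(sample_rate_hz: float) -> Tuple[float, float]:
    """Idealised 20 Hz to 20 kHz hearing range, capped at the Nyquist frequency."""
    return 20.0, min(20000.0, nyquist_hz(sample_rate_hz))


print(to_dbfs(1.0))            # 0.0
print(round(to_dbfs(0.5), 2))  # -6.02
print(to_dbfs(0.0))            # -inf
print(nyquist_hz(44100))       # 22050.0
print(audible_band(32000))     # (20.0, 16000.0)
```

Halving the amplitude costs about 6 dB, so the logarithmic axis compresses an enormous range of linear amplitudes into a manageable span, with digital silence sitting infinitely far below it.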

Consider one simple case: an equal energy sound (white noise) is subject to a frequency curve that is flat except for a slight peak at 2 kHz. Positive feedback in the system will emphasise this peak, increasing the amplitude at this frequency to the maximal allowed value, over a time period determined by various other processes in the circuit. In a simple system, this would result in an undesirable feedback spike. But NISE circuits have been specifically built to implement certain catalytic effects, which add complexity to the signal. Specifically, delay lines smear feedback patterns over the temporal axis, providing more time for the performer (and other system effects) to respond to this frequency peak. Filters and frequency equalisation directly alter the frequency component, so that it too changes over time. Rather than spike suddenly, such frequency events tend in these circuits toward stability, traversing the phase space in the manner of a soliton, a self-reinforcing travelling wave. It is notable that NISE achieves these effects with limited resources and without explicit programming, relying instead on applied constraints and the emergent properties of the phase space.
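
The "simple system" described in this paragraph, where a slight spectral peak runs away under positive feedback, can be modelled band by band in decibels. This is a deliberately crude sketch under stated assumptions: four hypothetical analysis bands, a +0.5 dB advantage at 2 kHz (the text says only "slight"), and a limiter-like step that pulls the loudest band back to 0 dB after each pass. It leaves out the delay smearing, filter motion and performer responses that NISE uses to counteract exactly this behaviour.

```python
from typing import Dict


def loop_passes(gain_db: Dict[float, float], passes: int) -> Dict[float, float]:
    """Per-band level in dB, relative to the loudest band, after repeated loop passes.

    Starts from white noise (equal energy: 0 dB in every band). Each pass adds the
    band's loop gain; a limiter-like step then pulls the loudest band back to 0 dB.
    """
    levels = {band: 0.0 for band in gain_db}
    for _ in range(passes):
        for band in levels:
            levels[band] += gain_db[band]
        loudest = max(levels.values())
        for band in levels:
            levels[band] -= loudest
    return levels


# Flat response except a slight (assumed +0.5 dB) peak at 2 kHz.
curve = {500.0: 0.0, 1000.0: 0.0, 2000.0: 0.5, 4000.0: 0.0}
print(loop_passes(curve, 40))  # {500.0: -20.0, 1000.0: -20.0, 2000.0: 0.0, 4000.0: -20.0}
```

The advantage accumulates linearly in decibels (0.5 dB per pass gives 20 dB after 40 passes), which is geometric growth in amplitude; this is why, without the catalytic elements in the circuit, a barely audible bump ends as a single dominant tone.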

We can test the musical and experiential results of our experiment against the formulations of Maturana and Varela. The following definition is entirely congruent with NISE, remembering that the components in this case are sonic in nature:

[A]n autopoietic machine continuously generates and specifies its own organization through its operation as a system of production of its own components, and does this in an endless turnover of components under conditions of continuous perturbations and compensation of perturbations. (Maturana and Varela 1973, 78–79)

NISE as Ecosystem

Listening to the soundscapes created by NISE, it is difficult to avoid comparison with a rich ecosystem, such as a marsh or grassland. In each milieu, certain sonic entities appear, exist for a time, and then subside once more into the background. At times it seems that these entities are in discourse; at other times they are perceived as defining an independent niche in the ecosystem. Just as different species of insects or birds avoid masking by communicating over different frequency bands, so do sonic entities in NISE.

This is exactly the metaphor that Louis and Bebe Barron used to describe their own circuits. These simple electronic devices were explicitly built to behave like organisms, with a finite life and unpredictable behaviour. As Bebe Barron explains:

[A]ll of our circuits were based on mathematical equations so there was an organic rightness about it. When you listen to the last cut on the soundtrack album, you can hear the monster sounds. They have a unique attack — kind of a lumbering sound. The rhythm was absolutely organic to the circuit. In Forbidden Planet, when Morbius dies in the laboratory — that really was the Id/monster circuit dying at that point. And that worked especially well because Morbius was the monster: it was coming from his subconscious. That was the end of that circuit. It was the best circuit we ever had. We could never duplicate it. (Brockman 1992, 13)

The Barrons treated these lifeforms with a good deal of care and respect, very much like improvisers working within a fragile system, sensitive to small variations.

We always were innocents, with a sense of wonder and awe of the beauty coming from the circuits. We would just sit back and let them take over. We didn’t want to control them at all. We were in a very receptive state, like that of a child, working with our eyes and minds open, paying attention to the potential of each circuit, and we were simply amazed at what great things came out of those circuits. (Chasalow 1997)

The parallels between these working conditions and the position of the performer vis-à-vis NISE are striking. In both cases there is a tangible partnership between the circuit agent and the human agent. It is not possible for one to dominate the other.

Conclusion

The interaction paradigm that dominates electroacoustic music performance is defined in terms of a particular causal relationship to the environment: we make changes in the environment and in turn react to environmental events (Chadabe 1996, 44). This formulation construes the environment as something outside ourselves in the first instance and forces us to treat bridging this gap as a secondary concern. We separate flows and materials into arbitrary constructs, between which we build interfaces. This figure/ground metaphor reduces the complex of environmental dynamics to simple binary systems. As early as 1996, Chadabe considered this interface model “due for replacement.”

Performance models based on second-order cybernetics no longer fixate on interface, but rather take into account von Foerster’s assertion: “The environment as we perceive it is our invention” (von Foerster 2003a, 212). Rather than in terms of environment, autopoiesis defines life in terms of internal self-production, as a self-defining homeostatic system (Maturana and Varela 1973). This definition may be extended to social groupings of such entities (Maturana 1980, 16) and applied to the specific domain of musical improvisation (Borgo 2002). The autopoietic formulation allows us to examine emergent musical structures in ways that transcend previously applied dichotomies and arbitrary divisions between human activities (Parmar 2011a). The emphasis is now on process, rather than descriptions of qualities. Musical properties no longer require definition before the fact, but can instead appear as emergent phenomena, inherent in the milieu itself.

With this in mind, we can now paraphrase Maturana to achieve a working definition of NISE: The No Input Software Environment instantiates a collection of autopoietic entities, which interact with each other in an integrated system indistinguishable from a natural ecosystem. This conclusion demonstrates that autopoiesis is a useful tool in expanding our understanding of sonic ecology, beyond the concerns of preservation and documentation that dominate that field (Parmar 2012).

In continuing this research, there is considerable scope for further circuit design work, experimentation with alternative implementations (including performance controllers) and application of autopoietics to other performance systems. The power of a software modular synthesis environment is that prototypes may be created immediately in response to changing musical requirements (or even whims), enabling an efficient iterative design cycle. Such a development process does more than facilitate improvisation; it becomes part of improvisation itself.

Bibliography

Barron, Louis and Bebe Barron. Forbidden Planet. [Vinyl album]. Beverly Hills: Planet Records PR-001, 1976.

Borgo, David. “Synergy and Surrealestate: The orderly disorder of free improvisation.” Pacific Review of Ethnomusicology 10 (2002). Available online at http://www.ethnomusic.ucla.edu/pre/Vol1-10/Vol1-10pdf/PREvol10.pdf [Last accessed 9 February 2016]

Brockman, Jane. “The First Electronic Filmscore — Forbidden Planet: A conversation with Bebe Barron.” The Score 8/3 (Fall/Winter 1992), pp. 5–13.

Chadabe, Joel. “The History of Electronic Music as a Reflection of Structural Paradigms.” Leonardo Music Journal 6 (1996), pp. 41–44.

Chasalow, Eric. “Bebe Barron 1997.” YouTube video (14:49) posted by “Eric Chasalow” on 12 June 2011. http://youtu.be/Zg_5Eb8coTU [Last accessed 9 February 2016]

Clarke, Bruce and Mark B.N. Hansen. “Neocybernetic Emergence.” In Emergence and Embodiment: New essays on second-order systems theory. Edited by Bruce Clarke and Mark B.N. Hansen. Durham NC: Duke University Press, 2009, pp. 1–25.

Dunbar-Hester, Christina. “Listening to Cybernetics: Music, machines, and nervous systems, 1950–1980.” Science, Technology, & Human Values 35/1 (16 February 2010), pp. 113–139. Available online at http://sth.sagepub.com/content/35/1/113 [Last accessed 9 February 2016]

Hayles, N. Katherine. “Boundary Disputes: Homeostasis, reflexivity, and the foundations of cybernetics.” Configurations 2/3 (1994), pp. 441–467.

Maturana, Humberto. “Introduction.” In Autopoiesis and Cognition: The realization of the living. Dordrecht, Holland: D. Reidel Publishing, 1980, pp. xi–xxx.

Maturana, Humberto and Francisco Varela. “Autopoiesis: The organization of the living.” In Autopoiesis and Cognition: The realization of the living. Dordrecht, Holland: D. Reidel Publishing, 1973, pp. 63–135.

Parmar, Robin. “No Input Software: Cybernetics, improvisation and the machinic phylum.” ISSTA 2011. Presented at the 1st Irish Sound, Science and Technology Association Convocation (Limerick, Ireland: University of Limerick, 10–11 August 2011).

_____. Avoidance Strategies [CD]. Ireland: Stolen Mirror 2011C01, 2011.

_____. “‘The Garden of Adumbrations’: Reimagining environmental composition.” Organised Sound 17/3 (December 2012), pp. 202–210.

Pressing, Jeff. “Cybernetic Issues in Interactive Performance Systems.” Computer Music Journal 14/1 (Spring 1990), pp. 12–25.

Rogalsky, Matt. “David Tudor’s Virtual Focus.” Musicworks 73 (Winter 1999), pp. 20–23.

Silent Records. “David Myers” [Artist page]. 18 May 2011. http://www.silentrecords.us/html/david_myers.html [Last accessed 9 February 2016]

Stone, Susan. “The Barrons: Forgotten pioneers of electronic music.” Morning Edition, National Public Radio News, 7 February 2005 (updated 12 December 2012). Available online at http://www.npr.org/templates/story/story.php?storyId=4486840 [Last accessed 9 February 2016]

Tudor, David. Three Works for Live Electronics [CD liner notes]. New York: Lovely Music, 1996. Available online at http://www.dramonline.org/albums/david-tudor-three-works-for-live-electronics/notes [Last accessed 9 February 2016]

von Foerster, Heinz. “On Constructing a Reality.” In Understanding Understanding: Essays on cybernetics and cognition. New York: Springer-Verlag, 2003, pp. 211–228.

_____. “Notes on an Epistemology for Living Things.” In Understanding Understanding: Essays on cybernetics and cognition. New York: Springer-Verlag, 2003, pp. 247–260.

Wiener, Norbert. Cybernetics: Or Control and Communication in the Animal and the Machine. 2nd edition. Cambridge MA: MIT Press/Wiley, 1961.
