Exploring Real, Virtual and Augmented Worlds Through “Putney”, an Extended Reality

Held in Manchester from 24–26 October 2014, the first Sines & Squares Festival of Analogue Electronics and Modular Synthesis was an initiative of Richard Scott, Guest Editor for this issue of eContact! Some of the authors in this issue presented their work in the many concerts, conferences and master classes that comprised the festival, and articles based on those presentations are featured here. After an extremely enjoyable and successful first edition, the second edition is in planning for 18–20 November 2016. Sines & Squares 2014 was realised in collaboration with Ricardo Climent, Sam Weaver, students at NOVARS Research Centre at Manchester University and Islington Mill Studios.

Putney is an interactive sonic path-finding project featuring a virtual VCS3 synthesizer created with a graphics-physics-game engine, originally composed and designed by Ricardo Climent in 2014. The storyline is set in 1969, and its main character is “K”, an abandoned vernier pot at the centre of a sonic-centric immersive experience. Performers need to help K navigate her sonic journey (a sonic path) in search of “home”: the historical EMS studios on Putney Bridge Road in London.

Background

The Concept

The underlying concept behind Putney is the navigation of the intersections between the real, the virtual and the augmented through the medium of sound, with the aim of constructing a new “extended reality”. We begin with an introduction to retrograde cultures and the interference of cross-modalities, while also describing some of the original features of the VCS3. We then explore the connections between modularity, hybridisation and extended reality through interactive performance, from the perspectives of music composition and live game-audio.

Two Creative Outcomes

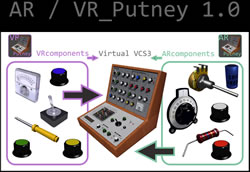

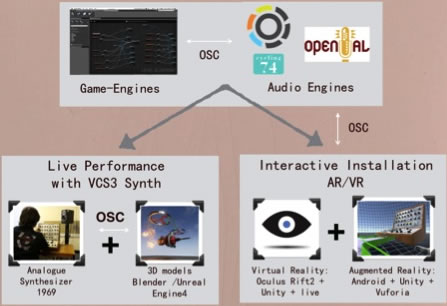

After the first presentation of the project by Ricardo Climent in 2014, the concept was further explored in the form of collaboration strands that produced two outcomes: Putney K and AR_VR_Putney. Both aimed to enhance the existing degree of musical expression and interactivity in the work.

Putney K, for live game-audio, developed the sonic path-finding concept in collaboration with Mark Pilkington, who incorporated the live performance of an original EMS VCS3 analogue synthesizer interacting with its virtual game-audio version, played live by Climent. The piece has also been performed as a duo with other live electronic musicians, such as the Mexican musician Rodrigo de León Garza (modular synthesizer).

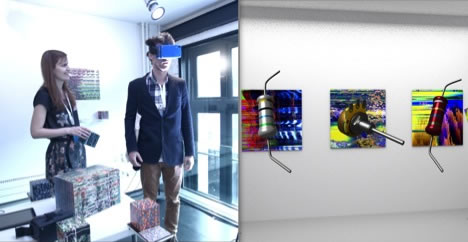

The second collaboration route, entitled AR_VR_Putney, took the form of an interactive media composition serving as a language and grammar for extended realities (a term that, here, explores the combination of augmented and virtual reality through sound). AR_VR_Putney was intended to be presented alongside its original concert version in the manner of a “song cycle”, although the two are performed in different venues of the same festival or event. AR_VR_Putney was created in partnership with architect Alena Mesárošová and visual artist Manuel Ferrer Hernández of the Manusamo & Bzika collective.

Retrograde Culture and the Interference of Cross-Modalities

The resurgence of a retrograde culture, such as the return to analogue systems and their unique sound, is in many respects driven by a sense of aural and cultural nostalgia at the edge of the digital era. By uncovering the secrets and forms of interaction of a recent past, the composer becomes a pseudo-archaeologist mediating a sense of place and time to create music.

With this project, a number of questions emerge, such as: Can the combination of analogue and digital technologies question the methods that composers and performers use to critically engage with the medium? And how will the hybridisation of the medium affect its embedded musical language?

The hybridisation of media produces an interference between modalities that opens new lines of investigation in music performance and composition. As a result, incorporating interactive audiovisual technology makes it possible to develop a system in which creative restrictions are exposed and explored. The fluidity between the instrument and its virtual simulation then allows the physical and the virtual to coexist in the minds of the performer and the listener. The digital æsthetic has brought vast changes to every aspect of contemporary music, culture and cultural economics (and to social theory) through the mediation of performance and improvisation; but what do we gain from its intersection with analogue æsthetics and culture? How can this merger shape the perceptual and critical reception of an audiovisual performance, and where is the added value? Putney K aims to address these questions across the piece’s alternative outcomes.

The Original VCS3 and the Modular Metaphor

The original VCS3 (also called Putney) is a semi-modular analogue synthesizer developed by Peter Zinovieff’s EMS company in London in 1969. Its electronic circuits were largely designed by David Cockerell, and the machine’s distinctive appearance was the work of electronic composer Tristram Cary. This iconic synthesizer consists of three solid-state oscillators, a ring modulator, an envelope shaper, a voltage-controlled filter, an internal spring reverb unit, a white noise generator, a patch bay matrix, audio inputs and outputs, and a joystick controller.

The hybridisation of the Putney system, combining 3D-designed electronic components in a virtual game engine environment with the physical presence of the actual VCS3 synthesizer, adds an “extended reality” to the fabric of the piece, in which sound is the link that unites the two worlds. In this state of historical replay, Putney pays homage to the EMS Studios in London, drawing on the design and sonic capabilities of the EMS VCS3 synthesizer to shape the structure of the compositional materials. Physical components and surface plates of the VCS3 were modelled in 3D to become avatars in an interactive game-audio experience. The game engine provides an empty canvas that introduces a range of sonic fantasies (aural paidia, in Roger Caillois’ typology) that contribute to visualising the sound through its modular circuitry.

The Modular Metaphor

Putney’s live performance consists of collecting electronic components of the instrument during game play. When the sonic elements of the composition emerge from the virtual assemblage of circuits, the whole becomes more than the sum of its parts; we have named this process the “modular metaphor”.

Modular design is based on the subdivision of a system into simple block elements called modules, and it is through the energy of movement, transmission and connection between these units that the metaphor is fulfilled. The design of the modular synthesizer, an assemblage of diverse parts each with a distinctive role in the larger system, contrasts with that of the contemporary digital computer, a single, all-inclusive machine capable of innumerable calculations.

Some strands of experimental music culture embrace the tradition of re-designing musical systems, which appeals to performers looking for alternative ways of interacting with instruments and technology. The search for that elusive “hidden quality” is a pursuit of machine musicianship and human interaction through re-invention, observation and re-evaluation. The visionary ways in which composers have used modular design are especially evident in hybrid systems that combine computer and analogue sound synthesis. Modular systems have the potential to bring the physical (tactile) and the cognitive (algorithmic) together in a solid union, and this potential informed the various realities of Putney.

“Putney K” — Exploring the Language of Hybridisation

Putney K explores the connections between the concepts of modularity, hybridisation and extended reality. It makes use of an audiovisual interactive performance duo as the sonic laboratory for the investigation of an extended language and grammar emerging from a number of intersections.

Putney K juxtaposes extremes of Milgram’s virtuality continuum through a convergence of spaces that combines sound perspective, localisation, psychoacoustics and spectacle. In this work, the audiovisual contract establishes many connections between the languages of the digital and the analogue. The difference between the two systems, digital and analogue, lies in their position along the reality-virtuality continuum, and their convergence provides a homomorphic experience in which the viewer becomes more engaged and immersed. The screen reveals a 3-dimensional virtual world, whilst the synthesizer exists both as a real-world object and as a mirror of its components, block functions and circuits. The two states embrace one another to provide an immersive and unique experience for both performer and listener.

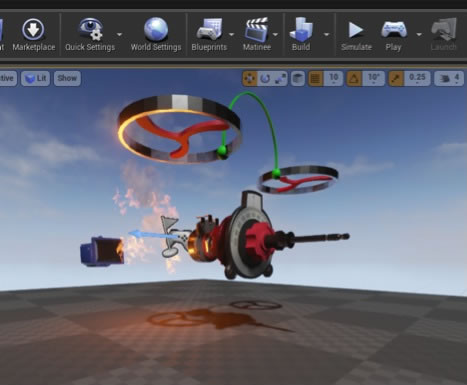

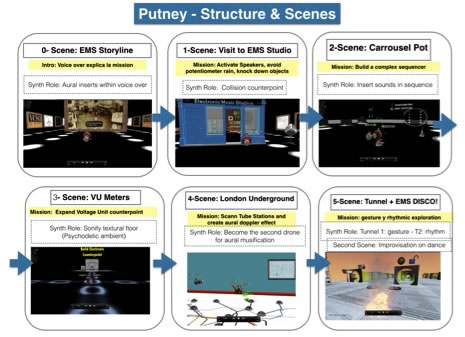

From a creative perspective, the composer uses a semi-abstracted, game-like narrative to portray the sonic journey of a lost VCS3 vernier potentiometer, the main character of the story (Fig. 1). It takes the listener on a voyage of aural discovery through the musical attributes of the instrument, its retro culture and its aural æsthetics. The live game-audio performance provides a visual, structured “score” with focused “missions” that need to be accomplished (Fig. 5). It consciously borrows from the theory of level design in computer games (Thompson, Berbank-Green and Cusworth, 2008), but its rewards are primarily “sonic”, providing the performers with “compositional esteem” as the performance progresses.

The interaction begins with a voice-over introducing the storyline and the challenges that Putney K, the flying potentiometer character, will encounter on her journey to a virtual representation of the EMS Studio (Scene 1), where she can explore the sounds of some of its original equipment, including a Synthi 100, reel-to-reel machines, interactive speakers with aural messages and a mock PDP-8 computer (which is really a replica of Manchester’s Baby, from 1948).[1. The world’s first stored-program computer. Officially known as the Manchester Small-Scale Experimental Machine (SSEM), Baby was built by Frederic C. Williams, Tom Kilburn and Geoff Tootill, and ran its first programme on 21 June 1948.] The studio façade seen in the piece was reconstructed after a visit to 277 Putney Bridge Road in London, the home of Zinovieff’s EMS Studio in the late 1960s and currently the site of a hairdressing salon. More musical challenges await the performers with the construction of a sequencer-like aural section (Scene 2). This section is activated by collecting sounds from virtual potentiometers arranged in a loop; these sounds were originally pre-recorded from Rob Hordijk’s “Benjolin” synth module, and they create a curious sequential interplay between the unreal and the live VCS3 synthesizer (or modular system, when performed with another instrument).

Scene 3, “VU Meters” (a voltage display), explores the erratic nature of the instrument, creating a counterpoint that is “baked” to the VU meter’s needle. The character activates the fast and unpredictable melodic contours via a proximity sensor (in the virtual space) and places them at the centre of the scene, creating a sonically rich electronic counterpoint when they sound together. This scores enough compositional esteem to proceed to the next section, while the musification of the dynamic floor, in an otherwise totally dark environment, is performed on the live synthesizer, cued by arm or body gestures. The fourth scene, exploring the “London Underground” map, consists of pre-composed sections that are dynamically performed. A small green scanner traces each London tube station, sonically “ping-pointing” each station until it reaches Putney Bridge station. The main character, K (in the game engine), and the real instrument portray the sounds of the two potentiometers in orbit: the former represents gestural detail and a 3-dimensional sustained sound shooter, the latter the Doppler effect.

Up until this scene, all the game and physics engine parts were realised in the Blender game engine, with the sound handled in OpenAL via a Python script and logic bricks. For the final double scene, the composer decided to use Unreal Engine 4. In the Tunnel sections (Fig. 6, Scene 5), the focus is on exploring sonic gesture through the collisions of pots and jumping capacitors. Once it is unlocked, the audio in the second tunnel focuses exclusively on rhythm. When the potentiometer finally reaches the EMS studios, she is full of joy and aural excitement, and the players in the virtual and real realms are free to improvise for the first time, without any rule or compositional constraint.

AR_VR_Putney — Extended Reality

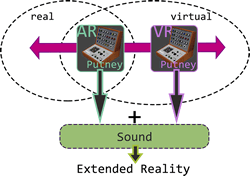

AR_VR_Putney is an interactive media composition exploring extensions of our perception of reality. It employs the same concept as Putney K: a 1969 VCS3 synthesizer used as the means to transcend the boundaries between virtual and augmented realities through sound.

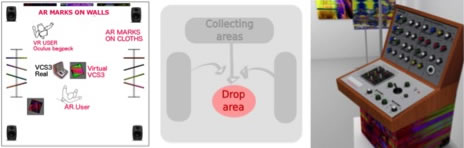

In the proposed ludic experience, visitors co-exist and interact in a game-like immersive environment to construct the vocabulary of a shared, sound-centric experience that triangulates between the real, the virtual and the enhanced. Virtual potentiometers, VU meters, patch-pins and electronic components spring from augmented reality markers positioned on the walls of an art gallery (Fig. 7), so that users can collect them and carry them over to assemble a virtual VCS3 in the middle of the room (Fig. 8). As visitors assemble the synthesizer parts, the purpose-composed mosaic of original VCS3 recordings is gradually organised as well. Sound and music composition thus integrate both experiences as extensions of reality.
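The assembly mechanic can be modelled, very roughly, as a set of delivered parts that progressively unlocks layers of the pre-composed mosaic. The following Python sketch is purely illustrative: the part names, the layer names and the one-part-one-layer mapping are hypothetical assumptions, not details of the actual AR_VR_Putney implementation, which was built with Unity3D and Max/MSP.

```python
from dataclasses import dataclass, field

# Hypothetical part names standing in for the virtual VCS3 components
# (potentiometers, VU meters, patch-pins) that visitors carry to the centre.
REQUIRED_PARTS = ("oscillator_pot", "filter_pot", "vu_meter", "patch_pin")

# Hypothetical mapping from each part to one layer of the pre-composed
# mosaic of VCS3 recordings; the real piece's layers are not documented here.
LAYER_FOR_PART = {
    "oscillator_pot": "drone_layer",
    "filter_pot": "filter_sweep_layer",
    "vu_meter": "noise_burst_layer",
    "patch_pin": "sequence_layer",
}


@dataclass
class VirtualSynth:
    """Tracks which parts have been assembled on the virtual VCS3."""

    assembled: set = field(default_factory=set)

    def attach(self, part: str) -> list:
        """Record a delivered part and return the layers that are now active."""
        if part not in LAYER_FOR_PART:
            raise ValueError(f"unknown part: {part}")
        self.assembled.add(part)
        return self.active_layers()

    def active_layers(self) -> list:
        # Keep the composed order of the mosaic, not the order of arrival.
        return [LAYER_FOR_PART[p] for p in REQUIRED_PARTS if p in self.assembled]

    def complete(self) -> bool:
        """True once every required part has been assembled."""
        return self.assembled == set(REQUIRED_PARTS)
```

For example, delivering `vu_meter` and then `oscillator_pot` leaves `drone_layer` and `noise_burst_layer` active, in composed order rather than arrival order; fixing the order in this way keeps the mosaic coherent however visitors happen to move around the gallery.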

AR_VR_Putney uses both augmented reality (AR) and virtual reality (VR) to modify the user’s spatial perception. Augmented reality enables us to perceive the digital information flowing through physical space: it creates a sensory interface that allows us to appreciate the virtual part hidden in physical space (Mesárošová and Ferrer Hernández 2015). Virtual reality, in this case, is a fully synthetic 3-dimensional environment that offers users a first-person experience.

Technologies that augment the human field of view with virtual overlays (e.g. AR markers for mobile devices) are rarely explored in combination with fully computer-simulated 3D environments (using head-mounted VR displays). In AR_VR_Putney, we have added a further dimension, the aural, to achieve an integrated experience. Sound is enacted from both the virtual and the augmented experience, enhancing and sharing visitors’ extensions of reality while bringing a focus on sound to a genre that was born predominantly visual.

The sound produced by user interaction in the virtual and augmented environments aims to dissolve the boundaries between them by introducing a new kind of reality: “extended reality” (ER). Extended reality opens new possibilities for implementing, in a game-engine environment, a non-linear narrative that depends entirely on user interaction.

The AR_VR_Putney installation offers users various kinds and degrees of immersion. Users in the physical environment act as spectators: they can perceive only the physical visual parts of the installation, and their immersion in the sound environment is non-interactive. For users in the AR environment, the installation is entirely interactive: they can move and make changes within the physical world that affect the virtual world and produce a new real-time sound composition. Their virtual immersion is partial, since they remain aware of the real environment that surrounds them.

Users in the VR environment achieve the highest degree of immersion because they interact within the virtual world: their perception of movement and of space depends entirely on the virtual space. Their interactions within the virtual space also affect the experience of users in the real environment, as they directly alter the sound composition heard there.

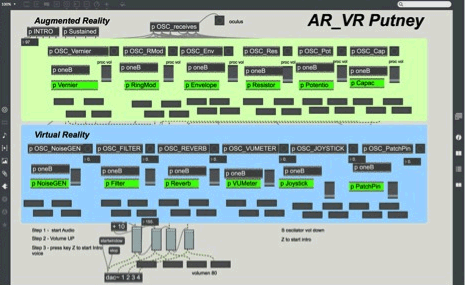

Both visual and audio technologies are employed to simulate the virtual and augmented synthesizer parts. On the visual side, the following are used:

- Augmented Reality: Unity3D and Vuforia using markers deployed for Android smartphones or tablets.

- Virtual Reality technology: an Oculus Rift 2 head-mounted display connected to a laptop running the virtual game environment, made with Blender and Unity3D software and original 3-dimensional virtual models of the VCS3.

And on the audio side, the following are used:

- A digital sound engine in Max/MSP, built from real VCS3 sound samples and their transformations, which visitors control through an interface created with Unity and OSC.

- A quadraphonic system (e.g., 4 Genelec 8030s).

Putney is an interactive sonic path-finding work that introduces the concept of “extended reality” by exploring the intersections between real, virtual and augmented reality. The latter two have been widely explored in isolation, but projects combining both are rare, especially when sound becomes the glue that amalgamates the technologies with the mission of driving our senses.

This paper also observes the resurgence of retrograde culture, in particular the use of analogue sound systems, while focusing on the exploration of the language and grammar of a 1969 EMS VCS3 synthesizer. Although this project relies heavily on physics-graphics-game-audio engines, often found in ludic contexts and on video game consoles, it recontextualises such technologies in a performative environment. For instance, the Climent / Pilkington duo (live game engines / live VCS3 synthesizer), performing at the Sines & Squares Festival, revives the tradition of the live concert, using the moving image as a dynamic score for the players’ interaction. It immerses audiences in the narrative of the reconstruction of the synthesizer as a way to unfold its musical language and grammar.

The discussion of modularity leads to the definition of the “modular metaphor”, which transcends the physicality of the electronic instrument during game play: as the VCS3 circuits are assembled, the whole becomes more than the sum of its parts through sound. The paper also briefly discusses modular design in the context of hybrid systems that include computer and analogue sound synthesis technologies.

The final AR_VR section, featuring Mesárošová’s input, describes the implementation of these technologies in detail, but above all it highlights the piece’s alternative outcomes across multiple reality modalities, in particular by contrasting the interactive AR_VR installation with the concert performance. This research may open doors to future work in which audience interaction could integrate AR_VR_Putney into the context of Putney’s live concert performance.

Bibliography

Arnheim, Rudolf. Art and Visual Perception: A Psychology of the Creative Eye. Berkeley: University of California Press, 1974.

Begault, Durand R. 3-D Sound for Virtual Reality and Multimedia. Boston: AP Professional, 1994.

Caillois, Roger. Man, Play and Games. University of Illinois Press, 1961.

Chion, Michel. Audio-Vision: Sound on Screen. Trans. Claudia Gorbman. New York NY: Columbia University Press, 1994.

Climent, Ricardo and Mark Pilkington. Putney (2015) Vimeo video “Putney Live Performance @ xCoAx Conference 2015. Ricardo Climent (Live Game-Audio Engines) and Mark Pilkington (1969 VCS3)” (8:21). Posted by “Wunderbar Lab Berlin” on 13 September 2015. http://vimeo.com/139128350 [Last accessed 31 October 2015].

Collins, Karen. Game Sound: An introduction to the history, theory, and practice of video game music and sound design. Cambridge MA: MIT Press, 2008.

Ince, Steve. Writing for Video Games. London: A.&C. Black, 2006.

Klug, Chris and Josiah Lebowitz. Interactive Storytelling for Video Games: A Player-centered approach to creating memorable characters and stories. Burlington MA: Focal Press, 2011.

Koster, Raph. A Theory of Fun for Game Design. Scottsdale AZ: Paraglyph, 2005.

Lecky-Thompson, Guy W. AI and Artificial Life in Video Games. Boston MA: Charles River Media, 2008.

Menard, Michelle. Game Development with Unity. Boston MA: Course Technology Cengage Learning, 2012.

Mesárošová, Alena and Manuel Ferrer Hernández. “Art behind the mind: Exploring new art forms by implementation of the electroencephalography.” Proceedings of the 2015 International Conference on Cyberworlds (Visby, Gotland, Sweden: Uppsala University, 7–9 October 2015).

Milgram, Paul and Fumio Kishino. “A Taxonomy of Mixed Reality Visual Displays.” IEICE Transactions on Information and Systems 77/12 (1994) pp. 1321–1329.

Mullen, Tony. Introducing Character Animation With Blender. Hoboken NJ and Chichester: John Wiley, 2011.

Murray, Janet H. Hamlet on the Holodeck: The future of narrative in cyberspace. Cambridge MA: The MIT Press, 1998.

Musburger, Robert B. An Introduction to Writing for Electronic Media: Scriptwriting essentials across the genres. Amsterdam/Boston: Focal Press, 2007.

Pecino, Ignacio and Ricardo Climent. “SonicMaps: Connecting the ritual of the concert hall with a locative audio urban experience.” ICMC 2013: “Idea”. Proceedings of the 39th International Computer Music Conference (Perth, Australia: Western Australian Academy of Performing Arts at Edith Cowan University, 11–17 August 2013). http://icmc2013.com.au

Slater, Mel and Martin Usoh. “An Experimental Exploration of Presence in Virtual Environments.” Unpublished, 2013. Available online at http://qmro.qmul.ac.uk/xmlui/handle/123456789/4705 [Last accessed 31 October 2015].

Steuer, Jonathan. “Defining Virtual Reality: Dimensions determining telepresence.” Journal of Communication 42/4 (December 1992), pp. 73–93. http://doi.org/10.1111/j.1460-2466.1992.tb00812.x [Last accessed 31 October 2015].

Sines & Squares Festival. http://sines-squares.org

Thompson, Jim, Barnaby Berbank-Green and Nic Cusworth. The Computer Game Design Course: Principles, practices and techniques for the aspiring game designer. London: Thames & Hudson, 2007.

_____. Game Design: Principles, practice, and techniques — the ultimate guide for the aspiring game designer. New York City: John Wiley & Sons, 2008.

Unreal Engine 4. http://www.unrealengine.com

VCS3 3D models designed by Ricardo Climent. http://vcs3.com [Last accessed 31 October 2015].
