Audiovisual Art

Perspectives on an indivisible entity

Inspired by Deleuze, particularly his writings on the audio-visual (Deleuze 1989), this paper introduces, from a historical perspective, diverse examples of creative approaches to the production of audiovisual works in which the visual and the sonic have the same level of importance. The main focus, though, is the distinction between two parallel but in some sense opposite approaches: on the one hand, those in which both elements are synchronised in a unity; on the other hand, those in which the disparate elements are not synchronised, but nevertheless work as a whole and individual entity. The latter is the artistic approach of the author of this paper; how such an æsthetic position works is also described.

According to Brian Evans (2005, 12), when the 20th century arrived, artists started to “consider their work in a musical way.” Since the start of the last century, there have been several approaches to the combination of visual and sonic elements. This can be observed in works by many composers and painters who have shown interest in the relationship between colour and music. Examples can be found in Scriabin and Kandinsky: the former included a voice for coloured light in the score of his musical composition Prometheus: The Poem of Fire, composed in 1910–11 (McDonnell 2007, 17); around 1911, as Michael T.H. Sadler observed, Kandinsky was “painting Music. That is to say, he has broken down the barrier between music and painting” (Kandinsky 1977, xix). By the middle of the 20th century, filmmakers such as John and James Whitney (around 1944) were influenced by Schoenberg’s serial principles, notably in their films Catalog and Arabesque (McDonnell 2007, 11).

Since the 1990s, the idea of seeing sound has spread rather quickly, mostly thanks to the advantages offered by new technologies and their lower prices, which today make it possible to edit a film or create sounds on a laptop without the need for a professional studio, something unthinkable until the end of the 1980s. Nowadays, composers have access to film and video tools, whilst film and video makers have access to sound and music software; as a consequence, audio-visual creations have become more and more frequent. As Zielinski observes:

Further, in the late 1980s, several software firms marketed programmes with [which] their young customer-kings of kilo- and megabytes could produce rough animated moving pictures themselves, provided that their computers were equipped with an accelerator, a “blitter”. With this step, the simulation of visuals gradually approached the industrial level which the simulation of music had already attained. The composition of synthetic worlds of sound from pre-existing set pieces had already reached the market for personal computer. (Zielinski 1999, 228)

A Brief Historical Overview

The dream of creating a visual music comparable to auditory music found its fulfilment in animated abstract films by artists such as Oskar Fischinger, Len Lye and Norman McLaren; but long before them, many people built instruments, usually called “color organs,” that would display modulated colored light in some kind of fluid fashion comparable to music. (Moritz 1997)

The preceding quote from Moritz introduces the possible origins of audio-visual compositions and visual music. Colour organs consisted of a keyboard for coloured light that could project colour in synchronicity with music; they were inspired by Louis-Bertrand Castel’s 18th-century Clavecin Oculaire and by Newton’s theory of correspondence between music and colour (Peacock 1988). Rimington arranged the keyboard for these instruments in a manner similar to that of any tempered keyboard instrument, with the spectrum also divided into similar intervals (Rimington 1912, 50). Rimington’s colour organ was used, according to Moritz (1997), for the premiere of Scriabin’s synæsthetic symphony Prometheus: The Poem of Fire in 1915. 1[1. Scriabin composed the piece from the beginning for the interaction with light in 1909/10, but the premiere of 1911 was a purely instrumental version because the lighting machine did not work at that time. Only in 1915 (March 20) was the work premiered as originally intended at Carnegie Hall (Peacock 1988, 401).]

In spite of the fact that there are many other important examples of the combination of image and sound, this paper focuses particularly on the moving image and specifically on the author’s audiovisual compositions described in the next section. Hence, according to this view, an appropriate definition of Audio-Visual theory can be found in the ElectroAcoustic Resource Site (EARS) as “The study of how moving images and sound interact in audio-visual media such as film, animation, video art, music video, television” (EARS 2013), which does not include cases such as those by Kandinsky and Klee because they are not related to the moving image.

In the 1920s, Oskar Fischinger began to experiment with the combination of music and visual art, influenced by the experimental filmmaker Ruttmann and particularly by Ruttmann’s Lichtspiel Opus 1 (1921). Fischinger’s series of Studies (1929–34), in which film and music are perfectly synchronised, are some of the most important experiments in the field. Another important example is An Optical Poem (1938), which was composed to the Second Hungarian Rhapsody by Franz Liszt and made using the traditional animation technique of stop motion. Among his most important contributions to the field were his writings and film experiments on Ornament Sound [Ornament Ton], which focused on creating films with synthetic sound by drawing directly on the film soundtrack (Fischinger 1932). These experiments could be considered one of the first approaches to what nowadays is called visual music 2[2. Visual music could be described as a non-narrative and imaginative mapping of a set of visuals and music (or vice versa) normally synchronised in real time. (Evans 2005; EARS)]. According to the Center for Visual Music (CVM), visual music refers to systems that create imaginative mappings, translating sound into a visual and vice versa (CVM 2012).

After Fischinger, several other filmmakers created representative and important works in the audio-visual field, such as John and James Whitney, Norman McLaren and William Moritz. According to Ikeshiro, the Whitney brothers produced one of the earliest examples of the integration of these two entities at a more formal and structural level in their Five Abstract Film Exercises, created between 1941 and 1944 (Ikeshiro 2012, 148).

As can be observed in these film exercises, the Whitney brothers extended Fischinger’s idea of connecting the visual directly to the aural by the use of two devices, one being “an optical printer and another that used a system of pendulums to photograph and control the light to expose areas of the soundtrack” (McDonnell 2007). This technique helped the brothers to create image and sounds at the same time in which “sound pattern was literally mirrored, figure for figure, in an image / sound dialog” (Ikeshiro 2012, 148).

Later, the Whitney brothers, in collaboration with Jerry Reed, developed a special software environment that synchronised graphics and music, the RDTD (Radius-Differential Theta Differential), which generated imagery from sound oscillations (McDonnell 2007, 12). One example of the use of this software can be seen in Permutations (1968), where a drum pattern is mirrored by a visual motif: “For Whitney it was the beginning of the future of his vision of digital harmony, one which he left for future artists to continue” (ACM Siggraph). Whitney defined the unification of colour and sound (“tone” in his words) as a “very special gift of computer technologies” (Ibid.).

From the above, it can be gathered that since the beginning of the 20th century, artists and composers have been interested in the synchronisation of the sonic and the visual elements. In most of the aforementioned examples, music and image were treated as two separate entities, with one of them being subordinated to the other instead of both having the same level of independence. There are, however, other approaches, such as that of Eisenstein, who, according to Evans, “was not interested in direct synchronisation of sound and image, but rather the ‘inner synchronisation between the tangible picture and the differently perceived sounds’” (Evans 2005, 22). This type of synchronisation can be considered as a search for a new type of image, in which neither of its two constitutive elements can be separated; the result is a new individual entity called “audiovisual”, in which those two perceptions, the visual and the sonic, interact, creating a relationship described by Chion as an audiovisual contract, a “reminder, that the audiovisual relationship is not natural, but a kind of symbolic contract that the audio-viewer enters into, agreeing to think of sound and image as forming a single entity” (Chion 1994, 216).

In the 1980s Deleuze explored the topic of the audiovisual in a rather deep and methodical manner:

What constitutes the audio-visual image is a disjunction, a dissociation of the visual and the sound; each heautonomous, but at the same time an incommensurable or “irrational” relation which connects them to each other, without forming a whole, without offering the least whole. (Deleuze 1989, 256)

In his writings, Deleuze argues that once an image becomes audiovisual, it does not break apart but, on the contrary, acquires a new consistency (Ibid., 252).

In recent years, the influence of the Whitney brothers has been noticeable in audiovisual performances in different environments, thanks to the variety of available software (sometimes working in real time) which generates visual effects from music or sound, and which typically reflects a predilection for the synchronisation of both entities. Jörg Scheller observes that starting in the 1920s, but more specifically from the 1990s onwards, visual music emerged as an art movement shared between different cultural spaces such as clubs, theatres, galleries and academia (Scheller 2011). Examples of this emergence can be found in the CAMP Festival, initiated by Thomas Maos and Fried Dähn in 1999, and in the media art gallery fluctuating images, created in 2004 by Cornelia and Holger Lund.

After this summary of audio-visual practices seen from a historical perspective, the next section focuses on an æsthetic approach in which the autonomy of each of the two elements is consciously maintained: the sonic element is not subordinated to the image, and the image is not subordinated to the sound. Hence, both elements possess the same degree of importance, but with the common goal of a complete and unique audiovisual experience, where optic and sonic images are presented simultaneously, albeit dissociated from one another. This creates a different kind of unity from that described above, a new type of image made by a very strong and indivisible relationship between those two different entities, following the aforementioned Deleuzian descriptions of the term “audiovisual”. This type of unified image is the goal of the approach I have used for most of my audiovisual works, for which details are given in the next section.

Convergence of Visual and Sound Elements: Two Approaches Based on Deleuze

This section describes two approaches taken by the author, in which optic and sonic images are treated as separate and individual entities. Hence, they are not related to one another: visual and auditory layers are combined in these compositions in counterpoint. Given that the sound is non-diegetic (meaning that it neither responds to nor belongs to the visuals), the audience is induced into a rather permanent state of doubt: it is in the power of the audience to decide at each moment which direction (visual or sonic) should be followed. In other words, two separate but nevertheless unified fields, into which the audience members can immerse themselves, are proposed: the sonic and the visual.

The following sub-sections describe two different approaches, based on how the point of convergence between the audio and the visual layers is achieved. In the first approach (montage in fixed-media compositions), the point of convergence is conceived during the composition of a fixed-media piece. The second approach (performances with interaction in real time) can be divided into two subcategories: the first places the point of convergence in the real-time performance itself, activated by the actions of the performer (playing a musical instrument) during the execution of the piece; the second situates the point of convergence in the emotional and physiological state of the performer, through the introduction of bio-feedback and bio-interfaces during the performance.

Audiovisual Montage in Fixed-Media Compositions

For these compositions, both elements are created simultaneously, albeit independently of each other, which guarantees that each of them maintains its individuality and inner flux when they are mixed together. This process does not exclude short moments of convergence of visual and audio elements at specific points in the composition. These carefully planned convergences are not very frequent in my work 3[3. More information on the works cited here as well as other works by the author can be found on her website.], but some of them can be found, for example, at 6:10 in Bewegung in Silber (Video 1) or at 4:10 in ZHONG (Video 2).

This occasional convergence does not, however, imply the presence of diegetic sound; it is simply a means for both images (optic and acoustic) to have a tension point at precisely the same moment, hence creating a unique and absolute entity. Following Chion, these points of convergence can be called “synch points”: “A point of synchronization, or synch point, is a salient moment of an audiovisual sequence during which a sound event and a visual event meet in synchrony” (Chion 1994, 58).

Audiovisual Performances with Interaction in Real Time

As mentioned above, performances with interaction in real time can be found in my work using different types of interaction: either live electronics performances 4[4. These performances are not necessarily audiovisual; the term is more frequently used in real-time performances with electronic and interactive instruments (Landy and Atkinson).] or bio interfaces / biofeedback.

Interaction Using Live Electronics

In this type of real-time performance, the synch points are created by the interconnection between music produced by a musical instrument and the visual element. For this type of performance, the aforementioned counterpoint of both elements is maintained, as the two layers are again separate and individual; the difference, however, is that the points of convergence are produced in a flexible way by a musician (normally performing with an acoustic instrument) in real time during the execution of the piece, in contrast to the works described above, in which the convergences were defined by the composer a priori and are hence fully fixed.

An example of how this works can be seen in the audiovisual performance Wooden Worlds (2010), created in cooperation with composer Javier A. Garavaglia. For this performance, the live viola functions as an accretion and mergence agent, unifying, in a sense, the audio and visual elements via musical passages; some of these passages were composed in detail while others were freely improvised during the performance (Garavaglia and Robles 2011). In this piece, there is a real-time interaction between the video and the viola, where two parameters from the viola — pitch and intensity — are sent to a Max patch to scale and trigger diverse video effects. These video effects are therefore controlled by the viola in real time and the viola performer can be considered as the pivot of the audiovisual convergence (Video 3).
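The mapping described above, in which two instrument parameters scale and trigger video effects, can be sketched in a few lines of Python. This is only an illustration under stated assumptions and not the Max patch used in Wooden Worlds: the function names, the `blur` and `flash` effect parameters, the 130–1000 Hz pitch range and the −20 dB trigger threshold are all hypothetical.

```python
from dataclasses import dataclass


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


@dataclass
class VideoState:
    blur: float   # hypothetical continuous effect amount, 0.0 to 1.0
    flash: bool   # hypothetical effect that is either triggered or not


def viola_to_video(pitch_hz, intensity_db, threshold_db=-20.0):
    """Derive video effect settings from one analysis frame of the instrument.

    Pitch is scaled into a continuous effect amount; intensity triggers an
    effect when it reaches the threshold. Ranges and threshold are assumptions.
    """
    blur = scale(pitch_hz, 130.0, 1000.0, 0.0, 1.0)
    flash = intensity_db >= threshold_db
    return VideoState(blur=blur, flash=flash)
```

In such a design the performer alone decides when the layers meet: a loud passage crosses the threshold and produces a synch point, while quiet playing leaves the video layer to follow its own course.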

Another example of a performer / instrument as a pivot of the audiovisual convergence is the visualisation of music in improvised performances, whose ethic, according to Tremblay, is “an art of composing in real time, more often than not within an ensemble context” (Tremblay 2012, 4). In many of these performances, the visual part is directly influenced by improvised music and based on a translation of sound into images, as in visual music, described above. The most important characteristic of these audiovisual improvisations is the synchronisation of both elements. In a performance at the 2013 CAMP Festival with the cellist Fried Dähn 5[5. Audiovisual improvisation for an electronic cello and video in real time, performed at the 2013 CAMP Festival at the Salon Suisse, during the 55th Venice Biennale. A video of the entire concert, “Camp Festival for Visual Music,” was uploaded to Vimeo by “Sandi Paucic” on 15 June 2013.], I used frequencies obtained from the cello to calculate the dimension and pixel position on the screen of each projected image; the result is a visualisation of music that reinforces the performer’s musical expression and creates a space that contains and amalgamates both elements.
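A frequency-to-geometry mapping of this kind might look as follows. The sketch is hypothetical throughout: the logarithmic scaling, the 65–1000 Hz range, the screen resolution and the choice to draw low notes larger and lower on the screen are assumptions made for illustration, not a description of the patch used in the performance.

```python
import math


def freq_to_image_geometry(freq_hz, screen_w=1920, screen_h=1080,
                           f_lo=65.0, f_hi=1000.0):
    """Return (x, y, size) in pixels for an image driven by one detected frequency.

    The frequency is clamped to [f_lo, f_hi] and placed on a logarithmic
    scale, so that equal musical intervals give equal steps on the screen.
    """
    f = min(max(freq_hz, f_lo), f_hi)
    t = math.log(f / f_lo) / math.log(f_hi / f_lo)   # 0.0 (low) to 1.0 (high)
    size = round((1.0 - t) * 400 + 50)               # low notes give larger images
    x = round(t * (screen_w - size))                 # left to right with rising pitch
    y = round((1.0 - t) * (screen_h - size))         # high notes nearer the top
    return x, y, size
```

Because every image parameter is a direct function of the detected frequency, this is an instance of the synchronised, visual-music approach rather than of the dissociated one.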

Audiovisual Real-Time Performances Using Biodata

The instrument-and-live-electronics type of convergence described in the previous section does not correspond to Deleuze’s concept of the audiovisual, and is therefore perhaps slightly off-topic here. It is mentioned only because it is nevertheless a part of my body of artistic work. In fact, my main interest lies in the creation of a new image with a new consistency, arising not only from the dissociation but also from an irrational relation of both elements, as Deleuze describes the concept of an audiovisual image. Hence, a substantial part of my research in interactive multimedia works is dedicated to the use of biointerfaces, based on biofeedback methods developed in the 1960s (Robles 2011, 421), and all of these works take Deleuze’s view as their main æsthetic goal.

In these performances, sound and visual elements are derived directly from the performer; the values of her diverse physiological parameters are captured using biotechnologies before being transmitted to a computer and translated into further visual and sonic elements. Video and sound effects created using Max are scaled and triggered by the values from diverse physiological parameters of the performer at the moment of measurement, such as muscle tension, brain activity or skin surface moisture levels. For these performances involving biofeedback techniques, the convergence point between video and sound is created by the performer’s emotional state during the performance: although video and sound are generated separately and independently from each other, any type of biointerface reveals — in the form of computer data — different psychological and/or physiological inner states of the performer (for example, the appearance of stress or relaxation), which translate into simultaneous modifications in both the audio and the video layers. In these cases, video and sound are connected to the performer, but not to each other. The main aim behind these performances is the creation of an audiovisual environment that reflects the performer’s inner state in real time, provoking in both the performer and the audience member a conscious perception of the body and its functions.
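The structure described above, in which both layers depend on the performer but not on each other, can be illustrated with a short Python sketch. All names, parameter ranges and thresholds are hypothetical, and the 0–1023 sensor range is an assumption (a typical 10-bit reading); the works themselves use Max.

```python
def normalise(value, lo, hi):
    """Map a raw sensor reading into the range 0.0 to 1.0, clamping at the ends."""
    if hi == lo:
        raise ValueError("sensor range must not be empty")
    return max(0.0, min(1.0, (value - lo) / (hi - lo)))


def video_layer(arousal):
    """Video parameters derived from the performer's state only (hypothetical)."""
    return {"zoom": 1.0 + 2.0 * arousal, "invert": arousal > 0.8}


def audio_layer(arousal):
    """Audio parameters derived from the performer's state only (hypothetical)."""
    return {"filter_cutoff_hz": 200.0 + 7800.0 * arousal,
            "grain_trigger": arousal > 0.6}


def process_reading(raw, lo=0.0, hi=1023.0):
    """Turn one biosensor reading into independent video and audio settings.

    Neither layer reads the other's output: both change at the same moment
    only because they share the same source, the performer.
    """
    arousal = normalise(raw, lo, hi)
    return video_layer(arousal), audio_layer(arousal)
```

Since the two mapping functions use different curves and thresholds, the layers do not mirror one another; they coincide only when the performer's state changes, which is where the convergence point lies.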

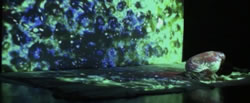

Three of my own works of this type, each using a different kind of bio-interface, are Seed/Tree, INsideOUT and SKIN 6[6. A detailed description of the works Seed/Tree and INsideOUT can be found in the author’s article “Creating Interactive Multimedia Works with Bio Data” (Robles 2011).], which make use of electromyography (EMG), electroencephalography (EEG) and galvanic skin response (GSR), respectively (Videos 5–7). SKIN (2012–13) is a new performance and interactive installation using a GSR interface, which measures the skin moisture of the performer (in the performance version) or of the visitor (in the installation version). The variations of these values are an indication of psychological or physiological arousal, such as the appearance of stress or relaxation. SKIN is a reflection on metamorphosis, in this case inspired by the natural process of moulting. The visual environment is created from close-up images of the performer’s skin recorded in real time, and the sound environment is transformed by the performer’s emotional state via skin moisture values (Fig. 1).

Conclusion

Since the first experiments combining image and sound in the moving image in the 1920s, the perfect synchronisation of both elements has been one of the aims most sought after by composers and visual artists. Approaches such as Eisenstein’s (around the 1930s), which combine these two elements as independent layers to produce a truly audiovisual image, do not seem to be frequent in audiovisual concerts nowadays; concepts of the audiovisual such as those proposed by Eisenstein and Deleuze are thus rarely applied in current audiovisual experimentation.

All of the different ways of composing audiovisual works discussed here seek to offer the audience a non-representative experience, which goes beyond the perception of similarities and synchronicity between both fields. The audience is confronted with a particular experience based on two independent entities, in which each one maintains its own values and characteristics but at the same time offers a new individual image as a result of the two independent entities. In this way, each audience member becomes an active participant, capable of creating her own personal and particular environment by connecting these two entities in a personal manner. The two entities propose a wide range of possibilities, connections and relationships between the visual and the aural. It becomes therefore a question of how we see and listen to these types of works.

Bibliography

ACM Siggraph. “The John Whitney Biography Page.” http://www.siggraph.org/artdesign/profile/whitney/rdtd.html

Chion, Michel. Audio-Vision: Sound on Screen. Trans. Claudia Gorbman. New York: Columbia University Press, 1994.

Deleuze, Gilles. Cinema 2: The Time-Image. Trans. Hugh Tomlinson and Robert Galeta. Minneapolis MN: University of Minnesota Press, 1989.

Evans, Brian. “Foundations of a Visual Music.” Computer Music Journal 29/4 (Winter 2005) “Visual Music,” pp. 11–24.

Fischinger, Oskar. “Klingende Ornamente.” Deutsche Allgemeine Zeitung, Kraft und Stoff 30, 28 July 1932. Selected statements in English translation available online at http://www.centerforvisualmusic.org/Fischinger/SoundOrnaments.htm [Last accessed 29 March 2014]

Garavaglia, Javier A. and Claudia Robles-Angel. “Wooden Worlds: An Audiovisual Performance with Multimedia Interaction in Real-time.” IMAC 2011 — The Unheard Avantgarde. Proceedings of the Interactive Media Arts Conference (Copenhagen, Denmark: 17–19 May 2011), pp. 54–59. Edited by Morten Søndergaard. http://vbn.aau.dk/files/73765571/IMAC_2011_proceedings.pdf

Ikeshiro, Ryo. “Audiovisual Harmony: The real-time audiovisualisation of a single data source in Construction in Zhuangzi.” Organised Sound 17/2 (August 2012) “Composing Motion: A visual music retrospective,” pp. 148–155.

Kandinsky, Wassily. Concerning the Spiritual in Art. Trans. Michael T.H. Sadler. New York: Dover Publications Inc., 1977.

Landy, Leigh and Simon Atkinson (Eds.). “Audio-Visual Theory.” EARS: ElectroAcoustic Resource Site. http://www.ears.dmu.ac.uk/spip.php?rubrique50

_____. “Live Electronics.” EARS: ElectroAcoustic Resource Site. http://www.ears.dmu.ac.uk/spip.php?rubrique283&debut_list=60

_____. “Visual Music.” EARS: ElectroAcoustic Resource Site. http://www.ears.dmu.ac.uk/spip.php?rubrique1402

McDonnell, Maura. “Visual Music.” Original 2007 article published in the Catalogue of the Visual Music Marathon (11 April 2009). Updated version in eContact! 15.4 — Videomusic: Overview of an Emerging Art Form / Vidéomusique : aperçu d’une pratique artistique émergente (April 2014). https://econtact.ca/15_4

Moritz, William. “The Dream of Color Music, and Machines That Made it Possible.” Animation World Magazine 2/1 (April 1997). http://www.awn.com/mag/issue2.1/articles/moritz2.1.html

Moritz, William and The Fischinger Archive. “Oskar Fischinger Biography.” CVM — Center for Visual Music. http://www.centerforvisualmusic.org/Fischinger/OFBio.htm

Peacock, Kenneth. “Instruments to Perform Color-music: Two Centuries of Technological Experimentation.” Leonardo 21/4 (February 1988), pp. 397–406.

Robles-Angel, Claudia. “Creating Interactive Multimedia Works with Bio Data.” NIME 2011. Proceedings of the 11th International Conference on New Interfaces for Musical Expression (Oslo, Norway: Department of Musicology, University of Oslo. 30 May – 1 June 2011), pp. 421–424. http://www.nime2011.org/proceedings/NIME2011_Proceedings.pdf

Rimington, Alexander Wallace. Colour-Music. The Art of Mobile Colour. London: Hutchinson & Co., 1912.

Scheller, Jörg. “Töne sehen, Bilder hören.” ZEIT Online 18 (24 May 2011). http://www.zeit.de/2011/18/KS-Visual-Music

Tomás, Lia. “The Mythical Time in Scriabin.” 5th Congress of IASS-AIS — Semiotics around the World: Synthesis in Diversity. Proceedings of the Fifth Congress of the International Association for Semiotic Studies (Berkeley: University of California Berkeley, 12–18 June 1994). Available online at http://users.unimi.it/~gpiana/dm4/dm4scrlt.htm [Last accessed 29 March 2014]

Tremblay, Pierre A. “Mixing the Immiscible: Improvisation within Fixed-Media Composition.” EMS 2012 — Meaning and Meaningfulness in Electroacoustic Music. Proceedings of the Electroacoustic Music Studies Network Conference (Stockholm: Royal College of Music, 11–15 June 2012). http://www.ems-network.org/ems12 Article available at http://www.ems-network.org/IMG/pdf_EMS12_tremblay.pdf

Zielinski, Siegfried. Audiovisions: Cinema and Television as Entr’actes in History. Trans. Gloria Custance. Amsterdam: Amsterdam University Press, 1999.
