On the Brink of (In)visibility

Granulation techniques in visual music

In a departure from my early Visual Music works, which utilised primarily abstract audio and video materials, the stylistic signage in my latest audiovisual compositions engages with mimesis, i.e. the intentional imitation of real-life sonic and visual anecdotes. When composers wish to escape the irresistible pull of cinematographic narrativity, they can rely on a wealth of strategies to progressively remove materials from their causal bonding, so they can be more flexibly arranged in meta-narratives: instead of tales narrated through sound and image, we construct audio-visual stories about stories. The fragmentation of real-life imagery into pointillistic elements seems a particularly attractive strategy, both for the richness of the image space that becomes attainable and for the conceptual and technical parallels that sonic artists can draw between such techniques and the known and trusted granular sound synthesis and processing procedures. Thus, as part of my recent compositional endeavours, I began an exploration of granular techniques for the design of audio-visual gestures and parallel streams of morphologically similar sonic and visual materials. This article reports on the results of such explorations and presents reflective considerations in view of possible developments of suitable software tools, which are currently lacking. Extracts from my latest work Dammtor, as well as other works from the repertoire, are included as examples of the techniques discussed in the text and serve the purpose of illustrating the artistic and idiomatic implications of granular techniques in the complex arena of audiovisual arts, which electroacoustic composers are exploring with increasing curiosity. 1[1. The author’s Dammtor (2013) is a visual music work that was produced in the Music Technology Laboratories at Keele University (UK). It is based on the poem of the same title by James Sheard (2010, 5) and utilises sounds and video materials linked to the words, images, metaphors and overall spirit of the text.]

Audiovisual Granulation — Conceptual Overview

Granulation is one of the most common techniques utilised in sound design, and it is often applied to mimetic sound materials, cutting recorded concrete sounds into small chunks (grains) which may, or may not, be individually processed, typically by means of spatialization, pitch transposition and more complex FFT data transformations. Snippets of mimetic sounds, lasting between 50 and 200 ms, may still retain, individually, a certain level of source bonding (Smalley 1997, 119) which, although progressively weaker, can still be surprisingly resilient, even when durations fall below such values. In electroacoustic culture, the pointillistic nature of granularized mimetic sounds, separated into short isolated snippets, often represents an element of stylistic signage recognised by composers and audiences alike. Thus, granulation can be used to shift the impact of mimetic materials away from their intrinsic anecdotal force field, taking advantage of the great flexibility the technique offers through the various parameters available to sound designers: grain duration, grain density, spatial scattering, pitch range, envelope shape, etc. Sounds can be torn apart and reconstructed suddenly or progressively, depending on the temporal profile of the aforementioned parameters. Barely audible clinks, as well as dense granular clouds, can retain as little or as much of their original causality as we wish.

The audio-visual designer / composer, especially that of electroacoustic provenance, will be interested in adopting the compositional paradigms, as well as the processing techniques, of granulation and applying them to both sounds and images. We will turn our attention to the visual aspect of granulation, assuming that the reader will be somewhat familiar with the theory and working practices of sound granulation 2[2. See, for example, Barry Truax’s article “Discovering Inner Complexity: Time-shifting and transposition with a real-time granulation technique,” in Computer Music Journal 18/2 (Summer 1994), an excerpt of which is available on his website as “Granulation of Sampled Sound.”], and that visual granulation can be theorised drawing some, albeit not all, conceptual parallels from the corresponding sound processing technique.

Visual Grains

Unlike their sonic cousins, visual grains possess both a temporal size, i.e. duration, and a geometrical (spatial) size, which refers to the area of the video frame occupied by the visible subject of the grain itself. Depending on the use of the geometrical frame, we can introduce a basic taxonomy of video granulation techniques, dividing them into two general categories.

In a simple extension of their sonic counterparts, visual grains can be created by taking short extracts from a clip of camera footage. Assuming conventional rates of 25 or 30 frames per second, typical in PAL or NTSC video standards respectively, the smallest unit we can address would be the time interval between two consecutive frames, i.e. 40 or 33 ms. The smallest video grain would be a single frame which, taken individually, would be a still picture, rather than a moving image. Intriguingly, at the aforementioned rates, a few frames would be sufficient to create micro-clips, which, although very short, could already provide the sensation of motion, albeit in short bursts. 3[3. Higher frame rates are being introduced as part of the Digital Cinema Initiative.]
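The frame-interval arithmetic above can be checked with a short Python sketch. The function names are illustrative only, and the nominal rate of 30 fps is taken from the text (broadcast NTSC actually runs at 30000/1001, roughly 29.97 fps).

```python
def frame_interval_ms(fps: float) -> float:
    """Duration of a single frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def grain_duration_ms(n_frames: int, fps: float) -> float:
    """Duration of a frame-based video grain made of n_frames consecutive frames."""
    return n_frames * frame_interval_ms(fps)


if __name__ == "__main__":
    # Nominal rates as quoted in the text (hypothetical labels for printing).
    for label, fps in (("PAL", 25.0), ("NTSC (nominal)", 30.0)):
        print(f"{label}: {frame_interval_ms(fps):.1f} ms per frame")
    # A grain of one to four frames at 25 fps.
    for n in range(1, 5):
        print(f"{n} frame(s) at 25 fps: {grain_duration_ms(n, 25.0):.0f} ms")
```

At 25 fps a grain of one to four frames therefore lasts between 40 and 160 ms, which falls within the range of grain durations commonly used in audio granulation.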

A more flexible approach to visual granulation can result from a different conceptualisation of video grain. Instead of a short burst created by the temporal juxtaposition of full-frames, we could imagine grains as visible particles occupying a (small) region of the frame.

These two different notions of visual grain lead to dissimilar practices and indeed contrasting results, which will be discussed in the following paragraphs.

Frame-Based Visual Granulation

Here the “video grain” is a short-duration snippet of video material where imagery occupies the whole area (or most of it) of the frames involved. The synthesis of novel visual material occurs as a result of temporally pointillistic, full-frame images, which can retain a strong bond with aspects of the visible, real-world subjects or landscape from where the original footage is taken. The full-frame grains can overlap and generate new visual amalgamations by means of different blending techniques applied to the overlapping grains. 4[4. In this case we are considering both a temporal and a geometrical frame overlap. See below for more details on grain overlap and blending techniques.]

Frame-based techniques are more likely to yield results that preserve the mimetic content of the original footage, although the latter can be disguised by means of filtering techniques, zoom close-ups, or, with even more dramatic results, compositing two or more overlapping video grains. The following excerpt from Dammtor illustrates the use of frame-based granulation, initially with very short grains, one to four frames each (40–160 ms), but then with longer chunks.

This excerpt is taken from the very first section of the work and features phrases of pointillistic audio and video fragments, which are arranged in short sequences, each with its own cadential contour. Therefore, the opening of the film already provides the viewer with clear idiomatic reference: this is a work in which the design and the organisation of the materials stand at the core of the discourse; in particular, listeners who are familiar with the electroacoustic repertoire will immediately recognise an extension into the audio-visual domain of archetypical sonic arts phraseology. The fragmented images represent the visual extensions of the pointillistic sonic grain, common in much of the electroacoustic repertoire. The temporal and causal misalignment between the audio and video fragments challenges cinematic narrativity in favour of a looser association between the materials and a plot-to-be. The attention of the viewer is, at least partially, removed from a possible story conveyed by the mimetic material, and progressively focused on the morphological treatment of the audible and visible gestures instead.

Frame-based granulation techniques are utilised in the work of experimental cinematographers associated with the film poets of the New American Cinema movement in the 1960s (Curtis 1971, 62–63).

In Stan Brakhage’s Mothlight (1963), we see a highly energetic montage of monochromatic images on a white luminous background. Flashes of close-ups of leaf structures, streamed together in an uninterrupted granular flow, are barely recognisable and seem to depict elegiacally a moth’s experience of life.

Particle-Based Visual Granulation

In particle-based granulation 5[5. The word particle is here used with a less infinitesimal acceptation than in other computer graphics applications.], the “video grain” is a snippet of video material, the shape of which can be as diverse as we can imagine, and can obviously be time varying. The grains have short temporal duration and occupy a limited, usually small, region of the frame.

The synthesis of novel visual material occurs as a result of the clustering of spatially and temporally pointillistic specks of images, each with its own duration, time-varying shape, trajectory and chromatic behaviour. These grains can overlap temporally (when they occur at the same time) and / or geometrically (when they occupy the same region of the frame). When the grains overlap both temporally and geometrically, they can generate an amalgamated grain, which can derive from various visual blending techniques. This second type of video granulation, even when applied to real-life imagery, is akin to many existing video particle-generators, such as those available in Adobe After Effects, Apple Motion and third-party plug-ins developed for those platforms. Particle granulation is likely to generate abstract visual material, as the recognisability of the subject is likely to become impaired by the geometrical and temporal segmentation of the images involved. In particular, the use of large numbers of video grains of small geometrical size, with high values of density, will tend to generate clusters that can be regarded as extensions to the visual domain of typical granular sound clouds.

Particle-based granulation can be seen in action in Dammtor, during the various morphing processes achieved through the manipulation of the writing hand footage. In the final section of the piece (Video 2), a continuous shift between reality and abstraction is achieved, visually, by means of granular de/re-construction of recognisable close-up footage of a hand writing on a piece of paper.

Emergence, by Andy Willy (2013), is constructed, almost in its entirety, by granulating footage of waves lapping against a shoreline at low tide (Video 3).

The image processing in Emergence was achieved by means of a complex series of manipulations carried out using various video and photographic editing tools (darkening, filtering, edge detection, compositing). Thus, the geometrical integrity of the original footage disintegrates into fluidly morphing filamentous fragments. The visual shapes, as well as their textural motions, emerge from the different patterns of aggregation of such fragments and are mostly unrecognisable, despite their real-life provenance.

If the visual grains themselves are computer-generated abstract imagery particles, as opposed to specks taken from real-life footage, granular techniques will obviously tend to generate abstract aggregates, which can acquire various degrees of fascinating textural complexity. In a passage from Bret Battey’s 2005 work Autarkeia Aggregatum (Video 4), the imagery is generated by means of up to 11,000 individual “grains”, each consisting of points in localised circular motion, leaving behind various types of visual trails or shades.

Similarly, the particle-based granulation techniques adopted in the author’s 2010 work Patah (Video 5) often involve the clustering of several sinuous “streaks”, each with subtly varying chromatic and spatial behaviour.

The above taxonomy is very crude; in many cases, audiovisual designers will want to operate in scenarios that fall somewhat in between these two polarities. It can be observed that frame-based techniques can be regarded as singularities of the particle-based universe, whereby the geometrical size of the grain is extended to occupy the whole area of the frame.

Visual Grain Density

Visual grain density, or frequency, will refer to the number of grains per second included in a certain sequence. In frame-based techniques, the density is an intuitive parameter, indicating, for instance, an average number of temporal chunks per second taken from the original clip(s). In particle-based techniques, the density is more elusive, as granular specks of visual materials can be clustered together with different degrees of temporal density in different areas of the frame. Indeed, the contour of the resulting clusters is achieved precisely by varying distributions of density across the frame; probability distributions can be used to instruct an algorithm on where, how and when to increase or decrease the density of particles generated in different areas.
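As a minimal sketch of how a probability distribution might steer particle density across the frame, the following Python fragment draws grain positions from a Gaussian centred on a chosen "hotspot" and counts grains per region. The function names, the normalised frame coordinates (0 to 1) and the choice of a Gaussian are all assumptions made for illustration, not a description of any existing tool.

```python
import random


def sample_grain_positions(n, centre, spread, seed=0):
    """Draw n grain positions (x, y in 0..1) clustered around `centre`.

    Positions are Gaussian-distributed with standard deviation `spread`;
    samples that fall outside the frame are rejected and redrawn.
    """
    rng = random.Random(seed)
    cx, cy = centre
    points = []
    while len(points) < n:
        x = rng.gauss(cx, spread)
        y = rng.gauss(cy, spread)
        if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0:
            points.append((x, y))
    return points


def density_grid(points, cells=4):
    """Count grains in each cell of a cells x cells grid laid over the frame."""
    grid = [[0] * cells for _ in range(cells)]
    for x, y in points:
        col = min(int(x * cells), cells - 1)
        row = min(int(y * cells), cells - 1)
        grid[row][col] += 1
    return grid


if __name__ == "__main__":
    pts = sample_grain_positions(500, centre=(0.25, 0.25), spread=0.1)
    for row in density_grid(pts):
        print(row)
```

Moving the centre or widening the spread over time would reshape the contour of the cluster, which is the kind of time-varying density control described above.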

Grain Overlap

In audio granular synthesis, overlapping grains can be combined using a variety of sophisticated techniques, such as convolutions or spectral averaging. However, in most cases, overlapping audio grains are merged by means of simple mixing, i.e. digitally summing the sample values, after suitable amplitude scaling. The notion of mixing assumes that two (or more) elements are combined, while still remaining somewhat individually recognisable. Such a paradigm can be extended to the field of digital image processing; in this case, the equivalent of pure mixing would be the overlap of frames, or visual snippets, by means of transparency, whereby two (or more) images can be seen, simultaneously, controlling the extent to which one can be seen through the other(s), depending on the respective values of the alpha-channel data.
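The parallel between audio mixing and transparency can be sketched in a few lines of Python. Both functions are illustrative assumptions: audio grains are plain lists of samples, images are nested lists of normalised grey values (0 to 1), and a single global alpha stands in for per-pixel alpha-channel data.

```python
def mix_audio(grain_a, grain_b, gain=0.5):
    """Mix two overlapping audio grains: sum the samples, then scale the amplitude."""
    return [gain * (a + b) for a, b in zip(grain_a, grain_b)]


def alpha_blend(top, bottom, alpha):
    """Blend two greyscale images with a global transparency value.

    alpha = 1.0 shows only the top image, alpha = 0.0 only the bottom one.
    """
    return [
        [alpha * t + (1.0 - alpha) * b for t, b in zip(row_top, row_bottom)]
        for row_top, row_bottom in zip(top, bottom)
    ]


if __name__ == "__main__":
    print(mix_audio([1.0, -1.0, 0.5], [1.0, 1.0, 0.5]))
    top = [[1.0, 1.0], [1.0, 1.0]]      # white grain
    bottom = [[0.0, 0.5], [0.5, 0.0]]   # darker grain underneath
    print(alpha_blend(top, bottom, alpha=0.25))
```

In both cases the result is a weighted sum of the inputs, which is why each source remains partly recognisable in the output.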

Moreover, video grains can be superimposed, with visually striking results, by means of compositing techniques other than alpha-channel transparency. Compositing modes, also known as blend modes, or blending modes, or mixing modes, can utilise both colour and brightness information on the overlapping video fragments, to determine what algorithm will be used to calculate the pixel values of the resulting composited image (Cabanier and Andronikos 2013, Chapter 10).

Grain Size and Duration

This aspect only requires a brief note regarding terminology: in audio granular techniques, the size refers to the temporal duration of the sound grain. The concept extends well to frame-based visual granulation, although we pointed out already that common frame rates in the video industry limit the minimum duration of video chunks to the interval between two consecutive frames (33 or 40 ms). However, we need to acknowledge that in particle-based video granulation, a video grain possesses both a temporal duration and a geometrical size. The two should, obviously, not be confused.

Spatial Scatter

As noted in the previous point, video grains need not occupy the whole geometric area of the frame. They can be given, individually, collectively, or in subsets, their own locus within the frame itself. Entire footage frames taken from within one or more videoclips can, potentially, be re-sized and spatially scattered across a newly constructed frame. This type of dispersion, however, is more common in particle-based granulation in the hands of abstract animators or visual effect designers. Dennis Miller’s Echoing Spaces (2009) features several examples of video granular techniques, in which time-varying spatial scatter plays an important role in determining textural flavour and gestural behaviour of the entire work. Audiovisual works that rely more on mimetic discourse will most likely make a less adventurous use of spatial scatter, in favour of visual grains that occupy a reasonably large and stable area within the frame.

Enveloping

The envelope of a sonic grain usually refers to the shape and duration of the attack and decay imposed on the chunks of audio extracted from the sound(s) to be granulated. A similar temporal envelope can be considered for visual grains. In frame-based granulation, for example, fades from or to black can be used instead of straight cuts. The duration of such fades can be compared to the duration of attack and decay in audio grains. If we wished to extend the concept of envelope shape from the audio to the visual domain, we could consider different ways in which transitions to or from black can be achieved. Most video editing platforms allow for various types of such transitions, operating on brightness (typical fades), colour (channel-map fades), or geometrical transformations (iris effect, spinning, page peel, etc.). Despite the short durations (a few frames) probably involved when enveloping full-frame video grains, these different transition techniques may provide different flavours to the resulting granular sequence.
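A brightness fade applied to a full-frame grain can be sketched as a list of per-frame gains, directly analogous to an attack/decay envelope on an audio grain. The function name and the linear ramp are assumptions chosen for simplicity; other envelope shapes would only change how the gains are computed.

```python
def fade_envelope(n_frames, attack, decay):
    """Per-frame brightness gains (0..1) for a video grain of n_frames frames.

    `attack` frames ramp up from black and `decay` frames ramp down to black,
    both linearly. attack = decay = 0 gives a straight cut (all gains 1.0).
    """
    gains = []
    for i in range(n_frames):
        # Gain imposed by the fade-in on frame i.
        fade_in = (i + 1) / (attack + 1) if i < attack else 1.0
        # Gain imposed by the fade-out, measured from the end of the grain.
        frames_left = n_frames - 1 - i
        fade_out = (frames_left + 1) / (decay + 1) if frames_left < decay else 1.0
        gains.append(min(fade_in, fade_out))
    return gains


if __name__ == "__main__":
    # A six-frame grain with two-frame fades in and out.
    print([round(g, 2) for g in fade_envelope(6, attack=2, decay=2)])
```

Multiplying every pixel of frame i by gains[i] would then produce the fade from and to black.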

Aside from temporal enveloping (transitions), we can utilise geometrical enveloping, defined as the type of contouring of a visual grain, be it a (quasi-) full-frame one, or a particle-like one. In the following bridge section from Dammtor (Video 7), the visual grains occupy almost the entire frame area, but are contoured, geometrically, using a feathered oval-shaped black matte.

Video Granulation Techniques in “Dammtor”

A variety of software tools offer sound designers opportunities to adapt audio granulation to the particular compositional needs of the project at hand. Plug-ins, Max patches and various stand-alone granular synthesis and processing applications can generate, even in real-time, spectromorphologically rich granular audio streams and clouds. Video granulation has no comparable range of dedicated software tools. In their absence, most of the video granulation processes utilised in Dammtor were constructed by chaining together an appropriate assortment of existing filter effects in Final Cut Pro 6[6. In particular, I am referring to Apple Final Cut Pro 7, but similar techniques can be developed using later versions of Final Cut, as well as other video editing suites.] or constructing complex particle-based video processing algorithms in ArtMatic, a modular graphics synthesizer.

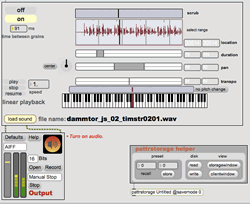

Early video processing experiments were triggered by the desire to replicate in the visual domain the type of pointillistic audio materials that had been previously generated using granulation techniques on recordings of voice actors reading and whispering stanzas from James Sheard’s poem (Audio 1).

Low values for audio grain durations (40–90 ms) and inter-grain time gap (60–100 ms) [Fig. 1] resulted in streams of vocal utterances that were then edited further, manipulated with various spectral transformations and finally arranged together with intelligible sections of the poem recordings (Audio 2).
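To make these parameter ranges concrete, the following Python sketch generates a schedule of grain onsets and durations. It assumes that each duration and each gap is drawn uniformly at random from the quoted ranges, and that the gap is the silence between the end of one grain and the start of the next; the granulator actually used for the piece may work differently.

```python
import random


def grain_schedule(total_ms, dur_range=(40.0, 90.0), gap_range=(60.0, 100.0), seed=0):
    """Return a list of (onset_ms, duration_ms) pairs filling total_ms.

    Durations and inter-grain gaps are drawn uniformly from the given ranges.
    """
    rng = random.Random(seed)
    grains = []
    onset = 0.0
    while True:
        duration = rng.uniform(*dur_range)
        if onset + duration > total_ms:
            break  # the next grain would run past the end of the stream
        grains.append((onset, duration))
        onset += duration + rng.uniform(*gap_range)
    return grains


if __name__ == "__main__":
    for onset, duration in grain_schedule(1000.0):
        print(f"grain at {onset:7.1f} ms, lasting {duration:5.1f} ms")
```

With these ranges each grain-plus-gap cycle lasts between 100 and 190 ms, so a two-second stream contains roughly eleven to twenty grains.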

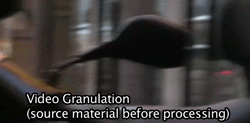

Video example No. 8 shows a summary of the real-life film footage used in the frame-based granulation processes in Dammtor, which include imagery associated with the words, metaphors and overall atmosphere evoked by the poem’s text. The raw video comprises original material filmed on location, as well as third-party archival footage collected elsewhere.

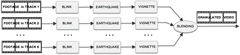

Grain Slicing

A technique akin to what I earlier described as frame-based video granulation was adopted for the design of contrapuntal visual streams, to be arranged with and against the sonic streams already available in the piece’s sound palette. The most obvious strategy took advantage of frame-level digital editing of the footage. In fact, it is possible (albeit tedious) to zoom into videoclips and separate them into short chunks, down to the smallest duration of one frame each. In an attempt to automate the slicing process and provide the granulated video with suitable stylistic signage, I adopted the strategy illustrated in Figure 2.

The flow of digital video signal goes from left to right and is divided into parallel processing streams, one for each of the video tracks utilised in the corresponding Final Cut project timeline (see screenshot in Fig. 3). In a first incarnation of the process, four video tracks hosted footage captured inside Dammtor train station (Hamburg, Germany), as well as material filmed with an intentionally shaken hand-held digital camcorder in the Padova city centre (Italy).

The bottom of Figure 4 shows the sequence timeline. On the top right of the image, the canvas window provides a preview of the processed video resulting from all the editing decisions incorporated in the timeline. The top left window contains an inspector that shows the video effects applied to the currently selected videoclip, along with the relevant parameter values. The “blink” filter essentially provides the equivalent of an automatic splicing of the video footage, alternating chunks of the videoclip (“on duration” in Fig. 4) with chunks of black video (“off duration” in Fig. 4). In the passage illustrated in Figure 4 the blink effect was automated, with increasingly longer “on” durations and increasingly shorter “off” durations, to achieve an effect of longer and longer video grains getting progressively closer, temporally speaking, to each other. 7[7. In line with the typical terminology historically adopted in video post-production, I will use the word “filter” to indicate the more general category of video effects, or video processing tools.]
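The automated on/off behaviour described here can be modelled, outside of any editing package, as a schedule of visible and black chunks. The Python sketch below is a hypothetical model rather than the Final Cut filter itself: it interpolates the “on” and “off” durations linearly, in frames, from a start value to an end value over the length of the passage.

```python
def blink_schedule(total_frames, on_start, on_end, off_start, off_end):
    """Return a list of (start_frame, on_frames, off_frames) grains.

    The "on" and "off" durations are interpolated linearly from their start
    to their end values according to the position of each grain in the passage.
    """
    grains = []
    t = 0
    while t < total_frames:
        progress = t / total_frames
        on = max(1, round(on_start + (on_end - on_start) * progress))
        off = max(0, round(off_start + (off_end - off_start) * progress))
        on = min(on, total_frames - t)  # clip the final grain to the passage length
        grains.append((t, on, off))
        t += on + off
    return grains


if __name__ == "__main__":
    # Grains grow from 1 to 8 frames while the black gaps shrink from 8 to 1.
    for start, on, off in blink_schedule(100, 1, 8, 8, 1):
        print(f"frame {start:3d}: {on} on, {off} off")
```

Printing the schedule shows the grains lengthening and moving closer together, which is the behaviour the keyframed blink effect was used to obtain.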

Scattering

The “earthquake” filter introduces an animated jittering displacement on the granulated footage, as if shaken by an earthquake. With low values for the twist, horizontal and vertical shake parameters, the effect can be no more than a subtle tremor, whilst with high values, such as those used in Dammtor, the quivering effect can be quite violent. The striking misplacement introduced by the earthquake effect functions as a conceptual parallel of the spatial scattering of audio grains within the stereophonic virtual sound stage. Furthermore, it achieves the additional stylistic effect of blurring the contour of the subjects in the frame, in a fashion similar to the semantic disintegration caused by the audio granulator applied to the recorded and whispered recitatives of the poem. Consequently, it contributes to strengthening the tension, in terms of expressive mood, introduced by the granulation process, now regarded as a compositional device, rather than merely as a design tool.
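A jitter of this kind can be approximated by drawing a random horizontal shift, vertical shift and twist for every frame. The Python sketch below is a guess at the general principle, not the algorithm of the Final Cut filter; the parameter names and the uniform distribution are assumptions.

```python
import random


def jitter_track(n_frames, max_h_px, max_v_px, max_twist_deg, seed=0):
    """Return one (dx, dy, twist) displacement per frame.

    Each value is drawn uniformly between minus and plus its maximum, so low
    maxima give a subtle tremor and high maxima a violent shake.
    """
    rng = random.Random(seed)
    return [
        (
            rng.uniform(-max_h_px, max_h_px),
            rng.uniform(-max_v_px, max_v_px),
            rng.uniform(-max_twist_deg, max_twist_deg),
        )
        for _ in range(n_frames)
    ]


if __name__ == "__main__":
    # One second of footage at 25 fps, with a fairly strong shake.
    for dx, dy, twist in jitter_track(25, 12.0, 8.0, 3.0)[:5]:
        print(f"shift ({dx:+6.2f}, {dy:+6.2f}) px, twist {twist:+5.2f} deg")
```

Giving each video track its own seed and maxima would make the streams shake independently of one another.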

Geometrical Enveloping

The vignette effect, with appropriate feathering of the frame periphery, was added in order to re-shape and smooth the geometrical contour of the granulated images. Figure 5 shows the result on a still frame from Dammtor. The values for the parameters size, falloff and blur amount determine the magnitude and the intensity of the feathering effect introduced by the vignette filter.
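A feathered oval matte of this kind can be sketched as a greyscale mask that is fully opaque at the centre and falls off to black towards the periphery. In the Python fragment below, size and falloff are hypothetical parameters loosely named after those mentioned above; how they map onto the controls of the Final Cut vignette filter is not known here, and the linear falloff is an assumption.

```python
import math


def oval_matte(width, height, size=0.8, falloff=0.2):
    """Return a height x width mask of values in 0..1.

    Pixels inside the oval of normalised radius `size` are fully visible (1.0);
    visibility then drops linearly to 0.0 over a band of width `falloff`.
    Using normalised coordinates makes the matte oval on non-square frames.
    """
    mask = []
    for row in range(height):
        line = []
        for col in range(width):
            # Pixel centre in normalised coordinates, from -1 to +1 on each axis.
            nx = (col + 0.5) / width * 2.0 - 1.0
            ny = (row + 0.5) / height * 2.0 - 1.0
            r = math.hypot(nx, ny)
            if r <= size:
                value = 1.0
            elif r >= size + falloff:
                value = 0.0
            else:
                value = 1.0 - (r - size) / falloff
            line.append(value)
        mask.append(line)
    return mask


if __name__ == "__main__":
    for line in oval_matte(16, 9):
        print("".join(" .:*#"[int(v * 4)] for v in line))
```

Multiplying each pixel of a grain by the corresponding mask value would contour the image against black, in the same way that a temporal envelope contours its duration.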

The impact on the enveloped material is that of a stylistic intent less crude than a simple cut, rather akin to the difference between enveloped attacks in audio regions compared to abrupt truncations of the corresponding sound material. Excerpts from Dammtor seen above (Vid. 1, Vid. 7) illustrate how geometrical enveloping can effectively ennoble a short chunk of video from a humble status of “b-cut” residue to a higher status of motion vignette.

Grain Overlapping

Each track’s effects chain in Figure 2 (above) produces a separate stream of granulated video. Before we discuss the results that can be achieved through the blend of these independent streams (far right in Fig. 2), we ought to reflect on the likely outcome of the effects utilised in each chain.

The slightly different values of the parameters in the effects used in the parallel video tracks cause a randomised misalignment on all morphological and temporal attributes of the processed streams. Firstly, the different keyframes used for the time-varying on/off values in the blink effect cause the grains to be sometimes in synchronisation, sometimes out of synch, sometimes partially in synch. Similarly, different values for the parameters of the earthquake effects cause the jittering behaviour of the separate streams to be unpredictably dissimilar. Most importantly, each of the individual overlapped video tracks is manipulating different film footage, with content wildly diverse in terms of subject, camera motion, zoom, panning, colour palette, brightness and contrast. All of the above variables contribute to give the process illustrated in Figures 2 and 3 sufficient complexity and consequent stochastic behaviour.
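The drifting in and out of synchronisation can be illustrated with a toy model in Python. It assumes four tracks with fixed (rather than keyframed) on/off durations, chosen arbitrarily for the example, and counts how many tracks are visible on each frame.

```python
# Hypothetical (on, off) durations in frames, one pair per video track.
TRACKS = [(2, 3), (3, 4), (1, 5), (4, 2)]


def active_tracks(frame, tracks=TRACKS):
    """Number of tracks whose grain is visible (i.e. in its "on" phase) at `frame`."""
    return sum(1 for on, off in tracks if frame % (on + off) < on)


if __name__ == "__main__":
    counts = [active_tracks(f) for f in range(50)]
    # One digit per frame: 4 means all grains coincide, 0 means a black frame.
    print("".join(str(c) for c in counts))
```

Even with these fixed values the number of simultaneous grains changes from frame to frame; time-varying durations, independent jitter and dissimilar footage add further layers of unpredictability.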

The separate video streams are “mixed” together to produce the final granular stream. The mixing procedure is not visible looking at the timelines and inspectors, because it is achieved by means of blending techniques applied on the four video tracks, which need to be selected for each of the clips inserted in the sequence (see Fig. 6), so they can be “seen through” each other in the unique way allowed by the particular blending algorithm chosen.

In Final Cut Pro 7 there are eleven blending modes available (or “composite” modes, in Final Cut parlance), each using different techniques to combine colour and brightness data of the overlapped clips to produce the final mix.

When compositing four or more tracks, the results can be rather diverse, depending on the particular interaction between colour and brightness data of the clips involved and depending as well on which blend mode is selected for every overlapping clip. In the design of the granular streams in Dammtor, hundreds of mode combinations were experimented with. The ones utilised in most situations were “Difference” and “Overlay”, although others were also adopted on occasion. The choice was dictated by æsthetic considerations, chiefly the desire to achieve imagery with specific degrees of recognisability and coherent colour schemes.
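For reference, the two modes can be sketched in Python using the formulas commonly given for them, for instance in the W3C compositing specification (Cabanier and Andronikos 2013). Whether Final Cut Pro 7 computes them in exactly this way is an assumption. The functions operate on a single normalised channel value (0 to 1); a real implementation would apply them to every channel of every pixel.

```python
def difference(base, top):
    """Difference mode: absolute difference between the two channel values."""
    return abs(base - top)


def overlay(base, top):
    """Overlay mode: multiply where the base is dark, screen where it is light."""
    if base <= 0.5:
        return 2.0 * base * top
    return 1.0 - 2.0 * (1.0 - base) * (1.0 - top)


def composite(layers, mode):
    """Blend a stack of channel values from the bottom track upwards."""
    result = layers[0]
    for top in layers[1:]:
        result = mode(result, top)
    return result


if __name__ == "__main__":
    stack = [0.2, 0.9, 0.4, 0.7]  # one channel value from each of four tracks
    print("difference:", round(composite(stack, difference), 3))
    print("overlay:   ", round(composite(stack, overlay), 3))
```

Difference cancels out areas where the layers are identical, which tends to obscure the source imagery, whereas Overlay preserves the highlights and shadows of the lower layer, which tends to keep it recognisable.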

Video example 9 shows the results from the procedure previously illustrated in Figure 2 (block diagram) and Figure 4 (Final Cut screenshot).

Figure 7 shows another example of granular technique implemented in Final Cut, this time using six video tracks. In this case, the blink effect was not automated and each of the overlapped regions in the timeline was given different values for “on” and “off” durations, to achieve an effect similar to the keyframing of parameters. Video example 10 shows the outcome of this latter procedure.

Aesthetic Considerations and Stylistic Implications

We would like to regard mimetic Visual Music, broadly speaking, as a time-based art, which utilises recognisable sounds and recognisable moving images purely as means for artistic expression. However, we need to contend with the ineluctable fact that the use of sound, and especially images captured from reality, typically through camera filming and microphone recording, inevitably shifts the audio-visual artefact towards much more powerful and ubiquitous mediatic experiences, such as cinematography, television, video documentary and amateur video making. The cultural, perceptual and cognitive force field created by these attractors is nearly impossible to elude, as they are deeply embedded in our experience of audio-visual media constructs. Narrative is the inescapable source of this attraction. Joseph Hyde has pointed out that our mediated audio-visual experience is highly influenced by the praxis of narrative cinema, television, streamed content on the Internet and, I would add, for the generations born after the 2000s, videogames (Hyde 2012, 174). Indeed, when we are exposed to any video-camera footage on a television set, computer display, portable device or large cinema screen, our first and foremost reaction will be the search for narrative validity: why are those objects, people, landscape being shown? Where do they come from, where are they going next? What is their story? What story are they telling me, and who or what is the story about? Ergo, mimetic Visual Music continuously struggles to escape the gravitational pull of narrative. It has to do so, as its purpose of existence lies in the artistic cracks found within the established language of cinematography.

In the composition of Dammtor, I considered, and indeed experimented with, techniques and sonic / visual solutions aimed at freeing mimetic materials from their real-life associations. However, possibly due to the specific dramaturgical references imposed by Jim Sheard’s poem, I found myself embracing verisimilitude, rather than striving to move away from it. I also found that an unmitigated departure from narrativity is not only very difficult, but also not entirely desirable. Instead, I developed a language based on the arrangement of mimetic materials that considers their spectromorphological phenomenology, their mimetic references and, obscurely, their narrative potential. The framework I operated within allowed me no fruitful use of visual suspension (Hyde 2012, 173–74), the visual equivalent of reduced listening. On the contrary, the viewer is left to recognise sounds and images together with their causal relation to reality and the stories therein; yet the “reality” they mimic is not phono / videographical, because it is re-contextualised, fragmented and re-composed in the fashion of the verses in a poem. Granulation techniques, both in the audio and video design, were crucially important to manipulate levels of surrogacy (Smalley 1986, 82), in search of a particular focus.

While exploring the historical background to the use of mimetic material, and its manipulation by means of granulation, I noticed parallels between some modern strains of Visual Music and the heritage of certain strands of experimental cinema. These two worlds are perhaps more contiguous than any practitioner on either side would care to admit. Bridging the distance between them may be useful as a further analytical perspective.

Mimetic Visual Music in particular, with its references to reality, is often contiguous to strands of abstract non-narrative cinematography and can, and probably should, be examined also from the angle of film theory. In associational experimental films, real-life images that may not have a clear logical connection are juxtaposed to evince their expressive qualities and/or articulate concepts, rather than narratives (Bordwell and Thompson 2012, 378). Associational viewing strategies represent the antithesis of visual suspension and reduced listening (Hyde 2012, 173–74), which are more appropriate methods to relate to Visual Music, at least to the part of repertoire developed by, or in collaboration with, sonic artists of musique concrète extraction. Yet, in mimetic Visual Music, certainly in the case of my work Dammtor, the viewer is allowed to recognise, hidden behind the mist of granulated audio and video, the anecdotes portrayed or implied by the materials. At the same time, he/she is encouraged to use a flexible and heuristic approach to make sense of relationships between them that go beyond cinematographic narrativity: an approach suspended somewhere in between anecdotal electroacoustic videos and poetic associational films (Bordwell and Thompson 2012, 378).

The use of granulated video sequences of high gestural content is very common in the work of experimental cinematographers associated with the “film poets” of the New American Cinema movement in the 1960s (Curtis 1971, 62–63). Brakhage’s Mothlight was already mentioned above, where I commented on the flow of granular images on white background.

Gregory Markopoulos’ Ming Green utilises pictorially beautiful images of his New York flat to construct the cinematic equivalent of a still-life painting (Ibid., 130). The video “grains” consist of short still-frame sequences, lasting between 0.5 and 2 seconds, enveloped consistently by means of fades from or to black and cross-dissolves between adjacent grains, sometimes using composited fading techniques (Video 11).

Compared to these examples, the granular video emerging from the techniques utilised in Dammtor unsurprisingly possesses a more defined temporal and textural clarity, thanks to the advantage given by modern digital video technology compared to the analogue filming and montage techniques of the 1960s and 1970s. Greater attention is given to the possibilities offered by technology in the manipulation of the visual grains, which is reflected in a more refined pictorial sophistication of the frames.

Furthermore, Robert Breer’s cinematic assemblage (Uroskie 2012, 164) in his film Fuji (Breer 1974), titled after the now discontinued train which connected Tokyo and Ōita until 2009, utilises the juxtaposition of, and occasional morphing between, short sequences (fractions of a second, sometimes single still frames) of real-life footage, photos and stylised drawings of the same scenes, landscapes and people, on and off board the train (Video 12).

Conclusions

This article has provided a framework for experimentation with granular techniques in audio-visual composition, highlighting technical aspects and artistic implications encountered during my latest compositional projects. Future endeavours will look into the design and implementation of suitable software tools: an integrated platform that applies the above conceptual approach to the crafting of novel material from the synthesis and, more likely, the processing of digitised audio and video footage captured in the real world by means of digital recorders and camcorders.

Despite the conceptual and computational similarities, the perceptual effects of audio mixing and visual blending can be very different. This is an area that deserves further multi-disciplinary research to establish, for instance, the degree of recognisability of granulated mimetic video compared to granulated mimetic audio. This awareness will enable the correct mapping of sound granulation paradigms onto visual granulation ones (or vice versa), as a stepping stone towards the realisation of morphologically linked audio and video streams. The software will need to offer intuitive access to all the parameters and techniques discussed here, including the choice of algorithms for the blending of overlapped video grains.
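
As an illustration of what such a choice of blending algorithms might involve, the following is a minimal Python sketch and not the implementation used in Dammtor. It assumes that frames are NumPy arrays of floating-point values between 0 and 1 and that the backdrop is fully opaque. The names fade_envelope and blend_grain_frame are hypothetical. The three blend formulas follow the separable blend modes described in the W3C compositing draft (Cabanier and Andronikos 2013), while the trapezoidal envelope is simply the visual counterpart of a linear amplitude envelope applied to a sound grain.

```python
import numpy as np

def fade_envelope(n_frames, fade_frames):
    """Opacity per frame for one video grain: linear fade-in, hold, linear fade-out.

    Assumes 2 * fade_frames <= n_frames.
    """
    env = np.ones(n_frames)
    if fade_frames > 0:
        ramp = np.linspace(0.0, 1.0, fade_frames, endpoint=False)
        env[:fade_frames] = ramp                   # fade from transparent
        env[n_frames - fade_frames:] = ramp[::-1]  # fade back to transparent
    return env

# Separable blend functions B(cb, cs): cb = backdrop colour, cs = source colour.
BLEND_MODES = {
    "normal": lambda cb, cs: cs,
    "multiply": lambda cb, cs: cb * cs,
    "screen": lambda cb, cs: cb + cs - cb * cs,
}

def blend_grain_frame(backdrop, source, opacity, mode="normal"):
    """Blend one frame of a grain over an opaque backdrop frame.

    The chosen blend mode is applied first, then the result is mixed
    with the backdrop according to the grain's current opacity.
    """
    mixed = BLEND_MODES[mode](backdrop, source)
    return (1.0 - opacity) * backdrop + opacity * mixed

# Usage: a five-frame mid-grey grain screened over a mid-grey backdrop.
backdrop = np.full((2, 2, 3), 0.5)
grain = np.full((5, 2, 2, 3), 0.5)
envelope = fade_envelope(n_frames=5, fade_frames=2)
output = [blend_grain_frame(backdrop, frame, a, mode="screen")
          for frame, a in zip(grain, envelope)]
```

With the same envelope, "multiply" darkens the overlap and "screen" lightens it, whereas mixing two audio grains only ever sums their amplitudes. This is one concrete reason why the perceptual outcome of the two processes can diverge.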

Computational power permitting, such tools may one day allow real-time granular manipulation of high-resolution audio-visual streams, a prospect that promises innovative paradigms for live performance of mutually interactive sounds and images, possibly captured at the performance location or, in the case of networked spaces, elsewhere in the world.

Bibliography

Battey, Bret. Autarkeia Aggregatum. 2005. [Video]. Available online on the composer’s Vimeo profile at http://vimeo.com/14923910 [Last accessed 12 December 2013]

Breer, Robert. Fuji. 1974. YouTube video (5:55) posted by “ChunkyLoverf” on 22 November 2011. http://www.youtube.com/watch?v=HudkC6Oapww [Last accessed 12 December 2013]

Brakhage, Stan. Mothlight. 1963. [Video]. In By Brakhage: Anthology 1 & 2. The Criterion Collection [Blu-ray], 2010. YouTube video (3:25) posted by “phospheneca” on 4 June 2009. http://www.youtube.com/watch?v=Yt3nDgnC7M8 [Last accessed 12 December 2013]

Bordwell, David and Kristin Thompson. Film Art: An Introduction. Columbus OH: McGraw-Hill, 2012.

Cabanier, Rik and Nikos Andronikos (Eds.). “Compositing and Blending 1.0.” W3C (MIT, ERCIM, Keio and Beihang), 2013. Available online at http://www.w3.org/TR/2013/WD-compositing-1-20130625 [Last accessed 12 December 2013]

Curtis, David. Experimental Cinema: A Fifty-Year Evolution. London: Studio Vista, 1971.

Garro, Diego. Dammtor. 2013. [Video]. Available online on the composer’s Vimeo profile at http://vimeo.com/81707952 [Last accessed 12 December 2013]

_____. Patah. 2010. Available online on the composer’s Vimeo profile at http://vimeo.com/14112798 [Last accessed 12 December 2013]

Hyde, Joseph. “Musique Concrète Thinking in Visual Music Practice: Audiovisual silence and noise, reduced listening and visual suspension.” Organised Sound 17/2 (August 2012) “Composing Motion: A visual music retrospective,” pp. 170–78.

Markopoulos, Gregory. Ming Green. 1966. YouTube video (6:53) posted by “elenasebastopol” on 29 September 2013. http://www.youtube.com/watch?v=PDLICTKu8Jo [Last accessed 12 December 2013]

Miller, Dennis H. Echoing Spaces. 2009. [Video]. Available online on the composer’s personal website http://www.dennismiller.neu.edu [Last accessed 12 December 2013]

Sheard, James. Dammtor. London: Jonathan Cape, 2010.

Smalley, Denis. “Spectromorphology: Explaining sound shapes.” Organised Sound 2/2 (August 1997) “Frequency Domain,” pp. 107–20.

_____. “Spectro-Morphology and Structuring Processes.” In The Language of Electroacoustic Music. Edited by Simon Emmerson. Basingstoke: Macmillan, 1986, pp. 61–93.

Uroskie, Andrew V. “Visual Music after Cage: Robert Breer, expanded cinema and Stockhausen’s Originals (1964).” Organised Sound 17/2 (August 2012) “Composing Motion: A visual music retrospective,” pp. 163–69.

Willy, Andrew. Emergence. 2013. [Video]. In “A portfolio of audiovisual compositions consisting of Sketches, Mutations, Emergence, Evolution, Insomnia, Xpressions and Ut Infinitio Quod Ultra.” Unpublished doctoral dissertation. Keele UK: Keele University. Also available online on the composer’s Vimeo profile at http://vimeo.com/64507082 [Last accessed 12 December 2013]
