A Particle System for Musical Composition

This paper describes the development of a software particle system for musical composition. It employs a generator as described in William Reeves’ seminal 1983 paper on the subject, but one in which the particles are musical themes rather than images or points of light. This is distinct from an audio-level particle system such as might be employed effectively in conjunction with granular synthesis, because an audio-level process has no “musical intelligence” in the traditional sense as the term is used in discussing rhythm, melody, harmony or other traditional musical qualities. The particle system uses the author’s software, The Transformation Engine (Degazio 2003), as the musical engine for rendering particles. This allows the particle system to control relatively high-level musical parameters such as melodic contour, metrical placement and harmonic colour, in addition to fundamental parameters such as pitch and loudness. The musical theme corresponding to an individual particle can therefore evolve musically over the lifetime of the particle as these high-level parameters change.

Prior Work

Audio

A good case can be made that the well-known technique of granular synthesis is the audio version of a particle system. There are several parallels — both techniques break down complex behaviour into the sum of a large number of much simpler behaviours. For a particle system this is the particle, for granular synthesis, the grain. Both techniques produce resultant streams which exhibit emergent behaviour and effectively simulate natural processes such as fluid flow (e.g., Barry Truax’s 1986 piece, Riverrun). Both techniques apply stochastic choices to the resultant stream of particles to mitigate the undesirable mechanical qualities of the process. Even though in practice there are some differences as well — for example, granular synthesizers are generally much simpler in design than particle systems, and are less oriented to the simulation of natural processes — the overall parallel between the two is strong.

Music

While there are a small number of prior works applying the particle system concept to audio based on the granulation technique, e.g., the Spunk Synthesizer (Creative Applications Network 2010), there seems to be little interest in applying it to music composition. I have recently discovered that the commercial software Plogue Bidule has a very simple particle system that outputs a stream of MIDI notes. Its compositional application is very limited because pitches, velocities and durations are purely random, but the Bidule environment provides further processing modules which improve the situation somewhat. Still, by comparison with the system presented here it is very simple because the particles are individual MIDI notes. There is no facility to use note sequences (i.e. musical themes), continuous control data or pitch bend, nor is there any control of the particle system proper beyond the basic rudiments.

Why an Audio Particle System?

The principal attraction of using a particle system of any type is for the effective simulation of natural processes that are otherwise intractable. As William Reeves says in his seminal paper on the technique:

Particle systems model an object as a cloud of primitive particles that define its volume. Over a period of time, particles are generated into the system, move and change form within the system, and die from the system. The resulting model is able to represent motion, changes of form, and dynamics that are not possible with classical surface-based representations. (Reeves 1983)

This makes particle systems an ideal method for the digital simulation of water, wind, fire, smoke, clouds and other unpredictably changeable natural processes. Because sound (and by implication, music) is itself a necessarily dynamic (i.e. changeable) process, particle systems may be an effective method for shaping it creatively.

Why a MIDI Particle System?

The main limitation of granular synthesis as a compositional technique is that it is applied at a sub-symbolic level. The distinction between symbolic and sub-symbolic music systems has been discussed in a 2009 article by software designer and composer Robert Rowe. Symbolic systems, such as MIDI, employ a high-level description of musical events and therefore implicitly embody a large amount of musical knowledge. This implied knowledge is a form of structure which can also be restrictive. Sub-symbolic systems, such as audio stream processing techniques like granulation, operate at a low level. They imply less particular musical knowledge and so are correspondingly less restrictive. Each system has characteristic advantages and disadvantages. For a project like the compositional particle system described here, it makes more sense to use a symbolic system like MIDI than a sub-symbolic one based on audio stream processing, even though the latter might have some advantages for audio quality.

MIDI (Loy 1985), despite all the complaints of its detractors (Moore 1988), has been a remarkably long-lived computing standard. Its worst limitations have mostly been overcome by clever extensions, and one of its detractors’ main complaints, the slow speed and unidirectional nature of its cable interconnection, has been rendered irrelevant by its use within high-speed network and software-only systems. MIDI’s longevity says something positive about its success as a symbolic system.

A symbolic MIDI particle system has the advantage that each particle corresponds to a complete high-level entity, usually a musical motif consisting of a series of notes (but see the examples below for other uses). This means that each individual particle can represent a musical entity in the composition, for example, an individual strand of counterpoint. It would be very difficult, probably impossible, to program a granulation system so that each grain corresponded exactly to a musical motif. Because of the sub-symbolic character of the granulation process there are simply no markers in the data to readily indicate the presence of a symbolic entity such as a motif. Conversely, because of the symbolic character of MIDI and the high-level control offered by the Transformation Engine, it is very straightforward for each particle to be represented by a musical motif. In general, the attraction of using MIDI for a particle system music generator is that the particle system parameters can be made to connect convincingly with compositional techniques employing traditional concepts such as harmony based on diatonic tuning systems and metrical rhythmic structure.

On the minus side, the MIDI system is limited in the number of particles that can be performed simultaneously. Whereas audio granulation routinely uses grains numbered in the hundreds, and visual particle systems sometimes employ millions of particles, the MIDI system described here is limited to just 64, the number of tracks in the Transformation Engine.

To a large extent, however, this deficiency is compensated by the complexity of the MIDI particle as compared to an audio grain. While an audio grain is typically only a few dozen milliseconds long, a MIDI particle can consist of an entire musical phrase of several measures’ duration, or even an entire composition. Typically it is a musical motif of several notes, lasting perhaps a few beats.

There also seems to be a perceptual characteristic that mitigates this deficiency. It might be called a primitive “rule of large numbers.” Perceptually, if more than 3–10 musical entities are employed, the listener perceives them simply as “many.” At any rate, the perception of a great mass of sonic entities does not seem to be diminished by the small number of particles.

Description of the MIDI Particle System

Basic Parameters

The basic particle system parameters which I have developed were adapted from Quartz Composer’s simple built-in particle system (Fig. 1). Quartz Composer (Apple 2006) is a visual programming environment for the development of graphic processing programs in OS X. Quartz Composer’s built-in particle system is evidently a slightly evolved descendant of William Reeves’s original design.

To summarize Reeves’s basic design, the following table outlines the essential parameters (left) and what, in musical terms, they become (right). Figure 2 shows the user interface.

| Parameter | Musical equivalent |
| --- | --- |
| Overall flow intensity (determined by Rate, Variance) | The same as visual particles except that the driving frame-rate is musically variable to suit various rhythmic situations. This allows the rhythmic character to be varied continuously from purely random to highly beat-oriented. See examples 3 and 5, below. |
| Particle size / brightness | Loudness, i.e. MIDI note velocity and breath controller value. |
| Particle lifetime | Duration in musical terms, i.e. ticks or beats. |
| Particle initial position | Initial pitch location, i.e. tessitura. |
| Individual particle | Rate at which the MIDI particle travels the parameter space, e.g., tessitura or loudness. |
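As a minimal sketch of how the parameters in the table above might fit together, the following Python fragment emits particles at a randomized rate and retires them once their lifetime in beats has elapsed. All names (`Particle`, `Emitter`, `rate`, `variance`, `max_tracks`) and all numeric ranges are hypothetical and are not taken from the Transformation Engine; the default cap of 64 simply mirrors the track limit mentioned earlier.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Particle:
    """One musical particle; every field here is an illustrative assumption."""
    lifetime_beats: float   # particle lifetime, in musical time
    velocity: int           # loudness as a MIDI note velocity (1-127)
    tessitura: int          # initial pitch location as a MIDI note number
    age_beats: float = 0.0

    @property
    def alive(self) -> bool:
        return self.age_beats < self.lifetime_beats


@dataclass
class Emitter:
    rate: float             # mean number of particles emitted per beat
    variance: float         # random spread around that mean
    max_tracks: int = 64    # assumed cap on simultaneous particles
    particles: list = field(default_factory=list)

    def step(self, beats: float, rng: random.Random) -> None:
        """Advance the system by `beats` of musical time."""
        # Age the existing particles and drop the ones that have expired.
        for p in self.particles:
            p.age_beats += beats
        self.particles = [p for p in self.particles if p.alive]

        # Draw a randomized emission count, never negative.
        count = max(0, round(rng.gauss(self.rate * beats, self.variance)))
        free = self.max_tracks - len(self.particles)
        for _ in range(min(count, free)):
            self.particles.append(Particle(
                lifetime_beats=rng.uniform(1.0, 8.0),   # arbitrary range
                velocity=rng.randint(40, 110),          # arbitrary range
                tessitura=rng.randint(36, 96),          # arbitrary range
            ))
```

Calling `step` once per beat (or per tick, with a fractional `beats` value) lets the driving rate follow the musical tempo rather than a fixed video frame rate.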

Observations in Use

Some experimentation with the MIDI Particle System revealed a number of differences from a traditional visual particle system. In brief:

- The MIDI Particle System employs a much smaller number of particles — dozens, rather than thousands or millions.

- MIDI particles are not uniformly similar. There is a greater complexity of individual particles. The particle consists of a musical stream of several notes differentiated by pitch, rhythm, dynamic shaping, articulation and other performance parameters. This is analogous to using a video stream as the particle source in a conventional visual particle system.

- The shape of the emitter, which is very important visually, is not relevant to the MIDI Particle System.

The most important differences arise from the first item: the MIDI Particle System employs a much smaller number of individual particles. This implies its corollary, the second item: the separate particles have more individual “personality”. That is, an individual particle has greater perceptual weight than in a visual system, and the particles exhibit greater variation from one to another.

There are of course also some similarities to visual particle systems:

- Emergent structure. The interaction of particles produces sonic structures that have not been explicitly programmed.

- A wide variety of behaviours is possible by adjusting a relatively small number of parameters.

- These resulting behaviours readily simulate natural processes such as fluid flow, bubbling, etc., that are otherwise difficult to reproduce algorithmically.

The table below compares the MIDI Particle System with a conventional (visual) particle system.

| Parameter | Visual Particle System | MIDI Particle System |
| --- | --- | --- |
| Flow rate | particles per frame | |
| Life span | measured in frames | measured in musical time (beats) |
| Size | visual size and | loudness, i.e. MIDI velocity and continuous controller value |
| Position | position in 3-D | position within 5-D space (X, Y, Z, U, W): X = pitch width (melodic contour amount); Y = tessitura (general location in musical pitch range); Z, U = rhythmic position; W = articulation (individual note duration) |
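The five-dimensional position in the last row of the table could be represented along the following lines. This is only a sketch: the class name `Position5D`, the normalization of every axis to the range 0.0 to 1.0, and the clamping behaviour are assumptions made for illustration, not details of the system described here.

```python
from dataclasses import dataclass, fields


@dataclass
class Position5D:
    """Normalized (0.0-1.0) coordinates; the scaling is an assumption."""
    x: float  # pitch width (melodic contour amount)
    y: float  # tessitura (general location in the pitch range)
    z: float  # rhythmic position, first axis
    u: float  # rhythmic position, second axis
    w: float  # articulation (individual note duration)

    def moved(self, velocity: "Position5D", beats: float) -> "Position5D":
        """Return the position after drifting for `beats`, clamped to 0-1.

        `velocity` reuses this class for convenience; its fields are
        per-beat rates of change and may be negative.
        """
        values = {}
        for f in fields(self):
            raw = getattr(self, f.name) + getattr(velocity, f.name) * beats
            values[f.name] = min(1.0, max(0.0, raw))
        return Position5D(**values)
```

Treating all five axes uniformly keeps the update rule identical to that of a visual particle moving through 3-D space, with two extra coordinates.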

Examples

Clarinet Orchestra

The “orchestra” used for the particle system in the following audio examples 1, 2 and 4 consists of clarinets synthesized additively in Wallander Instruments’ WIVI system. Clarinets were chosen because, like string instruments, they constitute a large family of instruments covering the entire orchestral pitch range with a consistent tone quality. Unlike sampled instruments, the WIVI instruments provide continuous control of dynamics and tone colour, a factor which is essential to the simulation of flow in the MIDI Particle System.

The orchestra consists of a total of 32 instruments / particles:

6 clarinets in E-flat

6 clarinets in B-flat

6 clarinets in A

6 basset horns in F

4 bass clarinets in B-flat

4 contrabass clarinets in B-flat

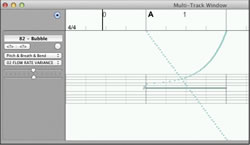

1. Bubbling Clarinets

This example demonstrates the use of the MIDI Particle System in simulating a natural dynamic process, the movement and breaking apart of water bubbles. I followed Farnell’s guidelines for synthesizing an individual bubble from Designing Sound (Farnell 2010), and applied the random nature of the MIDI Particle System to simulate a growing dynamic. Figure 5 illustrates a single “bubble” particle as played by an individual clarinet. The note played is middle C#, the downward-sloping straight line represents the instrument’s loudness, and the upward-sloping exponential line represents a pitch glissando.
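The two curves of a single bubble particle, a linear fall in loudness and an exponential rise in pitch, can be sketched as below. The function name `bubble_envelopes`, the `curve` parameter and the choice of a 0 to 127 controller range and a 0 to 8191 pitch-bend offset are assumptions for illustration; the actual values used for Figure 5 are not given.

```python
import math


def bubble_envelopes(steps: int, max_bend: int = 8191, curve: float = 4.0):
    """Return (loudness, pitch_bend) lists for one 'bubble' particle.

    Loudness falls linearly from 127 to 0 (e.g. a MIDI controller value);
    pitch_bend rises exponentially from 0 to max_bend.
    `steps` must be at least 2; `curve` sets how sharply the bend accelerates.
    """
    loudness, bend = [], []
    for i in range(steps):
        t = i / (steps - 1)                      # normalized time, 0.0 to 1.0
        loudness.append(round(127 * (1.0 - t)))  # straight downward line
        shape = (math.exp(curve * t) - 1.0) / (math.exp(curve) - 1.0)
        bend.append(round(max_bend * shape))     # upward exponential curve
    return loudness, bend
```

Each pair of values would be sent alongside the sustained note, one pair per control step over the particle's lifetime.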

2. Multiple Particle Streams

This example demonstrates a more musical application of the MIDI Particle System. Three independently controlled particle streams are employed, one for each of the high-, medium- and low-pitched instruments of the simulated clarinet orchestra. The particle used for each stream is the same — a simple 16th-note trill. Each stream is momentarily brought into prominence through the variation of the loudness, tessitura and flow-rate parameters of the corresponding particle system.

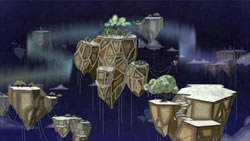

3. Frog Crescendo from “Mashutaha — Sky Girl”

This sound clip was created to introduce a sequence in an animated film project created by students of Sheridan College in partnership with the Communication University of China (CUC), Beijing, China.

The film is an animated rendering of a Huron Indian creation myth involving a celestial woman who falls into the earthly world. The audio example given here is a sonic illustration of a mythic idea of the Huron Indians: “the drum is the heartbeat of nature.” In it, various natural sounds (predominantly the croaking of frogs) coalesce into a steady beat, which is then taken over by a simple frame drum and wooden flute.

In this case, the individual particles are simply individually recorded frog croaks, triggered by way of MIDI control of a software sampling engine. The random temporal placement of the individual croaks becomes gradually more rhythmic and eventually melds with the drumbeat and flute for the title music of the film. It is a clear illustration of rhythmic randomness progressing to order through the use of the MIDI Particle System. The main particle system parameters used to effect this are beat variance and beat jitter.
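The progression from random to beat-aligned timing can be illustrated with a small sketch. The precise definitions of beat variance and beat jitter are not given here, so the code below substitutes a single hypothetical `jitter` parameter, expressed as a fraction of a beat, as a stand-in for both; the function names are likewise invented for this example.

```python
import random


def onset_times(n_beats: int, jitter: float, rng: random.Random) -> list[float]:
    """Place one event per beat, displaced by up to +/- jitter/2 beats.

    jitter = 1.0 scatters events across the whole beat (near-random timing);
    jitter = 0.0 puts every event exactly on the beat.
    """
    return [beat + jitter * (rng.random() - 0.5) for beat in range(n_beats)]


def crescendo_to_order(n_beats: int, rng: random.Random) -> list[float]:
    """Ramp the jitter linearly from 1.0 down to 0.0 over the passage."""
    times = []
    for beat in range(n_beats):
        jitter = 1.0 - beat / max(1, n_beats - 1)
        times.append(beat + jitter * (rng.random() - 0.5))
    return times
```

With the jitter ramped down in this way, the early events are scattered around their beats and the final event lands exactly on the grid.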

4. Ant Colony

This example required certain extensions to the particle algorithm for full effectiveness. In particular, two parameters relating to the selection of the MIDI theme were extended with a variance allowance so that they could be randomized on playback. These parameters were 1) the starting position of the theme; and 2) the time scaling factor.

The first of these has the effect of varying the beginning of each particle’s statement of the theme. Without some statistical variation of this parameter, each particle begins with an identical opening. Varying the start point is very effective in this example because the “Theme” is an algorithmically generated random walk (Fig. 7) — a sequence of random pitches connected by small intervals. Varying the start position therefore has the effect of selecting a different segment of the random walk, providing a much more variable set of “themes”. The variance of the starting position can be quantized to a musically sensible value, e.g., in this case to sixteenth notes. This means that each particle will randomly choose a starting position on a sixteenth note boundary.
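A minimal sketch of this idea follows: a random-walk theme and a start offset that is randomized but snapped to a sixteenth-note grid. The resolution of 480 ticks per quarter note, the function names and the default step size are assumptions chosen for the example, not values taken from the system.

```python
import random

TICKS_PER_QUARTER = 480                      # assumed resolution
SIXTEENTH = TICKS_PER_QUARTER // 4           # 120 ticks


def random_walk_theme(length: int, rng: random.Random,
                      start_pitch: int = 60, max_step: int = 2) -> list[int]:
    """Random pitches connected by small intervals, kept within MIDI range."""
    pitches = [start_pitch]
    for _ in range(length - 1):
        nxt = pitches[-1] + rng.randint(-max_step, max_step)
        pitches.append(min(127, max(0, nxt)))
    return pitches


def quantized_start(theme_ticks: int, variance_ticks: int,
                    rng: random.Random, grid: int = SIXTEENTH) -> int:
    """Pick a random start offset that falls on a grid boundary."""
    # Never start so late that less than one grid unit of theme remains.
    limit = min(variance_ticks, theme_ticks - grid)
    slots = max(0, limit) // grid
    return rng.randint(0, slots) * grid
```

Each new particle would call `quantized_start` once and begin reading the shared theme from that offset, so that no two particles need open with the same segment of the walk.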

The second parameter varies the playback rate of the theme across a wide range, from 1/12 of the original tempo to 24/12 (i.e. twice) the original tempo. This again provides a much more diverse set of particles, very suitable for the representation of the independent paths of hundreds of creatures.
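The time scaling can be sketched in the same spirit. The code assumes that the rate is chosen in steps of one twelfth, which the 1/12 to 24/12 range suggests but does not confirm; the function names are hypothetical.

```python
import random
from fractions import Fraction


def random_time_scale(rng: random.Random) -> Fraction:
    """Pick a playback rate of k/12 of the original tempo, k = 1..24."""
    return Fraction(rng.randint(1, 24), 12)


def scaled_duration(ticks: int, rate: Fraction) -> int:
    """A faster rate shortens each note; round to the nearest tick."""
    return round(ticks / rate)
```

For example, a quarter note of 480 ticks played at 24/12 of the original tempo lasts 240 ticks, and at 1/12 of the tempo it lasts 5760 ticks.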

The sonic density (flow-rate) of the particle system was varied to suit the degree of activity implied by the macroscopic images.

5. African Drums — Rhythmic Confluence

Like the frog drums example above (Fig. 6, Audio 3), this clip demonstrates the use of the MIDI Particle System to vary the temporal placement of the particles to create a structural trajectory from temporal randomness to orderliness and back again. However, in contrast to the frog drums example, the particles themselves consist of musically structured material: the interlocking rhythmic patterns of the various instruments of a West African drum ensemble. There are altogether 24 instruments organised into six particle systems, one each for cowbells, shakers, high drums, medium drums, low drums and log drums. Beat variance and beat jitter are the main parameters controlling the structural process (as in the “frog drums” example).

Bibliography

Creative Applications Network. Spunk Synthesizer. [Software]. 2010. http://www.creativeapplications.net/mac/spunk-sound-mac

Degazio, Bruno. “The Transformation Engine.” ICMC 2003. Proceedings of the International Computer Music Conference (Singapore, 2003).

Farnell, Andy. Designing Sound. MIT Press, 2010.

Loy, Gareth. “Musicians Make a Standard: The MIDI Phenomenon.” Computer Music Journal 9/4 (Winter 1985), pp. 8–26.

Moore, F.R. “The Dysfunctions of MIDI.” Computer Music Journal 12/1 (Spring 1988), pp. 19–28.

Reeves, William. “Particle Systems — A Technique for Modelling a Class of Fuzzy Objects.” Computer Graphics 17/3 (July 1983), pp. 359–76.

Rowe, Robert. “Personal Effects: Weaning Interactive Systems from MIDI.” Proceedings of the Spark Festival 2005.

_____. “Split Levels: Symbolic to Sub-symbolic Interactive Music Systems.” Contemporary Music Review 28/1 (February 2009) “Generative Music,” pp. 31–49.

Wallander, Arne. WIVI Modeled Instruments. http://www.wallanderinstruments.com
