The Heart Chamber Orchestra

An audio-visual real-time performance for chamber orchestra based on heartbeats

A Brief Introduction to Different Aspects of the Heart Chamber Orchestra

The Heart Chamber Orchestra (HCO) is an audiovisual performance. The orchestra consists of 12 classical musicians and the artist duo Peter Votava and Erich Berger (aka Terminalbeach). The heartbeats of the musicians control a computer composition and visualisation environment. The musical score is generated in real time by the heartbeats of the musicians. They read and play this score from a computer screen placed in front of them. HCO forms a structure where music literally “comes from the heart.” The debut performance of HCO was given in Trondheim (Norway) in October 2006, during Trondheim Matchmaking, a festival for electronic arts and new technology, and was performed by the Trondheim Sinfonietta.

HCO Background

In Western culture we consider the heart to be the seat of certain feelings. This is visible in language, with phrases like “to lose one’s heart to” or “to lacerate the heart.” The heartbeat also reflects the physical state of the body. The heart pumps heavily when the body is under physical strain, and a very fast heartbeat can indicate fear, stress or excitement, while slow beats indicate a state of relaxation or sleep. Through meditation or biofeedback techniques one can learn to influence these bodily states. The heart is a vital organ, and for some it is the very place of life itself: a container of a metaphysical soul.

Musicians, Sensors and Computer

The musicians are equipped with ECG (electrocardiogram) sensors. A computer monitors and analyses the state of the 12 hearts in real time. The acquired information is used to compose a musical score with the aid of computer software. It is a living score dependent on the state of the hearts. While the musicians are playing, their heartbeats influence and change the composition and vice versa. The musicians and the electronic composition are linked via the hearts in a circular motion, a feedback structure. The emerging music evolves entirely during the performance. The resulting music is the expression of this process and of an organism forming itself from the circular interplay of the individual musicians and the machine.

Transformation

HCO is oriented toward traditional musical terminology but carries out a transformation with regard to content and form. The interpreting musician, previously a hermeneut, becomes an actor by composing and interpreting at the same time. There is no pre-existing written score. The score is temporary, generated at the very moment of the heartbeats. Therefore a rehearsal, in the original sense of the word, is not possible. For the HCO, a rehearsal means learning to master the real-time process.

Musification and Visualisation

HCO takes the idea of a traditional symphony as a framework: each of the movements and their forms illuminate the ongoing process from different directions through musification. Musification is a sub-category of sonification. It uses musical decisions to map streams of events into musical structures. Imagery forms an integral part of the HCO. Computer graphics generated from the HCO data establish a different sensual and narrative layer. The interplay of audio and video allows for a synæsthetic experience for the audience (Fig. 1). The heartbeats of the musicians and their relation to each other become audible and visible.

The Art of Implication

HCO is not a composition in the traditional sense (of a finished musical artwork). It draws its inspiration from music, art and science. This interdisciplinary approach pushes and questions the boundaries of contemporary practice within these disciplines. The creative act does not create an object but implements a space of possibilities, a structure within which processes evolve. There is no clear distinction between author and interpreter; instead, a reading/writing continuum emerges that leaves no territory for a single author.

The Technology

The network consists of 12 individual sensors, each fitted onto the body of one musician. A computer receives the heartbeat data, and, using different algorithms, software analyzes the data and generates the real-time musical score for the musicians, as well as the electronic sounds and the computer graphic visualization.

The Stage

HCO is presented in a traditional concert space and setting. The orchestra is placed in the usual manner, but in place of a printed score, the musicians read the real-time score from a laptop screen placed on a music stand in front of them. Terminalbeach and their computer equipment are placed at the back of the auditorium behind the audience. The traditional concert space is augmented by a video projection for computer-generated graphics and a sound system for electronic sounds.

The Application of Heartbeats in the Heart Chamber Orchestra

The HCO links elements of physicality with composition and performs the resulting feedback processes on stage. Although the use of biological processes is central to the “story” of the performance, HCO can be seen as a data-driven algorithmic performance environment in which the musical part focuses on compositional aspects and the visual part on creating visual representations of the ongoing process. While the connotation of the biological information provides inspiration for possible mappings, the actual bio-data provide the concrete values to implement them.

The signals the HCO system receives can be described as a stream of 1-bit data (ON or OFF, heartbeat or no heartbeat). The heartbeats are sensed by heart rate monitors, which indicate only the moment the heart muscle contracts; they do not provide any further information, such as the envelope of the heartbeat or the strength of the contraction, which would be valuable for data-driven composition. As such, the signal is a rather poor source for music composition and visualisation.
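To make the poverty of this signal concrete, here is a minimal Python sketch that reduces a 1-bit stream to the only information it carries: the times at which the heart contracts. It assumes, hypothetically, that the ON/OFF signal is sampled at a fixed period; the function name `beat_times_ms` and the sampling scheme are illustrative and not part of the HCO system.

```python
from typing import List, Sequence


def beat_times_ms(samples: Sequence[int], sample_period_ms: int) -> List[int]:
    """Return the time (in ms) of every OFF-to-ON transition in a 1-bit stream.

    Each rising edge marks one contraction of the heart muscle; nothing else
    (envelope, strength) can be recovered from the signal.
    """
    times: List[int] = []
    previous = 0
    for index, bit in enumerate(samples):
        if bit and not previous:  # rising edge: a new heartbeat starts here
            times.append(index * sample_period_ms)
        previous = bit
    return times


# Three heartbeats sampled every 100 ms.
print(beat_times_ms([0, 1, 1, 0, 0, 0, 1, 0, 0, 1], 100))  # [100, 600, 900]
```

Whatever the sampling rate, the output is only a list of moments; everything musical has to be derived from the spacing of these moments.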

What charges the signals with “meaning” is the cultural connotation and emotional enrichment of everything heart-related. As everything concerning the heart is so central to life, we pay special attention to things that deal with it. We know about the importance of our hearts to our biological existence: as long as the heart beats, one is still alive. But the heart was also once considered the seat of certain feelings, an idea still found in many sayings and metaphors like “this comes from the heart,” “with all his heart,” “warm-hearted” or “a broken heart.”

The time intervals between the heartbeats are not random but have an “inner structure” (Van Ransbeeck and Guedes 2009, 2). Although the basis of this structure may not be identifiable, the intervals clearly reflect the state of the musicians’ pulses and the various forces affecting them.

The music and visualisation of the HCO do not produce meaningful medical or physical information about the individuals, as a few seconds of heartbeat data do not contain any usable information of this kind. For this, a pulse needs to be recorded over a significantly longer period of time (Ballora et al. 2004).

Mapping as Compositional Strategy

Many composers do not document their mappings in detail, and those who do rarely release them into the public domain. A rare and famous exception is Iannis Xenakis’ (1992) extensive description of his use of statistics, stochastics and the mapping of mass events for composition.

Unlike mapping in sonification, which “makes particular properties of an underlying data material auditorily available”, mapping in composition, “which consequently may also be musically motivated, … takes a completely different approach and serves a fundamentally different purpose” (Nierhaus 2009, 269). Mapping is a “transfer function” (Agostino Di Scipio cited in Doornbusch 2002, 147) for information from one system to another, which “in algorithmic composition… becomes central to the compositional effort, either implicitly or explicitly” (Ibid., 148).

In this performance, mapping turns heartbeats and their evaluations into music and imagery by creating interpretations of them and relationships between them. As these transformation processes happen in real time, the mappings and the actual values of the mapping parameters in use define the æsthetics of the musical output. Thus mapping serves as a main compositional strategy in the HCO.

Musically motivated mapping generates a narrative from arbitrary processes. In order to be successful, it needs to create “meaningfulness”, which arises when the listener feels or clearly perceives certain aspects of the source processes in the music. To make the musical results of compositional mapping credible, qualities considered characteristic of 12 heartbeats need to be sufficiently apparent in elements of the music. These qualities are:

- Rhythmic independence: as it is highly unlikely that 12 pulses run synchronously, this is best reflected in the musicians playing independently of each other.

- Percussiveness: heartbeats are short, successive signals; long notes erase this quality and should therefore be used only in exceptional cases.

- Heart rate variance: heartbeat intervals are irregular, and so musical time intervals should be too.

- Successive heartbeats sound monotonous: the compositions should therefore avoid prominent use of obvious harmonic melodies, except where the distortion of harmony is applied to create meaningfulness.
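As an illustration of how the percussiveness and heart rate variance qualities might be honoured, the following minimal Python sketch maps one musician's heartbeat intervals onto short note onsets within a two-bar 4/4 segment, quantised to a sixteenth-note grid. The tempo default, the grid resolution and the function name `onsets_in_segment` are assumptions made for this example, not the mapping used in the HCO.

```python
from typing import List, Sequence

BEATS_PER_SEGMENT = 8   # two bars of 4/4, counted in quarter notes
GRID_PER_BEAT = 4       # assumed resolution: sixteenth notes
SLOTS_PER_SEGMENT = BEATS_PER_SEGMENT * GRID_PER_BEAT  # 32 sixteenths


def onsets_in_segment(intervals_ms: Sequence[float], tempo_bpm: float = 60.0) -> List[int]:
    """Map successive heartbeat intervals to note onsets (in sixteenths).

    Each onset is meant to be played as a short, percussive note. The first
    note starts at the beginning of the segment; each following onset is
    placed one quantised heartbeat interval later, so irregular intervals
    produce irregular musical time intervals. Once the position reaches the
    end of the segment, the remaining intervals are ignored.
    """
    slot_ms = 60_000.0 / tempo_bpm / GRID_PER_BEAT  # duration of one sixteenth
    onsets: List[int] = []
    position = 0
    for interval in intervals_ms:
        if position >= SLOTS_PER_SEGMENT:
            break
        onsets.append(position)
        # Round to the nearest grid slot (Python rounds halves to even),
        # never advancing by less than one slot.
        position += max(1, round(interval / slot_ms))
    return onsets


print(onsets_in_segment([1000, 900, 1100]))  # [0, 4, 8]
```

Because each musician's intervals differ, calling the function once per instrument yields twelve unsynchronised rhythms, which also addresses the first quality.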

The HCO System

The Heart Chamber Orchestra aims to create “algorithmic synaesthesia”, a term used to describe “multimedia works in which sound and image share either computational process or data source” (Sagiv et al. 2009, 296).

The ensemble consists of the following acoustic instruments (the order reflects their position seen from the audience):

- Back row (left to right): cello, trombone, French horn, double bass, tuba, cello

- Front row (left to right): violin, bassoon, bass flute, viola, clarinet, violin

In a performance, the musicians are positioned in two rows of six on a stage, with a projection screen of approximately 8 × 3 meters behind them and laptops on music stands in front of them (Fig. 3). Terminalbeach are positioned behind the audience, facing the orchestra and the screen, so that they can perform and conduct all necessary live actions, such as controlling visualisation parameters or giving volume instructions to the musicians.

The data flow starts when a musician wearing a heart rate transmitter comes within range of the receiver box connected to his or her laptop. On each contraction of the heart muscle, the sensor hardware sends a message indicating a heartbeat to the sensor receiver module. The receiver prepends a unique index to the message, so that it can be unambiguously attributed throughout the whole system, and sends it through a wireless network to the statistic module. This module collects all twelve individual message streams and carries out a number of measurements and statistical evaluations (Fig. 4).
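The tagging and collection steps can be sketched in Python as follows. The class names `SensorReceiver`, `StatisticModule` and `BeatMessage` are hypothetical, and the wireless transport between them is left out; the sketch only shows why the prepended index lets a single module keep the twelve streams apart.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, List


@dataclass(frozen=True)
class BeatMessage:
    musician_index: int  # unique index prepended by the receiver (1 to 12)
    time_ms: int         # time at which the heartbeat message arrived


class SensorReceiver:
    """Hypothetical receiver module: one per musician's laptop."""

    def __init__(self, musician_index: int) -> None:
        self.musician_index = musician_index

    def on_heartbeat(self, time_ms: int) -> BeatMessage:
        # Prepend the index so that every module can attribute the beat.
        return BeatMessage(self.musician_index, time_ms)


class StatisticModule:
    """Hypothetical collector that keeps the twelve streams apart."""

    def __init__(self) -> None:
        self.beats: DefaultDict[int, List[int]] = defaultdict(list)

    def receive(self, message: BeatMessage) -> None:
        self.beats[message.musician_index].append(message.time_ms)


receivers = [SensorReceiver(index) for index in range(1, 13)]
statistics_module = StatisticModule()
statistics_module.receive(receivers[0].on_heartbeat(100))
statistics_module.receive(receivers[4].on_heartbeat(150))
statistics_module.receive(receivers[0].on_heartbeat(950))
print(dict(statistics_module.beats))  # {1: [100, 950], 5: [150]}
```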

Each composition module contains one or more mapping modules, which use different evaluations from the statistic module to compute individual composition segments for each instrument. In most movements each composition segment holds the musical information for a musical unit of two bars. The content of the composition segment is sent to the score display module, which resides on each laptop in front of the musicians. The score display module converts the incoming data and displays them as notation on the laptop screens for the musicians to play only a few seconds later. This process runs continuously throughout the entire concert, constantly redrawing the score. Selected parameters of the mapping modules can be changed manually, which allows for variations of the composition while it is computed.

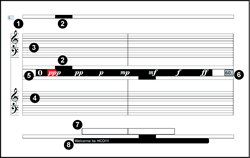

Musicians have a laptop in front of them, which shows the score interface (Fig. 5). The main interface elements can be split into three groups: note display, volume instruction and playing position display.

The note display (elements [3] and [4] in Fig. 5) comprises two lines of notation, one above the other, each spanning the entire width of the screen. Each line represents two bars of music; hence, at a tempo of 60 bpm and in 4/4 time, each line represents eight seconds of music (eight quarter notes in two bars). This defines the duration of the musical segments and thus the temporal delay between the biophysical events and their musical interpretation.
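The arithmetic behind the eight-second figure generalises to other tempi. This small Python sketch assumes that the beat unit is the quarter note; the function name is illustrative.

```python
def segment_seconds(tempo_bpm: float, bars: int = 2, beats_per_bar: int = 4) -> float:
    """Duration of one score line: bars x beats per bar x seconds per beat."""
    return bars * beats_per_bar * 60.0 / tempo_bpm


print(segment_seconds(60))  # 8.0
print(segment_seconds(80))  # 6.0
```

At 60 bpm this gives 8.0 seconds per line; raising the tempo to 80 bpm shortens a line to 6.0 seconds, and with it the delay between a heartbeat and its notated interpretation.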

In the centre of the screen the volume instruction is shown by means of a horizontally oriented volume slider (element [5]), which is manually controlled with a MIDI controller during the performance. The slider display covers a range from pianissimo possibile to fortissimo, with a “0” position at the far left, which tells the musicians to rest. When the slider is on “0,” its background colour changes to red, making it easy for the musicians to see when they are “muted.” The default slider appearance (white letters on a black background) inverts whenever the slider value changes, giving the musicians a clear sign of a change in their playing volume.
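One way to turn the MIDI controller value into the displayed instruction is sketched below in Python. It assumes a 7-bit controller (values 0 to 127), an even split of the non-zero range, and an intermediate ladder of dynamics between pianissimo possibile and fortissimo; none of these details is specified in the text, and the function names are hypothetical.

```python
from typing import List

# Assumed ladder of dynamics between the two extremes named in the text.
DYNAMICS: List[str] = ["pp possibile", "pp", "p", "mp", "mf", "f", "ff"]


def slider_marking(cc_value: int) -> str:
    """Translate a 7-bit controller value into the displayed instruction."""
    if not 0 <= cc_value <= 127:
        raise ValueError("7-bit MIDI controller values range from 0 to 127")
    if cc_value == 0:
        return "0"  # rest
    # Split the values 1..127 into len(DYNAMICS) roughly equal steps.
    return DYNAMICS[(cc_value - 1) * len(DYNAMICS) // 127]


def slider_background(cc_value: int, previous_value: int) -> str:
    """Background colour of the slider display (hypothetical styling rule)."""
    if cc_value == 0:
        return "red"    # muted: the musicians rest
    if cc_value != previous_value:
        return "white"  # inverted appearance signals a change in volume
    return "black"      # default: white letters on a black background


print(slider_marking(64), slider_background(64, 50))  # mp white
```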

The playing position marker (element [2]) indicates the current playing position and is supported by a visual metronome and a tempo display (elements [6] and [7]). All three are controlled in real time, making it possible to change the tempo individually according to any mapping parameter.

Measurement and Statistical Evaluation

The HCO system receives twelve data streams indicating the musicians’ heartbeats in real time. First, the time intervals between successive beats in each stream are measured and recorded. This produces an array of twelve growing lists of integers (the intervals in milliseconds), which represent a “biological algorithm” based on the physical condition of the musicians, without any further interpretation. Then a “group pulse” is generated from the arithmetic mean of all twelve streams, adding a thirteenth row to the array.
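The construction of this thirteen-row array can be sketched in Python as below. The text does not say exactly when the group pulse is recomputed, so the sketch assumes it is updated whenever any new interval arrives, as the mean of each musician's most recent interval; the class name `IntervalArray` is hypothetical.

```python
from statistics import mean
from typing import List, Optional


class IntervalArray:
    """Twelve growing lists of inter-beat intervals (ms) plus a group pulse row."""

    def __init__(self, musicians: int = 12) -> None:
        self.last_beat: List[Optional[int]] = [None] * musicians
        self.rows: List[List[float]] = [[] for _ in range(musicians)]
        self.group_pulse: List[float] = []

    def add_beat(self, musician: int, time_ms: int) -> None:
        previous = self.last_beat[musician]
        self.last_beat[musician] = time_ms
        if previous is None:
            return  # the first beat only starts the measurement
        self.rows[musician].append(time_ms - previous)
        # Assumption: recompute the group pulse on every new interval as the
        # arithmetic mean of each musician's most recent interval.
        latest = [row[-1] for row in self.rows if row]
        self.group_pulse.append(mean(latest))

    def as_array(self) -> List[List[float]]:
        """Rows 0-11: individual intervals; last row: the group pulse."""
        return [list(row) for row in self.rows] + [list(self.group_pulse)]


array = IntervalArray(musicians=3)
array.add_beat(0, 0)
array.add_beat(1, 100)
array.add_beat(0, 1000)
array.add_beat(1, 900)
print(array.as_array())
```

In this usage example the last row holds the group pulse: 1000 ms after the first interval arrives, then 900 ms once the second musician's 800 ms interval is averaged in.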

In order to make further statements about their relationships, statistical calculations are used to generate additional information from the time intervals. We distinguish between two groups of analyses: relations within each stream of heartbeat events and relations between different streams. The within-stream relations include the minimum, maximum and average heartbeat intervals, the pulse rate (the reciprocal of the heartbeat interval), the speed tendency of the pulse (increasing, stationary, decreasing) and the variation of pulse rate changes (jitter). This additional information is an interpretation of real-world events. By comparing the heartbeats with each other we introduce a “social behaviour” between them. This includes, for example, a ranking list of the pulse rates, groups of close pulse rates formed by introducing thresholds, or the distance of each individual pulse rate from the average pulse rate.
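A minimal Python sketch of both groups of analyses follows. The window size for the speed tendency, the definition of jitter as the mean absolute change between successive pulse rates, and the chaining of close pulse rates into groups are assumptions chosen for illustration; the HCO's actual evaluations may differ.

```python
from statistics import mean
from typing import Dict, List, Sequence


def stream_stats(intervals_ms: Sequence[int], window: int = 4,
                 tolerance_bpm: float = 1.0) -> Dict[str, object]:
    """Within-stream evaluations for one musician (needs at least one interval)."""
    rates = [60_000.0 / interval for interval in intervals_ms]  # beats per minute
    # Speed tendency: compare the mean rate of the last `window` beats with
    # the `window` beats before them (assumed definition).
    delta = 0.0
    if len(rates) >= 2 * window:
        delta = mean(rates[-window:]) - mean(rates[-2 * window:-window])
    if delta > tolerance_bpm:
        tendency = "increase"
    elif delta < -tolerance_bpm:
        tendency = "decrease"
    else:
        tendency = "stationary"
    # Jitter: mean absolute change between successive pulse rates (assumed).
    changes = [abs(b - a) for a, b in zip(rates, rates[1:])]
    return {
        "min_interval": min(intervals_ms),
        "max_interval": max(intervals_ms),
        "mean_interval": mean(intervals_ms),
        "pulse_rate": rates[-1],
        "tendency": tendency,
        "jitter": mean(changes) if changes else 0.0,
    }


def ranking(rates: Dict[int, float]) -> List[int]:
    """Musicians ordered from the fastest to the slowest pulse."""
    return sorted(rates, key=lambda musician: rates[musician], reverse=True)


def close_groups(rates: Dict[int, float], threshold_bpm: float = 3.0) -> List[List[int]]:
    """Chain musicians whose neighbouring pulse rates differ by at most the threshold."""
    groups: List[List[int]] = []
    for musician in sorted(rates, key=lambda m: rates[m]):
        if groups and rates[musician] - rates[groups[-1][-1]] <= threshold_bpm:
            groups[-1].append(musician)
        else:
            groups.append([musician])
    return groups


def distance_from_average(rates: Dict[int, float]) -> Dict[int, float]:
    """Signed distance of each pulse rate from the average pulse rate."""
    average = mean(list(rates.values()))
    return {musician: rate - average for musician, rate in rates.items()}


stats = stream_stats([1000] * 4 + [750] * 4)
print(stats["tendency"], stats["pulse_rate"])  # increase 80.0
rates = {1: 72.0, 2: 60.0, 3: 74.0, 4: 90.0}
print(ranking(rates))       # [4, 3, 1, 2]
print(close_groups(rates))  # [[2], [1, 3], [4]]
```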

Composition and Mapping in the Heart Chamber Orchestra

The composition aims to create a strong musical experience for both the performers and the audience while at the same time conveying the heartbeats. Making the heartbeats appear in the music is generally not too difficult. For instance, unsynchronised, percussive playing by the orchestra would easily be interpreted as music driven by heartbeats. This is more difficult when using results from the statistical evaluations, especially those that construct relations between the hearts. As the listeners don’t know the source data, they will not be able to “hear” them, which can quickly lead to a separation of the music from its source, putting the entire concept into question. This creates a tension between the composer’s attempt to “show” the heartbeats in a comprehensible way and his wish to use the full set of available data for composing. This tension seems to arise with any musically motivated mapping and is hence an artistic challenge that has to be met and solved individually in each case.

In the current version of the HCO, the musicians’ heartbeats are introduced to the audience with an as-direct-as-possible representation using electronic sounds. Over the course of the concert, more statistics-based, abstract compositions and mappings are allowed, with a return to musical structures that can be more easily perceived as heartbeat interpretations at several points. The different movements of a performance “look” at the same scenery — the ever-changing biophysical state of the musicians — from different perspectives to compose diverse, musically motivated “auditory views”.

Linking the hearts (and also the brains) of the participating musicians with a real-time composition and notation system creates a biofeedback loop that builds a “man-machine instrument”. The feedback in this case is implemented in two ways, physically and acoustically. The musicians provide the source signal from which the composition results, and playing that composition affects their pulses in unpredictable ways. The music resulting from this interplay, as heard by the musicians, is the acoustic back channel of their source data, influencing their biophysical state in irreproducible ways. This feedback adds an element of unpredictability and autonomy to the entire system that makes it “alive”. However, the compositions are not intended to influence the performers by being purposefully demanding or exhausting in response to changes in their biophysical state (although such passages are not purposefully avoided either). The intention here is to represent the musicians’ pulses. Although a bidirectional communication channel between the musicians and the computer is created, no “moderation” of their communication is applied, as it is led by the feedback loop itself.

The computer can transfer the bioinformation to music with virtually no delay, interpreting any sequence of triggers on the fly in ways impossible for human performers. Digital instruments are therefore especially suitable for immediate representations of the heartbeats, for transformations too complex to be played by humans and for sonorities that cannot be produced by acoustic instruments.

On Real-Time Notation

The decision to realise the real-time notation system of the HCO with the JMSL-based “MaxScore” object (Didkovsky n.d.) was made because of its seamless integration into the Max-based composition environment.

The use of a real-time notation system not only makes it possible to musically explore a “biological algorithm” like heartbeats with acoustic instruments, it also creates the possibility for exploring biofeedback. By operating in real-time “notation becomes a vehicle for expressing the uniqueness of each performance of a work rather than a document for capturing the commonalities of every performance of the work” (Freeman 2008, 39).

Two conditions need to be met for musicians to play correctly from a continuously updated score. Firstly, they need to be able to read musical segments of at least a few seconds at once in order to play in a “human” manner (if this is desired). To ensure this, the score is split into two grand-staff lines (treble and bass clef staves), each two bars long (Fig. 7). Depending on the tempo, each line represents approximately eight seconds, enough for professional musicians to play most of the notation encountered in this composition correctly. This necessary chunking of musical instructions affects the corresponding mapping strategies. For more complex musical structures, all bioinformation from a period close to the musical segment to be generated needs to be collected, processed and displayed as notation in the shortest possible time. This leads to a delay between the biodata and their musification, owing to the human need to perceive a succession of notes at once before they can be interpreted. Secondly, it needs to be taken into account that the musicians will play less precisely as the complexity of the composition increases. This is also because it is impossible to rehearse the exact pieces, only their character, as each rehearsal will sound different due to the unforeseeable nature of the pulses. Thus, during HCO rehearsals, the musicians progress from “studying the note to [learning] how the system works” (Winkler cited in Freeman 2008, 35).

Concrete notation using traditional music symbols (though not necessarily standard notation) is in general more appropriate than less distinct graphical scores for this purpose. As the source material is already of a sufficiently unpredictable nature, this quality should not be amplified with additional uncertainty by a freely interpretable notation.

Visualisation

A Heart Chamber Orchestra performance consists of four different movements, each of which is made up of different parts that evolve from performance to performance. These movements and parts differ in their algorithmic and æsthetic approaches. The goal of the visualization is to create a visual layer of information from the heartbeat data that is additional to and independent of the musification. The audible and visual layers are designed to support each other and to give the audience an understanding of the ongoing process the HCO is built upon. When working with real-time data processing it is essential to understand that audio and video work differently in terms of reading and understanding, and that each medium has its own strengths when creating informative situations for the audience. The visualization can reveal aspects different from those conveyed by the musification, so both work hand in hand to maximize the experience. For example, it is relatively simple to indicate a process history within an image by drawing data without erasing its trace, while subtle changes in the process can be better expressed through audible strategies.

The challenge for the visualization is to establish a visual language which enables the audience to read and to follow the process and to understand that the musicians’ heartbeats are the source for visualization and musification. As we refrain from handing out explanation manuals to the audience, the strategy for the visualization is to begin with very simple data displays, which we then develop in the course of the performance to more complex visual processes. With this strategy, and by building each successive visual step on visual knowledge and language that has already been established, we are able to take the audience along.

The first movement serves as a good example of this strategy. At the very beginning the stage is empty, without sound or image. The musicians enter the stage one by one, about fifteen seconds apart, and take their seats. When the first heartbeat sensor connects wirelessly to the receiver, the performance starts. The visualization shows a pulsating white circle and the musification produces audible clicks, both representing the musician’s heartbeat in real time. This procedure repeats as each musician takes position on the stage. Once all twelve pulses are visible, the audience can see how they correspond to the individual musicians. In this simple way, the audience members are introduced to the basics of the process. They also understand that the visual and audible layers work differently: what they hear is a number of different clicks (heartbeats) which they cannot associate with a particular musician, but which make sense together with the visualization.

After this intro, the display changes and the 12 heartbeats take the shape of twelve horizontal streams of white rectangles, interrupted by black spaces that represent the moments at which the hearts beat (Fig. 8). Each line shows a history of about one minute of each musician’s beats, as well as the relation of the hearts to each other. In movement two, the core element is an array of curves showing the relation of the heartbeats at that very moment (Fig. 9). A particular challenge for the visualization is showing which data on the screen belongs to which musician. An elegant solution was found for movement three, where each laptop screen is made to blink in sync with the heartbeat of the musician sitting in front of it. The corresponding visualization is an emerging drawing, which shows how the musicians’ individual heart rhythms change over time. In this way each movement has a characteristic visual element with the specific task of showing one or several aspects of the process.
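The geometry of the horizontal streams can be sketched in Python by mapping each beat inside the one-minute history window to a horizontal position, where a black gap interrupts the white stream. The window length follows the text; the screen width and the function name `beat_marks` are assumptions.

```python
from typing import List, Sequence


def beat_marks(beat_times_ms: Sequence[int], now_ms: int,
               window_ms: int = 60_000, width_px: int = 1920) -> List[int]:
    """Horizontal pixel positions of the beats (black gaps) in one stream.

    Only beats from the last `window_ms` are drawn; the oldest visible
    moment sits at x = 0 and the present moment at the right edge.
    """
    start = now_ms - window_ms
    return [
        round((t - start) / window_ms * (width_px - 1))
        for t in beat_times_ms
        if start <= t <= now_ms
    ]


print(beat_marks([0, 30_000, 60_000, 70_000], now_ms=60_000, width_px=101))  # [0, 50, 100]
```

Calling it once per musician with a shared `now_ms` yields twelve aligned streams, so vertical comparisons show how the hearts relate to each other.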

A better understanding of this overview of the visualization can be gained by watching the video documentation of the project (Video 1) which was compiled from excerpts of the HCO performance at Kiasma Theatre in Helsinki during Pixelache 2010.

Performing with the Heart Chamber Orchestra

The HCO ensemble comprises twelve acoustic instruments and one electronic instrument per musician, giving a maximum of 24 voices. The choice of twelve performers is based on the chromatic scale of the Western music system, in which each octave is divided into twelve semitones. This makes it possible for the acoustic instruments to play all twelve pitch classes at once if desired. While acoustic instruments have an unmatched timbral richness and variety of playing techniques, computers can generate a virtually unlimited variety of sounds and respond to playing instructions with an immediacy impossible for acoustic instruments.

The HCO system extends the idea of what an interactive musical instrument can consist of by means of biofeedback. Its hybrid nature, in which human musicians feed a computer system with their heartbeats and the system in turn provides them with an instant score based on previously defined rules, establishes interaction on two levels: inside the system, between musicians and machine; and outside it, where the system itself can be seen as an instrument performed by Terminalbeach. Terminalbeach plays this instrument by acting as composer and performer, generating the musical dramaturgy of each piece in real time. By introducing changes, breaks and different combinations of instruments during a performance, musical pieces reminiscent of “the Cubist and Futurist fragmentation of unitary space in simultaneous, multiple perspectives of the same object in different planes” (Matossian 2005, 66) are created. While algorithmic composition techniques are used to build base elements of the music during the performance, the final shape evolves from the live interaction between the orchestra and Terminalbeach.

Bibliography

Ballora, Mark, et al. “Heart Rate Sonification: A New Approach to Medical Diagnosis.” Leonardo 37/1 (February 2004), pp. 41–46.

Didkovsky, Nick. “MaxScore — Standard Western Music Notation for Max/MSP.” http://www.algomusic.com/maxscore [last accessed 17 August 2010]

Doornbusch, Paul. “Composers’ Views on Mapping in Algorithmic Composition.” Organised Sound 7/2 (August 2002) “Mapping / Interactive,” pp. 145–156.

Freeman, Jason. “Extreme Sight-Reading, Mediated Expression, and Audience Participation: Real-Time Music Notation in Live Performance.” Computer Music Journal 32/3 (Fall 2008) “Synthesis, Spatialization, Transcription, Transformation,” pp. 25–41.

Matossian, Nouritza. Xenakis. Lefkosia: Mufflon Press, 2005.

Nierhaus, Gerhard. Algorithmic Composition: Paradigms of Automated Music Generation. Vienna: Springer Verlag, 2009.

Van Ransbeeck, Samuel and Carlos Guedes. “Stockwatch, A Tool for Composition with Complex Data.” Parsons Journal for Information Mapping 1/3 (July 2009), pp. 1–3.

Sagiv, Noam, Freya Bailes and Roger T. Dean. “Algorithmic Synesthesia.” The Oxford Handbook of Computer Music. Edited by Roger T. Dean. Oxford: Oxford University Press, 2009.

Xenakis, Iannis. Formalized Music: Thought and Mathematics in Composition. Hillsdale NY: Pendragon Press, 1992.
