Extending the Piano

Electronic Music and Instruments

What does the combination of instruments and electroacoustic technology offer? Either it adds one or more additional voices in the sense of a counterpoint, or it extends the sound or function of the instrument itself. The idea of extending an instrument is crucial in the current situation of contemporary music, since instruments have reached a point of optimization. Modifications are still being introduced, but these are enhancements of usability and adaptations to current needs, such as quartertone interfaces.

This is in fact important, because the evolution of music depends on new features of the interface or the sound. Alongside social and political changes, the modification of the instrument itself has been a crucial factor in the development of æsthetics. Furthermore, the separation of parameter and timbre creation, in other words of composition and instrument, can be eliminated using a computer. As a consequence, technology offers a solution to new requirements of function and timbre.

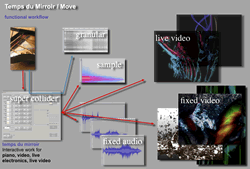

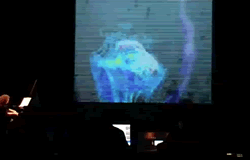

In this article some ideas for extending the piano are presented, as used in my compositions Le temps du Miroir (2005) for piano, live electronics and live video, Move (2006) for piano, live electronics and live video, and Flow (2009) for piano and live electronics. I try to show extreme examples of this extension, even adding triggered 3-channel video to the player’s control and thereby transforming the piano into a meta-instrument. Besides the control of video, real-time spatialization will also be introduced, since I believe it is still an underestimated technology that is experiencing a renaissance.

Piano Extended by Audio

The use of the piano in contemporary music is clearly related to its flexibility as an instrument. It is possible to create polyphonic structures, rich chords as well as single melodic lines. A single musician controls all of these structures so that no conductor and no large number of musicians are necessary.

The sound material of the piano is very specific. It contains a discrete set of 88 pitches with a characteristic amplitude gradient. The amplitude envelope has a peak at its beginning followed by a decay. This makes the piano sound an ideal trigger, easy to detect with microphones and software. In addition, the keyboard of the piano has become a standard for MIDI input and output. Special models of acoustic pianos are equipped with a mechanism that translates MIDI information into playing action.

All of these facts define the piano as an ideal instrument for the interaction between computer and musician, as an ideal tool for composition in a technological context.

As a consequence of the attack at the beginning of each sound, the piano cannot create a continuous melodic line of events, nor is it possible to modulate the dynamics of a sound once it is triggered, unlike with the voice or wind instruments. These types of modification of the piano sound are, however, possible through the use of a computer. First of all, the attack can be used to trigger samples or processes. Second, the sound can be transposed, delayed and modified in duration. Finally, all of these processes can be combined to create a rather complex structure of sound events, all controlled by a single player.
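A minimal sketch of how an attack might be detected in software, assuming a mono signal given as a list of floats. The function name, the threshold values and the simple peak follower are illustrative assumptions, not the detection used in the compositions:

```python
def detect_attacks(samples, threshold=0.2, release=0.05, decay=0.999):
    """Return the sample indices at which a new attack is detected.

    samples   -- sequence of floats in [-1.0, 1.0] (assumed mono signal)
    threshold -- envelope level that counts as an attack (assumed value)
    release   -- level the envelope must fall below before re-arming
    decay     -- per-sample decay factor of the peak follower
    """
    envelope = 0.0
    armed = True
    attacks = []
    for i, x in enumerate(samples):
        # Peak follower: rise immediately, fall off exponentially.
        envelope = max(abs(x), envelope * decay)
        if armed and envelope >= threshold:
            attacks.append(i)
            armed = False          # ignore the rest of this note
        elif not armed and envelope < release:
            armed = True           # the note has decayed; wait for the next one
    return attacks
```

The two levels (threshold and release) form a simple hysteresis, so that the decay of one note is not mistaken for a series of new attacks.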

Sample Trigger

Using an action triggered by an instrument creates some problems the composer has to solve. If the triggered action always creates a similar sound, the listener is distracted by the redundancy of information. But if the triggered reaction is too heterogeneous, the result might become arbitrary. So it is the aim of the composer to create a balance between complexity and simplicity. The following ideas show how the samples triggered by the piano can be varied.

- Not all notes trigger a sample: a threshold value for the re-trigger action is introduced.

- The triggered sample is not always the same; the sample can be ordered in a pattern of several different sounds.

- The triggered sample can be transposed by a pattern of different transposition factors.

- A single note can trigger multiple samples.

- The number and combination of the polyphonic samples can be modified using individual threshold times for each sample voice.
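The rules listed above can be sketched as follows. This is an illustration under stated assumptions, not the patch used in the pieces: the class and function names are hypothetical, samples are represented only by their names, and transposition is expressed as a playback ratio of 2^(semitones/12).

```python
from itertools import cycle


class SampleVoice:
    """One triggered voice with its own re-trigger threshold time."""

    def __init__(self, samples, transpositions, min_interval):
        self._samples = cycle(samples)                # pattern of sample names
        self._transpositions = cycle(transpositions)  # pattern of semitone offsets
        self.min_interval = min_interval              # seconds between triggers
        self._last_trigger = None

    def on_attack(self, time):
        """Return (sample, playback_ratio), or None if the attack is ignored."""
        if (self._last_trigger is not None
                and time - self._last_trigger < self.min_interval):
            return None                               # too soon: no re-trigger
        self._last_trigger = time
        semitones = next(self._transpositions)
        return next(self._samples), 2.0 ** (semitones / 12.0)


def trigger(voices, time):
    """Collect the events of all voices that respond to an attack at `time`."""
    events = []
    for voice in voices:
        event = voice.on_attack(time)
        if event is not None:
            events.append(event)
    return events
```

Because each voice keeps its own threshold time, a fast passage triggers some voices on every note and others only occasionally, so the number and combination of sounding samples changes with the playing.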

Granulation

Along with the triggered sound a real-time granulation of the piano sound can be used to take advantage of a timbre immediately following its creation. This creates a link between the instrument and the samples triggered. Granulation allows the manipulation of a given amplitude evolution. To do this, the sound of the piano is automatically recorded after being triggered by the attack and stored in a buffer until the next trigger refills the buffer. This buffer is played through a granulator using a two-dimensional interface for the control of the grain duration and the buffer position. With this interface, the sound can be controlled in real time in terms of freezing, extending the duration or looping, all being different instances of the same operation.
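As a rough sketch of the idea, the following function reads Hann-windowed grains from a recorded buffer and overlap-adds them. It is an assumption-laden illustration in plain Python, not the granulator used in the pieces; the function name and parameters are hypothetical. The list `positions` stands in for the position axis of the two-dimensional interface and `grain_len` for the duration axis.

```python
import math


def granulate(buffer, positions, grain_len, hop):
    """Overlap-add one Hann-windowed grain per entry in `positions`.

    buffer    -- non-empty list of floats (the recorded piano sound)
    positions -- non-empty list of read positions, 0.0 (start) to 1.0 (end)
    grain_len -- grain duration in samples (at least 2)
    hop       -- distance in samples between grain onsets in the output
    """
    max_start = max(len(buffer) - grain_len, 0)
    # The Hann window avoids clicks at the grain edges.
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / (grain_len - 1))
              for n in range(grain_len)]
    out = [0.0] * (hop * (len(positions) - 1) + grain_len)
    for g, pos in enumerate(positions):
        start = int(pos * max_start)
        for n in range(grain_len):
            out[g * hop + n] += window[n] * buffer[(start + n) % len(buffer)]
    return out
```

A constant position list freezes the sound, a slowly rising one extends its duration, and a repeating one loops it, which illustrates why these can be seen as instances of the same operation.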

This granulation generator creates a link between the live action and computer-controlled action. The sound of the piano gets a certain reinterpretation and the timbre is slowly evolving from the acoustic into the electronic sound. The acoustic sensation for the listener can be described as a sound created on stage by the piano gradually moving away from the instrument into the speaker.

Piano Extended by Live-Triggered Video

In the two works Le temps du Miroir and Move for piano and electronics, the idea of extending the piano is taken to a certain extreme. Move uses sample triggering and granulation as well as video in parallel. The video is treated much like the sound: it is triggered by the attack of the piano sound in real time. The video trigger can address up to three independent video streams. Since each of them uses a different threshold value, the videos perform a polyphonic action similar to that of the samples in the sound domain.

The video contains different scenes, chosen during the performance or by preset settings. The video player jumps to random points within the chosen scenes and offers several transitions between the triggered snippets. There are sudden stops or opacity changes, all of them either at the beginning or at the end of the video snippet (Video 1).
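The snippet logic can be sketched as follows, assuming times in seconds and linear opacity ramps. Both function names and the linear fade shape are assumptions made for illustration; the actual video patch is not described in this article.

```python
import random


def make_snippet(scene_length, snippet_length, rng=random):
    """Pick a random entry point so that the snippet fits inside the scene.

    Returns (start, length) in seconds.
    """
    length = min(snippet_length, scene_length)
    start = rng.uniform(0.0, scene_length - length)
    return start, length


def opacity(t, length, fade_in=0.0, fade_out=0.0):
    """Opacity (0.0 to 1.0) at time t within a snippet of `length` seconds.

    A fade time of 0.0 gives a hard cut at that end of the snippet.
    """
    if t < 0.0 or t > length:
        return 0.0
    level = 1.0
    if fade_in > 0.0:
        level = min(level, t / fade_in)
    if fade_out > 0.0:
        level = min(level, (length - t) / fade_out)
    return level
```

With `fade_out` set and `fade_in` left at zero, the snippet appears abruptly and then fades, which mirrors the attack and decay of a piano note.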

This technique creates a visual analogy between video and the piano note, especially when a fade out is applied to the snippet. This visual note is in this case not a visualization of a note, but is rather perceived as another instance of the sound, similar to the samples or the granulation. Certainly an unusual experience.

Excursion: Space

The influence of electronic technology on music in the 20th century propelled significant changes both in the physical properties of the instruments and in the way music was received on the whole. By breaking the paradigm of binding the instrument to the physical necessities of sound generation, the sound itself was liberated to the point of complete dematerialization, becoming pure vibrations in air. The implementation of newly developed interfaces and new forms of interaction with the sound only became possible through this change (see also Brümmer 2009).

Of the new possibilities for presenting sound, space is currently being emancipated as a separate parameter, significantly changing the way we think about sound. Concert spaces with “loudspeaker cathedrals” already exist and the reception of music is developing more and more into an immersive sensory experience. The pronounced use of technology also captures the composers’ sense of æsthetics. They are capable of evoking technological changes on the basis of new requirements on their part. The conception of new forms of interaction with these “sound machines” alters the creation process considerably. A new breed of performers is joining forces with the already highly specialized instrumentalists. They see their instruments as flexible, functional objects that follow their own ideas and they creatively transform them accordingly.

Why Space?

In order to pursue this question, the term “space” must be more specifically defined. Firstly, sound is not possible without space. Every tonal process, from the vibration of the string and the soundboard to spatial sound, is an articulation of space.

In addition to sound synthesis, spatial phenomena exist that are most commonly subsumed under the concept of acoustics. The size of the room, its geometry and the reflecting surfaces are perceived in the form of reverberation and echo. The size of a space is ascertained by the time interval between the direct sound and the first reflection (Neukom 2003, 85), as well as by a few other parameters, such as how the reverberation progresses. If this interval is short, the listener in effect intuitively develops the sense of a small room.
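As a rough worked example, assume a single reflecting surface and a speed of sound of about 343 m/s. The symbols below are introduced here for illustration and do not come from the cited source. The interval between direct sound and first reflection is the difference in path length divided by the speed of sound:

```latex
\Delta t = \frac{d_{\mathrm{reflected}} - d_{\mathrm{direct}}}{c},
\qquad
\Delta t_{\mathrm{small}} = \frac{6.43\,\mathrm{m} - 3\,\mathrm{m}}{343\,\mathrm{m/s}} = 10\,\mathrm{ms},
\qquad
\Delta t_{\mathrm{large}} = \frac{27.15\,\mathrm{m} - 10\,\mathrm{m}}{343\,\mathrm{m/s}} = 50\,\mathrm{ms}
```

The shorter interval corresponds to nearby walls and is heard as a small room; the longer one suggests a considerably larger space.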

Sound synthesis and architectural acoustics are complemented by the determination of the position of a sound in space. The process of determining position is made up of the perception of the distance and the direction from which the source sound is emanating. Human hearing is capable of simultaneously perceiving several independently moving objects or detecting groups of a large number of static sound sources and following changes within them. Spatial positioning is thus well suited for compositional use. While the acoustics of different rooms cannot be perceived simultaneously, the parameters of position and movement can be polyphonically used in highly complex formations.

What persuaded musicians to orchestrate sound events using different spatial locations and movement? The observation that nearly all sound sources in our environment are in motion is of particular interest in this context. The most diverse moments or objects can serve as examples for this, such as birds, automobiles, speech or song; the sources of these sounds cannot be attributed to a fixed position. Smaller movements or gestures in space, such as are carried out by strings, woodwinds, etc., as an element of their musical interpretation, are constantly perceptible during the production of sound. As a result of this movement, the sound obtains a constantly changing phase configuration (Roads 1996, 18), which gives rise to vitality in the sound. In contrast, static, immobile instruments appear uninteresting; their sound seems flat and unreal. This problem is even compensated for in an organ through the slight detuning of its pipes, thus creating beats. A similar technique is used in the tuning of a piano, whereby the piano tuner detunes the strings slightly amongst themselves in order to achieve a stronger and thereby fuller sound. These aspects indicate that the movement of sounds and the accompanying phase shifting belong to the normality of acoustic perception. This leads to the conclusion that the movement of sounds in space most certainly corresponds to our acoustic patterns of perception, and that the use of these parameters therefore naturally suggests itself for musical contexts.
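The beats produced by slight detuning follow from a standard trigonometric identity. As an illustration, with the frequencies f_1 and f_2 as symbols introduced here, two equally loud sine tones sum to a tone at the mean frequency whose amplitude fluctuates slowly:

```latex
\sin(2\pi f_1 t) + \sin(2\pi f_2 t)
  = 2\,\cos\!\bigl(\pi (f_1 - f_2)\, t\bigr)\,\sin\!\bigl(\pi (f_1 + f_2)\, t\bigr),
\qquad
f_{\mathrm{beat}} = \lvert f_1 - f_2 \rvert
```

Two strings tuned to 440 Hz and 441 Hz, for example, produce one beat per second.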

The use of spatial information within a composition clearly has an impact on compositional decisions. Examples from the 16th century demonstrate a clear logic in the use of these parameters. Spatial information aided in providing additional form to musical information and helped to structure the course of the music far more effectively than phrasing, articulation and instrumentation. The best example for the use of spatiality within a compositional structure is a duet, or the concerto grosso (Scherliess and Fordert 1997, 642), whose compositional form is perceived as being clearly structured through the use of spatiality in conjunction with instrumentation. The expanded and more differentiated approach to the spatial area available to the sound, as well as the integration of motion, allow new possibilities to emerge for the dramatic development of a composition.

A further aspect that speaks in favour of the deliberate use of spatiality has its foundation in the fact that human hearing is capable of perceiving more information when it is distributed in space than when it is only slightly spatially dispersed. The reason for this phenomenon lies in the fact that sounds are capable of concealing, or masking, each other (Bregman 1990, 320). For example, if one plays back the signal of a loud bang and the signal of a quiet beep at the same time, the bang would normally completely conceal the other signal. If both sounds were equally loud, they would blend together in the ideal case. However, both sounds would remain separately perceptible, without merging with or masking each other, if they were to be played back on the left and right sides of a listener’s head. This example can be multiplied, until a sound situation arises in which 20, 30, or more sounds are audible, distributed throughout the space. Spatially distributed, such a situation sounds transparent and clear, while the stereophonic playback of the same sounds would appear muffled, with little detail. The listener would be capable of geometrically grouping different events and perceiving spatial formations. This “active listening” would have various alternatives depending on the position of the listener, resulting in multiple variants of how the sound is received. In addition to this, the brain also has the ability to focus on specific sound objects, in what is called the “cocktail party effect” (Roads 1996, 469). However, the brain only has this ability in conjunction with spatially positioned sound objects. Ideally, when listeners are surrounded by sounds, they are able in a sense to dissect complex sound structures. Even the frontal spatial layout of an orchestra does not allow this.

If one summarizes the various acoustic and psycho-acoustic aspects of human hearing, it becomes clear that the ear’s ability to differentiate sounds increases considerably with the use of spatial information. If sounds are distributed over a large area, complex sound information can be designed in a transparent and easily audible manner. The listeners are thus able to grasp more acoustic information and flexibly structure their listening.

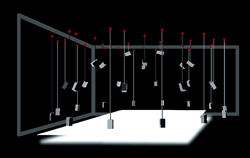

The Sound Dome Project

The Sound Dome of the IMA (Figs. 3a and 3b) is based on strategies that were inspired by the spherical auditorium of Osaka in 1970 (Frisius 1996, 196). In this specific spatial arrangement, the listener is immersed in a dome-shaped loudspeaker installation that allows sounds to be placed throughout the entire area of the concert hall. The reticulated configuration of the loudspeakers enables the continuous movement of sound sources around the listener using the Vector Based Panning (VBP) method (Roads 1996, 459), irrespective of the size of the space and the number of loudspeakers. The software Zirkonium provides the interface between the flexible loudspeaker array and the sound choreography, which remains unchanged from one array to the next (Brümmer et al. 2006).
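To illustrate the principle of vector-based panning, here is a minimal two-dimensional sketch for a single pair of loudspeakers, following the formulation commonly known as vector base amplitude panning. It is not Zirkonium’s code: the function name is hypothetical, a dome requires the three-dimensional version with loudspeaker triplets, and the constant-power normalization is an assumption.

```python
import math


def pan_pair(source_deg, speaker1_deg, speaker2_deg):
    """Gains for a source panned between two loudspeakers (2-D case).

    Angles are azimuths in degrees. Solves g1*l1 + g2*l2 = p for the unit
    vectors l1, l2 (loudspeakers) and p (source), then normalizes the
    gains so that g1**2 + g2**2 == 1 (constant power).
    """
    def unit(deg):
        a = math.radians(deg)
        return math.cos(a), math.sin(a)

    l1x, l1y = unit(speaker1_deg)
    l2x, l2y = unit(speaker2_deg)
    px, py = unit(source_deg)

    det = l1x * l2y - l1y * l2x
    if abs(det) < 1e-12:
        raise ValueError("loudspeakers must not lie on one line with the listener")
    # Cramer's rule for the 2x2 system.
    g1 = (px * l2y - py * l2x) / det
    g2 = (l1x * py - l1y * px) / det
    norm = math.hypot(g1, g2)
    return g1 / norm, g2 / norm
```

A source exactly at one loudspeaker yields gains of (1, 0); a source midway between the two yields equal gains. A negative gain indicates that the source lies outside the pair, in which case a neighbouring pair should be chosen. Since only directions enter the calculation, the method is independent of the size of the room.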

Particular attention was paid to the development of the sound manipulation. Until now it has been a challenge to control, for example, 50 or 60 loudspeakers together with 38 sound sources at once. A great deal of effort was put into the design of the interface, in order to make it intuitively understandable.

Thus, for the first time in the complex development process, Zirkonium represents an instrument that can be used to flexibly perform spatial music, even with practicable resources and a small number of loudspeakers. Whereas composers were dependent on a proprietary format or a strictly set loudspeaker arrangement up to this point, it is now possible for them to adapt to the individual conditions of the performance space and to work on corresponding productions without being tied to the specific location. Moreover, an increasing number of concert halls possess multichannel sound systems that can be transformed into a sound dome environment with little effort. The existing loudspeaker installations can play back spatial sounds with little technical effort. An example can be seen in a setup at the Stuttgart State Opera, in which the loudspeakers were reconfigured into a sound dome for the occasion of a theatre festival in less than one hour, without moving a single loudspeaker!

Zirkonium is currently being developed further by the ZKM | Karlsruhe in conjunction with the Université de Montréal. The goal is to motivate composers, developers and institutions to get involved in its collective, open source development.

Since a lot of attention was paid to the interface of Zirkonium, it should be mentioned that it is quite easy to use and worth exploring by non-experts, even if only headphones are available. It can be installed on Mac OS X systems.

New kinds of reception are also possible with such a multichannel environment. For example, orchestral music can be made into an immersive experience with the assistance of a sound dome. Instead of positioning the whole sound frontally, as is the case in the concert setting, the entire orchestra can virtually be placed above the audience. This makes it possible to step through the individual instrument groups while the work is being performed. The secondary lines suddenly become perceptible. The listener can move from the first to the second violins, to the woodwinds and brass, thus experiencing the music interactively and analytically. This new kind of listening leads to an “active” reception of music, which makes the construction of complex musical works perceptible in a new way. It is even possible to take it one step further and separate the polyphonic structures of Glenn Gould’s interpretation of the Goldberg Variations, expressing it as sound architecture by placing and moving the motive threads in space.

Kindled by the possibilities of digital control and in parallel to the consumer domain, multichannel technology has especially made itself heard among composers of electroacoustic music. It is clearly evident that new approaches are being tried out, as well as being adapted for the normal concert setting. In addition to the Sound Dome, the traditional Acousmonium (Jaschinski 1999, 551) and wave field synthesis (Roads 1996, 252) must be mentioned here. The potential that is introduced to music through spatiality is only at the beginning of its development. The ability to consciously listen using space will continue to be developed in the future and larger installations will become more flexible and more readily available overall. This will make it easier for composers and event organizers to animate and challenge the audience’s capacity for experience.

Piano and Space

Since the keyboard of the piano is a spatial representation of pitch (think of the description of pitches as “high” or “low”, expressions taken from spatial contexts), it is no surprise to find a number of compositions using a spatial model for the sounding structure. This is the case for Frédéric Chopin’s Étude in C minor, Op. 25 No. 12 or several works of Conlon Nancarrow. If the 88 pitches were placed separately in space, the pitch information would be combined with spatial information. In other words, the pitches would be mapped into the spatial domain. As a consequence, a scale would transform into a spatial path, intervals into spatial jumps, and patterns would have a spatial correlate. This idea can be extended using a flexible mapping procedure, so that a certain pitch is not always located in the same position.

In my composition Flow, this mapping is carried out. The work has to be played on a MIDI grand piano whose MIDI information is sent to a Max patch, which translates the pitch values into spatial coordinates and sends them in real time to the spatialization software, Zirkonium. The sound captured by a microphone is sent to Zirkonium as well, and is placed at the corresponding position in the Sound Dome in real time. To enhance the perception of the resulting paths, a long reverb is added to the sound and modified during the performance. Continuous re-scaling of the parameters modifies the mapping of pitch to movement. With this feature it is possible to enlarge or shrink the intervals. Very small movements in pitch can take on a huge dimension in the Sound Dome, and large intervals can appear very small. With this kind of real-time interpretation, the mapping does not appear mechanical or static and sustains the interest of the listener.
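The actual mapping in the Max patch is not specified here. As a simplified illustration, the following sketch maps a MIDI note number to an azimuth only, with a scale factor that can be changed during the performance. The function name, the parameters and the restriction to a single angle are assumptions.

```python
def pitch_to_azimuth(midi_note, scale=1.0, center=64.5, offset_deg=0.0):
    """Map a MIDI note number (21 to 108 on an 88-key piano) to an azimuth.

    scale      -- 1.0 spreads the 88 keys over one full circle; larger
                  values enlarge spatial intervals, smaller ones shrink them
    center     -- pitch that maps to offset_deg (64.5 is mid-keyboard)
    offset_deg -- rotation of the whole mapping in degrees
    Returns an angle in degrees, wrapped to the range [-180, 180).
    """
    degrees_per_semitone = 360.0 / 88.0
    azimuth = offset_deg + (midi_note - center) * degrees_per_semitone * scale
    return (azimuth + 180.0) % 360.0 - 180.0
```

With `scale=1.0` a semitone corresponds to roughly 4 degrees; raising `scale` to 10 turns the same semitone into a jump of about 41 degrees, while a value of 0.1 compresses an octave into less than 5 degrees.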

Conclusion

The intention to modify available instruments is clearly necessary. This can be done in various ways, all of which are accessible to the composer himself. Technology offers a special challenge in this context, since it enables the instrument to gain features that are very far from what one would expect of it. Extending the functionality of an instrument keeps it intact to a certain extent but adds multiple options. This transforms the instrument into a small polyphonic ensemble, creating the fascination of invisible sound. It even enables an instrument to be a generator of visual information; it enables the composer to extend his composition into the visual domain, treating video like sound. It will be exciting to see how these features change our understanding and perception of music.

Bibliography

Brümmer, Ludger (Ed.). “The ZKM | Institute for Music and Acoustics.” Organised Sound 14/3 (December 2009) “ZKM 20 Years — a Celebration,” pp. 257–267.

Brümmer, Ludger, Joachim Goßmann, Chandrasekhar Ramakrishnan and Bernhard Sturm. “The ZKM Klangdom.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2006 (Paris: IRCAM – Centre Pompidou, 4–8 June 2006).

Bregman, Albert S. Auditory Scene Analysis — The perceptual organization of sound. Cambridge, MA: MIT Press, 1990.

Frisius, Rudolf. Karlheinz Stockhausen. Einführung in sein Gesamtwerk — Gespräche mit Karlheinz Stockhausen. Mainz: Schott Music, 1996.

Jaschinski, Andreas et al. (Eds.). “François Bayle.” Die Musik in Geschichte und Gegenwart. Kassel: Bärenreiter Verlag, 1999, pp. 551ff.

Neukom, Martin. Signale, Systeme und Klangsynthese. Grundlagen der Computermusik. Zürcher Musikstudien — Band 2. Bern: Peter Lang Publishing, 2003.

Ramakrishnan, Chandrasekhar. “Zirkonium: non-invasive software for sound spatialisation.” Organised Sound 14/3 (December 2009) “ZKM 20 Years — a Celebration,” pp. 268–276.

Roads, Curtis. The Computer Music Tutorial. Cambridge, MA: MIT Press, 1996.

Scherliess, Volker and A. Fordert. “Art. Konzert.” Die Musik in Geschichte und Gegenwart. Subject Encyclopedia 5, 1997.
