Virtual Agents in Live Coding

A Review of Past, Present and Future Directions

Machine learning is currently (2021–22) a trending topic in science, engineering and the arts (Alpaydın 2016). Bringing machine learning into the real-time domain is a well-known challenge because of the considerable time needed to carry out the many complex computations it requires (Collins 2008; Xambó, Lerch and Freeman 2018). Artificial Intelligence (AI) and improvisation have been investigated extensively in various artistic domains, including music. However, with a few notable exceptions discussed here, AI in the context of the improvisational practices inherent to live coding has been little explored. Live coding provides an exemplary scenario through which to investigate the potential of machine learning in performance, as it offers a live account of programming applied to music-making, characterized by the property of liveness (Tanimoto 2013).

Here we explore some of the present and future directions that research into, and practices using, virtual agents (VAs) in live coding might take. In discussions and writings from the early years of live coding practice, in addition to the promise and potential of a “new form of expression in computer music” (Collins et al. 2003, 321), the drawbacks and challenges of live coding were also highlighted, including the time required to write code that produces audible results, the dependency on inspiration (which is not always present when improvising) and the risk of code errors inherent to its liveness. Collaborative music live coding typically involves a group of at least two networked live coders, who can perform together while co-located in the same space, distributed across different spaces or in a combination of both (Barbosa 2003, 57–58). Collaborative music live coding is a promising approach to both music performance and computer science education, because it can promote an egalitarian approach to collaborative improvisation in the former and peer learning in the latter (Xambó et al. 2017). Since it might sometimes be impractical for one artist to collaborate with another human, for example because of travel limitations or scheduling issues, a virtual peer can be an alternative. The challenges and opportunities of collaborating with VAs are the focus of the present article.

Our review of past and present perspectives on using VAs in live coding practice focusses mainly on the period 2010–2020 and points to future directions. To identify relevant articles, we searched several databases (DBLP, Google Scholar, ICLC Proceedings, JSTOR Search, Scopus, Zenodo) for publications from that period matching the keyword strings “live coding + machine learning”, “live coding + agent” and “live coding + virtual agent”. 1[1. The main analysis was done in the summer of 2020, with bibliographic updates in the winter of 2021.] We excluded references that explore immersive experiences (e.g., virtual worlds, audiovisual experiences), as they fall outside the scope of this research. We began with an initial text analysis of the documents. Our analytical approach is based on thematic analysis (Braun and Clarke 2006), using NVivo software to identify the main topics discussed here. Thirty items from 2003–2020 were analyzed; more generic references were excluded from the study. The references have been grouped into two main categories: conceptual foundations and practical examples. Figure 1 illustrates the most frequent specialized words encountered in the analyses; these centre on vocabulary from computer music, computing and machine learning, among others.

The discussion and propositions here follow up on the author’s previous research exploring the potential role of a virtual agent in counterbalancing the limitations of human live coding (Xambó et al. 2017). In turn, this review provides a critical context in which to position our project “MIRLCAuto: A Virtual Agent for Music Information Retrieval in Live Coding,” funded by the Engineering and Physical Sciences Research Council (EPSRC) Human Data Interaction (HDI) Network Plus. Contributions to the music performance domain can also inform, more broadly, the emerging fields of machine learning and computational creativity applied to real-time contexts (e.g., creative computing, music education, music production, performing arts) and help to move these fields forward.

Conceptual Foundations

Broader Context

Several areas inform the field of virtual agents (VAs) in live coding. Figure 2 shows the main keywords of disciplines and theoretical frameworks that are present in the selected readings. These include interdisciplinary fields (affective computing, genetic programming [GP], human-computer interaction [HCI], music technology), fields of Artificial Intelligence (AI) and creativity (computational creativity), fields of AI and computer music (algorithmic composition, computer-aided composition, laptop orchestra, machine musicianship), approaches to collaboration and AI (co-creation, multi-agent systems, participatory sense-making), and approaches to interactive music systems (musical interface design), among others. This variety illustrates the interdisciplinary character of the field.

Virtuality, Agency and Virtual Agency

The adjective and noun “virtual” come from the Latin adjective virtualis, relating to “power or potency”, and the noun virtūt-, virtus, referring to “manliness, valour, worth, merit, ability, particular excellence of character or ability, moral excellence, goodness, this quality personified as a goddess, any attractive or valuable quality, potency, efficacy, special property” (Oxford English Dictionary [OED]). In computing (of hardware, a resource and so on), the adjective refers to “not being physically present as such but made by software to appear to be so from the point of view of a programme or user” (OED).

Virtuality is the property of a computer system with the potential for enabling a virtual system (operating inside the computer) to become a real system by encouraging the real world to behave according to the template dictated by the virtual system. In philosophical terms, the property of virtuality is a system’s potential evolution from being descriptive to being prescriptive. (Turoff 1997, 38)

From this perspective, virtuality refers to how computers represent reality. The term “virtual” was used long before the appearance of computers and has multiple connotations, including the related term “virtue” as a personal quality, and the “liminal” property in virtual spaces that refers to temporary zones (Shields 2003, 1–17).

The noun and adjective “agent” come from the Latin agent-, agēns, present participle of agere, meaning “acting” or “active”. The noun refers to “a person who or thing that acts upon someone or something, one who or that which exerts power or the doer of an action,” whereas the adjective refers to “acting or exerting power” (OED). A commonly accepted definition of an agent is “one who acts, or who can act” (Franklin and Graesser 1996, 25); the term applies to humans and most animals, but also to computer-based entities. More precisely, an agent is defined as “anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators” (Russell and Norvig 2016, 35). A more specific working definition introduces the notion of being capable of acting independently:

An autonomous agent is a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future. (Franklin and Graesser 1996, 25)

According to Stuart Russell and Peter Norvig, a rational agent is described as follows:

[F]or each possible percept sequence 2[2. I.e. the complete history of everything the agent has ever perceived.], a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has. (Russell and Norvig 2016, 37)

Hence, “a rational agent should be autonomous” (Russell and Norvig 2016, 39).

What these definitions of an agent have in common is that they span from the most complex systems at one end, e.g., humans, with sophisticated senses and a range of possible actions to choose from, to the simplest systems at the other, e.g., thermostats, which are merely reactive because they simply respond to the fluctuating temperature of the environment. Russell and Norvig term the simplest type of agent a simple reflex agent, which only considers what it senses at present. Such agents “have the admirable property of being simple, but they turn out to be of limited intelligence” (Ibid., 49). Accordingly, it is worth noting that not every programme is a computer agent: a programme that does not continuously sense its environment, and act so as to affect what it senses later, does not qualify. As Russell and Norvig describe:

Computer agents are expected to do more [than just act]: operate autonomously, perceive their environment, persist over a prolonged time period, adapt to change, and create and pursue goals. (Russell and Norvig 2016, 4)
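To make the distinction concrete, the Python sketch below contrasts a simple reflex thermostat, which maps only its current percept to an action, with a hypothetical agent that keeps its percept sequence as internal state and uses the latest temperature change to anticipate cooling. The setpoint, the action names and the trend rule are illustrative assumptions, not taken from Russell and Norvig.

```python
from typing import List


def reflex_thermostat(temperature: float, setpoint: float = 20.0) -> str:
    """Simple reflex agent: the action depends only on the current percept."""
    return "heat_on" if temperature < setpoint else "heat_off"


class TrendThermostat:
    """Hypothetical agent that keeps its percept sequence as internal state."""

    def __init__(self, setpoint: float = 20.0) -> None:
        self.setpoint = setpoint
        self.history: List[float] = []  # every temperature perceived so far

    def act(self, temperature: float) -> str:
        self.history.append(temperature)
        # Assumed rule: extrapolate one step ahead using the most recent change.
        if len(self.history) > 1:
            trend = self.history[-1] - self.history[-2]
        else:
            trend = 0.0
        predicted = temperature + trend
        return "heat_on" if predicted < self.setpoint else "heat_off"


agent = TrendThermostat()
for reading in [21.0, 20.4]:
    print(reading, reflex_thermostat(reading), agent.act(reading))
```

With readings of 21.0 and then 20.4 degrees, the reflex thermostat keeps the heating off, whereas the stateful agent switches it on because its history shows the temperature falling.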

A software rational agent is often referred to as a virtual agent, which is the term that we use here. We are cautious about using the term “intelligent agent” as it can raise unrealistic expectations (Nwana 1996, 240), although the state of the art is progressing rapidly. From the above, we assume that VAs are autonomous and that they can range from simple to complex types.

Three primary non-mutually exclusive attributes have been identified by Hyacinth Nwana as preferred criteria in software agents (Ibid., 209 ff.):

- Autonomy. The capacity of agents to operate on their own.

- Cooperation. The ability to interact with other agents, whether human or software.

- Learning. The ability to learn in the process of reacting or interacting with the environment.

Three aspects related to what can constitute a rational agent have been distinguished by Russell and Norvig (2016, 38–39):

- Omniscience. The unrealistic ability to know the outcome of future actions (not to be confused with rationality and the ability to gather or explore information).

- Learning. The ability to learn from what it perceives.

- Autonomy. The ability to learn in order to compensate for partial or incorrect prior knowledge.

Accordingly, one of the advantages of a learning agent is that “it allows the agent to operate in initially unknown environments and to become more competent than its initial knowledge alone might allow” (Russell and Norvig 2016, 55). This is made possible by an additional learning element, which is responsible for improving the agent’s performance by continually modifying its behaviour according to feedback and learning goals. The learning element builds on the performance element, which is responsible for decision-making and is present in all the agents discussed here.
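As a minimal sketch of this division of labour, assuming a small fixed set of hypothetical musical actions and a numeric feedback signal standing in for a critic, the performance element below chooses the action with the highest current estimate, while the learning element updates that estimate from feedback. The action names and the incremental-average update are our assumptions; Russell and Norvig's general model also includes a critic and a problem generator, which are omitted here.

```python
from typing import Dict, Iterable


class LearningAgent:
    """Minimal learning agent split into a performance and a learning element."""

    def __init__(self, actions: Iterable[str], prior: float = 0.0) -> None:
        # Built-in knowledge: an initial value estimate for every action.
        self.values: Dict[str, float] = {a: prior for a in actions}
        self.counts: Dict[str, int] = {a: 0 for a in self.values}

    def performance_element(self) -> str:
        # Decision-making: choose the action with the highest estimate
        # (max keeps the first action listed when estimates are tied).
        return max(self.values, key=lambda a: self.values[a])

    def learning_element(self, action: str, feedback: float) -> None:
        # Improvement: keep the estimate equal to the mean feedback received
        # for this action, updated incrementally.
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]


# Hypothetical musical actions; in a full agent, feedback would come from a critic.
agent = LearningAgent(["repeat", "vary", "contrast"])
choice = agent.performance_element()  # all estimates start equal
agent.learning_element(choice, -1.0)  # negative feedback lowers its estimate
print(agent.performance_element())
```

Because this sketch always exploits its current best estimate, it never explores deliberately; that role belongs to the problem generator in Russell and Norvig's model.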

Machine Musicianship, Musical Metacreation and Musical Cyborgs

Other relevant terms related to VAs in computer music include machine musicianship, musical metacreation and musical cyborg.

Machine musicianship applies AI concepts and techniques to computer music systems that can learn and evolve (Rowe 2001). Notable examples include:

- Voyager. An interactive musical system created by George Lewis (2000) with the ability to improvise with a human improviser by combining responses and independent behaviours.

- The Continuator. A musical instrument developed by François Pachet (2003) that learns the performer’s style and plays interactively in that style.

- Shimon. A robotic musician developed by Guy Hoffman and Gil Weinberg (2010) that can improvise with humans.

Much of the literature on the subject thus far has focussed on virtual agents based on a call-and-response strategy, using, for example, a similar vs. contrasting response (Subramanian, Freeman and McCoid 2012) or an affective response (Wilson, Fazekas and Wiggins 2020). In contrast, our research considers a VA that goes beyond following the live coder’s actions. To embody the humanoid metaphor, we envision a virtual agent able to act both as a live coder and as a chatting peer (Xambó et al. 2017).

A specialized exploration of agents in computer music, in terms of computational simulations of musical creativity, can be found in Musical Metacreation (MuMe), which since 2020 has partnered with another research platform, the Computer Simulation of Music Creativity (CSMC), on the annual joint Conference on AI Music Creativity (AIMC). Connected with the creative practice of artificial life art, or metacreation (Whitelaw 2004), the field of musical metacreation investigates generative tools and theories applied to music creation, and its research includes collaboration between humans and creative VAs (Pasquier et al. 2016). Collaborative music live coding can be understood as a conversation between at least two people; regardless of the size of the group, VAs can be integrated alongside human agents (HAs). Further, beyond VA-HA collaboration, we envision the possibility of VA-VA collaboration (Xambó et al. 2017). Several research questions follow: Can multiple agents collaborate among themselves? How feasible is it? What would the computational cost be? To what extent should there be supervision, and how often? Collaboration between live-coding VAs can be seen as a particular case of multi-agent systems for music composition and performance, a widely researched topic (Miranda 2011).

Donna Haraway has described the cyborg (a contraction of cyb[ernetic] org[anism]) as “a cybernetic organism, a hybrid of machine and organism, a creature of social reality as well as a creature of fiction” (Haraway 1991, 149). This creature of social and fictional realities has profoundly influenced science, engineering and the arts, and it appears notably in music and live coding practices. The musical cyborg has been defined as “a figure which combines human creativity and digital algorithms to create sounds and moments that would not otherwise be possible through human production alone” (Witz 2020, 33). On the close relationship between the live coder and the algorithm, Jacob Witz further explains that “the live coded cyborg actively shapes the algorithms they use to optimize their output on an individual level” (Ibid., 35). Here we use the term musical cyborg as a metaphor for the cooperation between a human live coder and a virtual agent, particularly in a one-to-one relationship.

Theoretical Frameworks

A review of a number of existing theoretical frameworks can help in the design and evaluation of VA systems for live coding.

Margaret Boden has proposed a general definition of creativity as “the ability to generate novel, and valuable, ideas” (Boden 2009, 24). However, she acknowledges that assessing whether a computer is creative is in itself a philosophical enquiry. In computational creativity, we find some theoretical frameworks designed for understanding computational creative systems: e.g., the Standardised Procedure for Evaluating Creative Systems (SPECs) (Jordanous 2012) and the Creative Systems Framework (CSF) (Wiggins 2006), among others. In particular, CSF has been discussed in the context of live coding (McLean and Wiggins 2010; Wiggins and Forth 2018). Transformational creativity is highlighted as an important element for an agent to be considered creative, “in which an agent modifies its own behaviour by reflective reasoning” (Wiggins and Forth 2018, 274).

Steven Tanimoto (2013) proposes six levels of liveness in the process of programming. This theoretical framework looks into the relationship between the programmer’s actions and the computer’s responses. Beyond the four levels Tanimoto had earlier outlined — informative; informative and significant; informative, significant and responsive; informative, significant, responsive and live — two new levels are proposed. Level 5 is called tactically predictive and is slightly ahead of the programmer by predicting the programmer’s next action (e.g., lexical, musical, semantic) utilizing machine learning techniques. Level 6 is foreseen as strategically predictive, which indicates more intelligent predictions about the programmer’s intentions. Intelligent predictions are linked to agency and liveness, where not only the code but also the tool entail liveness:

The incorporation of the intelligence required to make such predictions into the system is an incorporation of one kind of agency — the ability to act autonomously. Agency is commonly associated with life and liveness. (One might argue that here, liveness has spread from the coding process to the tool itself.) (Tanimoto 2013, 34)

Other approaches to understanding VAs for live coding borrow concepts from studies of human collaboration in cognitive science and HCI, among others. A suitable model that takes into account human collaboration and improvisation is the enactive paradigm (Davis et al. 2015). This paradigm incorporates an enactive model of collaborative creativity and co-creation to describe improvised collaborative interactions with feedback from the environment. Within this paradigm, participatory sense-making is a key component, described as “negotiating emergent actions and meaning in concert with the environment and other agents” (Ibid., 114). In the context of a co-creative drawing partner, Davis et al. (2016) present a set of design recommendations for co-creative agents in alignment with participatory sense-making and open-ended collaboration. Accordingly, co-regulation of interaction between agents should form part of the process of participatory sense-making. The TOPLAP Manifesto declares that “Obscurantism is dangerous”; to avoid it, live coders typically show their screens during a performance. Thus, the live coder’s actions and algorithmic thoughts can be shared not only with co-creative agents but also with the audience, who can then also take part in this process of participatory sense-making.

Examples in Practice

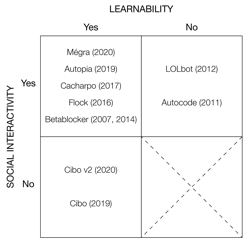

Several scholars have proposed agent typologies, notably Hyacinth Nwana (1996) and Stuart Russell and Peter Norvig (2016). We assume here that the VAs are autonomous (ranging from simple to complex agents). In this section, we analyze examples of VAs in live coding along two dimensions:

- Social interactivity. Does it cooperate with other agents, either human or virtual?

- Learnability. Does it learn, either online (in real time, during the performance) or offline (before the performance)?

Shared collective control in computer music has been described by Sergi Jordà (2005) as having two categories:

- Multiplicative actions. The product of individual contributions, or a series of highly interdependent processes.

- Summative actions. The sum of individual contributions, or independent processes, where there is little mutual interaction.

We draw on Jordà’s two categories when describing social interactivity, in order to reflect more precisely on the possibilities of collaboration in musical practice. Figure 3 summarizes the examples analyzed below according to our two-dimensional representation. The examples are presented in chronological order, by the year of the publication in which each was first presented.

Betablocker

Betablocker is a multi-threaded virtual machine designed for low-level live coding that can be used for algorithmic composition, as well as for sonification and visualization (Video 1). It was originally developed by Dave Griffiths (2007) using the Fluxus game engine and was operated with a game pad instead of a keyboard and mouse. It was later ported to the Nintendo DS, and a follow-up version was implemented in SuperCollider (Bovermann and Griffiths 2014). The engine only stops if it is externally halted; if it is left running with no user intervention, it might evolve into stable, repetitive behaviours. The language is highly constrained, with no distinction between programme and data.

Video 1. Dave Griffiths live coding using the Betablocker DS. Vimeo video “Betablocker DS realtime synth” (3:20) posted by “dave griffiths” on 29 May 2011.

With the Fluxus and Nintendo DS versions, it is possible to create several interactive software agents that modify themselves and each other. For example, while one programme plays sound in a loop, another can be made to overwrite parts of it, while it is playing, by means of an XOR operation. 3[3. The Boolean logic operation “exclusive or” returns “true” if the two inputs are different.] Betablocker also uses genetic algorithms with a customized fitness function to select the next generation of programmes. The SuperCollider version takes a more deterministic approach, counterbalanced by randomization functions.
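The XOR overwrite can be illustrated with a toy model in Python, assuming a shared byte memory in which code and data are indistinguishable and addresses wrap around. The function name and the memory layout are hypothetical and do not reproduce Betablocker's actual instruction set.

```python
def xor_overwrite(memory: bytearray, start: int, writer: bytes) -> None:
    """XOR a (hypothetical) writer agent's bytes into shared memory in place.

    Code and data share the same bytes, so overwriting 'data' may also
    rewrite the instructions another agent is currently executing.
    """
    for offset, byte in enumerate(writer):
        address = (start + offset) % len(memory)  # assumed wrap-around addressing
        memory[address] ^= byte


memory = bytearray([0x01, 0x02, 0x03, 0x04])  # toy programme owned by one agent
patch = bytes([0xFF, 0x0F, 0x01])            # bytes contributed by another agent
xor_overwrite(memory, 2, patch)
print(memory.hex())
```

Because XOR is its own inverse, applying the same overwrite twice restores the original bytes; in this toy model, every overwrite is therefore reversible.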

Betablocker is an example of a virtual multi-agent environment that hosts simple autonomous agents that can interact with other agents (multiplicative actions) and also has the ability to learn using evolutionary algorithms. In the words of the system’s authors, “Betablocker can be viewed as a companion for live coding that one has the opportunity to get to know, collaborate with, and — sometimes — work against” (Ibid., 52).

ixi lang

In 2009, Thor Magnusson designed ixi lang, a language intended for beginners in live coding and presented in his paper “ixi lang: A SuperCollider parasite for live coding” (Magnusson 2011). Its syntax is simple and allows users to manipulate musical patterns in a constrained environment. The interaction metaphor consists of creating agents that are assigned instruments (melodic, percussive, sample-based or custom) and play scores defined by musical patterns.

Autocode (Video 2) is an autonomous and deterministic virtual multi-agent environment within ixi lang. When invoking the VA, it is possible to define the number of agents, or “musicians”, to be created. The musicians are then generated in real time and assigned musical patterns by a deterministic random behaviour that chooses, among other things, the type of instrument and the score to be played. Although multiple agents can be created that play in sync, their processes seem to be independent of one another.
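The deterministic random behaviour described above can be sketched with a seeded pseudo-random generator: the same seed always yields the same ensemble, and each musician is generated independently of the others, in line with summative actions. The instrument palette, the eight-step score format and the function name are hypothetical and do not reflect ixi lang's syntax or implementation.

```python
import random
from dataclasses import dataclass
from typing import List

INSTRUMENTS = ["melodic", "percussive", "sample"]  # hypothetical palette
STEPS = 8  # hypothetical score length


@dataclass
class Musician:
    name: str
    instrument: str
    score: str  # one character per step: "x" plays, "." rests


def autocode(num_agents: int, seed: int) -> List[Musician]:
    """Generate an ensemble of independent musicians from a seeded generator."""
    rng = random.Random(seed)  # same seed -> same sequence of choices
    ensemble = []
    for i in range(num_agents):
        instrument = rng.choice(INSTRUMENTS)
        score = "".join(rng.choice("x.") for _ in range(STEPS))
        ensemble.append(Musician(f"musician{i}", instrument, score))
    return ensemble


for musician in autocode(3, seed=42):
    print(musician)
```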

Autocode exemplifies a virtual multi-agent environment that behaves autonomously by creating musical agents with the ability to cooperate by performing live coding as summative actions. However, there is no apparent ability to learn.

LOLbot

Sidharth Subramanian, Jason Freeman and Scott McCoid created a VA called LOLbot. It was implemented in Java and resides in LOLC (Laptop Orchestra Live Coding), a text-based environment for collaborative live coding improvisation presented in 2011 (Freeman and Van Troyer 2011). In a performance setting, LOLbot runs on a separate computer and appears as another ensemble member in the interface. The motivation for developing this agent was threefold: to understand the nature of human performance, to bring a new character to the ensemble and to provide a tool for practising (Subramanian, Freeman and McCoid 2012). Time synchronization between performers is managed via a shared clock on the LOLC server. The live coders can create rhythmic patterns based on available sound samples. LOLC has an interface with an instant-messaging feature and a visualization of the created patterns, which can be shared and borrowed (multiplicative actions). LOLbot observes and analyzes what the human performers are doing, encapsulating its observations as patterns. The VA then selects suitable patterns to play in the ensemble using the LOLC syntax. LOLbot uses pattern-matching algorithms to identify the preferred pattern to play, according to a coherence/contrast metric. This metric is set in real time by the human performers using a slider, whose value defines the character of the agent, from a coherent behaviour (similar to the humans’ patterns) to a contrasting one.
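The following Python sketch illustrates one way a coherence/contrast slider could steer pattern selection. It assumes rhythmic patterns encoded as strings of hits (x) and rests (.), a similarity measure based on step-wise agreement, and a linear mapping from slider value to target similarity; all three are our assumptions, not LOLbot's published algorithm.

```python
from typing import Sequence


def similarity(a: str, b: str) -> float:
    """Fraction of steps on which two equal-length patterns agree."""
    if len(a) != len(b):
        raise ValueError("patterns must have the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def choose_pattern(observed: Sequence[str], candidates: Sequence[str],
                   contrast: float) -> str:
    """Pick the candidate whose mean similarity to the observed patterns is
    closest to the slider's target (0.0 = coherent, 1.0 = contrasting)."""
    target = 1.0 - contrast

    def distance_to_target(candidate: str) -> float:
        mean_sim = sum(similarity(candidate, p) for p in observed) / len(observed)
        return abs(mean_sim - target)

    return min(candidates, key=distance_to_target)


observed = ["x...x...", "x.x.x..."]                # what the humans are playing
candidates = ["x...x...", ".x.x.x.x", "xxxxxxxx"]  # the agent's options
for slider in (0.0, 0.7, 1.0):
    print(slider, choose_pattern(observed, candidates, slider))
```

With the slider at 0.0 the agent echoes a pattern close to those of the human performers, at 1.0 it picks the most dissimilar candidate, and intermediate values select patterns in between.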

LOLbot is an example of an autonomous agent that can interact with other agents (in this case humans), but with no apparent internal feedback mechanism for improving its behaviour, that is, no apparent ability to learn.

Flock

Video 3. Shelly Knotts, Holger Ballweg and Jonas Hummel performing Knotts’ Flock at ICLC 2015 at Left Bank Leeds (UK) in July 2015. Vimeo video “Flock (2015)” (10:55) posted by Shelly Knotts on 9 November 2015.

Shelly Knotts’ Flock is a system implemented in JITlib, a SuperCollider library that provides live coding functionality (Knotts 2016). The system has voting agents that “listen” to the music made by human live coders and vote for their preferred audio stream according to their pre-defined musical taste (Video 3). The audio analysis uses machine listening techniques, employing the SCMIR library in SuperCollider. The votes determine the level of each audio stream in the mix. The agents’ preferences can change over time depending on the other agents’ votes, following a mechanism inspired by a bipartisan political model and by flock theory in decentralized networks.
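A simplified Python sketch of this voting loop follows. It assumes that each audio stream is summarized by a single normalized feature value (standing in for the SCMIR-based analysis), that each agent votes for the stream closest to its preferred value, and that preferences drift towards the stream that won the vote. The feature, the drift rate and the function names are hypothetical; Knotts' bipartisan political model is not reproduced.

```python
from collections import Counter
from typing import Dict, List


def vote(preferences: List[float], features: Dict[str, float]) -> Counter:
    """Each agent votes for the stream whose feature is closest to its taste."""
    return Counter(
        min(features, key=lambda stream: abs(features[stream] - taste))
        for taste in preferences
    )


def mix_levels(votes: Counter, features: Dict[str, float]) -> Dict[str, float]:
    """Level of each stream in the mix, proportional to its share of votes."""
    total = sum(votes.values())
    return {stream: votes[stream] / total for stream in features}


def update_preferences(preferences: List[float], features: Dict[str, float],
                       votes: Counter, rate: float = 0.5) -> List[float]:
    """Flock-like drift: every agent's taste moves towards the winning stream."""
    winner = votes.most_common(1)[0][0]
    return [taste + rate * (features[winner] - taste) for taste in preferences]


features = {"a": 0.2, "b": 0.8}  # hypothetical normalized feature per stream
tastes = [0.1, 0.3, 0.9]         # one preferred value per voting agent
for round_number in range(3):
    votes = vote(tastes, features)
    print(round_number, mix_levels(votes, features))
    tastes = update_preferences(tastes, features, votes)
```

In this toy run, the minority agent's taste is pulled towards the majority until, in the third round, all agents vote for the same stream, which is one possible reading of flock-like convergence.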

Flock exemplifies a virtual multi-agent system with autonomous agents that can interact with other agents and have the ability to constantly modify their own behaviour according to changes in the environment, including other agents’ behaviours (multiplicative actions).

Cacharpo

Video 4. Luis Navarro co-performing with Cacharpo. Vimeo video “Cacharpo: co-performing cumbia sonidera with deep abstractions” (5:49) posted by “luis navarro del angel” on 27 July 2017.

Developed by Luis Navarro and David Ogborn, Cacharpo is a VA capable of live coding that works as a co-performer of a human live coder (Navarro and Ogborn 2017). The music it generates is inspired by cumbia sonidera, a genre from Mexico with roots in Colombian cumbia (Video 4). The motivation is to provide a companion that can bring new dynamics into solo or group performances. The agent “listens” to the audio produced by the live coder using machine listening and music information retrieval techniques implemented in SuperCollider. Artificial Neural Networks (ANNs) developed in Haskell are used in real time to identify characteristics of the music (e.g., cumbia sonidera roles, instruments and relevant audio features). The ANNs are trained offline, prior to use, with a data set of sound recordings from SuperCollider performances. An algorithm in SuperCollider is in charge of generating the code.

Cacharpo is an example of an autonomous agent that can interact with other agents (in this case humans) with summative actions and can learn during offline training.

Cibo and Cibo v2

Video 5. Performance demonstrating the Cibo Agent. Vimeo video “Cibo Safeguard” (9:42) posted by “Blind Elephants”.

Jeremy Stewart and Shawn Lawson built Cibo in 2019 with interconnected neural networks that generate TidalCycles code in a solo performance style using samples from a training corpus (Video 5). An encoder-decoder sequence-to-sequence architecture, typically used for language translation, is implemented using the PyTorch library in Python. The training was based on recordings of the authors’ own TidalCycles performances. Some open questions emerged from the results, notably:

When live-coding’s [sic] intent is to show the work, what does it mean if Cibo shows its work and yet no-one, not even the creators, understand entirely how it [is] working? (Stewart and Lawson 2019, 7)

The authors have suggested that Cibo fulfils Tanimoto’s fifth level of liveness, “tactically predictive”.

Cibo v2 (Stewart et al. 2020) is a VA for TidalCycles that builds on the first implementation. The second version (Video 6) adds more neural network modules to improve the performance (e.g., progression, variation). The agent has been trained on recordings of performances by several live coders using TidalCycles and operates based on characteristics learned from the training material: “The resulting performance agent produces TidalCycles code that is highly reminiscent of the provided training material, while offering a unique, non-human interpretation of TidalCycles performances” (Ibid., 20).

Cibo and Cibo v2 are examples of autonomous agents that can learn during offline training. Although it is reported that the intention is to explore the agent as a co-performer, at the moment the agent performs solo.

Autopia

Video 7. Autopia playing on its own and then with human live coders. Vimeo video “Autopia: An AI Collaborator for Live Coding Music Performances (Demo performance)” (1:22) posted by “Norah Lorway” on 19 July 2019.

Autopia is a system that can participate in a collaborative live coding performance. Developed in 2019 by Norah Lorway, Matthew Jarvis, Arthur Wilson, Edward Powley and John Speakman (Lorway et al. 2019), the agent generates code using genetic algorithms, over multiple generations of agents (Video 7). For example, a simple genetic crossover algorithm produces a new agent from two parent agents. At present, the system uses pre-defined templates and a GP algorithm in C# that automatically creates SuperCollider code. Inspired by gamification, the fitness function used to evaluate the members of the population is currently based on the audience’s feedback (Lorway, Powley and Wilson 2021). Audience members vote in real time through a mobile web app, scoring what they are hearing with a slider. The audience’s slider values are averaged, and the result is used to produce the next generation of agents.
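The evolutionary loop can be sketched in Python as follows, assuming that each agent's genome is a short list of numbers filling the slots of a SuperCollider code template, that the two agents with the highest averaged audience score become parents, and that children are produced by single-point crossover. Autopia itself is written in C#; the genome layout, the template and the selection scheme are our assumptions (Pbind is a standard SuperCollider pattern class, used here only as an illustrative template).

```python
import random
from statistics import mean
from typing import Dict, List, Sequence

Genome = List[float]  # assumed layout: [freq, amp, dur] slots of a template


def fitness(slider_values: Sequence[float]) -> float:
    """Fitness is the average of the audience's slider values."""
    return mean(slider_values)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover: the head of one parent, the tail of the other."""
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:]


def next_generation(population: Dict[str, Genome],
                    votes: Dict[str, Sequence[float]],
                    size: int, rng: random.Random) -> List[Genome]:
    """Breed a new generation from the two agents the audience rated highest."""
    ranked = sorted(population, key=lambda name: fitness(votes[name]), reverse=True)
    parent_a, parent_b = population[ranked[0]], population[ranked[1]]
    return [crossover(parent_a, parent_b, rng) for _ in range(size)]


def render(genome: Genome) -> str:
    """Fill a hypothetical SuperCollider template with the genome's values."""
    freq, amp, dur = genome
    return f"Pbind(\\freq, {freq}, \\amp, {amp}, \\dur, {dur}).play;"


rng = random.Random(1)
population = {"p1": [440.0, 0.5, 0.25], "p2": [220.0, 0.1, 1.0], "p3": [330.0, 0.3, 0.5]}
votes = {"p1": [0.9, 0.7], "p2": [0.2, 0.4], "p3": [0.6, 0.8]}  # slider values
for child in next_generation(population, votes, size=2, rng=rng):
    print(render(child))
```

Here p1 and p3 receive the highest averages, so every child starts with p1's frequency and ends with p3's duration; only the crossover point varies.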

The system was demonstrated by the networked live coding duo Electrowar (Norah Lorway and Arthur Wilson) at the Network Music Festival 2020, using Extramuros for the network collaboration (Ibid.). In this performance, Autopia started performing alone while the audience was voting and was then joined by the human performers.

Autopia exemplifies a virtual multi-agent system of autonomous agents that can interact among themselves with multiplicative actions and can also learn using GP.

Mégra

Video 8. Niklas Reppel creating a pattern language using the Mégra learning functions. Vimeo video “Mégra — Creating a Pattern Language on the Fly” (1:54) posted by “Park Ellipsen” on 3 March 2019.

The Mégra system, a stochastic environment that Niklas Reppel began working on in 2017, is based on Probabilistic Finite Automata as its data model, is written in Common Lisp and uses SuperCollider as its sound engine (Reppel 2020). The system is designed to learn from small data sets using machine learning techniques, which allows for real-time interaction (Video 8). Sequence generators can be created using different techniques (e.g., transition rules, training and inference, manual editing and so on). The model can be visualized in real time as the code is updated.
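A first-order Markov chain, a simple special case of a probabilistic finite automaton, illustrates the idea of learning a sequence generator from a small example in real time. The sketch below is ours: Mégra's own learning algorithm, its Common Lisp implementation and its syntax are not reproduced, and the single-character drum symbols are hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Dict

Model = Dict[str, Dict[str, float]]  # state -> {next symbol: probability}


def learn(sequence: str) -> Model:
    """Estimate first-order transition probabilities from one example."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(sequence, sequence[1:]):
        counts[current][following] += 1
    model: Model = {}
    for state, nexts in counts.items():
        total = sum(nexts.values())
        model[state] = {symbol: n / total for symbol, n in nexts.items()}
    return model


def generate(model: Model, start: str, length: int, rng: random.Random) -> str:
    """Random walk through the learned automaton."""
    output = [start]
    while len(output) < length:
        transitions = model.get(output[-1])
        if not transitions:  # no outgoing transition was observed: stop
            break
        symbols = list(transitions)
        weights = [transitions[s] for s in symbols]
        output.append(rng.choices(symbols, weights=weights)[0])
    return "".join(output)


# Hypothetical drum symbols: b = bass drum, s = snare, h = hi-hat.
model = learn("bsbsbhbs")
print(model)
print(generate(model, "b", 16, random.Random(3)))
```

Learning from `bsbsbhbs` gives a probability of 0.75 for `s` and 0.25 for `h` after `b`, and generated sequences contain only transitions observed in the example; retraining on a new phrase during a performance simply replaces the model.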

Mégra exemplifies an autonomous agent that can learn during online training. It also represents cooperation with the human live coder à la musical cyborg, where a continuous dialogue between the human live coder and the system creates a sense of unified identity.

Future Directions

Potential Research in Live Coding

In the process of analyzing the various readings and projects outlined above, a number of further research ideas have emerged.

Of all the VAs investigated here, only Betablocker (Bovermann and Griffiths 2014; Griffiths 2007) has explicitly explored live coding beyond the laptop and keyboard, in this case with a game pad and a Nintendo DS. Researching this topic further seems of interest and could align with research on the Internet of Things and smart objects (Fortino and Trunfio 2014), as well as the Internet of Musical Things (Turchet et al. 2018). A prominent criticism of performing music with personal computers, and of the genre of laptop music, has been the lack of bodily interaction, as well as the lack of transparency of the performer’s actions. Live coding represents a step towards avoiding obscurantism by projecting the performer’s screen showing the code. However, the performer’s actions can still be difficult to understand for an audience with little code literacy. It is therefore important to emphasize the legibility of the code, so that the processes and decisions of both the VA live coder and the human live coder are clear at all times. How to find the right balance between simplicity and complexity remains an open question: “Live coding languages need to avoid unnecessary detail while keeping interesting possibilities open” (Griffiths 2007, 179).

Inspired by the human-centred approach of using machine learning algorithms as a creative musical tool (Fiebrink and Caramiaux 2018), the Mégra system (Reppel 2020) brings the training process of machine learning into the live coding performance, so that it becomes part of the “algorithmic thinking” of the live coder. The online training featured in this system contrasts with the offline training of Cacharpo (Navarro and Ogborn 2017), Cibo (Stewart and Lawson 2019) and Cibo v2 (Stewart et al. 2020). In a survey on the design of future languages and environments for live coding (Kiefer and Magnusson 2019), respondents highlighted the following desirable features of a live coding language for machine listening and machine learning: flexibility, hackability, musicality and instrumentation of the machine learning training. It would be interesting to compare the same system using both online and offline learning to identify any musical and computational differences. Sema is a user-friendly, web-based system that allows practitioners to create their own live coding languages and explore machine learning in their live coding practice (Bernardo, Kiefer and Magnusson 2020). The findings from a workshop with Sema indicated that programming language design and machine learning can be challenging topics for beginners in computer science. Further research is needed to assess how machine learning concepts might be integrated into the legibility of the code during a live coding session.
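
One way to frame the suggested comparison between online and offline learning is a predict-then-update replay: both models start from the same training corpus, but only the online model keeps learning from each event as the performance unfolds. The Python sketch below assumes a deliberately simple first-order transition model over symbolic pattern labels; the names (`TransitionModel`, `train_offline`, `compare`) and the hit-count metric are hypothetical and are not taken from Mégra, Cacharpo, Cibo or Cibo v2. A real study would also need musical criteria, not only prediction accuracy.

```python
from collections import Counter, defaultdict


class TransitionModel:
    """Hypothetical first-order model of which pattern follows which."""

    def __init__(self):
        # counts[prev][nxt]: how often pattern nxt followed pattern prev
        self.counts = defaultdict(Counter)

    def update(self, prev, nxt):
        self.counts[prev][nxt] += 1

    def predict(self, prev):
        """Most frequent successor of `prev`, or None if `prev` is unseen."""
        successors = self.counts.get(prev)
        if not successors:
            return None
        return successors.most_common(1)[0][0]


def train_offline(corpus):
    """Fit a model once, before the performance, from a training corpus."""
    model = TransitionModel()
    for prev, nxt in zip(corpus, corpus[1:]):
        model.update(prev, nxt)
    return model


def compare(corpus, performance):
    """Replay a performance and count correct next-pattern predictions.

    Both models start from the same corpus. The offline model stays frozen;
    the online model is updated after each prediction (predict, then learn).
    """
    offline = train_offline(corpus)
    online = train_offline(corpus)
    hits = {"offline": 0, "online": 0}
    for prev, nxt in zip(performance, performance[1:]):
        hits["offline"] += int(offline.predict(prev) == nxt)
        hits["online"] += int(online.predict(prev) == nxt)
        online.update(prev, nxt)  # only the online model learns during play
    return hits


print(compare(["a", "b", "a", "b"], ["a", "c"] * 4))
```

Here `compare(["a", "b", "a", "b"], ["a", "c"] * 4)` returns `{'offline': 0, 'online': 3}`: the frozen offline model keeps expecting `b` after `a`, whereas the online model adapts to the new `c` material after a few events.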

Another open question is how to evaluate these new algorithms from musical and computational perspectives (Xambó, Lerch and Freeman 2018; Xambó et al. 2017). Who should evaluate these systems: the audience, the live coder or the virtual agent? How should they be evaluated? As previously discussed, there are several theoretical frameworks and approaches that we can borrow, but the literature on evaluating VAs in live coding is scarce. One criticism of these systems is the lack of formal evaluation methods, along with the need for better documentation to support reproducible research (Wilson, Fazekas and Wiggins 2020). More extensive and consistent documentation and publication of data and code would greatly benefit the advancement of practice and research in the community. [4. There are many ideas that could be advanced to increase efforts in this field, but they are beyond the scope of the present text.]

Exploring a laptop ensemble made up only of VAs is proposed in LOLbot’s research findings (Subramanian, Freeman and McCoid 2012). As discussed earlier, we envision multi-agent, or VA-VA, collaboration as an interesting research space (Xambó et al. 2017). However, the results of a survey on music AI software (Knotts and Collins 2020) reflect a general preference that VAs should not replace humans, as human creativity is difficult to model. A promising area of research is therefore the alternative roles that VAs can take beyond imitating humans; in line with this, Knotts and Collins found that “tools that take over or control the creative process are of less interest to music creators than open-ended tools with many possibilities” (Ibid., 504).

Speculative Futures

Perhaps there will come a time when virtual agents are completely autonomous, become independent of humans and create their own communities (Collins 2011). This possibility raises questions of machine ethics that should be considered when designing future generations of VAs. Isaac Asimov’s Three Laws of Robotics are a valuable precursor for thinking about this potential techno-societal challenge (Asimov 1950).

When interacting with humans (HA-VA), what role do we envision for VAs (Collins 2011; Subramanian, Freeman and McCoid 2012; Xambó et al. 2017)? Intelligent tutors, who can help learners develop musical and computational skills? Virtual musicians, who can create new, unimaginable music? Co-creative partners, who are always available to rehearse and perform? Computationally led companions, who can speed up the process of live coding? Tools that can help us understand how human live coders improvise together by modelling their behaviours?

If and when we reach the “strategically predictive” level 6 of liveness in programming (Tanimoto 2013), what will the role of the human live coder be? If the VA completes our code or even writes it on our behalf, can this still be considered a collaborative musical practice? Will this novel approach to music-making change the musical æsthetic (Kiefer and Magnusson 2019)? Is the intention to create a VA whose live coding performance is indistinguishable from that of a human live coder, one that passes the Turing test (McLean and Wiggins 2010)? Will we be able to produce VAs that take more responsibility for creative outputs than they do at present (Wiggins and Forth 2018)?

Conclusion

We have presented a review of past, present and future perspectives on research and practice involving virtual agents in live coding. A set of selected references was organized into two main categories: conceptual foundations and practical examples. Under conceptual foundations, we outlined key terms and theoretical frameworks that can help in understanding VAs in live coding. Under practical examples, we described exemplary instances of VAs in live coding along two dimensions, social interactivity and learnability, assuming that the VAs are autonomous. We concluded by envisioning potential further research and speculative futures.

Live coding is a promising space in which to explore AI and computational creativity. We have seen a range of perspectives and explorations of what a VA live coder can mean. We are just at the beginning of a technologically promising and conceptually exciting journey.

Acknowledgments

The MIRLCAuto project is funded by an EPSRC HDI Network Plus Grant — Art, Music, and Culture theme. Special thanks to Gerard Roma for inspiring conversations during the writing of this article.

December 2021, April 2022

Bibliography

Alpaydin, Ethem. Machine Learning: The new AI. Cambridge MA: The MIT Press, 2016.

Asimov, Isaac. I, Robot. Garden City NY: Doubleday, 1950.

Barbosa, Álvaro. “Displaced Soundscapes: A Survey of network systems for music and sonic art creation.” Leonardo Music Journal 13 (December 2003) “Groove, Pit and Wave — Recording, Transmission and Music,” pp. 53–59. http://doi.org/10.1162/096112104322750791

Bernardo, Francisco, Chris Kiefer and Thor Magnusson. “Designing for a Pluralist and User-Friendly Live Code Language Ecosystem with Sema.” ICLC 2020. Proceedings of the 5th International Conference on Live Coding (Limerick, Ireland: Irish World Academy, University of Limerick, 5–7 February 2020), pp. 41–57. http://iclc.toplap.org/2020 | http://doi.org/10.5281/zenodo.3939228

Boden, Margaret A. “Computer Models of Creativity.” AI Magazine 30/3 (Fall 2009), pp. 23–34. http://doi.org/10.1609/aimag.v30i3.2254

Bovermann, Till and Dave Griffiths. “Computation as Material in Live Coding.” Computer Music Journal 38/1 (Spring 2014) “Live Coding,” pp. 40–53. http://doi.org/10.1162/COMJ_a_00228

Braun, Virginia and Victoria Clarke. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3/2 (2006), pp. 77–101. http://doi.org/10.1191/1478088706qp063oa

Collins, Nick. “Reinforcement Learning for Live Musical Agents.” ICMC 2008 — “Roots/Routes”. Proceedings of the 34th International Computer Music Conference (Belfast, Northern Ireland: SARC — Sonic Arts Research Centre, Queen’s University Belfast, 24–29 August 2008). http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.147.2430 [Accessed 31 March 2022]

_____. “Trading Faures: Virtual musicians and machine ethics.” Leonardo Music Journal 21 (December 2011) “Beyond Notation: Communicating Music,” pp. 35–39. http://doi.org/10.1162/LMJ_a_00059

Collins, Nick, Alex McLean, Julian Rohrhuber and Adrian Ward. “Live Coding in Laptop Performance.” Organised Sound 8/3 (December 2003), pp. 321–330. http://doi.org/10.1017/S135577180300030X

Davis, Nicholas, Chih-Pin Hsiao, Yanna Popova and Brian Magerko. “An Enactive Model of Creativity for Computational Collaboration and Co-Creation.” In Creativity in the Digital Age. Edited by Nelson Zagalo and Pedro Branco. London: Springer-Verlag, 2015, pp. 109–133. http://doi.org/10.1007/978-1-4471-6681-8_7

Davis, Nicholas, Chih-Pin Hsiao, Kunwar Yashraj Singh, Lisa Li and Brian Magerko. “Empirically Studying Participatory Sense-Making in Abstract Drawing with a Co-Creative Cognitive Agent.” IUI 2016. Proceedings of the 21st International Conference on Intelligent User Interfaces (Sonoma CA, USA: Association for Computing Machinery, 7–10 March 2016), pp. 196–207. http://doi.org/10.1145/2856767.2856795

Fiebrink, Rebecca A. and Baptiste Caramiaux. “The Machine Learning Algorithm as Creative Musical Tool.” In Oxford Handbook of Algorithmic Music. Edited by Alex McLean and Roger T. Dean. Oxford: Oxford University Press, 2018, pp. 181–208. http://doi.org/10.1093/oxfordhb/9780190226992.013.23

Fortino, Giancarlo and Paolo Trunfio (Eds.). Internet of Things Based on Smart Objects: Technology, middleware and applications. Springer, 2014. http://doi.org/10.1007/978-3-319-00491-4

Franklin, Stan and Art Graesser. “Is it an Agent, or just a Program? A Taxonomy for autonomous agents.” In Intelligent Agents III: Agent theories, architectures and languages. Proceedings of the ECAI ’96 Workshop (ATAL) (Budapest, Hungary: 12–13 August 1996). Edited by Jörg P. Müller, Michael J. Wooldridge and Nicholas R. Jennings. Berlin: Springer, 1996, pp. 21–35. http://doi.org/10.1007/BFb0013570

Freeman, Jason and Akito Van Troyer. “Collaborative Textual Improvisation in a Laptop Ensemble.” Computer Music Journal 35/2 (Summer 2011) “Communication in Performance,” pp. 8–21. http://doi.org/10.1162/COMJ_a_00053

Griffiths, Dave. “Game Pad Live Coding Performance.” In Die Welt als virtuelles Environment. Edited by Johannes Birringer, Thomas Dumke and Klaus Nicolai. Dresden: Trans-Media-Akademie Hellerau, 2007, pp. 169–179.

Haraway, Donna J. “A Cyborg Manifesto: Science, technology and socialist feminism in the late twentieth century.” In Simians, Cyborgs and Women: The reinvention of nature. New York: Routledge, 1991, pp. 149–181. http://doi.org/10.1007/978-1-4020-3803-7_4

Hoffman, Guy and Gil Weinberg. “Shimon: An interactive improvisational robotic marimba player.” CHI ’10 Extended Abstracts on Human Factors in Computing Systems (Atlanta GA, USA: Georgia Tech, 10–15 April 2010), pp. 3097–3102. http://doi.org/10.1145/1753846.1753925

Jordà, Sergi. “Multi-user Instruments: Models, examples and promises.” NIME 2005. Proceedings of the 5th International Conference on New Interfaces for Musical Expression (Vancouver: University of British Columbia, 26–28 May 2005), pp. 23–26. http://www.nime.org/2005 | http://doi.org/10.5281/zenodo.1176760

Jordanous, Anna. “A Standardised Procedure for Evaluating Creative Systems: Computational creativity evaluation based on what it is to be creative.” Cognitive Computation 4/3 (September 2012) “Computational Creativity, Intelligence and Autonomy,” pp. 246–279. http://doi.org/10.1007/s12559-012-9156-1 [Also see the author’s doctoral dissertation “Evaluating Computational Creativity: A Standardised procedure for evaluating creative systems and its application” (University of Sussex, 2012).]

Kiefer, Chris and Thor Magnusson. “Live Coding Machine Learning and Machine Listening: A Survey on the design of languages and environments for live coding.” ICLC 2019. Proceedings of the 4th International Conference on Live Coding (Madrid, Spain: Medialab Prado, 16–18 January 2019), pp. 353–358. http://iclc.toplap.org/2019 | http://doi.org/10.5281/zenodo.3946188

Knotts, Shelly. “Algorithmic Interfaces for Collaborative Improvisation.” ICLI 2016. Proceedings of the 3rd International Conference on Live Interfaces (Brighton, UK: University of Sussex, 29 June – 3 July 2016). http://thormagnusson.github.io/liveinterfaces | Available at http://users.sussex.ac.uk/~thm21/ICLI_proceedings/2016/Colloquium/137_ICLI2016_DC_ShellyKnotts.pdf [Accessed 13 March 2021]

Knotts, Shelly and Nick Collins. “A Survey on the Uptake of Music AI Software.” NIME 2020. Proceedings of the 20th International Conference on New Interfaces for Musical Expression (Birmingham, UK: Royal Birmingham Conservatoire, 21–25 July 2020). http://www.nime.org/2020 | http://doi.org/10.5281/zenodo.4813499

Lewis, George E. “Too Many Notes: Computers, complexity and culture in Voyager.” Leonardo Music Journal 10 (December 2000) “Southern Cones Introduction,” pp. 33–39. http://doi.org/10.1162/096112100570585

Lorway, Norah, Matthew R. Jarvis, Arthur Wilson, Edward J. Powley and John A. Speakman. “Autopia: An AI collaborator for gamified live coding music performances.” AISB 2019. Proceedings of the Society for the Study of Artificial Intelligence and Simulation of Behaviour Convention (Cornwall, UK: Falmouth University, 16–18 April 2019). Available at http://repository.falmouth.ac.uk/3326/1/AISB2019_Live_Coding_Game.pdf [Accessed 30 May 2022]

Lorway, Norah, Edward J. Powley and Arthur Wilson. “Autopia: An AI collaborator for live networked computer music performance.” AIMC 2021 — Performing [With] Machines. Proceedings of the 2nd Conference on AI Music Creativity (Graz, Austria: University of Music and Performing Arts Graz, 18–22 July 2021). http://aimc2021.iem.at | Available at http://aimc2021.iem.at/wp-content/uploads/2021/06/AIMC_2021_Lorway_Powley_Wilson.pdf [Accessed 30 May 2022]

Magnusson, Thor. “ixi lang: A SuperCollider parasite for live coding.” ICMC 2011 — “innovation : interaction : imagination”. Proceedings of the 37th International Computer Music Conference (Huddersfield, UK: CeReNeM — Centre for Research in New Music at the University of Huddersfield, 31 July – 5 August 2011), pp. 503–506. http://hdl.handle.net/2027/spo.bbp2372.2011.101

McLean, Alex and Geraint Wiggins. “Live Coding Towards Computational Creativity.” ICCC 2010. Proceedings of the 1st International Conference on Computational Creativity (Lisbon, Portugal: University of Lisbon, 7–9 January 2010), pp. 175–179. Available at http://computationalcreativity.net/iccc2010/papers/mclean-wiggins.pdf [Accessed 30 May 2022]

Miranda, Eduardo Reck (Ed.). A-Life for Music: Music and computer models of living systems. Middleton WI: A-R Editions, Inc., 2011.

Navarro, Luis and David Ogborn. “Cacharpo: Co-performing cumbia sonidera with deep abstractions.” ICLC 2017. Proceedings of the 3rd International Conference on Live Coding (Morelia, Mexico: CMMAS — Centro Mexicano para la Música y Artes Sonoras, 4–8 December 2017). http://iclc.toplap.org/2017

Nwana, Hyacinth S. “Software Agents: An overview.” The Knowledge Engineering Review 11/3 (October/November 1996), pp. 205–244. http://doi.org/10.1017/S026988890000789X

Pachet, François. “The Continuator: Musical interaction with style.” Journal of New Music Research 32/3 (2003), pp. 333–341. http://doi.org/10.1076/jnmr.32.3.333.16861

Pasquier, Philippe, Arne Eigenfeldt, Oliver Bown and Shlomo Dubnov. “An Introduction to Musical Metacreation.” Computers in Entertainment 14/2 (December 2016), pp. 1–14. http://doi.org/10.1145/2930672

Reppel, Niklas. “The Mégra System: Small data music composition and live coding performance.” ICLC 2020. Proceedings of the 5th International Conference on Live Coding (Limerick, Ireland: Irish World Academy, University of Limerick, 5–7 February 2020), pp. 95–104. http://iclc.toplap.org/2020 | http://doi.org/10.5281/zenodo.3939154

Rowe, Robert. Machine Musicianship. Cambridge MA: The MIT Press, 2001.

Russell, Stuart J. and Peter Norvig. Artificial Intelligence: A Modern approach. 3rd edition. Essex, UK: Pearson, 2016.

Shields, Rob. The Virtual: Key ideas. London: Routledge, 2003.

Stewart, Jeremy and Shawn Lawson. “Cibo: An autonomous TidalCycles performer.” ICLC 2019. Proceedings of the 4th International Conference on Live Coding (Madrid, Spain: Medialab Prado and Madrid Destino, 16–18 January 2019). http://iclc.toplap.org/2019 | http://doi.org/10.5281/zenodo.3946196

Stewart, Jeremy, Shawn Lawson, Mike Hodnick and Ben Gold. “Cibo v2: Real-time live coding AI agent.” ICLC 2020. Proceedings of the 5th International Conference on Live Coding (Limerick, Ireland: Irish World Academy, University of Limerick, 5–7 February 2020), pp. 20–31. http://iclc.toplap.org/2020 | http://doi.org/10.5281/zenodo.3939174

Subramanian, Sidharth, Jason Freeman and Scott McCoid. “LOLbot: Machine musicianship in laptop ensembles.” NIME 2012. Proceedings of the 12th International Conference on New Interfaces for Musical Expression (Ann Arbor MI, USA: University of Michigan at Ann Arbor, 21–23 May 2012), pp. 421–424. http://www.nime.org/2012 | http://doi.org/10.5281/zenodo.1178425

Tanimoto, Steven L. “A Perspective on the Evolution of Live Programming.” LIVE 2013. Proceedings of the 1st International Workshop on Live Programming (San Francisco CA, USA: IEEE Computer Society, 19 May 2013), pp. 31–34. http://ieeexplore.ieee.org/xpl/conhome/6599030/proceeding | http://doi.org/10.1109/LIVE.2013.6617346

Toplap Contributors. “TOPLAP manifesto.” http://toplap.org/wiki/ManifestoDraft [Accessed 15 April 2022]

Turchet, Luca, Carlo Fischione, Georg Essl, Damián Keller and Mathieu Barthet. “Internet of Musical Things: Vision and challenges.” IEEE Access 6 (September 2018), pp. 61994–62017. http://doi.org/10.1109/ACCESS.2018.2872625

Turoff, Murray. “Virtuality.” CACM — Communications of the ACM 40/9 (September 1997), pp. 38–43. http://doi.org/10.1145/260750.260761

Whitelaw, Mitchell. Metacreation: Art and artificial life. Cambridge MA: The MIT Press, 2004.

Wiggins, Geraint A. “A Preliminary Framework for Description, Analysis and Comparison of Creative Systems.” Knowledge-Based Systems 19/7 (November 2006), pp. 449–458. http://doi.org/10.1016/j.knosys.2006.04.009

Wiggins, Geraint A. and Jamie Forth. “Computational Creativity and Live Algorithms.” In Oxford Handbook of Algorithmic Music. Edited by Alex McLean and Roger T. Dean. Oxford: Oxford University Press, 2018, pp. 267–292. http://doi.org/10.5281/zenodo.1239843

Wilson, Elizabeth, György Fazekas and Geraint A. Wiggins. “Collaborative Human and Machine Creative Interaction Driven through Affective Response in Live Coding System.” ICLI 2020. Proceedings of the 5th International Conference on Live Interfaces (Trondheim, Norway: Norwegian University of Science and Technology, 9–11 March 2020), pp. 239–244. Available at http://live-interfaces.github.io/liveinterfaces2020/assets/papers/ICLI2020_paper_52.pdf [Accessed 2 April 2022]

Witz, Jacob. “Re-coding the Musical Cyborg.” ICLC 2020. Proceedings of the 5th International Conference on Live Coding (Limerick, Ireland: Irish World Academy, University of Limerick, 5–7 February 2020) pp. 32–40. http://iclc.toplap.org/2020 | http://doi.org/10.5281/zenodo.3939123

Xambó, Anna, Alexander Lerch and Jason Freeman. “Music Information Retrieval in Live Coding: A Theoretical framework.” Computer Music Journal 42/4 (Winter 2018) “Music Information Retrieval in Live Coding,” pp. 9–25. http://doi.org/10.1162/comj_a_00484

Xambó, Anna, Gerard Roma, Pratik Shah, Jason Freeman and Brian Magerko. “Computational Challenges of Co-creation in Collaborative Music Live Coding: An outline.” Co-Creation Workshop. ICCC 2017. Proceedings of the 8th International Conference on Computational Creativity (Atlanta GA, USA: Georgia Institute of Technology, 19–23 June 2017). http://computationalcreativity.net/iccc2017 | Available at http://computationalcreativity.net/iccc2017/CCW/CCW2017_paper_6.pdf [Accessed 2 April 2022]

Software, Agents and Communities

AIMC — AI Music Creativity. http://aimusiccreativity.org [Accessed 22 December 2021]

Betablocker. http://fo.am/activities/betablocker [Accessed 2 April 2022]

Extramuros. http://github.com/dktr0/extramuros [Accessed 22 December 2021]

Fluxus. https://www.pawfal.org/fluxus [Accessed 22 December 2021]

ixi lang. http://www.ixi-audio.net [Accessed 2 April 2022]

JITlib. SuperCollider library. http://doc.sccode.org/Overviews/JITLib.html [Accessed 22 December 2021]

MuMe — Musical Metacreation. http://musicalmetacreation.org [Accessed 11 August 2020]

NVivo. http://www.qsrinternational.com/nvivo-qualitative-data-analysis-software [Accessed 11 August 2020]

PyTorch. Python library. http://pytorch.org [Accessed 22 December 2021]

SCMIR. SuperCollider library. http://github.com/sicklincoln/SCMIR [Accessed 22 December 2021]

TidalCycles. http://tidalcycles.org [Accessed 22 December 2021]
