“In Flight” and Audio Spray Gun

Generative composition of large sound-groups

In Flight (2014) is a fixed-media composition for 8-channel surround sound. It was composed in its entirety using Audio Spray Gun, a computer programme that generates and spatializes large groups of sound-events derived from a single sound sample. The software is described, with a stereo reduction of the work serving as an illustration of its use.

The processing power of modern computers offers sonic possibilities that are too complex to explore at the level of single events. For some years, the automated generation of numerous sound events has been explored using short samples in both granular synthesis (Roads 2001) and particle systems for audio (Kim-Boyle 2005; Fonseca 2013), but only recently has it become possible, with relatively inexpensive computers, to work with sources of longer duration. 1[1. In the case of Audio Spray Gun, these are typically 250 ms to a few seconds long.] Audio Spray Gun is an experimental tool for fixed-media composition that simultaneously generates and spatializes large groups of such sources, all derived from a single sound sample.

In Audio Spray Gun, “sound-events” are treated as points in a four-dimensional parameter space. These points are generated using simple rules that constrain otherwise random parameter choices to a specific locus which may be transformed over time by means of expansion, contraction and translation. This produces a sequence of events that is then rendered to multi-channel audio.

Each time such a sequence is created, the characteristics of its constituent events may vary significantly, but its gross features remain strictly defined. Thus, when the same process is executed a number of times, the results obtained are nearly identical at the meso-time scale 2[2. Time periods measured in seconds that govern the local (as opposed to global) structure of a composition (Roads 2001, 14).] but differ at the level of individual sound-events.

Sound groups generated in this fashion can be made up of several hundred events running over periods of a few seconds to a few minutes. While each event is stationary and distinct, the overall effect of so many overlapping sounds can produce dramatic apparent motion around the sound space.

The Analogy

Audio Spray Gun is so named for its similarity to the spray gun function common to many computer graphics programmes (Video 1). This graphical simulation of an aerosol spray distributes coloured dots at random locations within a circular locus on the canvas. This locus may be moved over time, and the density of the dots within it is controlled by the speed at which the user moves the mouse.

Audio Spray Gun works in a similar fashion, but because it operates in a time-based medium, its output is formed by the trajectory and transformation of the locus rather than by a final static image. This output, referred to here as a “sound-group”, is a collection of events generated at random inside a locus that shifts over time within the parameter space. The events that make up the group are analogous to the dots that make up the graphical image, and the locus within which they are constrained is analogous to the moving circle produced by the spray gun on screen.

Events and Loci

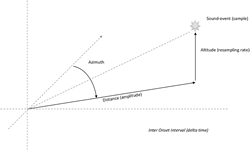

Audio Spray Gun 3[3. Version 0.61b at the time of writing.] operates in a four-dimensional space whose axes are inter-onset interval (delta time), resampling rate, azimuth and distance. Each sound-event generated by the programme is described by five parameters (Fig. 1):

- The file name of the sample to be played by the event;

- The radial distance in the horizontal plane of the event from the listener;

- The azimuth of the event with respect to the listener when facing forward;

- The rate at which the event will be played back (shown here as an altitude);

- The time preceding the next event.

Each event is stationary within the parameter space and has no spatial or spectral trajectory (for example, panning or pitch bend) of its own.

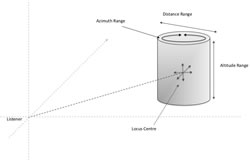

Consider a collection of events occurring within a fixed locus. In two dimensions, such a locus might consist of a circle on a horizontal plane. In this example, events generated by the system occur at random points within the circle, giving the impression, when rendered as audio, of a body of sound with specific width and depth, centred on a particular location. If this locus is extended into a third dimension (resampling rate), the events play back at random frequencies in a specific range, but still occupy the spatial locus described above (Fig. 2). If a fourth, temporal, dimension is added, the time between events is constrained in similar fashion.
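To make the fixed-locus case concrete, the following Python sketch draws events from a circular spatial locus combined with ranges of resampling rate and inter-onset interval. It assumes uniform distributions, a listener at the origin facing along the positive y axis, and distances in metres; the `Event` fields and function names are hypothetical and are not taken from Audio Spray Gun itself, which is written in SuperCollider.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Event:
    sample: str      # file name of the sample to play
    distance: float  # radial distance from the listener (assumed metres)
    azimuth: float   # degrees clockwise from straight ahead
    rate: float      # resampling rate, 1.0 = original pitch
    delta: float     # seconds until the next event

def random_point_in_circle(cx, cy, radius, rng):
    # The square root of a uniform variate spreads points evenly over the disc's area.
    r = radius * math.sqrt(rng.random())
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return cx + r * math.cos(theta), cy + r * math.sin(theta)

def event_in_fixed_locus(sample, centre, radius, rate_range, delta_range, rng=random):
    """Draw one event from a fixed locus: a circle in the horizontal plane
    plus ranges of resampling rate and inter-onset interval."""
    x, y = random_point_in_circle(centre[0], centre[1], radius, rng)
    distance = math.hypot(x, y)
    azimuth = math.degrees(math.atan2(x, y)) % 360.0  # listener at origin, facing +y
    return Event(sample, distance, azimuth,
                 rng.uniform(*rate_range), rng.uniform(*delta_range))

# e.g. 200 events in a 2 m circle centred 5 m in front of the listener
rng = random.Random(1)
cloud = [event_in_fixed_locus("sample.wav", (0.0, 5.0), 2.0, (0.5, 2.0), (0.05, 0.2), rng)
         for _ in range(200)]
```

Each event stays fixed where it was drawn; only the statistics of the cloud (its centre, width and depth, and its rate and density ranges) are specified.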

Locus Transformation

Once such a locus has been defined, it may be modified over time by transforming its position and extent within the space. In Audio Spray Gun, this is achieved by modifying seven parameters: three of these (radial distance, azimuth and altitude) define the focal point of the locus, and three (distance, azimuth and altitude ranges) set its extent about that point. The seventh parameter sets the available range of inter-onset intervals.

Possible transformations include:

- Modifying the azimuth and distance ranges, causing the spatial locus to expand or contract;

- Changing the spatial coordinates of the focal point so that the locus of events moves through the sound space;

- Altering the altitude of the focus so that the frequency range of possible events moves up or down;

- Changing the extent of the locus in the resampling dimension so that the spectral range of possible events expands or contracts;

- Modifying delta time to change event density from discernible individual events to thick textures.

Implementation

In Audio Spray Gun, the trajectory of a sound-group is defined using seven identical function generators, one for each of the seven parameters described above. Two modes are available, so that these functions depend either on the time elapsed (as a fraction of a pre-defined total duration) or on the number of events played so far (as a fraction of a pre-defined total number of events).

The general format of these functions is:

value(x) = add + f(mult × env(x))

where add and mult are constants and env(x) is the height of a curve or envelope (0–1) at point x. This point is defined as either:

x = (time now)/(total duration)

or:

x = (index of current event)/(total number of events)

according to mode.
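The position x and the envelope height env(x) might be computed as below. This sketch assumes, hypothetically, that each envelope is stored as a list of equally spaced slider values between 0 and 1 and is interpolated linearly between them; `position` and `env` are illustrative names only.

```python
def position(mode, *, elapsed=0.0, total_duration=1.0, index=0, total_events=1):
    """Return x in [0, 1]: fraction of duration elapsed, or of events played."""
    if mode == "time":
        x = elapsed / total_duration
    elif mode == "events":
        x = index / total_events
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return min(max(x, 0.0), 1.0)

def env(x, breakpoints):
    """Height (0-1) of the curve at x, interpolated between equally spaced values."""
    if len(breakpoints) == 1:
        return breakpoints[0]
    pos = x * (len(breakpoints) - 1)
    i = min(int(pos), len(breakpoints) - 2)  # last segment also covers x == 1
    frac = pos - i
    return breakpoints[i] + frac * (breakpoints[i + 1] - breakpoints[i])

print(position("time", elapsed=30.0, total_duration=120.0))  # 0.25
print(env(0.75, [0.0, 1.0, 0.0]))                            # 0.5
```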

The function f can be selected from a number of options, for example:

- linear: add + (mult × env(x))

- rand: add + random(mult × env(x))

- rand2: add ± random(mult × env(x))

Typically, the trajectory of the locus will be described by linear functions and its extent will be controlled by random functions.
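The three options for f can be expressed as a small factory that returns value(x) for one parameter. This is a sketch, assuming that random(y) draws uniformly between 0 and y and that ±random(y) draws uniformly between -y and y, by analogy with SuperCollider's `rand` and `rand2` on floats; `make_generator` is a hypothetical name, and `env` can be any function returning 0-1.

```python
import random

def make_generator(kind, add, mult, env, rng=random):
    """Return value(x) = add + f(mult * env(x)) for f in {linear, rand, rand2}."""
    def value(x):
        y = mult * env(x)
        if kind == "linear":
            return add + y
        if kind == "rand":   # random(y): uniform between 0 and y
            return add + rng.uniform(0.0, y)
        if kind == "rand2":  # ±random(y): uniform between -y and y
            return add + rng.uniform(-y, y)
        raise ValueError(f"unknown function type: {kind!r}")
    return value

rng = random.Random(7)
# trajectory: focal azimuth moves linearly from 0 to 180 degrees
focus_azimuth = make_generator("linear", 0.0, 180.0, lambda x: x)
# extent: scatter about the focus, shrinking to nothing by the end of the group
azimuth_spread = make_generator("rand2", 0.0, 45.0, lambda x: 1.0 - x, rng)
print(focus_azimuth(0.5))  # 90.0
```

Summing `focus_azimuth(x)` and `azimuth_spread(x)` gives an event azimuth that follows a moving focus within a contracting window. Whether Audio Spray Gun combines focus and extent in exactly this way is an assumption.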

Each time an event is generated, the programme calculates the position and extent of the locus by evaluating these seven functions. It then selects a random point from inside the locus, which it converts to an event location with respect to the listener. This process repeats in real time until the end-point of the functions is reached. Events are rendered to audio as they are created and stored as a data array to be replayed as necessary.
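The generation loop described above might be sketched like this. It is an offline approximation: it neither runs in real time nor renders audio, it treats each extent parameter as a symmetric half-width about the focal point, and the keys in `PARAMS` are hypothetical labels for the seven functions. Each generator is any callable taking x in [0, 1].

```python
import random
from typing import Callable, Dict, List

# Hypothetical labels for the seven function generators.
PARAMS = ("dist", "azim", "rate", "dist_range", "azim_range", "rate_range", "delta")
Gen = Callable[[float], float]

def generate_group(gens: Dict[str, Gen], mode: str, total: float,
                   rng: random.Random = None) -> List[dict]:
    """Build a sound-group as a data array of events (no audio is rendered)."""
    if rng is None:
        rng = random.Random()
    events: List[dict] = []
    t, i = 0.0, 0
    while True:
        # x is the fraction of the group completed, by time or by event count
        x = t / total if mode == "time" else i / total
        if x >= 1.0:
            break
        p = {name: gens[name](x) for name in PARAMS}
        # choose a random point inside the locus, centred on its focal point
        dist = max(0.0, p["dist"] + rng.uniform(-p["dist_range"], p["dist_range"]))
        azim = (p["azim"] + rng.uniform(-p["azim_range"], p["azim_range"])) % 360.0
        rate = max(0.01, p["rate"] + rng.uniform(-p["rate_range"], p["rate_range"]))
        events.append({"onset": t, "distance": dist, "azimuth": azim, "rate": rate})
        # advance by the inter-onset interval; the floor keeps time mode finite
        t += max(p["delta"], 0.001)
        i += 1
    return events

demo_rng = random.Random(42)
gens = {
    "dist": lambda x: 5.0,                     # fixed focal distance
    "azim": lambda x: 180.0 * x,               # focus sweeps from front to back
    "rate": lambda x: 1.0,
    "dist_range": lambda x: 1.0,
    "azim_range": lambda x: 30.0 * (1.0 - x),  # locus contracts over time
    "rate_range": lambda x: 0.25,
    "delta": lambda x: demo_rng.uniform(0.02, 0.08),
}
group = generate_group(gens, "events", 300, demo_rng)
```

Because the random draws differ on each run while the seven functions stay fixed, repeated runs share the same overall shape but differ event by event.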

Interface

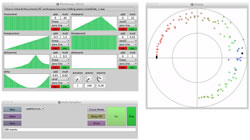

The programme interface consists of three windows (Fig. 3). The parameter window contains all the information required to define both the trajectory and transformation of the group. At the top of the window is an area into which the user may drop the sound file from which the programme will create events. Below this are the seven graphical function generators that define the parameters of the locus that these events will occupy and the way in which they will change over the lifetime of the sound-group. The function generator design is based around a multi-slider that can be edited using the mouse or by selecting one of several pre-defined transformations from a menu. Each function can also be inverted or reversed with a single mouse click. Two knobs define the length of the group, either as a number of events or as a duration in seconds, and a further knob sets the maximum gain for individual events.

The launch window holds controls for programme execution and data storage. From this window, the user can create and replay sound-groups through two, four or eight output channels, load and save parameter sets as text files, and select the time or event-count mode of operation. The launch window also features a delay switch. When this is activated, the onset of each event is delayed by the time sound would take to reach the listener from the event's position at the speed of sound in air (approximately 340 m/s), so that the delay is proportional to the event's distance. This causes events within moving sound-groups to bunch together in time when approaching the listener and spread apart when departing. Because the sound-events within such a group are all static and do not generally share a common periodicity, this does not produce a classical Doppler shift, but it can give a quite naturalistic impression of a moving body and, at high speeds, produce strong “whip crack” effects. Examples of this can be heard in the recording of In Flight (Audio 1) at 3:10, 4:37 and elsewhere.
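The bunching effect follows directly from adding distance / c to each onset. In this sketch, distances are assumed to be in metres and events are plain dictionaries with hypothetical `onset` and `distance` keys.

```python
SPEED_OF_SOUND = 340.0  # m/s, the approximate value quoted above

def apply_distance_delay(events):
    """Shift each event's onset by its propagation time, distance / c."""
    return sorted(
        (dict(e, onset=e["onset"] + e["distance"] / SPEED_OF_SOUND) for e in events),
        key=lambda e: e["onset"],
    )

# A body approaching at 170 m/s (half the speed of sound) emits an event every 0.1 s,
# so each event is 17 m closer than the last.
approach = [{"onset": 0.1 * k, "distance": 100.0 - 17.0 * k} for k in range(5)]
arrivals = apply_distance_delay(approach)
gaps = [b["onset"] - a["onset"] for a, b in zip(arrivals, arrivals[1:])]
```

Each 0.1 s gap shrinks by 17 / 340 = 0.05 s when the body approaches and grows by the same amount when it departs, which is the compression and spreading described above.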

The display window shows an animation of the spatial locations of events as they occur. Events are shown as coloured dots on a circular display with the listener at the centre. The colour of each dot reflects where its resampling rate lies within the available range: low rates appear red and high rates appear blue.
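One way to realise this red-to-blue colouring is to map each event's rate onto the hue wheel. This sketch assumes a linear mapping within the available range and uses Python's standard `colorsys` module; the display in Audio Spray Gun may well use a different scale.

```python
import colorsys

def rate_to_rgb(rate, min_rate, max_rate):
    """Map a resampling rate to RGB: min_rate -> red, max_rate -> blue."""
    if max_rate <= min_rate:
        return (1.0, 0.0, 0.0)
    norm = min(max((rate - min_rate) / (max_rate - min_rate), 0.0), 1.0)
    hue = norm * (2.0 / 3.0)  # 0 = red, 1/3 = green, 2/3 = blue on the HSV wheel
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)
```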

The current version of the programme has no record function of its own, but its output can either be sent to hardware outputs or recorded into a DAW via a software audio bridge such as Soundflower.

Audio Spray Gun is written entirely in SuperCollider 3.6.6.

Applications

This author has used Audio Spray Gun in a number of works over the past year, including In Flight. The method of operation has been to develop sound-groups in Audio Spray Gun and then record a number of variants in eight channels (four stereo tracks) to Ableton Live. The process of composition is then to select the best versions and arrange them to form a piece. Balancing the groups within the piece is largely a matter of adjusting the gain of each group as a whole, without recourse to panning and with only minimal use of “fades”. In Flight (Audio 1) is included here as an illustration of some of the techniques employed.

Conclusions

The principal attraction of Audio Spray Gun in its current form is that it offers the user the opportunity to experiment. Groups of sounds made up of hundreds of individually spatialized samples can be constructed and auditioned in a matter of minutes, producing output that would take days or even weeks to achieve by manipulating individual samples in a DAW.

Although sound-groups created in Audio Spray Gun are made up of stationary objects, the generation of multiple events along a trajectory gives a strong sense of spatial motion, even when successive events are placed some distance apart.

Spreading sounds around a finite spatial locus can suggest the presence of a single body as opposed to a mass of discrete points. When such a body passes across the central listening position, some of its component sounds may be heard in one loudspeaker, while others are heard in the speaker opposite. This can sustain the sense of motion described above even when traversing this acoustically hollow central space.

Users can experiment with density and placement of sounds, creating transitions from near-continuous washes of overlapping sounds to discrete stationary events and vice versa.

Issues

Two issues stand out.

Firstly, because resampling is the only spectral treatment applied to the sound, transposing an event downwards also lengthens it. The software therefore lends itself to the generation of long sounds whose low-frequency components tend to rumble on after the higher-frequency components have died out. In the future, Audio Spray Gun will feature an option to constrain the length of lower-frequency events so as to compose more abrupt features.
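The arithmetic behind this is simple: playing a sample at rate r scales its duration by 1/r, and a transposition of n equal-tempered semitones corresponds to r = 2^(n/12). The helper names below are illustrative and are not part of Audio Spray Gun.

```python
def semitones_to_rate(semitones):
    """Resampling rate for a transposition in equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)

def resampled_duration(original_seconds, rate):
    """Playing a sample back at `rate` scales its duration by 1 / rate."""
    return original_seconds / rate

# a 2-second sample transposed down two octaves lasts four times as long
print(resampled_duration(2.0, semitones_to_rate(-24)))  # 8.0
```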

Secondly, as is common with such broad-brush techniques, there is currently no way to perform surgical edits on near-perfect sound groups. While it is generally quicker to run the process a few more times until one obtains a better version, the capacity to edit out inconvenient events and re-render might be useful.

Future Development

Because Audio Spray Gun operates in an abstract space, the data it produces can be translated into any of a number of real-world parameters. It should therefore be relatively easy to extend the system into other dimensions, for example, physical altitude for 3D spatialization or timbre descriptors for automated sample selection. It would also be desirable to extend the system to add other rendering options (for example VBAP, Ambisonics, WFS).

Audio Spray Gun is still in the early stages of development and many possibilities remain to be explored.

Bibliography

Fonseca, Nuno. “3D particle systems for audio applications.” DAFx-13. Proceedings of the 16th International Conference on Digital Audio Effects (Maynooth, Ireland: National University of Ireland, 2–5 September 2013). Available on the DAFx-13 website http://dafx13.nuim.ie

Kim-Boyle, David. “Sound Spatialization with Particle Systems.” DAFx-05. Proceedings of the 8th International Conference on Digital Audio Effects (Madrid, Spain: 20–22 September 2005). Available online on the JIM 05 website http://jim.afim-asso.org/jim2005/download/19. Kim-Boyle.pdf [Last accessed 7 April 2015]

McCartney, James. “Rethinking the Computer Music Language: SuperCollider.” Computer Music Journal 26/4 (Winter 2002) “Language Inventors on the Future of Music Software,” pp. 61–68.

Roads, Curtis. Microsound. Cambridge MA: MIT Press, 2001.
