Spectral Delay as a Compositional Resource

Introduction

In some of my recent work, I present familiar acoustic instruments in new light by transforming their sound in unexpected ways. One kind of processing I use often is based on the idea of spectral delay, in which each spectral component has a dedicated recirculating delay line. I like the way that this technique stratifies a familiar sound, allowing us to experience its timbre from a new vantage point, much as layers of rock are revealed in a limestone outcropping. Using high delay feedback lets us freeze a sonic moment, extending its timbre by circulating it through the delay and creating, from a short static sound, pulsating textures of vibrant colour. Composer Barry Truax describes time-stretching by granulation as a way to put a sound under a microscope, allowing a listener to explore its timbral evolution in detail (Truax, “Granulation”). My use of spectral delay to freeze a brief portion of a sound is a different way of accomplishing something similar.

In this paper I discuss software I have written to perform spectral delay processing and its use in three of my compositions: Slumber, a surround-sound fixed-media piece; Out of Hand, an interactive piece for trumpet, trombone and computer; and Wind Farm, an improvisatory piece for laptop orchestra.

Dancing Pianos

Before addressing the specifics of the software, let’s hear an example of the kind of sound spectral delay can produce. For Slumber, I asked pianist Mary Rose Jordan to record excerpts from “Kind im Einschlummern,” from Schumann’s Kinderszenen. I chose several short chords from the recording and subjected them to spectral delay processing, using a version of my software hosted in the RTcmix environment. (1) Each spectral slice has a unique, randomly determined delay time. With feedback set to maximum, the partials of the piano chord dance in multiple, out-of-sync repetitive strands. Here is one of the piano chords, an A-minor triad.

I set the spectral delay so that it would generate four channels of audio. Delay times are different between the channels to decorrelate them for a more spacious sound. Here is a stereo mix of the resulting texture.

This sound reminds me of the time I entered a store that displayed a variety of antique clocks, all of them ticking and clanging in overlapping rhythms. One of my goals in Slumber was to animate isolated moments from the Schumann while gradually reconstructing a phrase from the original piece. (2)

Spectral Delay Basics

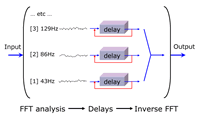

Spectral delay is one of a family of processing techniques that harness the power of the Fast Fourier Transform (FFT) to decompose a sound into its spectral components. A full technical explanation of the process is beyond the scope of this paper. But briefly, the software sends overlapping, enveloped windows of the input sound to the FFT. Then, for every windowed segment, each frequency band is represented by a single complex number. This number enters a delay line, equipped with a feedback path (represented by red lines in Fig. 1). The outputs of all the delay lines feed an inverse FFT, which converts the sound from the frequency domain back into the time domain, where we can hear the result of the transformation.
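The delay stage in the middle of this chain can be sketched in a few lines of Python. This is a minimal illustration, not the code of SPECTACLE or jg.spectdelay~: the class name `SpectralDelayBank`, its parameters and the choice to count delays in analysis frames are all assumptions made for the example, and the FFT and inverse FFT stages are left out. Each call takes one analysis frame (one complex number per band) and returns the delayed frame, with the feedback path mixing each band's delayed value back into its own delay line.

```python
class SpectralDelayBank:
    """One recirculating delay line per frequency band (hypothetical sketch)."""

    def __init__(self, delay_frames, feedback):
        # delay_frames[k] is the delay of band k, counted in analysis frames (>= 1).
        self.delay_frames = list(delay_frames)
        self.feedback = feedback
        # One circular buffer of complex values per band, initially silent.
        self.buffers = [[0j] * d for d in self.delay_frames]
        self.positions = [0] * len(self.delay_frames)

    def process_frame(self, frame):
        """Take one complex value per band; return the delayed values."""
        output = []
        for k, value in enumerate(frame):
            buf = self.buffers[k]
            pos = self.positions[k]
            delayed = buf[pos]  # the value written delay_frames[k] frames ago
            buf[pos] = value + self.feedback * delayed  # feedback path
            self.positions[k] = (pos + 1) % len(buf)
            output.append(delayed)
        return output


# Two bands with different delays: an impulse followed by silence.
bank = SpectralDelayBank(delay_frames=[2, 3], feedback=0.5)
frames = [bank.process_frame([1 + 0j, 1 + 0j])]
frames += [bank.process_frame([0j, 0j]) for _ in range(6)]
```

In this toy run the first band's impulse returns every two frames and the second band's every three, each repetition at half the level of the one before, so the two bands drift apart in time in the way the text describes.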

As is typical of FFT processing, the length of the sound segments sent to the FFT must be a power of two (typically 1024). This length determines the number of frequency bands in the analysis (half the FFT length), as well as the timing resolution. The delay lines in the processor do not operate at anything close to the audio sampling rate, which is a good thing, considering how many delay lines are required. Instead, the delays run at a rate determined by the FFT length and the degree of overlap between windows. This matters to the user in one important way: it constrains the choice of delay times, which must be whole-number multiples of the FFT length divided by the overlap.
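The constraint on delay times is easy to work out numerically. The sketch below assumes a sampling rate of 44100 Hz, which the text does not specify, and the function names are hypothetical. Rounding to the nearest frame and enforcing a minimum of one frame are also assumptions; the actual software may handle out-of-grid values differently.

```python
def frame_period_seconds(fft_length, overlap, sample_rate):
    """Time between successive analysis frames (the hop), in seconds."""
    hop_samples = fft_length / overlap
    return hop_samples / sample_rate


def quantize_delay(delay_seconds, fft_length, overlap, sample_rate):
    """Round a requested delay to the nearest whole number of frames.

    Returns (number of frames, delay actually obtained in seconds).
    """
    period = frame_period_seconds(fft_length, overlap, sample_rate)
    frames = max(1, round(delay_seconds / period))  # assumed minimum of one frame
    return frames, frames * period


# FFT length 1024 with 4x overlap gives a hop of 256 samples.
period = frame_period_seconds(1024, 4, 44100)
frames, actual = quantize_delay(0.5, 1024, 4, 44100)
```

With these assumed settings, delay times fall on a grid of 256 samples (about 5.8 milliseconds), and a requested half-second delay becomes 86 frames, or roughly 0.499 seconds.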

Swirling Pianos

You might think that starting with a clean recording would be key to achieving good results with the spectral delay. But some added noise, whatever the source, can excite the high-frequency delay lines. Our closely-placed microphones for the Schumann recording picked up bench creaking, pedalling and other unwanted sound. First, the original clip.

This turns into a swirl of hissing sounds layered above the pitched reflections of the piano chord.

A slightly longer input segment, longer attack and release times for the input envelope and greater overlap between FFT windows yield a very different result.

This texture — more a continuous series of waves than a constant interplay of independent repetitive layers — eases the transition into the more overt piano notes that carry the piece to its end.

A Max/MSP Object

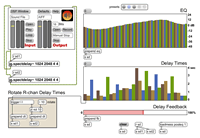

To extend my experiments with spectral delay into real-time contexts, I took my RTcmix spectral delay instrument, SPECTACLE (written in 2002), and converted it into a Max/MSP external, jg.spectdelay~, with full variable control over nearly all parameters. Fig. 2 shows a patch that demonstrates the use of the external.

The arguments to the jg.spectdelay~ object are the FFT length (in samples), the window length, the amount of overlap (4x in this case) and the maximum delay time in seconds. The object processes only a mono signal, so two objects are used to achieve stereo output. You control the object by sending messages to it. For example, to set the feedback amount, send a message like “fb 0.6” (the period is not part of the message). To set delay times for many bands at once, send a message like “dt 0.5 1.2 0.95 3.14 2.8 1.9”.

To generate a compelling stereo image, delay times for the two channels are decorrelated in a simple way: the set of objects in the lower left corner of the patch rotates the left-channel delay times before applying them to the right channel. Dragging a delay time slider triggers a recalculation of the entire set of delay times for both channels.
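The rotation itself is a one-line list operation. The sketch below is written in Python rather than as Max objects, and the rotation amount and direction are assumptions, since the text does not say how far the patch rotates the list.

```python
def rotate(values, steps):
    """Return a copy of values rotated left by the given number of positions."""
    if not values:
        return []
    steps %= len(values)
    return values[steps:] + values[:steps]


# Left-channel delay times (seconds), using the values from the "dt" example.
left_delays = [0.5, 1.2, 0.95, 3.14, 2.8, 1.9]
# The right channel reuses the same set, shifted by two bands (assumed amount).
right_delays = rotate(left_delays, 2)
```

Both channels then share the same collection of delay times, but any given band echoes at a different moment on each side.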

It is a simple matter to include a graphic EQ in the design of any FFT-based processor, because a band’s EQ value merely scales the complex number for that band. (This is essentially the same as in the classic Max/MSP Forbidden Planet patch by Zack Settel and Cort Lippe.) The jg.spectdelay~ object makes it easy to freeze a short piece of audio (with high feedback) while modifying the EQ response curve.
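To see why the EQ comes almost for free, consider what scaling a complex number does. In the hypothetical helper below (the name `apply_eq` is not from the software), multiplying a band's value by a real gain changes its magnitude and leaves its phase alone.

```python
import cmath


def apply_eq(frame, gains):
    """Scale each band's complex value by that band's real-valued gain."""
    return [gain * value for gain, value in zip(gains, frame)]


# One band with magnitude 2.0 and phase 0.75 radians, attenuated by half.
band = cmath.rect(2.0, 0.75)
(scaled,) = apply_eq([band], [0.5])
magnitude, phase = cmath.polar(scaled)  # magnitude is halved, phase is unchanged
```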

Here is the result of sending a sustained male vocal note through this patch.

In this patch, there are 512 frequency bands. Controlling all of these with individual sliders would be unwieldy. Moreover, it would give a misleading impression of the audible effect of changes, because the top 256 bands affect only the highest octave (a limitation inherent in the FFT), but they would take up half of the array of sliders. My object allows you to group frequency bands together for control by a single slider. By default, this is done automatically in such a way that greater frequency resolution is reserved for the lower bands than for the higher ones. Users wanting more control can specify arbitrary groupings of successive bands by using the binmap message.
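One way to picture such a grouping is with geometrically spaced group boundaries, so that each slider covers a wider span of bands than the one below it. The function below is only an illustration of that idea. It is not the default mapping used by jg.spectdelay~, whose details are not given here, and the name `make_bin_groups` is hypothetical.

```python
def make_bin_groups(num_bins, num_sliders):
    """Map each band index to a slider index, with narrower groups at low bands.

    Intended for num_sliders much smaller than num_bins.
    """
    edges = [0]
    for i in range(1, num_sliders + 1):
        edge = round(num_bins ** (i / num_sliders))  # geometric spacing
        edges.append(max(edge, edges[-1] + 1))       # at least one band per slider
    edges[-1] = num_bins
    bin_to_slider = []
    for slider in range(num_sliders):
        width = edges[slider + 1] - edges[slider]
        bin_to_slider.extend([slider] * width)
    return bin_to_slider


# 512 bands controlled by 8 sliders.
groups = make_bin_groups(512, 8)
widths = [groups.count(slider) for slider in range(8)]
```

With 512 bands and eight sliders, this spacing gives groups of 2, 3, 5, 13, 26, 59, 127 and 277 bands, so the top slider alone covers slightly more than the highest octave.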

My work on spectral delay does not stand alone. In the commercial world, there is the Spektral Delay plug-in (unfortunately now defunct) by Native Instruments and the Spectron plug-in by iZotope, both offering effective and innovative interfaces for real-time control. Add to this the open source FreqTweak, Jesse Chappell’s collection of FFT-based processors, and David Kim-Boyle’s native Max/MSP implementation of spectral delay (Kim-Boyle 2005).

Spectral Delay in Live Electronic Music

I used spectral delay as a real-time processor in Out of Hand, for trumpet, trombone and computer. In this piece, I employ pitch detection of the brass instruments to cue changes in sound processing and synthesis. The trumpet opens the piece by playing an extended passage of intermittent repeated sixteenth notes. Spectral delay, applied to the trumpet, creates a stream of eighth-note reflections of the trumpet’s staccato notes. Delay times are quantized to the eighth-note grid, and spectral delay output is offset in time to compensate for input/output latency. (The latency of the spectral delay processor is equal to the window length, given as the second argument to jg.spectdelay~.) The trombone plays long tones that trigger synthetic responses by the computer, and all this sound goes through the spectral delay as well. The result is a pulsating eighth-note accompaniment that carries a distinct trace of the live instrumental sound. Here is an excerpt near the opening of the piece.

Audio excerpt of John Gibson’s Out of Hand, for trumpet, trombone and computer. Performed by Michael Tunnell (trumpet) and Brett Shuster (trombone).
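The arithmetic behind an eighth-note grid with latency compensation might look like the following. This is one possible reading, not necessarily how the patch for Out of Hand does it: here the latency (one window length) is simply subtracted from the requested delay so that the echo lands on the grid. The tempo, window length and sampling rate in the example are hypothetical values, as are the function names.

```python
def eighth_note_seconds(tempo_bpm):
    """Duration of one eighth note, taking the quarter note as the beat."""
    return 60.0 / tempo_bpm / 2.0


def compensated_delay(num_eighths, tempo_bpm, window_length, sample_rate):
    """Delay setting that lands an echo num_eighths eighth notes after the input."""
    latency = window_length / sample_rate  # processor latency in seconds
    delay = num_eighths * eighth_note_seconds(tempo_bpm) - latency
    if delay <= 0:
        raise ValueError("grid position is shorter than the processing latency")
    return delay


# Three eighth notes at an assumed 120 beats per minute, with an assumed
# window length of 2048 samples at 44100 Hz.
delay = compensated_delay(3, 120, 2048, 44100)
```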

The spectral delay times are not fixed, as in the Slumber examples above, but instead randomly change at a rate that synchronizes with the eighth-note pulse. The patch has stereo output, so there are two jg.spectdelay~ objects. Instead of having different delay times for the two objects, as described above for the demonstration patch, I chose to decorrelate the channels by notching out alternating frequency bands using the built-in graphic EQ. In other words, the left channel carries the even-numbered bands, while the right channel carries the odd-numbered bands.
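Expressed as EQ gain lists, the alternating-band scheme could be sketched as follows. Band numbering from zero and the use of gains of exactly 0 and 1 are assumptions, and `alternating_gains` is a hypothetical name.

```python
def alternating_gains(num_bands):
    """Return (left, right) gain lists that split the bands between two channels."""
    # Even-numbered bands pass on the left, odd-numbered bands on the right.
    left = [1.0 if band % 2 == 0 else 0.0 for band in range(num_bands)]
    right = [1.0 - gain for gain in left]
    return left, right


left_gains, right_gains = alternating_gains(512)
```

Every band is heard in exactly one channel, so the two outputs are spectrally interleaved while still sharing the same delay times.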

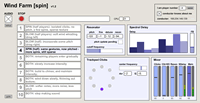

In Wind Farm, a laptop orchestra piece, I have two sound-making patches, one of which produces a series of clicks when the player rubs across the track pad, using the same “flick” scroll method familiar to iPhone users. This creates a decelerating spinning effect, once the player’s finger leaves the surface of the track pad. The clicks enter a resonator and then a spectral delay.

A conductor patch sends resonator pitches and spectral delay parameters to the players over a wireless network, while each player focuses on creating gestures that complement what the other players are doing. Spectral delay frequency bands are grouped together in a way that works well for this particular sound source. This requires only eight different delay time sliders, which map (using the binmap message) to the underlying 512 individual delay lines.

Software Availability

My spectral delay software is part of the standard RTcmix distribution, which runs on Linux, Mac OS X and other Unix-based operating systems. RTcmix is free software. The Max/MSP external, documentation and some example patches are available from the “software” area of my website. There you will find a Universal Binary version of the object for Mac OS X, as well as GPL-licensed source code.

Notes

1. RTcmix is an open-source signal-processing and synthesis language derived from the original CMIX language, developed at Princeton University by Paul Lansky. http://rtcmix.org

2. A stereo mix of Slumber can be heard on the composer’s website at http://john-gibson.com/pieces/slumber.htm

Bibliography

Truax, Barry. “Granulation of Sampled Sound.” On the author’s website. Last accessed 30 September 2009. http://www.sfu.ca/~truax/gsample.html

Kim-Boyle, David. “Spectral Delays with Frequency Domain Processing.” Proceedings of the Spark 2005 Festival, pp. 50–52. University of Minnesota, Minneapolis.
