An Overview of Score and Performance in Electroacoustic Music

Notation has gradually developed into ever more sophisticated and complicated forms. However, the traditional system has inherent deficiencies: many aspects of music cannot be notated precisely, and some cannot be notated at all; dynamics and articulation marks can only be approximate, and fluctuations of tempo are difficult if not impossible to reproduce.

Notation quantises the musical material in accordance with the human ability to communicate through the medium of writing, reducing it to simple elements; consequently, traditional notation is able to encompass only a limited degree of the sounds’ structural complexity. Moreover, it binds the composer to certain patterns of thinking, which is one of the reasons why the twentieth century saw the emergence of new notational practices. Especially in electroacoustic music, where the attention of the composer shifts to different aspects of musical experience, the traditional means of representing sounds through notation becomes insufficient. With the rise of electroacoustic music, some elements turned out to be as important as, or even more important than, others; the sound itself and its components became a priority for the composer. Pierre Schaeffer’s Traité des objets musicaux (1966) was the first significant work to elaborate on the spectral and morphological characteristics of sound. However, important sonic aspects still remain indescribable; for example, timbral variation of instrumental sounds can only be notated as action rather than sound.

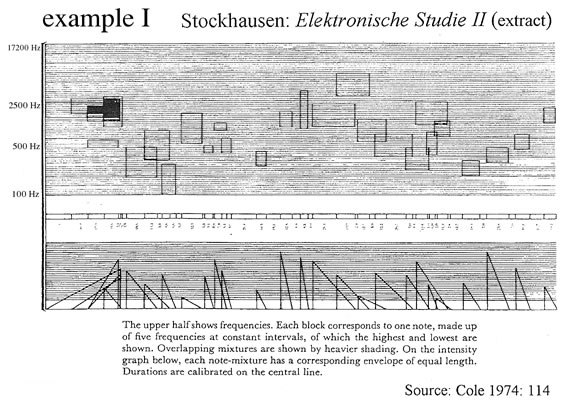

Scores of early electronic music combined a description of the way the sounds were produced with a graphical representation of how they were expected to sound; they usually featured a frequency chart and a chart for dynamics, together with notes often containing wiring diagrams (example 1). Since electronic music mainly deals with unfamiliar sounds, descriptive scores in the form of sketch-maps are advantageous. Complicated techniques of sound manipulation make it difficult to follow details on scores, so graphical representations of musical aspects such as textural densities are helpful. For example, the score of Tristram Cary’s 345 A Study in Limited Resources (1967) is a sketch-map presenting textural densities, although details are still available for those who wish to examine individual frequencies and modulations (example 2). Similarly, the score of Stockhausen’s Kontakte (1960) contains a simplified graphic representation of the tape part to enable the two instrumentalists to synchronise their playing with the recorded sounds (example 3). Elsewhere in the score, Stockhausen gives a detailed account of all the steps required for the reproduction of the electronic part of his composition. Different scores accommodate different instructions, depending on the needs of the piece. For example, Davidovsky’s score of his Synchronisms No. 3 (1965) for cello and tape provides an extra staff for the recorded sounds, which contains commands for starting and stopping the tape machine (example 4).

In cases of complex processes, the production of detailed scores becomes very difficult. In acousmatic music, where no live performers are involved and a realisation of the piece already exists, the purpose of the score is questionable. At first glance, the score seems to be an item preserved for posterity rather than a means of fulfilling its traditional main purpose, which is to generate the act of performance. The fact that the recording is a permanent performance, repeatable ad infinitum, diminishes the usefulness of the score. An example of a complicated score of tape music, which seems to have been made for archival purposes and serves historical interest, is Andrzej Dobrowolski’s Music for Magnetic Tape no.1 (1963). It includes a glossary of the different sound sources, graphs revealing how sound complexes were built up, diagrams showing the modifications of the original attacks and decays of the natural sound sources, and frequency charts; a recording accompanies his score (example 5; Cole 1974, 113).

There is an inherent problem with scores of tape music that are designed for the reproduction of the original pieces. Every studio followed, and still follows, its own methods, with its own equipment calibrated in a certain way. This makes it unlikely that later musicians will be able to follow the instructions of a score in order to replicate the composition, unless they work under exactly the same conditions as the composer who wrote that particular piece. In most of these cases, notated works are of historical interest only (ibid.).

However, there are several reasons that justify the existence of scores of acousmatic pieces. A score may have been produced to serve a pedagogical purpose, or its production may have been necessary due to copyright laws that exist in some countries; or, according to Ciamaga, it may have been produced simply to satisfy the artistic inclination of the composer (Gustav Ciamaga in Appleton 1975, 116). Moreover, scores can provide important information to analysts of acousmatic music. Perhaps most importantly for the composer, scores with approximate trajectories mapped over a timeline can assist the live diffusion of acousmatic works, thus reducing the amount of improvisation at the faders.

Traditional notation was developed to assist memory (e.g. in composition, performance, pedagogy), to facilitate the dissemination of musical pieces, and to preserve the work of the composer. The evolution of recording has rendered the score almost unnecessary, since these needs can be met by the recording medium. The entire tradition of Western art music is constructed upon notation and consequently the composer is bound to it. Because of this convention, the rise of electroacoustic music, which uses the recording studio, threatens the roots of the Western art music tradition. Regarding the alienation between tradition and electroacoustic music, Chris Cutler maintains that innovations in recording technology were made mostly by people who did not emerge from the art music tradition, because “… the old art music paradigms and the new technology are simply not able to fit together”; Pierre Schaeffer’s proposal came out of his work as an engineer at the French radio and not out of the music tradition (Cutler 2000). Regarding the role of notation in electroacoustic music, Trevor Wishart noted in 1985 that

… the relative failure of electro-acoustic music to achieve academic respectability… can to some extent be put down to the fact that no adequate notation exists. (Wishart 1985, 22)

In the Western art music tradition, the score generally leads to performance. In electroacoustic music, the process is often reversed, as it is more likely that the score is produced after the recorded part has been finished. [1. There are a few exceptions, like Stockhausen’s early electronic works, which were notated in minute detail before they were recorded.] However, the composer of electronic music often enables a computer to execute a musical task by feeding it with appropriate information, which can be regarded as a form of notation. An early computer program based on traditional musical concepts such as “orchestra” and “score” was MUSIC 4 (a precursor of Csound), which was developed by Max Mathews and completed in 1962. The “orchestra” consisted of oscillators, modulators, filters and reverberation units, and the “score” contained the performance data such as waveshapes and control settings. The score originally consisted of complex strings of characters; in 1972, a sub-program called SCORE, which allowed the use of a more accessible syntax, was introduced to accompany the next version, MUSIC 5. In the numerous computer programs and systems available today, there are various methods of entering information that can be seen as fulfilling the role of notation, such as using optical recognition devices, inputting instructions written with ordinary characters, or drawing graphs on a screen. However, this information looks so different from the set of characters and symbols that constitute a traditional score that any association with traditional notation becomes even more distant.
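
The separation of an “orchestra” from a “score” can be illustrated with a minimal sketch in Python. It is not MUSIC 4 or Csound code and does not reproduce their syntax; the names `sine`, `ORCHESTRA`, `SCORE` and `render`, the sample rate and the layout of the score events are all assumptions made for illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second; kept low so the example stays small


def sine(freq, amp, n_samples):
    """A hypothetical 'instrument': a single sine oscillator."""
    return [amp * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
            for i in range(n_samples)]


# The "orchestra": named sound-generating units (only one in this sketch).
ORCHESTRA = {"sine": sine}

# The "score": performance data only, one tuple per event, laid out as
# (instrument, start in seconds, duration in seconds, frequency in Hz, amplitude).
SCORE = [
    ("sine", 0.0, 0.5, 440.0, 0.5),
    ("sine", 0.25, 0.5, 660.0, 0.3),
]


def render(orchestra, score):
    """Mix every score event into a single list of samples."""
    total = max(start + dur for _, start, dur, _, _ in score)
    out = [0.0] * round(total * SAMPLE_RATE)
    for name, start, dur, freq, amp in score:
        offset = round(start * SAMPLE_RATE)
        note = orchestra[name](freq, amp, round(dur * SAMPLE_RATE))
        for i, sample in enumerate(note):
            out[offset + i] += sample
    return out


samples = render(ORCHESTRA, SCORE)
```

In this sketch the score never describes how a sound is made, only which unit plays, when, and with what settings; changing the orchestra changes the sound of every event without touching the score.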

In the early 1980s, there was considerable delay between putting information into a computing system and hearing the results at the output, thus creating an “… extremely remote working environment for the composer” (Manning 1980–81, 128). Today, programs allow the resulting sound to be checked immediately; this makes it possible to revise and modify the work on the spot during the process of composing, which was rarely possible with traditional methods. Thus the composer is able to bridge the gap between composition and performance, creation and execution, and construct exactly what will be heard in the concert. Notation is eliminated, as it is replaced by direct reproduction of performances, which dissolves the distinction between composition and performance.

In concerts of acousmatic pieces, no performers are involved apart from the sound projectionist. This type of music is designed for loudspeaker listening, where the audience does not have visual access to the gestures of sound-making. Performance is redefined according to the Pythagorean concept of listening behind a veil, and the act of listening is emphasised. As acousmatic compositions are recorded, the composer has the flexibility of combining layers of sounds, which may have been created by different methods. Moreover, the composer eliminates external factors and also becomes the performer of the work. Michael McNabb holds the composer responsible for any unsatisfactory results in acousmatic music:

[C]omposers of electronic music must realize that they are the performers, and are therefore responsible for adding all the nuance of performance to the music if there is not going to be someone at the concert to do it for them. The composition process must extend down to subtler levels. Otherwise the end result is a kind of “audible score” instead of music. (McNabb in Emmerson 1986, 144)

In concerts of acousmatic music with live sound diffusion, the number of speakers and their levels are adjusted so as to dramatise the acoustic image. The aim of this process is to enhance the spatial dimensions of musical pieces, to expand the listening space and to place the audience “inside” the music, in contrast to traditional concerts, where the audience sits facing the performance. Sound diffusion has elevated the presentation of acousmatic music, which was initially criticised for its lack of visual interest. Composers have considered the diffusion of sound in space since the early days of electroacoustic music. Pierre Schaeffer experimented in 1951 at the Théâtre de l’Empire in Paris, using four channels to create different perspectives and trajectories of sounds. A few years later, in 1958, a highly evolved sound diffusion system used c. 400 loudspeakers for the performance of Xenakis’ Concret PH and Varèse’s Poème Électronique in the Philips Pavilion at the Brussels World’s Fair.

Works that have been composed with sound diffusion in mind cannot be presented successfully in music halls built for entirely different purposes. Stockhausen saw the difficulties that emerged with sound diffusion when, in 1956, he first presented his piece Gesang der Jünglinge at the Cologne radio station; this is a work on multi-track tape composed for five groups of loudspeakers, which were designed to be placed around and above the audience. He called for acousticians and architects to provide “… a badly-needed solution for current problems” (Stockhausen 1961, 69). His idea was to have a spherical chamber fitted all around with loudspeakers, where the audience would sit on a platform (‘transparent to both light and sound’) hung in the middle of the concert space. A spherical auditorium especially designed for Stockhausen was finally built in 1970 in the German Pavilion at Expo ’70 in Osaka. Eventually, the first concert hall with a permanent installation of loudspeakers for sound diffusion was made for the “Gmebaphone” instrumentarium of the Groupe de Musique Expérimentale de Bourges in France, in 1973; next came the “Acousmonium” of the GRM in Paris, in 1974 (Emmerson 2001, 63).

In addition to performance situations where live diffusion of acousmatic pieces takes place, there are situations where electronic resources are combined with or used by musicians on stage. There are many different approaches to the use of electronics in live situations, which change according to the performance circumstances; these are determined by the availability of studio and financial resources, by performers and by performance opportunities. Composers utilise accessible resources according to their needs, usually following one of two prevalent methods of combining live instrumentalists with electronic sources. In the first method, pre-recorded material is played alongside sounds produced live by the players. In the second, the sounds produced live are modified electronically; several of their characteristics can be changed, including their spectra (by using filters, ring modulators etc.), envelope shapes and spatial distribution. There are also situations where the main “instruments” on stage are computers, for example in laptop improvisations. According to Joel Chadabe (1999, 29), there are three basic models for computer-assisted performance: (a) the performer plays some part of someone else’s composition; (b) the performer is also the composer, who controls an algorithm in real time; and (c) the performer improvises by controlling an algorithm in real time while reacting to the information generated by an electronic device, which is often based on analysis of the improvisation. Interactive composition emerged in the mid-1980s and, as a consequence, the computer was elevated to the role of the performer.

The invention of voltage-controlled synthesizers (Moog and Buchla) in the mid-1960s encouraged the use of electronics in live situations, although most of the devices were monophonic and therefore better suited to the studio. The introduction of the keyboard synthesizer facilitated the use of electronics in performances. Analog live electronics are found in many forms, and various methods of generating sound are employed in performances. An example of a non-standard technique is Cleve Scott’s Residue III for solo trombone and electronics, where the first part is recorded on analog tape and is played back later as the second part, routed through a ring modulator.
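
The effect of routing a recorded signal through a ring modulator can be sketched in idealised form: each sample of the input is multiplied by a carrier, so that a sine input yields only the sum and difference of the two frequencies. The Python fragment below is an illustration under stated assumptions, not a model of the analog circuit or of the piece mentioned above; the sine tone standing in for the recorded part, the frequencies and the function names are all hypothetical.

```python
import math

SAMPLE_RATE = 8000  # samples per second (arbitrary choice for this sketch)


def tone(freq_hz, n_samples):
    """A sine tone, standing in for a recorded instrumental part."""
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n_samples)]


def ring_modulate(signal, carrier_hz):
    """Idealised ring modulation: multiply each sample by a sine carrier."""
    return [s * math.sin(2 * math.pi * carrier_hz * i / SAMPLE_RATE)
            for i, s in enumerate(signal)]


recorded = tone(300.0, 800)
processed = ring_modulate(recorded, 100.0)

# sin(a) * sin(b) = 0.5 * (cos(a - b) - cos(a + b)), so a 300 Hz input and a
# 100 Hz carrier leave only components at 200 Hz and 400 Hz.
expected = [0.5 * (math.cos(2 * math.pi * 200.0 * i / SAMPLE_RATE)
                   - math.cos(2 * math.pi * 400.0 * i / SAMPLE_RATE))
            for i in range(800)]
max_error = max(abs(p - e) for p, e in zip(processed, expected))
```

Because neither original frequency survives in the output, a played-back part treated in this way can sound markedly different from the live part that produced it.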

Often, the instrumentalist/performer controls many parameters of the live processing, sometimes including spatial diffusion. On some occasions, his or her position may be more advantageous than that of the sound projectionist, especially when the situation involves improvisational material. However, instrumentalists are not normally assigned complex tasks; instead, they usually manipulate live electronics through foot switches, as for example in Bevelander’s piece Synthecisms No.3 (1988) for solo saxophone, digital delay and tape (Bevelander 1991, 151–57).

In the UK, the use of electronics in live situations was initially more important than their use in the studio (Emmerson 1991, 179). Three significant ensembles provide examples of different approaches: Gentle Fire, Intermodulation and the West Square Electronic Music Ensemble (formed in 1968, 1969 and 1973 respectively). The experimental ensemble Gentle Fire specialised in group composition and used scores with indeterminate notation; most famously, they recorded Stockhausen’s Sternklang in collaboration with Intermodulation. From the mid-1970s, live electroacoustic performance in Britain moved towards a more systematic approach and away from indeterminacy. The West Square Electronic Music Ensemble had a core of established musicians who came together in different combinations to complete particular projects. Famously, they realised Stockhausen’s Solo, which until then had been thought to be unperformable. The piece is for a melody instrument and a multiple tape feedback system. The ensemble had a variable multi-delay table built, on which they were able to produce six stereo delays from a single recorded source (ibid., 180–83). Intermodulation played acoustic instruments (viola, reeds and percussion) and VCS3 synthesizers, which were used mainly for signal processing of instrumental sounds. The ensemble did not use a mixer for the overall sound balance; instead, they approached the performance as a traditional chamber ensemble, which works without a conductor and where each member balances his or her own level in relation to the other players. However, as there is an

… ever growing need to integrate sound systems, there remains a sense in which contemporary electronic music performance practice has deprived the performer of this essentially interactive aspect of performance. (ibid., 185)

In live electroacoustic music, the sound does not necessarily come from the stage but can be placed around the audience; moreover, it can be manipulated by an invisible “conductor”. Consequently, the performer has to some extent been deprived of his or her authority and control. In 1971, during a performance of Kurzwellen, Stockhausen sat

… at the electronic controls and when any idea he consider[ed] inappropriate [was] produced, he promptly phase[d] it out by the twist of a knob. (Peter Heyworth, quoted in Maconie 1976, 246)

Live performance raises a variety of problems and concerns that need to be mentioned. As noted earlier, electroacoustic pieces can only (or mostly) be reproduced on the type of instruments, devices and computer programs originally used. This means that old repertoires can only be recreated on “period” equipment. Consequently, many works of the early electronic music scene cannot be experienced live, as certain equipment and programs are no longer available.

According to Stroppa, combining acoustic instruments with sounds from loudspeakers creates many problems, because there is a mismatch in the behaviour of these two very different types of sound source. To improve the overall sound, one has to create virtual sources with several loudspeakers for each family of electronically produced or processed sounds; this may require hundreds of audio channels to enable a rich electronic texture to diffuse properly in a performance environment where acoustic instruments are also present (Stroppa 1999, 75).

An additional complication is that electronically manipulated sounds do not necessarily indicate human activity; in live performance, most of the time, the gestures of the player are not associated with the resulting sound. Consequently, the link between the performer and the music played (which was very important for the listener in the past) has been broken.

It is often argued that electroacoustic music eliminates any personal interpretation by the performer, since the composer creates the entire musical environment in which the performer acts. However, there are examples where interpretation is possible. The UPIC machine [2. Unité Polyagogique Informatique du CEMAMu. It was devised by Xenakis, developed at CEMAMu (Centre d’Etudes de Mathématique et Automatique Musicales) in Paris, and completed in 1977.] offers such a possibility; it is a digitising system linked to a computer to produce music, and it is operated by moving a light-pen across a graphic screen. Thus, the performer reads the information already stored in the machine by the composer, who prepares all the material including timbre. Since 1988, the UPIC has been able to operate in real time, which makes it possible to use it in live performances. The machine allows for a variety of changes to the composed material during the performance, including re-composition of the parts; therefore, the performer can make his or her own interpretation of the work set up by the composer. The player is required “… to recreate, as in traditional practice, the living expressive thought virtually written on the page” (Bernard 1991, 48). In his score of Temps Réel (1989) for UPIC, Pierre Bernard does not give a definite chronological order of the sound events, leaving his work open to interpretation and further composition. His score outlines details such as rhythmic patterns, average speed and timbres. During the performance, there are several possible changes that can be introduced by the player; these can be applied to the speed of reading the graphics, the direction of reading (reading in the opposite direction results in the inversion of intensities and rhythm), the order of playing the graphics, amplitude, and the choice of attack. The compositional material can be further altered, although certain alterations require a delay of a few seconds before they appear as actual sound; they involve transposition of the material, modification of timbre, and radical change of the overall speed, which allows the work to be stretched out to several hours or squeezed into half a second (ibid., 53–57).

Digital music technology adopted the MIDI protocol in 1983; it allows the performer to trigger pre-composed fragments and to change configurations quickly on signal processing devices. The triggering of soundfiles during performance eliminates the use of the click track, which many musicians in the past objected to as a straitjacket (Emmerson 2001, 62). Since MIDI concerns events (notes) and states (patches) and not timbre, the greatest interest in the early days of MIDI was in the use of computers “… in the ‘syntax’ of music rather than its ‘phonology’” (Emmerson 1991, 186). However, from early on, manipulating timbre via MIDI messaging was encouraged by versatile MIDI controllers, such as the MIDI Hands developed in 1984 by Michel Waisvisz [3. Michel recently passed away; see In Memoriam in this issue. Ed.] at STEIM (STudio of Electro-Instrumental Music) in Amsterdam, a centre for research and development of instruments and tools for performers. Moreover, later programs such as Max/MSP integrated MIDI control, so that information received from MIDI devices can be used to process audio in real time, and affect timbre as well as structure.
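
The distinction between events, states and timbre can be made concrete by looking at the bytes of three MIDI 1.0 channel messages. The Python sketch below builds a Note On (an event), a Program Change (a state) and a Control Change; the status bytes are those of the MIDI 1.0 specification, while the helper names, the choice of controller 74 and the mapping of its value to a filter cutoff are hypothetical and only stand for what a receiving program might do.

```python
def _data(value):
    """MIDI data bytes carry 7 bits, so they must lie in the range 0-127."""
    if not 0 <= value <= 127:
        raise ValueError("MIDI data byte out of range")
    return value


def _status(kind, channel):
    """Combine a message type with a channel number (0-15)."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel out of range")
    return kind | channel


def note_on(channel, note, velocity):
    """Event: start a note (status 0x90 plus channel)."""
    return bytes([_status(0x90, channel), _data(note), _data(velocity)])


def program_change(channel, program):
    """State: select a patch (status 0xC0 plus channel)."""
    return bytes([_status(0xC0, channel), _data(program)])


def control_change(channel, controller, value):
    """Continuous control (status 0xB0 plus channel)."""
    return bytes([_status(0xB0, channel), _data(controller), _data(value)])


def cutoff_from_controller(value, low_hz=200.0, high_hz=8000.0):
    """Hypothetical receiver-side mapping of a controller value to a filter cutoff."""
    return low_hz + (high_hz - low_hz) * _data(value) / 127


middle_c = note_on(0, 60, 100)
patch = program_change(0, 5)
sweep = control_change(0, 74, 127)  # controller 74 is an arbitrary choice here
```

None of these messages describes a sound; the note and patch numbers only select what the receiving device already contains. Timbre is affected only when the receiver interprets a stream of controller values as a parameter of its own processing, as `cutoff_from_controller` does in this sketch.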

Computer-assisted performances have been made possible with the contribution of many research centres around the world, most notably IRCAM, which has always had an affiliation with real-time applications. An early example of what IRCAM has offered in this field is the program Patcher, developed in the early 1990s, which controls commercial MIDI equipment and machines made at IRCAM such as the MIDI flute, the 4X processor and the Matrix system for sound distribution and spatialisation (Lippe 1991, 220). IRCAM continues to upgrade and develop real-time applications and devices for performance (for latest developments and details see the IRCAM website).

Computers operate without making mistakes (provided they are programmed properly and have sufficient memory), thus allowing the performer to concentrate on other aspects of music-making, such as expressivity. Chadabe (1999, 25–26) states that because performance tasks are divided between software programs and human players, people who have not studied an instrument are able to participate in musical actions at an artistic level. Max Mathews’ Conductor Program allows the performer to concentrate on “… the timing, the tempo, the dynamics, and, perhaps even more important, the shading, the attacks and decays, and the timbral qualities of the notes” (Mathews, quoted in ibid., 25). However, composers who use interactive media do not allow listeners (who are also the performers of interactive pieces) to have significant control over their compositions, for fear that their work might be ruined. A solution was suggested by Subotnick, who introduced an aleatory aspect in the CD-ROM version of his composition All My Hummingbirds Have Alibis (1991). By offering many parallel paths as possible alternatives, he limited the performer’s role to a harmless choice between already composed options. It thus becomes clear that computer-assisted performances challenge traditional notions such as the skills that a performer is normally expected to have, as well as the role of the composer, who becomes less responsible for the final product. In many cases, instead of making a musical composition, the composer creates a system that fulfils the role of the performer (ibid., 29).

In the tradition of acoustic music, the performer’s fluctuations and slight shifts around the music notation depend essentially on information that is constantly received from the performance environment. However, the machine is not able to operate in a similar manner.

Assimilating a real-time device to a performer simply because it starts its own sequence alone is degrading the performer’s competence, asserting that a rubato or a slightly different tempo is the heart of true music is intellectually questionable and musically weak, if not a purely ideological claim (Stroppa 1999, 44).

A more positive attitude sees the composer as an explorer of the creative potential of technology. The composer sets a context, wherein a collaboration of humans and machines can be made possible (Rowe 1999, 87).

In conclusion, notation had to expand its capacity in order to integrate the new sound discoveries of the composer. It quickly became complicated and was addressed mainly to computing systems; at the same time, it had to be simplified into forms of graphic representation in order to be used by the live performer. The score almost lost its traditional meaning, so that it had to be re-evaluated and its continued existence justified.

Likewise, performance was redefined, because in electroacoustic music there are acousmatic concerts without visible performers. Moreover, when performers participate in the music-making on stage, their gestures are not always associated with the sound product. In addition, computer-assisted performances challenge the traditional competencies of the performer and the role of the composer.

Electroacoustic music has enabled access to a multitude of sounds, in ways unimaginable to the composer and the performer of the past. It is inevitable that traditional music notions such as the score and performance adapt accordingly, as their potential cannot be fully exploited if they remain confined within the borders of their traditional design.

Bibliography

Appleton, Jon H. and Ronald C. Perera, eds. The Development and Practice of Electronic Music. Englewood Cliffs NJ: Prentice-Hall, 1975.

Bernard, Pierre. “The UPIC as a Performance Instrument.” Contemporary Music Review 6/1 (1991), pp. 47–57. Edited by Peter Nelson and Stephen Montague, trans. by Richard Easton.

Bevelander, Brian. “Observations on Live Electronics.” Contemporary Music Review 6/1 (1991), pp. 151–57. Edited by Peter Nelson and Stephen Montague.

Chadabe, Joel. “The Performer is Us.” Contemporary Music Review 18/3 (1999), pp. 25–30. Edited by Peter Nelson and Nigel Osborne.

Chavez, Carlos. Towards a New Music: Music and Electricity. Trans. by Herbert Weinstock. New York: Da Capo Press, 1975.

Cole, Hugo. Sounds and Signs: Aspects of Musical Notation. London: Oxford University Press, 1974.

Cutler, Chris. “A History of Plunderphonics.” http://www.l-m-c.org.uk/texts/plunder.html. [Last accessed 2001. The link is no longer active; however, a recent version of the article can be found on the author’s website at http://www.ccutler.com/ccutler/writing/plunderphonia.shtml.]

Cutler, Chris. “A History of Plunderphonics.” Musicworks 60 (Fall 1994). Also published in Resonance 3.2 and 4.1, in Kostelanetz and Darby (eds.), Classic Essays on 20th Century Music (New York: Schirmer Books, 1996) and in Simon Emmerson (ed.), Music, Electronic Media and Culture (London: Ashgate Press, 2000). The article is also available on the author’s website at http://www.ccutler.com/ccutler/writing/plunderphonia.shtml [last accessed 28 August 2008].

Emmerson, Simon, ed. The Language of Electroacoustic Music. London: Macmillan Press, 1986.

Emmerson, Simon. “Live Electronic Music in Britain: Three Case Studies.” Contemporary Music Review 6/1 (1991), pp. 179–95. Edited by Peter Nelson and Stephen Montague.

Emmerson, Simon and Denis Smalley. “Electro-acoustic Music.” The New Grove Dictionary of Music and Musicians. 2nd edition, vol. 8. Edited by Stanley Sadie. London: Macmillan, 2001, pp. 59–65.

Griffiths, Paul. A Guide to Electronic Music. London: Thames and Hudson, 1979.

Hodgkinson, Tim. “Interview with Pierre Schaeffer.” ReR Quarterly 2/1 (1987).

Kanno, Mieko. “The Role of Notation in New Music.” http://www.hud.ac.uk/schools/music+humanities/music/newmusic/notation_role.html. [Last accessed 2001. Link is no longer active.]

Kennedy, Michael, ed. The Concise Oxford Dictionary of Music. 4th edition. Oxford University Press, 1996.

Lippe, Cort. “Real-time Computer Music at IRCAM.” Contemporary Music Review 6/1 (1991), pp. 219–24. Edited by Peter Nelson and Stephen Montague.

Maconie, Robin. The Works of Karlheinz Stockhausen. New York: Oxford University Press, 1976.

Manning, Peter. “Computers and Music Composition.” Proceedings of the Royal Musical Association 107 (1980–81), pp. 119–31. Edited by David Greer.

Montague, Stephen. “Mesias Maiguashca” (interview in Baden-Baden, 25 January 1989). Contemporary Music Review 6/1 (1991), pp. 197–203. Edited by Peter Nelson and Stephen Montague.

Rowe, Robert. “The Aesthetics of Interactive Music Systems.” Contemporary Music Review 18/3 (1999), pp. 83–87. Edited by Peter Nelson and Nigel Osborne.

Schaeffer, Pierre and Guy Reibel. Solfège de l’Objet Sonore. Paris: Editions du Seuil, 1966. [Incl. 6 CDs.]

Stockhausen, Karlheinz. “Two Lectures.” Die Reihe 5 (1961), pp. 59–82.

Stroppa, Marco. “Live Electronics or… Live Music? Towards a Critique of Interaction.” Contemporary Music Review 18/3 (1999), pp. 41–77. Edited by Peter Nelson and Nigel Osborne.

Wishart, Trevor. On Sonic Art. Edited by Simon Emmerson. Amsterdam: Harwood Academic Publishers, 1996. [Incl. CD.]
