The Phenomenology of Musical Instruments: A Survey

An earlier version of this paper, entitled “The Acoustic, the Digital and the Body: A Survey on Musical Instruments,” was presented at NIME 2007 (New York City USA, 6–10 June 2007). It has been revised, updated and expanded for publication in eContact! 10.4.

Introduction

We are currently witnessing an extraordinary boom in the research and development of digital musical instruments. This explosion is partly caused by advances in tangible user interfaces and new programming paradigms in computer science. It is also driven by the curiosity and æsthetic demands of musicians who, in a Varèseian manner, demand new instruments for new musical ideas. A plethora of new audio programming languages and environments now exist, allowing musicians to develop their own instruments or compositional systems. These environments work seamlessly with new sensors and sensor/controller interfaces, many of which are open source and incredibly cheap. This makes the design and implementation of novel controllers relatively simple, a reversal of the situation of merely a decade ago. On the net one finds plenty of the information (languages, protocols, interfaces and schematic diagrams) needed for the design of such instruments, and people altruistically post their inventions on video sharing websites in order to spread their ideas (an altruism which, in open source culture, brings various personal advantages). It is an enthusiastic culture of sharing information, code, instrument design and (of course) music.

This outburst of research and development in musical tools (where most of the interesting research comes from academia and DIY people, as opposed to the commercial sector) is also a response to the dissatisfaction that audiences of computer music performances tend to feel due to the lack of perceptual causality between the bodily gestures of the musician and the resulting sound. No such lack of causality is found in acoustic instruments, and people are realising that it is not just a matter of “getting used” to the new, disembodied mode of laptop performance; fundamental to musical performance is the audience’s understanding of the performer’s activities, a sharing of the same “ergonomic” or activity space. Recent research on mirror neurons in brain science (Molnar-Szakacs & Overy 2006; Godøy 2003; Leman 2008), on thinking as collective activity in sociology (Hutchins 1995) and on enactivism in biology (Varela et al. 1991) yields further understanding of embodied performance. This development is closely related to the realisation in cognitive science of the role of the body and the environment in human cognition. The fields of psychology, HCI, engineering, ergonomics and music technology all recognise the role of embodiment in the design of technological artefacts. For these research programmes, music technologies are particularly interesting case studies, as music is a performative, highly embodied, real-time phenomenon that demands impeccable support for manual dexterity, ergonomic considerations and solid timing. As an activity that is focused, performative, real-time, rooted in tradition and, last but not least, highly enjoyable, music is proving to be one of the most interesting cases for studies of the human-machine relationship.

In a nutshell, the situation is this: after decades of computer music software that focused on the production environment (composing or arranging musical patterns), there is now high demand for, and interest in, the computer as a platform for the design of expressive musical instruments. Instead of prepared “projects” or “patches”, the desire is for instruments that are intuitive, responsive and good for improvisation: instruments that provide a potential for expression, depth and mystery that encourages the musician to strive for mastery. Currently, music improvised with computers is typically characterised by long, extended parts and slow formal changes. Improvisation with acoustic instruments, on the other hand, can be fast, unpredictable and highly varied in form, even within the same piece or performance. The desire is now for digital instruments that have expressive depth, ease of use and intuitive flexibility.

The Aim of the Survey

Research Questions

In the light of the differences between acoustic and digital instruments, we decided to conduct a survey with practitioners in the field, both acoustic and digital musicians (categories that typically overlap). In the survey we were specifically concerned with people’s experience of the differences in playing acoustic and digital instruments. The approach was phenomenological and qualitative: we wanted to know how musicians or composers describe their practice and the relationship they have with their musical tools, whether acoustic or digital. We deliberately did not define what we meant by “digital instrument” (such as sequencer software, a graphical dataflow language, a textual programming language or a tangible sensor interface coupled to a sound engine), as we were interested in how people themselves define the digital, the acoustic and the relationship between the two. We were intrigued to know how people rate the distinctive affordances and constraints of these instruments and whether there is a difference in the way they critically respond to their design. Furthermore, we wanted to know if people relate differently to the inventors and manufacturers of these two types of instruments. We were also curious to learn if musical education and the practising of an acoustic instrument result in a different critical relationship with the digital instrument. How does instrumental practice change ideas of embodiment, and does it affect the view of the qualitative properties of the computer-based tool? Finally, we were interested in knowing how people related to the chaotic or “non-deterministic” nature of their instruments (whether they saw it as a limitation or a creative potential) and whether they felt that such a “quality” could be designed into digital instruments in any sensible way.

In order to gain a better understanding of these questions, we asked the participants about their musical background; how intensely they work with the computer; which operating systems they run; what software they predominantly use; and the reasons they have chosen the selected environment for their work.

The Participants

We tried to reach a wide audience with our survey: from the acoustic instrumentalist who has never touched a computer to the live-coder who does not play a traditional instrument. More specifically, we were interested in learning from people with a critical relationship to their tools (whether acoustic or digital): people who modify their instruments or build their own, with the aim of expressing themselves differently musically. We were also curious to hear from people who have used our own software (ixi software), to find out how they experience it in relation to the questions mentioned above.

The replies came from precisely the group we were aiming at. The majority of the participants actively work with one or more of the audio programming environments mentioned in the survey. Very few replies came from people who exclusively use commercial software such as Logic, Reaktor, Cubase or ProTools, although insights from them would have been welcome.

In the end we had around 250 replies, mainly from Western Europe and North America, but a considerable number also from South America and Asia. Only 9 of the participants were female, but it was outside the scope of this survey and research to explore the reasons behind this. We were interested in the age of the participants and how long they had been playing music. We were surprised at how high the mean age was (37 years), distributed as shown in Figure 1.

The Questionnaire

The survey was qualitative, with the main focus on people’s descriptions of, and relationships with, their musical tools. We divided it into six areas:

- Personal Details. A set of demographic questions on gender, age, profession, nationality, institutional affiliation, etc.

- Musical Background. Questions on how the participants define themselves in relation to the survey (musician, composer, designer, engineer, artist, other), how long they have played music, musical education, computer use in music and musical genre (if applicable).

- Acoustic Instruments. Questions about people’s relationship with their instrument (which instrument, how long they have played it, etc.). We asked whether people found their instrument lacking in functionality; whether they thought the instrument has “unstable” or “non-deterministic” behaviour; and if so, how they related to that. We wanted to know how well they knew the history of their instrument and which factors affected its design. We also asked whether it would be beneficial if the human body were different.

- Digital Instruments. We asked which operating system people use and why; what hardware (computer, soundcard, controllers, sensors); what music software; and whether they have tried or regularly use the following audio programming environments: Pure Data, SuperCollider, ChucK, CSound, Max/MSP, Plogue Bidule, Aura, Open Sound World, AudioMulch and Reaktor. We asked about their programming experience and why they had chosen their preferred software. Further, we were interested in knowing if and how people use Open Sound Control (OSC) and whether they use programming environments for graphics or video in the context of their music making.

- Comparison of Acoustic and Digital Instruments. Here we were concerned with the difference between playing acoustic and digital instruments, and with what each type lacks or provides. We asked about people’s dream software; what kind of interfaces people would like to use; and whether people found the limitations of instruments to be a source of frustration or inspiration, and whether that depended on the type of instrument.

- ixi software. This section of the questionnaire is only indirectly related to this paper but part of a wider research programme.

People were free to answer the questions they were interested in and to skip the others, as it would not make sense to force an instrumentalist to answer questions about computers if he/she has never used one. The same goes for the audio programmer who does not play an acoustic instrument. For those interested in the questions, the survey can be consulted on the ixi audio website.

Methodology

The survey was introduced on our website and posted to the ixi mailing list, and we also sent it to various external mailing lists (including SuperCollider, ChucK, Pure Data, Max/MSP, CSound, AudioMulch, eu-gene, livecode). We asked friends and collaborators to distribute the survey as widely as possible, and we contacted orchestras and conservatories and asked them to post the survey on their internal mailing lists. The survey could be answered in nine languages, but the questions themselves were only available in English and Spanish. Unfortunately, we did not have the resources to translate the survey into Japanese, even though a quarter of the visitors to our website come from Japan, where there is a strong culture of using audio programming languages.

After three months of receiving replies, we started working on the data. We analysed each reply and put it into a database. All the quantitative data was retrieved programmatically into the database, but as the questionnaire was largely qualitative (people wrote their answers in the form of descriptive narrative), we had to interpret some of the data subjectively. For this we placed each reply on a set of bipolar continua (each marked from 1 to 5), extracting the following “archetypal” elements:

- Abstract vs. graphical thinking: i.e. the tendency for working with textual vs. dataflow programming environments.

- Preference of self-made vs. pre-made tools.

- Embodied vs. disembodied emphasis in playing and making instruments or compositions.

- “Techie” vs. “non-techie”: here we tried to gauge the level of people’s “computer-literacy” and programming skills.

- Academic vs. non-academic. We were interested in the question of how these audio programming environments (that mostly have their origins in academia) have filtered out into the mainstream culture.

In order to test how reliable this subjective method of categorising the answers was, we selected five random replies and gave the same set of replies to five different people to analyse. The comparison of the five people’s analyses was satisfactory. The results were almost identical, with some minor differences to the left or right on the continuum, but never opposite interpretations.
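The consistency check described above can be illustrated with a small sketch. This is not the procedure used in the survey: the scores below are invented for illustration, the helper names are hypothetical, and “opposite interpretations” is read here, as an assumption, to mean that two raters place the same reply on opposite sides of the midpoint (3) of a 1 to 5 continuum.

```python
from itertools import combinations

MIDPOINT = 3  # centre of the 1-5 bipolar continuum (assumed reading)

def side(score):
    """Return -1, 0 or +1 for a score left of, at, or right of the midpoint."""
    return (score > MIDPOINT) - (score < MIDPOINT)

def spread(scores):
    """Largest difference between any two raters' scores."""
    return max(scores) - min(scores)

def opposite_interpretations(scores):
    """True if any two raters placed the reply on opposite sides of the midpoint."""
    return any(side(a) * side(b) == -1 for a, b in combinations(scores, 2))

# Hypothetical data: one dimension, three replies, five raters each.
ratings = {
    "reply_1": [4, 4, 5, 4, 4],  # minor differences, same side
    "reply_2": [2, 1, 2, 2, 2],  # minor differences, same side
    "reply_3": [2, 4, 3, 3, 3],  # two raters on opposite sides
}

for reply, scores in ratings.items():
    print(reply, "spread:", spread(scores),
          "opposite:", opposite_interpretations(scores))
```

With real data, a result in line with the description above would show small spreads and no reply flagged as having opposite interpretations.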

Evaluation and Findings

There were many questions in our survey that addressed the issue of acoustic vs. digital instruments from different angles, but these were varied approaches or “interfaces” to a few underlying topics of interest. In the following sections we look at some selected topics and what we learned from the answers.

The Survey Participant

We have already mentioned the high mean age of the survey participants. From analysing the demographics of the people answering the survey, we could identify two typical groups, to which more than 90 percent of the participants belong:

Group 1. People that have had over 20 years of studying music and playing acoustic instruments, therefore typically 30–40 years of age or older. They have been using the computer for their music for at least 10 years and usually have some form of programming experience. Many write their own software or use the common audio programming environments available today. This group has thought much about their instruments and why they have chosen to use digital technologies in the production and performance of their music.

Group 2. This group consists of younger people that grew up with the computer and are highly computer literate. Many of them have not studied music formally or practised an acoustic instrument but use the computer as their instrument or environment for creating music. Here, of course, one could view all the time in front of the computer screen (performing any task) as part of the musical training.

Naturally, there was some degree of overlap between the two groups.

It might be illustrative to look at which operating systems the participants run their tools on. Here we see that 45 people use Linux/GNU, 105 use Windows and 88 use Mac OS. Of these, 16 stated that they use both Mac OS and Linux/GNU, 30 use both Mac OS and Windows, 25 use both Linux/GNU and Windows, and 7 use all three. Other operating systems in use were NeXTstep, BSD and Solaris, with one person for each system.
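As a rough aid to reading these overlapping figures, the number of distinct participants using at least one of the three main systems can be estimated by inclusion-exclusion. The sketch below is an illustration under stated assumptions, not a result reported by the survey: it assumes the per-system figures count everyone who uses that system at all, and, since the text does not say whether the pairwise figures include the 7 people who use all three, it computes both readings. The function names are hypothetical.

```python
def users_inclusive(singles, pairs, triple):
    """Distinct users if each pairwise count also includes the all-three users."""
    return sum(singles) - sum(pairs) + triple

def users_exclusive(singles, pairs, triple):
    """Distinct users if each pairwise count means 'exactly these two systems'."""
    exactly_one = sum(singles) - 2 * sum(pairs) - 3 * triple
    return exactly_one + sum(pairs) + triple

singles = [45, 105, 88]  # Linux/GNU, Windows, Mac OS
pairs = [16, 30, 25]     # Mac+Linux, Mac+Windows, Linux+Windows
triple = 7               # all three systems

print(users_inclusive(singles, pairs, triple))  # 174
print(users_exclusive(singles, pairs, triple))  # 153
```

Either way, the total is well below the roughly 250 replies, which is consistent with participants having been free to skip questions.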

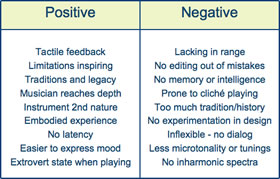

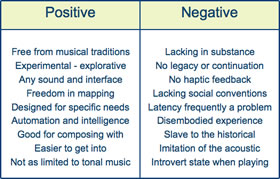

Acoustic vs. Digital Instruments

The question we were concerned with here is how people experience the different qualities of acoustic instruments and digital instruments or software tools. Apart from the experiential and perceptual differences, do people think that the tools enframe or influence their work?

Many people found that an important difference between these two types of instruments lies in the fact that the digital instrument can be created for specific needs, whereas the player has to “mould oneself” to the acoustic instrument. As the composed digital instrument can be very work-specific, it lacks the generality of acoustic instruments. In this context, the question of originality came up frequently. People found it possible to be more original using composed, digital instruments, precisely because of their lack of history and tradition. As one survey participant put it: “when playing an acoustic instrument, you are constantly referring to scales, styles, conventions, traditions and clichés that the instrument and the culture around it imposes on you. A musician can just play those conventions in autopilot without having to THINK at all. It’s easy and unchallenging”. This, of course, is a double-edged sword, as it is difficult in a live performance using software tools to draw on the musical reservoir on the spur of the moment. All such decisions have to be pre-programmed and thus pre-planned, which subjects the performance to a certain degree of categorisation and determinacy. The issue of originality also points to the limited scope of some commercial software environments, where users are virtually led into producing music of certain styles.

One participant talked about the enriching experience of learning the vocabulary and voice of an instrument like the viola down to its finest details, whereas with computer technology the voice is too broad to get to know thoroughly. In this vein, other participants expressed the wish for more limited expressive software instruments, i.e. not software that tries to do it all, but software that “does one thing well and not one hundred things badly.” They would like to see software that has an easy learning curve but incorporates a potential for further in-depth exploration, so that they do not become bored with the instrument. True to form, the people asking for such software tools had a relatively long history as instrumentalists.

Many people were concerned with the arbitrary mappings in digital instruments (Hunt et al. 2002). There are no “natural” mappings between the exertion of bodily energy and the resulting sound. One participant described digital instruments as “more of a mind/brain endeavour.” He continued, “it is more difficult to remove the brain and become one with the physical embodiment of performing”. Others talked about the haptic feedback of acoustic instruments and the direct experience of the source of the sound as something that computer systems lack, with their buttons and sliders, soundcards and cables going out to remote speakers. The computer was often seen as a symbolic system that can be configured differently according to the situation, and thus as highly open, flexible and adaptable to an infinite range of situations. The acoustic instrument was seen, in this context, as a non-symbolic tool that one has an embodied relationship with, one that is carved into the motor memory of the body and not defined by the symbolic and conversational relationship we have with our digital tools.

Some participants expressed how they found their time better spent working with digital technology, creating music or “experimenting with sound”, rather than practising an acoustic instrument. They could be creating music instantly, even of releasable quality, as opposed to spending endless hours practising the acoustic instrument. Conversely, others talked about the dangers of getting side-tracked when using the computer: constantly looking for updates, reading mailing lists, testing other people’s patches or instruments, even ending up browsing the web whilst trying to make music.

Some talked about the “frightening blank space” of the audio programming patcher (its virtually infinite expressive possibilities) and found retreat in limited tools or acoustic instruments, whereas others were frustrated with the expressive limitations of acoustic instruments and craved more freedom and more open work environments. Naturally, this went hand in hand with people’s use of environments such as SuperCollider, ChucK, Pure Data and Max/MSP, as opposed to a preference for less open or more directive software like ProTools, Cubase, GarageBand, Fruityloops, etc. Here one might consider personality differences and cognitive styles as important factors in how people relate to the engineering task of building one’s own tools, but also with regard to the guidance the tool affords in terms of musical expression (Eaglestone et al. 2008).

Another issue of concern was latency. An acoustic instrument does not have latency as such, although in some cases there is a slight delay between the energy applied and the sounding result (as in the church organ). In digital instruments there might be up to 50 ms of latency that people put up with when playing a hardware controller, and many seconds of latency in networked performances, but there is also the organisational latency of opening patches, changing effect settings or live-coding, where one has to type a whole function before hearing the result (typically by hitting the Enter key). This artificial latency is characteristic of digital instruments, but it is not necessarily a negative property, except when playing hardware controllers, a situation that has improved drastically in recent years.

Related to this, some people reported discontent with the uncertainty about the continuation of commercial digital instruments or software environments. Their production could be discontinued, or they might not be supported on new operating systems. Unless open source is used, proprietary protocols could render the instruments and generative compositions practically objects of archaeology. In this regard, acoustic instruments have a longer lifetime, which makes practising them more likely to be a continuous path to mastery.

Affordances and Constraints

Here we were interested in the question of whether people relate differently to the affordances and the limitations of their acoustic and digital instruments.

It was commonly agreed that the limitations of acoustic instruments were a source of inspiration and creativity. People talked about “pushing the boundaries” of the instrument and exploring its limits. Many participants said the same about digital instruments, but more commonly people were critical of the limitations of software. People felt that software limitations are due to engineering or software design, as opposed to the physical limitations of natural materials like wood or strings. Interestingly, the boundaries of these two types of instruments are of a different nature. In acoustic instruments the boundaries are typically those of pitch, timbre, amplitude and polyphony. In digital instruments the limiting boundaries are more of a conceptual nature, typically those of musical forms, scales, structure, etc. From this perspective, the essence of the acoustic instrument could be seen as that of a resonating physical body, whereas the digital instrument’s body is theoretical, formal and generic.

Most of the skilled instrumentalists experienced the limitations of their acoustic instruments positively and saw the potential of the instrument, both discovered and undiscovered, as the foundation of an interesting relationship based on depth. People usually had an “emotional” affection towards their acoustic instrument (one of our questions asked about this) and they bonded with its character. The picture was very different with regard to people’s feelings about their digital instruments. Survey participants often expressed frustration with the technology, with irritating limitations of software environments, and dissatisfaction with how hardware needs constant upgrading, maintenance and, not surprisingly, electricity. One respondent talked about how the limitations of acoustic instruments change or evolve constantly according to skill level but also mood, whereas the limitations of software, once it has been learned and understood, are the limitations of its design. As another participant put it: “the creative challenge [in digital instruments] is to select and refine rather than expand.”

In general people felt that the main power of digital instruments is the possibility of designing the instrument for specific needs. The process of designing the instrument becomes a process of composing at the same time. The fact that people talk about “composing instruments” (Schnell and Battier 2002) reveals a clear distinction from the acoustic world where instruments tend to be more general in order to play more varied pieces. This also explains why we rarely see the continuity of a digital instrument or musical interface through time: each instrument tends to be made for a specific and not general purpose. The power to be able to store conceptual structures in the tool itself renders it more specific and unique for a certain musical piece or performance and less adaptive for other situations. There is therefore a continuum where instruments are unique and specific on the one side and general and multi-purpose on the other. Creating a digital instrument always involves decisions of where to place the instrument on that continuum.

The Instrument Maker Criticised

People were more critical of software tools than of acoustic instruments. There could be many reasons for this, one being that musical software is such a new field and naturally experimental, whereas acoustic instruments have had centuries of refinement. Another observation, supported by our data, is that people normally start to learn an acoustic instrument at a very young age, when things are more likely to be taken for granted. Students of acoustic instruments see it as their own fault if they cannot play the instrument properly, not as an imperfection of the instrument’s design. Our survey participants had a different relation to digital instruments: they are more likely to criticise the digital instrument and see its limitations as a weakness of design rather than as their own insufficient understanding of the system. This is reflected in the way people relate to the instruments themselves. The fact that acoustic instruments appear to have existed forever (and the survey affirms that the majority of people do not have a very thorough historical knowledge of their instrument) makes people less likely to step back and actively criticise their instrument of choice.

Almost all the participants stated that their acoustic instruments had been built from ergonomic and æsthetic/timbral considerations, and saw the evolution of their instrument as a continuous refinement: moulding it to the human body and extracting the most expressive sound out of its physical material. There is, however, evidence that orchestral instruments were developed primarily with a view to stabilising intonation and increasing acoustic power or loudness (Jordà 2005, 169). In reality, the young but strong research field of digital music instruments and interface building is perhaps more consciously concerned with ergonomics and human-tool interaction than the history of acoustic instrument building has been. Ergonomics has at least become more prominent in the way people think when building their musical tools. An agreed view was that the difficulty of building masterly interfaces in the digital realm is largely due to the complexity of the medium and the unnatural or arbitrary nature of its input and output mappings.

In Being and Time, the philosopher Martin Heidegger talks about two cognitive or phenomenological modalities in the way we look at the world (Heidegger 1995, 102–22). There is the usage of tools, where the tools are ready-to-hand and are applied in a finely orchestrated way by the trained body, and then there is a more theoretical relationship with the tool, called present-at-hand, which arises for example when the tool breaks. Here, the user of the tool has to actively think about what is wrong with it and look at its mechanism from a new and critical stance. Heidegger takes the example of a carpenter who uses the hammer day in and day out without thinking about it. Only when the head falls off the hammer will the carpenter look at the hammer from a different perspective and establish a theoretical and analytical relationship to it. As digital instruments and software normally run on the computer, we are more likely to experience these phenomenological breaks. The tool becomes present-at-hand and we have to actively think about why something is not working, or what would be the best way of applying a specific tool to a certain task. In fact, the way we use a computer and digital instruments is a constant oscillation between these two modes of being, ready-to-hand and present-at-hand. We forget ourselves in working with the tool for a while, but suddenly we have to open or save a file, retrieve a stored setting, switch between plug-ins or instruments, zoom into some details, open a new window, shake the mouse to find the cursor, plug in the power cable when the battery is low, kill a chat client when a “buddy” suddenly calls in the middle of a session, etc. In this respect, many of the participants saw the computer as a distracting tool that did not lend itself to deep concentration or provide the conditions for the experience of flow.

Entropy and Control in Instruments

Here we were interested to know how people relate to the non-deterministic nature of their instruments, and whether this differs between acoustic and digital instruments. The responses on this topic followed two trends. It was mostly agreed that the accidental or entropic nature of acoustic instruments could be a source of joy and inspiration. Some people talked about playing with the tension of going out on the “slippery ice”, where there was less control of the instrument and where it seemed that the instrument had its own will or personality. Typically, people did not have the same view of digital instruments: when they go wrong or become unpredictable, it is normally because of a bug or a fault in the way they are set up or designed. However, some people enjoyed and actively searched for such “glitches” in software and hardware, a practice that has given rise to the well-known musical style called “Glitch”.

That said, according to our data, the process of exploration is a very common way of working with software, where the musician/composer sets up a system in the form of a space of sonic parameters in which he or she navigates until a desired sound or musical pattern is encountered. This style of working is quite common in generative music and in computationally creative software where artificial intelligence is used to generate the material and the final fitness function of the system tends to be the æsthetic judgement of the user.

Time and Embodiment

As most people would guess, we found that the longer people had played an acoustic instrument, the more they desired physical control and emphasised the use of the body in musical performance. People with highly advanced motor skills, the experts of their instruments, regard these as important factors in music making. Playing digital instruments seems to be less of an embodied practice (one in which motor memory has been established), as the mapping between gesture and sound can be changed so easily by changing a variable, a setting, a patch or a program. The digital instrument involves more cognitive activity, as opposed to the embodied activity of playing the acoustic instrument. Some respondents noted that working with digital instruments or software systems had forced them to re-evaluate the way they understand and play their acoustic instrument. Of course, the converse must be true as well.

One thing has to be noted here. Most of the people who answered the survey were both acoustic and digital instrumentalists and embraced the qualities of both worlds. It seems that people subscribe positively to the qualities of each of the two instrumental modalities, acoustic and digital, and do not try to impose working patterns that work with one type of instrument onto the other. In general, people seem to approach instruments on their own merits and choose to spend time with a selected instrument only if it gives them some challenge, excitement and the prospect of nuanced expression. It is in this context that we witness a drastic divergence between the two types of instruments: as opposed to the willingness to spend years practising an acoustic instrument, the digital instrument has to be easily learned, understood and mastered in a matter of hours for it to be acceptable. (1) This fact emphasises the role of tradition and history in relation to acoustic instruments, and the role of progress, inventiveness and novelty with regard to instruments founded on the new digital technologies.

Interesting Comments

There were some comments that are worth printing here due to their direct and clear presentation:

“I don’t feel like I’m playing a digital instrument so much as operating it.”

“Eternal upgrading makes me nervous.”

“full control is not interesting for experimentation. but lack of control is not useful for composition.”

“Can a software ‘instrument’ really be considered an instrument, or is it something radically different?”

“The relationship with my first instrument (guitar) is a love/hate one, as over the years I developed a lot of musical habits that are hard to get rid of ;-)”

“I maintain a certain relationship with my machines. It is impossible for me to think of reselling a machine.” (translated from French)

“I think acoustic instruments tend to be more right brain or spatial, and digital instruments tend to be more left brain and linguistic.”

Discussion

There were many surprising and interesting findings that came out of this survey. First of all, we were intrigued by the high mean age of the survey participants. We wondered if the reason for the high average age could be the nature of the questions, especially those regarding embodiment. Perhaps such questions are not as relevant to younger people who have been brought up with the computer and are less alienated by the different modes of physical vs. virtual interaction? One explanation might be that the mean age of the survey participants reflects that of the members of the mailing lists we posted the survey to, but we doubt that. More likely, the questions resonated better with more mature people. It is illustrative that the majority of people answering the survey were involved with academia or had an academic or conservatory education. This helps to explain the high mean age but also the high level of analysis that most people had applied to their tools. We noted that the time spent playing an instrument reinforces the musician’s focus on embodiment, and as such the questions of this survey might have connected better with the older musicians.

An important point to raise here is that whereas the survey focused on people’s perception of the differences between acoustic and digital instruments, the fact is that most people are content to work with both instrument types and confidently subscribe to the different qualities of each. Many of the participants use the computer in combination with acoustic instruments, especially for things that the computer excels at, such as musical analysis, adaptive effects, building hyper-instruments and the use of artificial intelligence.

A clear difference between the acoustic and digital instruments is the polarity between the instrument maker and the musician in acoustic instruments. This is further forked by the distinction of the composer and the performer. In the field of digital instruments, designing an instrument often overlaps with the musical composition itself (or at least designing its conditions) which, in turn, is manifested in the performance of the composition, most typically, by the composer/instrument builder herself. This relates to a specific continuum of expression in digital instrument design that we don’t see as clearly in acoustic instruments: a digital musical instrument can be highly musical, a signature of the composer herself, rendering it useless for other performers. But it could also be generic, universal, and open for musical inscriptions, thus more usable to everybody.

Another interesting trait we noticed in the survey concerned open source software. People professed that the use of open source tools was more community building, collaborative, educative and correct than working with commercial software. Many stated that open source should be used in education precisely for these reasons and because of the continuity that open formats offer. Furthermore, many people were using Linux (or expressing a desire to do so) because they felt that they had more control over things and were less directed by some commercial company’s ideas of how to set up the working environment or compose/perform music. The questions of open protocols and standards, of legacy in software, of collaborative design and freedom to change the system were all important issues here.

Future Work

The topics of this survey revealed many more questions that would have been inconceivable without the process of making this survey and reading the replies. In continuation of our research we designed another survey that is more focussed on a specific software package, the ixiQuarks, and we are in the process of receiving data from users of that software (those interested can find it here). We are also interviewing musicians and users of ixi software for further understanding of these issues. This has resulted in a clarified understanding of digital musical instruments as a type of epistemic tool, and we have written further on this topic, soon to be published.

Notes

- Yet it needs to be challenging for people in order to keep up the interest. Csikszentmihalyi’s (1996) elaboration on the flow concept is illustrative here. In order to be interesting for the human performer, the musical instrument would have to offer an equal balance of skill and challenge. Too much challenge without skill results in anxiety, but too much skill without any challenge would result in boredom.

References

Csikszentmihalyi, Mihaly. Creativity: Flow and The Psychology of Discovery and Invention. New York: Harper Collins, 1996.

Duignan, Matthew, James Noble and Robert Biddle. “A Taxonomy of Sequencer User-Interfaces.” Proceedings of the International Computer Music Conference (ICMC) 2005: Free Sound (Barcelona, Spain: L’Escola Superior de Música de Catalunya, 5–9 September 2005).

Eaglestone, Barry, Catherine Upton and Nigel Ford. “The Compositional Process of Electroacoustic Composers: Contrasting Perspectives.” Proceedings of the International Computer Music Conference (ICMC) 2005: Free Sound (Barcelona, Spain: L’Escola Superior de Música de Catalunya, 5–9 September 2005).

Eaglestone, Barry, Nigel Ford, Peter Holdridge and Jenny Carter. “Are Cognitive Styles an Important Factor in Design of Electroacoustic Music Software?” Journal of New Music Research 37/1 (2008), pp. 77–85.

Godøy, Rolf. “Motor-mimetic Music Cognition.” Leonardo 36/4 (2003), pp. 317–19.

Heidegger, Martin. Being and Time. Oxford: Blackwell Publishers, 1995.

Hunt, Andy, Marcelo M. Wanderley and Matthew Paradis. “The Importance of Parameter Mapping in Electronic Instrument Design.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2002 (Dublin: Media Lab Europe, 24–26 May 2002). Limerick: University of Limerick, Department of Computer Science and Information Systems.

Hutchins, Edwin. Cognition in the Wild. Cambridge MA: MIT Press, 1995.

Jordà, Sergi. “Digital Lutherie: Crafting musical computers for new music’s performance and improvisation.” PhD thesis. Barcelona: Universitat Pompeu Fabra, 2005.

Leman, Marc. Embodied Music Cognition and Mediated Technology. Cambridge MA: MIT Press, 2008.

Magnusson, Thor. “ixi software: Open Controllers for Open Source Software.” Proceedings of the International Computer Music Conference (ICMC) 2005: Free Sound (Barcelona, Spain: L’Escola Superior de Música de Catalunya, 5–9 September 2005).

_____. “Screen-Based Musical Instruments as Semiotic Machines.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2006 (Paris: IRCAM—Centre Pompidou, 4–8 June 2006).

Molnar-Szakacs, Istvan and Katie Overy. “Music and Mirror Neurons: From Motion to ‘E’motion.” Social Cognitive and Affective Neuroscience 3/2 (2006).

Schnell, Norbert and Marc Battier. “Introducing Composed Instruments, Technical and Musicological Implications.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2002 (Dublin: Media Lab Europe, 24–26 May 2002). Limerick: University of Limerick, Department of Computer Science and Information Systems.

Varela, Francisco, Evan Thompson and Eleanor Rosch. The Embodied Mind. Cambridge MA: MIT Press, 1991.

Other Articles by the Authors

Hurtado, Enrike.

_____. “ixi software.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2002 (Dublin: Media Lab Europe, 24–26 May 2002). Limerick: University of Limerick, Department of Computer Science and Information Systems. Also in Cybersonica. ICA (London UK).

_____. “Análisis de Software Musical y su Influencia en la Expresión Artística.” “Música, Software, Cultura.” ZEHAR magazine (Arteleku) 53 (2004).

_____. “The Acoustic, the Digital and the Body: A Survey on Musical Instruments.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2007 (New York: New York University, 6–10 June 2007).

Magnusson, Thor. “Intelligent Tools in Music.” Artificial.dk 14 (December 2005). http://artificial.dk

_____. “Screen-Based Musical Instruments as Semiotic Machines.” Proceedings of the International Conference on New Interfaces for Musical Expression (NIME) 2006 (Paris: IRCAM—Centre Pompidou, 4–8 June 2006).

_____. “Generative Schizotopia: SameSameButDifferent v02 — Iceland.” Soundscape: The Journal of Acoustic Ecology 7/1 (Winter 2007).

_____. “The ixiQuarks: Merging Code and GUI in One Creative Space.” Proceedings of the International Computer Music Conference (ICMC) 2007: Immersed Music (Copenhagen, Denmark, 27–31 August 2007).

_____. “Working with Sound.” The Digital Artists Handbook. Lancaster: Folly, 2008.

_____. “Expression and Time: The Question of Strata and Time Management in Art Practices using Technology.” The FLOSS + Art Book. Poitiers: goto10 (forthcoming).
