What You See Is What You Hear

Using visual communication processes to categorize various manifestations of music notation

In electroacoustic music as well as in other domains of contemporary music, a vast number of experimental representations and alternative approaches to music notation have come to life in recent decades. Since the 1960s, composers and artists have explored and documented their work using those alternative notation approaches from various perspectives. The essays about music notation by Earle Brown or Mauricio Kagel at the Darmstädter Ferienkurse für Neue Musik (Thomas 1965) as well as Erhard Karkoschka’s Das Schriftbild der neuen Musik (1966) and John Cage’s Notations (1969) give insight into common practices of that time. Theresa Sauer’s collection of graphic notations and essays about the practices, Notations 21 (2009), can be regarded as a successor of John Cage’s Notations. The See This Sound audiovisuology project comprehensively examined the connection between image and sound in art, media and perception from a rather historical point of view (Daniels and Naumann 2009). In Extended Notation (2013), Christian Dimpker “depicts the unconventional” by developing a coherent and consistent notation system for extended playing techniques as well as the utilization of electroacoustic instrumentation.

Although all these profound works examine alternative notation from different perspectives, one important aspect is almost completely left out: the process of visual communication — how the music is represented visually and, more importantly, how information is conveyed. In other words, how the performers will read and understand the notation. Visual communication processes are the core of the conveyance of musical ideas through notation. Considering and understanding communication processes can be a valuable contribution to the discussion and examination of alternative music notation approaches. Furthermore, the visual communication process is proposed here as one common base that can assist in the categorization of virtually any kind of experimental representation of acoustic phenomena in music notation.

Music Notation — A Definition

Even the general definition of notation in music offered by the Encyclopaedia Britannica raises several important points indicating why the visual communication process is important in music notation:

Musical notation, visual record of heard or imagined musical sound, or a set of visual instructions for performance of music. It usually takes written or printed form and is a conscious, comparatively laborious process. Its use is occasioned by one of two motives: as an aid to memory or as communication. (Bent 2001)

The first sentence of the Britannica’s definition is almost identical to the beginning of the general introduction to music notation in The New Grove Dictionary of Music and Musicians (Sadie 1980), which states: “A visual analogue of musical sound, either as a record of sound heard or imagined, or as a set of visual instructions for performers.” And further on in the text: “… there are two motivations behind the use of notation: the need for a memory aid and the need to communicate” (Sadie 1980). There are two important distinctions to be made between aspects of these two definitions. First, whether the notation is meant to offer a means of recording musical ideas or to serve as guidance on how to play. Second, whether the notation is to be used to memorize music or to communicate it to others. In this context, memorizing can be regarded as a musical form of intrapersonal communication, an exchange of information for and with oneself. So apart from its use as a guide to how to play what the notation is meant to represent through codified signs, symbols and annotations, the main use of the notation of music is communication. The way the conveyance of musical information works is the core of music notation. In other words, music notation makes sense only if the visual record can be understood by the receiver — who is usually a performer of music.

Context

Before we attempt to characterize, define or categorize any kinds of music representation, the context in which they are used needs to be clarified — regardless of whether the representation is in the form of, for example, Western staff notation on paper, live-generated graphics, a photograph or a video score. Contextualization is the question of whether or not the object was initially meant to serve the purpose stated in the definition above, namely to communicate the music that is to be performed. A graphic featuring conventional pitched and unpitched staff notation used in an unconventional manner, such as Mohican Friends (1993) by Brent Michael Davids (Sauer 2009, 59), can be understood within the context of music notation more easily than Blue Line (2006) by Catherine Schieve (Ibid., 216–217), in which a 30-metre piece of canvas painted in blue and white tones, and meant to be installed in a variety of outdoor environments, serves as the score for a musical performance. The simplest explanation — and one that has held a certain amount of currency in the past few decades — is that essentially any visual object could be utilized as the source of a musical interpretation, simply by contextualizing it, whether it makes “musical” sense or not. However, not every visual object intentionally associated with a specific musical performance can realistically be considered as a form of music notation. Visual music, music videos or the work of VJs are initially created to translate already existing music or sounds into an appropriate, related visual representation. The visuals are created according to a sonic input. Initially, they are not normally meant to communicate music to a performer — in the VJ context in particular, the imagery is typically created subsequent to the music it accompanies — and are therefore unlikely to be understood as notation of music.
However, it needs to be clear that abstract graphics created by a VJ, for instance, could be utilized as a musical score simply by contextualizing them. Those visual representations, which inherently do not feature common elements of music notation (similar to the piece of blue cloth in Blue Line), can only be contextualized by clearly indicating their purpose. This is usually done by the composer. By contextualizing it, any visual object can become a music representation intended to mediate the communication of music or musical intention, i.e. a musical score. However, the type of score and whether this score can and should be considered a form of music notation — even if it corresponds to the accepted definition of music notation — still needs to be determined.

The Visual Communication Process

Communication theory is the study of communication processes. Within this particular field of information theory, the main elements of communication (sender, receiver and message) have been defined (Shannon 1948). Schematically, a communication process works as follows: a message is encoded and sent from source to receiver using a communication channel; the receiver decodes the message and gives feedback. In the traditional context of music notation, the source would be the composer, the message is the encoded music, the channel is the visual object (the score) and the receiver decoding the message is a performing musician. Regarding the performance of music, however, the communication process does not create a loop of sending, receiving and feedback as is common in human communication (for instance, a conversation). In the context of music notation, the feedback is not directly addressed to the sender. Rather, it creates a new communication process, which manifests in the complex process of performing and perceiving acoustic phenomena (Truax 2001).

In music notation, the process of coding and decoding information is also called “mapping” (Fischer 2015). In an ideal process, the receiver decodes exactly the same content that the source previously encoded. In this case, the mapping process has, in theory at least, been applied without any loss of information. In an analogous manner, in his 1997 text “Semiotic Aspects of Musicology: Semiotics of Music,” Guerino Mazzola refers to Jean Molino and Paul Valéry and their description of the tripartite communicative character of music. He describes three niveaus: the “poietic”, the “neutral” and the “esthesic”:

[The poietic] niveau describes the sender instance of the message, classically realized by the composer. According to the Greek etymology, “poietic” relates to the one who makes the work of art.

[The neutral] niveau is the medium of information transfer, classically realized by the score. Relating to the poietic niveau, it is the object that has been made by that instance, and which is to be communicated to a receiver. But it is not a pure signal in the sense of mathematical information theory. The neutral niveau is the sum of objective data related to a musical work. Its identification depends upon the contract of sender and receiver on the common object of consideration.

[The esthesic] niveau describes the receiver instance of the message, classically realized by the listener. (Mazzola 1997, 4)

The neutral niveau describes the channel, the score itself. The “identification” and the “contract of sender and receiver” encompass the content of the message, as well as the way this message is understood. The term “contract” is used here, as there should be a kind of mutual agreement between sender and receiver. For instance, in Western staff notation, the understanding of the message (“contract”) is ensured by relying on a system of meaningful signs (staff, note value, etc.) that have been previously learned by both the sender and the receiver. Only due to this “contract” can the message be conveyed. The question that arises now is: what happens if there is no “contract” between sender and receiver? Can the conveying of musical information work if sender and receiver do not rely on a common system of meaningful signs? A visual communication theory and models that define three forms of communication processes will help here.
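The role of the “contract” can be illustrated with a minimal sketch. It is only a toy model, not part of Mazzola’s or Shannon’s formalism: the codebooks, the sign names and the functions `encode` and `decode` are hypothetical and chosen purely for illustration. The mapping is lossless when sender and receiver share one system of signs, and it breaks down when they do not.

```python
def encode(message, codebook):
    """Sender side: translate musical meanings into visual signs."""
    return [codebook[meaning] for meaning in message]


def decode(signs, codebook):
    """Receiver side: look up each sign in the receiver's own codebook."""
    sign_to_meaning = {sign: meaning for meaning, sign in codebook.items()}
    # Signs the receiver has never learned cannot be read; mark them "?".
    return [sign_to_meaning.get(sign, "?") for sign in signs]


# Hypothetical sign systems (assumptions made for this illustration only).
shared_contract = {"C4": "notehead on ledger line", "rest": "rest sign", "forte": "f"}
unrelated_contract = {"C4": "red square", "rest": "blue circle", "forte": "thick line"}

message = ["forte", "C4", "rest"]
score = encode(message, shared_contract)

# Same codebook on both sides: the message is recovered without loss.
print(decode(score, shared_contract))
# No common codebook: every sign stays unread.
print(decode(score, unrelated_contract))
```

In this toy model a missing contract simply makes decoding fail. The models discussed in the following section describe what can happen instead when meaning is generated through interpretation.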

Visual Communication Theory

According to German communication theorist Heinz Kroehl, in visual communication theory there are three models that form the basis of a communication process. Basing his work on the semiotic studies of Charles Sanders Peirce, Kroehl proposes three clearly distinct communication systems: Everyday Life, Science, and Art (Kroehl 1987).

The Everyday Life model refers to real objects that surround us; Kroehl calls this “denotative information”. Our spoken language defines (mostly physical) objects. Objects have a name and we can assume that we are understood by others, using the same language, if we use the right name for the corresponding object. When I say “door”, everybody who is capable of understanding the English language will know what object I am referring to. In discussing music notation, this model is of little or no significance, because it is not precise enough. Even if we use the term “door” and the receiver of our message understands the meaning of the word “door”, the source and the receiver would most likely not have the exact same door in mind. Furthermore, pitch, dynamics and rhythm are not physical objects that can be seen, let alone touched. In order to be more precise and to minimize the loss of information during the mapping process, terms referring to objects that need to be shared between a source and a receiver would need to be precisely defined. Then it would be possible for both to have the same object in mind when using the term “door”. However, those particular definitions are not used in the Everyday Life model, but rather in the Scientific model.

In the Scientific model, signs convey meaning according to definitions and rules; Kroehl calls this “precise information”. Mathematics is such a scientific model. In such models, terms and objects, as well as the coherences between them, are clearly defined to create meaning. Regarding music, Western staff notation is an example of a Scientific model, consisting of a system of specific rules, syntax and modes that together create meaning. This system needs to be learned and understood to be able to apply it for musical performance. There is a pre-defined connection between sign and sonic result. The Scientific system of Western music notation was formed over the course of many centuries, with the neumes — which were developed in the early Middle Ages out of wavy lines guiding the recitation of religious texts — gradually evolving into the staff notation we know and use today. Anyone able to read modern staff notation knows exactly which key to press on a piano keyboard when reading one specific note (e.g., C4, or “middle C”) in a score. Another musician on the other side of the world and reading the very same score will therefore press the very same key on the piano keyboard when reading this note. To interpret this C4 as a completely different pitch, and therefore to press any key other than C4, would be regarded as wrong. 1[1. Within, of course, the Western music system for fully composed music. Differences in tuning standards around the world and indeed throughout history mean that the actual frequency of the note may vary. Although there is scientific variance, semantically speaking the differently tuned C4s are considered to be the “same”. Further discussion of this issue falls outside the scope of the present article.] Music scores which use the Scientific model are based on a system. This could be an established system like Western staff notation or a unique system developed by a composer. The system can be complex or rather simple.
But in any case the graphic objects used in the system aim at a universal validity, at least within the closed system of the score itself. Western staff notation in its current manifestation has been used for a vast number of works by countless composers for over three centuries. Nevertheless, over the course of the past century, and particularly since the 1960s, there have been composers who created unique music notation systems just for their own œuvre, like Anestis Logothetis (1999), or just for one single piece (Cage 1969). In the case of the systematically notated score that is not based on a common system, there are typically a number of interacting and recurring components which have predefined meaning. Visually, individual components with singular meaning have to be clearly recognizable if they are to function properly and consistently within the system. Furthermore, the application of those components is also clearly defined by the composer. The systematic approach, using the Scientific communication model, tries to reduce possible misunderstanding between sender and receiver to a minimum.
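The remark in the footnote above, that one and the same sign (C4) can correspond to different frequencies, can be made concrete with a short sketch. It rests on assumptions that the text itself does not make: twelve-tone equal temperament, the MIDI convention in which C4 is note number 60 and A4 is 69, and two example reference pitches for A4 (440 Hz and a lower 415 Hz). The function name `frequency_hz` is likewise only illustrative.

```python
def frequency_hz(midi_note, a4_hz=440.0):
    """Frequency of a note in 12-tone equal temperament (assumed tuning).

    midi_note follows the MIDI convention (A4 = 69, C4 = 60);
    a4_hz is the reference pitch chosen for A4.
    """
    return a4_hz * 2 ** ((midi_note - 69) / 12)


C4 = 60  # "middle C" in MIDI numbering (assumption of this sketch)

# The same written sign under two different reference pitches.
for a4 in (440.0, 415.0):
    print(f"A4 = {a4} Hz -> C4 = {frequency_hz(C4, a4):.2f} Hz")
```

The sign and the key to be pressed stay the same in both cases; only the physical frequency differs, which is why the two differently tuned notes still count semantically as the “same” C4.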

The third of Kroehl’s communication models is Artistic. This model uses connotative information and works in an entirely different manner from the Scientific model (Kroehl 1987). In accordance with the basics of visual communication theory, meaning is generated in this model through interpretation. A photograph, a picture or a drawing cannot be read; it can only be interpreted (Müller 2003). The Artistic communication model conveys possibilities. It is not likely that two people (in our case musicians) interpret or understand a message in exactly the same way and play exactly the same thing when reading from the same source. The decoding might lead to a different result than the source intended in the coding. Thus, the mapping process in the Artistic model is not lossless. The message is rather an invitation for performers to generate meaning by starting their own mapping process. However, the interpretation is not arbitrary, as it is contextualized. A red square in an advertising context will be interpreted differently from a red square in a music notation context. In advertising, a red square could be recognized as a logo of a brand and thereby associated with certain attributes according to the public relations strategy of the company. In a music notation context, a red square could indicate a specific instrument or a certain playing technique according to the composer’s instructions. In contrast to the Scientific model, the Artistic communication model does not aim to develop a normative canon or any kind of universality. Within the context of music notation, it is up to the composer to decide if and how the mapping process is guided. In the context of graphic notation, Mauricio Kagel used the term “determined ambiguity” (Thomas 1965) to describe how composers can set the boundaries for performers. Within the Artistic model, composers are able to give meaning to some graphical attributes within a score while others are left completely open for interpretation.
The level of determination is up to the composer. Scores using the Artistic model are not music notations — they are interpretational and alternative music representations.

Typology

The 3D model shown in Figure 3 allows for the categorization of musical works according to their scores. As its foundation, the typology uses the previously discussed communication models (on the x-axis). The other axes are necessary to differentiate the very diverse approaches in alternative music notation. Some scores only communicate specific aspects of a musical performance, while leaving others out. The meaning of the three axes will be explained in more detail below. The grey spheres display musical works. These musical examples were chosen because some of them take extreme approaches to alternative music notation and are therefore useful to clearly display how the model works. The pieces are described in more detail below.

The 3D coordinate system consists of the following:

- x-axis — interpretational / systematic level. First, there is the clear distinction between a systematic music notation using the Scientific communication model (the right half of the cube) and alternative representations with an interpretational approach using the Artistic communication model (left half of the cube). The farther to the right a work sits within this framework, the stricter the systematic approach and the less interpretational freedom there will be within the communicational model.

- y-axis — level of improvisation. Improvisation in this context means the individual share the performer has to contribute in order to perform the piece. It is an indication of how much effort and how many of their own ideas the performer has to invest in the interpretation. The level of effort invested depends highly on the composer’s intention and willingness to indicate direct connections between graphic and sonic parameters. The clearer the composer’s instructions, the less effort is required of the performer and the closer to the bottom of the y-axis a piece would be classified. A piece without clear instructions, which is open for any kind of interpretation and is merely a trigger for improvisation, such as Earle Brown’s December 1952, is therefore at the top end of the axis.

- z-axis — musical-actional. The distinction between musical and actional is important: “musical” here means that the score depicts the sound itself. For instance, a single graphic displays the sonic attributes of a sound. On the other hand, there are scores which are rather actional in nature. “Actional” means that the score depicts a certain physical action that needs to be executed by the performer in order to produce a sound. Also, the timing of those actions is often indicated quite precisely. In other words, musical and actional refer to whether a score concerns the characteristics of a sound (musical) or its conduct (actional).

- Area of determined ambiguity. In the middle of the cube is an area that reaches only a little into the right side of the cube and about half into the left side. This is an area where the composer sets certain rules and boundaries for a score, in which the performers are free regarding their interpretation.

A typology like this serves as a visualisation of the characteristic parameters of the alternative notational representations of music in order to be able to characterize and compare them, despite their different approaches. However, a typology like this can only be descriptive. The proposed parameters cannot be measured or calculated mathematically. Practice shows that when a score is more systematically notated and tends more toward the Scientific communication model, a lesser degree of interpretative freedom is granted to the performer, and correspondingly less individual initiative will be required. This indicates that the x-axis and y-axis correlate, while the differentiation between musical and actional score remains independent.
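As a purely descriptive aid, a position in the cube could be recorded as a small data structure. The sketch below is an assumption-laden illustration, not a measurement tool: the numeric ranges of the axes, the class name `ScorePosition`, the x-range chosen for the area of determined ambiguity (taken loosely from “a little into the right side” and “about half into the left side”) and the example coordinates are all hypothetical and reflect a subjective placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScorePosition:
    """Subjective placement of a score in the three-axis typology."""
    title: str
    systematic: float     # x: -1.0 interpretational (Artistic) to +1.0 systematic (Scientific)
    improvisation: float  # y: 0.0 none to 1.0 entirely left to the performer
    actional: float       # z: 0.0 purely musical to 1.0 purely actional

    def __post_init__(self):
        # Reject coordinates outside the (assumed) extent of the cube.
        if not -1.0 <= self.systematic <= 1.0:
            raise ValueError("systematic must lie in [-1, 1]")
        if not 0.0 <= self.improvisation <= 1.0:
            raise ValueError("improvisation must lie in [0, 1]")
        if not 0.0 <= self.actional <= 1.0:
            raise ValueError("actional must lie in [0, 1]")

    @property
    def communication_model(self) -> str:
        """Right half of the cube: Scientific; left half: Artistic."""
        return "Scientific" if self.systematic > 0 else "Artistic"

    @property
    def in_determined_ambiguity(self) -> bool:
        """Assumed x-range: about half of the left side, a little of the right."""
        return -0.5 <= self.systematic <= 0.1


# Illustrative, subjective coordinates (not calculated values).
december_1952 = ScorePosition("December 1952", systematic=-0.9,
                              improvisation=1.0, actional=0.5)
print(december_1952.communication_model, december_1952.in_determined_ambiguity)
```

Such a record only fixes a description so that pieces can be compared side by side; the values remain a matter of judgement, as the typology itself is descriptive.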

Examples

The works that follow (in chronological order) represent diverse alternative approaches to music notation and can be used to illustrate the proposed typology. The characteristics of each example are described and it is this analysis that determines the positioning of the pieces (represented as grey spheres) within the 3D model. The positioning is by no means a result of calculations, but rather a subjective evaluation of the pieces’ characteristics.

Earle Brown — December 1952 (1954)

The score for Earle Brown’s December 1952 is one of the most cited in discussions of alternative notation approaches explored by the musical avant-garde of the mid-twentieth century. 2[2. The score for December 1952 and some information on the composer can be consulted on the Graphic Notation’s “Earle Brown” webpage.] It should perhaps not be considered an example of graphic notation — as is typically the case — but rather a musical graphic (Daniels and Naumann 2009). This score clearly uses the Artistic communication model. The composer offers only a few hints on how to read the score. One aspect to consider is the relationships and the sizes of the displayed musical objects (black lines) and the blank spaces between them. Inspired by the artwork of Alexander Calder and Jackson Pollock, Brown himself saw the score as a trigger for spontaneous decisions to be made by the performer (Gresser 2007). Even within the Artistic communication model, the expression is very vague, and the level of improvisation is therefore very high. The instrumentation, the length of the piece and, of course, the music itself are left entirely up to the performer(s). It is also not defined whether the score is to be considered musical or rather actional.

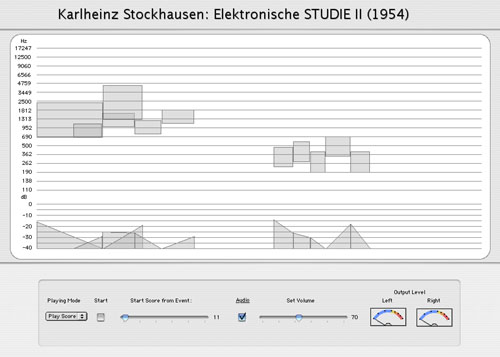

Karlheinz Stockhausen — Studie II (1956)

This famous score is exceptional and has to be understood in its historical context. At the time, the realization of electronic music was done exclusively in a few well-equipped studios by people with a profound knowledge of the studio technology. The foreword of the original score, published by Universal Edition in 1956, states:

This score of Study II is the first Electronic Music to be published. It provides the technician with all the information necessary for the realisation of the work, and it may be used by musicians and lovers of music as a study score — preferably with the music itself.

The introduction is followed by exact instructions about pitch, the mixture of sounds, volume, duration and the realisation. The score itself displays frequencies in hertz and the volume in decibels over time. This approach is very systematic. The score is both musical and actional. Within Stockhausen’s system, the blocks are a representation of “note mixtures”, each consisting of five sinusoidal notes with constant intervals, and thereby convey the sound as such; the blocks are therefore musical. At the same time a block indicates when to start and how long to play a note mixture, while the triangles at the bottom indicate the volume of a block. Those indications, whether addressed to the technician in the studio or, if we hypothetically assume the score could be played live, to a performer, would be rather actional. The score does not leave any room for interpretation or improvisation, since the system is based on mathematics and all indications are very precise. 3[3. Although the human operating a potentiometer to change the level of an individual sine tone in the production of the piece could perform this task “musically” (interpretively), this is not in fact the goal of the piece — this could nevertheless impact the perceived “musicality” of the piece in the form of a residual result. Such an “interpretation” of the parameters would theoretically be absent in computer-based versions of the piece, such as the one produced by Georg Hajdu.]
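How strictly such a “note mixture” is determined can be sketched in a few lines. The sketch relies on figures that are commonly cited for Studie II but are not stated in the text above, and should therefore be read as assumptions: a frequency scale starting at 100 Hz whose steps have the constant ratio of the 25th root of 5. The function names and the chosen example mixture are hypothetical.

```python
BASE_HZ = 100.0            # assumed lowest frequency of the scale
STEP_RATIO = 5 ** (1 / 25)  # assumed constant ratio between neighbouring scale steps


def scale_frequency(step):
    """Frequency of the given step of the assumed scale (step 0 = 100 Hz)."""
    return BASE_HZ * STEP_RATIO ** step


def note_mixture(lowest_step, spacing):
    """Five sine-tone frequencies separated by a constant interval.

    spacing is the number of scale steps between neighbouring partials.
    """
    return [scale_frequency(lowest_step + k * spacing) for k in range(5)]


# Example: a mixture starting on the lowest step with three steps between partials.
for hz in note_mixture(lowest_step=0, spacing=3):
    print(f"{hz:.1f} Hz")
```

Because every value follows from a formula, two realisations that follow the same indications arrive at the same frequencies, which is the sense in which the score leaves no room for interpretation.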

Anestis Logothetis — 12-Tonzyklen im freien Kanon und eine 2-Stimmigkeit (1987)

From the mid-1950s until his death in 1994, Anestis Logothetis composed works exclusively with his own graphic notation system. Three elements form the basis of his notational approach and appear alone or in combinations in all of his scores (Logothetis 1999):

- Pitch symbols refer to note values in Western staff notation and indicate a relative pitch as they are often not accompanied by the typical five-line staff.

- Association factors are graphics that indicate loudness, timbre changes and sound character.

- Action signals are graphics (very often curved lines or dots) that display movement graphically and are to be translated into musical movement.

Additionally, Logothetis uses the actual text in his vocal works, where words and letters are formed into shapes to display the way they should be sung or spoken. His scores generally combine all these elements in complex ways and therefore require a profound examination to be played correctly. Although his approach is quite systematic, it still offers the performers a great degree of freedom of interpretation, or as Mauricio Kagel called it, “determined ambiguity” (see also Fig. 3). The graphics display relations and correlations of elements and not precise instructions.

Pauline Oliveros — Primordial/Lift (1998)

Pauline Oliveros’ 1998 work Primordial/Lift, for accordion, cello, electric cello, guitar/harmonium, violin sampler and oscillator, requires the performers to have two parallel approaches to its interpretation. On one hand, the score reflects Oliveros’ idea of deep listening (Oliveros 2016) in music performance. The score indicates correspondences between listening and instrument playing, but leaves the execution up to the performer. On the other hand, there is an indication of a time structure regarding two different parts of the piece and the usage of the oscillator. The level of improvisation is rather high. Apart from the oscillator, there is no indication of the sounds the performers are expected to play or make. The graphic elements in the score refer to an ideology of how to perceive and work with sounds and music. Therefore, the score is action-oriented and its notation sits on the very edge of a determined ambiguity.

Ryan Ross Smith — Study No. 31 (2013)

Over the years, Ryan Ross Smith has developed his own notation system for dealing with animated notation. His scores use a variety of symbols and organized elements:

- Primitives. Irreducible static or dynamic symbols (e.g., the dots in Video 1).

- Structures. Two or more primitives in some interrelated relationship (dots and arches).

- Aggregates. A collection of primitives, structures and their respective dynamisms that correspond to a single player (circles).

- Actualized indication. The use of a playhead to trigger (see the lines with numbers at the end of the video).

The score is systematic and actional. According to the composer, these features “distinguish Animated Music Notation as a particular notational methodology” (Smith 2015).

Christian M. Fischer — Biological Noise (2016)

The score for my 2016 work Biological Noise is an alternative music representation that I call “musical motion graphic”; it is not a systematic music notation. It was written for electric guitar, effects and live electronics, and uses animated abstract graphics that are interpreted by the performer according to a set of guidelines. As the score manifests as a video, the timing of events and the overall length are determined. There are also guidelines on how to deal with the score. For instance, one guideline is that the interpretation should be coherent and comprehensible. By this, I mean that an individual visual element should have the same corresponding sound throughout the whole piece. On the other hand, the interpretation itself — tasks such as finding, choosing and performing sounds that correspond to the visuals — is left to the performer. The score thus creates a determined ambiguity as discussed in the typology above. Rather than describing actions the musician is expected to perform, the score describes sounds and music that are expected to result from the performance of the work.

Conclusion

Visual communication processes are an integral part of music notation in general. The utilization of visual communication processes offers a simple and practical tool to categorize any kind of alternative music notation. A contextualized identification of the communication model that a score employs is the core of the proposed typology. Within the typology, the communication process (on the x-axis) is linked to the “level of improvisation”, or the effort a performer has to invest to perform a score (on the y-axis). Furthermore, the typology includes a differentiation of whether a score aims to represent sounds or actions (on the z-axis). This three-dimensional model allows for a differentiated classification of alternative approaches to music notation. It is of no significance here whether a score is meant for acoustic instruments or electronic music. The typology is meant to support further discussions of alternative notation approaches as it allows us to position them in relation to new approaches as well as to already-existing notation systems.

Bibliography

Bent, Ian D. “Musical Notation.” Encyclopaedia Britannica. Last updated 12 January 2001. http://www.britannica.com/art/musical-notation [Last accessed 20 February 2017]

Cage, John. Notations. New York: Something Else Press, 1969.

Daniels, Dieter and Sarah Naumann (Eds.). See This Sound. Audiovisuology Compendium: An interdisciplinary survey of audiovisual culture. Cologne: Walther Koenig, 2009.

Dimpker, Christian. Extended Notation: The depiction of the unconventional. Zürich: LIT Verlag, 2013.

Fischer, Christian Martin. “Worlds Collide: Utilizing animated notation in live electronic music.” eContact! 17.3 — TIES 2014: 8th Toronto International Electroacoustic Symposium (September 2015). https://econtact.ca/17_3/fischer_notation.html

_____. “Book of Life: Biological Noise.” 22 June 2016. http://c-m-fischer.de/2016/06/22/book-of-life [Last accessed 20 February 2017]

Gresser, Clemens. “Earle Brown’s Creative Ambiguity and Ideas of Co-Creatorship in Selected Works.” Contemporary Music Review 26/3 & 4 (2007) “Earle Brown: From Motets to Mathematics,” pp. 377–394.

Hajdu, Georg. “Karlheinz Stockhausen: Elektronische Studie II.” http://georghajdu.de/6-2/studie-ii [Last accessed 20 February 2017]

Kroehl, Heinz. Communication Design 2000: A Handbook for all who are concerned with communication, advertising and design. Basel: Opinio Verlag, 1987.

Logothetis, Anestis. Klangbild und Bildklang. Vienna: Lafite, 1999.

Mazzola, Guerino. “Semiotic Aspects of Musicology: Semiotics of Music.” 21 May 1997. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.31.943&rep=rep1&type=pdf [Last accessed 20 February 2017]

Müller, Marion. Grundlagen der visuellen Kommunikation. Konstanz: UVK Verlagsgesellschaft, 2003.

Oliveros, Pauline. “Mission Statement.” Deep Listening Institute. http://deeplistening.org/site/content/about [Last accessed 20 February 2017]

Sadie, Stanley. “Notation.” The New Grove Dictionary of Music and Musicians. London: Macmillan Publishers Limited, 1980.

Sauer, Theresa. Notations 21. London: Mark Batty, 2009.

Shannon, Claude. A Mathematical Theory of Communication. New York: The Bell System Technical Journal, 1948.

Smith, Ryan Ross. Study No. 31 (2013), for 7 triangles and electronics. http://ryanrosssmith.com/study31.html [Last accessed 17 September 2016]

Stockhausen, Karlheinz. Nr. 3 Elektronische Studien — Studie II. London: Universal Edition, 1956.

Thomas, Ernst. Darmstädter Beiträge zur neuen Musik — Notation. Mainz: B. Schott, 1965.

Truax, Barry. Acoustic Communication. New York City: Ablex Publishing Corporation, 2001.
