The Modular Synthesizer Divided

The keyboard and its discontents

Held in Manchester from 24–26 October 2014, the first Sines & Squares Festival of Analogue Electronics and Modular Synthesis was an initiative of Richard Scott, Guest Editor for this issue of eContact! Some of the authors in this issue presented their work in the many concerts, conferences and master classes that comprised the festival, and articles based on those presentations are featured here. After an extremely enjoyable and successful first edition, the second edition is in planning for 18–20 November 2016. Sines & Squares 2014 was realised in collaboration with Ricardo Climent, Sam Weaver, students at NOVARS Research Centre at Manchester University and Islington Mill Studios.

Towards Modular Synthesizers

While experiments involving music and electricity date back to the 18th century, the start of the 20th century saw the development of numerous innovative electronic instruments. Moreover, advances in sound generation were matched by comparable innovations in performance interface; in other words, not only new sounds, but also new ways to play. However, by the early 1930s, the innovative performer-instrument interactions proposed by the likes of the theremin (1920) and Sphäraphon (1924) had been marginalized by a spate of more conventional electronic keyboard designs (Hunt 1999).

Enabled, at least in part, by the technological advances of World War II, the post-war period saw the spread of the electronic music studio in Europe, the United States and beyond. The workflows afforded by these studios were strictly non-real-time and typically labour-intensive. Despite these limitations, the studio could be imagined as a kind of proto-modular synthesizer; a room-sized network of interconnected machines, typically repurposed from test equipment (Holmes 2008, 81). Similar technologies were subsequently miniaturized in Harald Bode’s Modular Synthesizer and Sound Processor (Bode 1984). Created in 1959–60, it was the first real-time modular analogue synthesizer. The Modular Synthesizer and Sound Processor not only heralded instantaneous sound creation and manipulation in a significantly more convenient format, but also real-time programmability (i.e. the ability to be near-instantly reconfigured to alter functionality).

Moog Modular

In the early 1960s, Bode’s ideas were picked up and extended by Robert Moog, a young physics PhD who had previously designed and sold a popular theremin kit. Following input from composer Herb Deutsch, by 1964 Moog had developed his first voltage-controlled modular synthesizer. In October of the same year he presented the instrument at the annual Audio Engineering Society (AES) convention in New York (Manning 2004, 102). The main premise of voltage control was that any module could be connected to and control any other. Thus, in theory at least, control input could come from any available voltage source. However, if this dissolving of the previously fixed connection between performance interface and sound generator seemed to herald greater flexibility and new means of interaction (Brice 2001, 164), Moog ultimately resisted this direction and instead decided to provide a conventional organ-type keyboard as standard (Fig. 1).

This choice may not have been obvious or straightforward. Moog recalls that while he wanted his instrument to support the maximum possible musical variety, he was initially uncertain about the choice of interface. There was also little consensus among his associates. The composer Vladimir Ussachevsky (who also proposed the ADSR envelope generator) argued that the keyboard must be abandoned entirely. By contrast, Herb Deutsch insisted on the necessity of a keyboard (Fjellestad 2004). To compound matters, it was not yet clear exactly who would use a modular synthesizer. Moog initially thought that it would primarily appeal to academic institutions. However, about half of his early customers were commercial composers and professional musicians (Leon 2003), many of whom were skilled keyboard players. Thom Holmes, noting that Moog was typically keen to respond to customer requests, argues that the provision of a keyboard is therefore simply a case of the manufacturer responding to user feedback. He goes as far as to suggest that if the familiar keyboard interface had not been provided, “the ordinary musician would have found the synthesizer to be too disconnected from the reality of simply playing music” (Holmes 2015).

Whether a missed opportunity or a necessary way into a new and alien technology, the organ-type keyboard appears to have been readily accepted. The familiarity of the keyboard is perhaps a significant contributor to the simplicity and usability outlined by Dave Moulton as reasons why the Moog Modular succeeded where “clunky” prior machines such as the RCA Mark I and Mark II synthesizers did not (Moulton 1995). This ease of use, allied to the ready musical compatibility of the keyboard, may help to explain the Moog instrument’s significant and unprecedented infiltration of popular music. For instance, in the mid-to-late 1960s, it featured on records and in concerts by artists as diverse and well known as the Monkees, the Beatles, the Doors, the Rolling Stones and the Grateful Dead (Pinch and Trocco 2002, 118). The Moog Modular was more prominent still on the Switched-On Bach record by Wendy Carlos, and was pictured on its cover. Released in 1968, it became the first classical record to sell half a million copies and thus inspired numerous imitations (Ibid., 8).

Buchla 100 Series

At roughly the same time as the Moog Modular was being developed, Pauline Oliveros, Ramon Sender and Morton Subotnick of the San Francisco Tape Music Center commissioned a new modular synthesizer from musician and engineer Donald Buchla (Manning 2004, 102). The modules were initially labelled “San Francisco Tape Music Center” and sold as “Modular Electronic Music systems” (Battier 2000) but were quickly renamed the Buchla 100 Series Electronic Music Box (Fig. 2). Crucially, Buchla had little or no awareness of the activities of Moog at the time, and the 100 Series differed significantly from its East Coast counterpart in terms of both its philosophy and its practical implementation.

Most notably, Buchla considered his modular synthesizer to be a kind of portable experimental sound laboratory rather than a conventional musical instrument (Dunn 1992). Additionally, Buchla considered from the outset that his synthesizer would be used in live performance. By contrast, and perhaps surprisingly, given the presence of the keyboard, Moog at first thought that use of his modular synthesizer would be limited to the studio (Holmes 2008, 171).

Our idea was to build the black box that would be a palette for composers in their homes. It would be their studio. The idea was to design it so that it was like an analog computer. It was not a musical instrument but it was modular… (Buchla, in Dunn 1992)

The modular synthesizer created by Buchla also differed from the Moog in the way it handled audio signals and control voltages (CV). In the Moog modular the two could be used interchangeably (at least in theory), but the Buchla separated the two. Specifically, audio signals were carried by tini-jax cables, while banana cables carried CV (Barton 2014). The latter is another clue as to Buchla’s intention to create a laboratory for sound.

Audio Signals are simply sounds. They may be generated internally by various modules such as oscillators or harmonic generators, or they may come from external sources such as a tape recorder or microphone. Audio signals may be processed by further devices in the system, such as filters, frequency shifters, reverberation units, etc. (Howe 1969)

For Buchla, this separation helped to guarantee audio quality. It arguably also made the Buchla modular more conceptually similar to playing an acoustic instrument, in that audio signals are acted upon and modified by separate control signals (Barton 2014). 1[1. An approximately comparable example in an acoustic instrument context might be a brass player selectively placing a hand over the bell of a trumpet so as to mute (i.e. filter) its sound output.]

There are also differences between the modular synthesizers of Moog and Buchla in terms of the assumed synthesis model, a division that, respectively, became known as the East Coast and West Coast schools of thought. The subtractive paradigm assumed by the Moog is heavily orientated towards the filter. Usually, several voltage-controlled oscillators are used. Their simple but harmonically rich waveforms are mixed together, and then passed through a low-pass and/or high-pass filter to modify the harmonic content. The filter has a significant role in shaping the overall sound. In contrast, the assumed paradigm of the Buchla is that the sound is primarily forged by a so-called complex (i.e. dual) oscillator that features inbuilt waveshaping and modulation capabilities. What Buchla terms a “frequency and amplitude domain processor,” more commonly termed a lowpass gate, is then used to adjust the final timbre (Richter 2011). Despite these substantial differences between the original Moog and Buchla synthesizers, care must be taken to avoid oversimplification for the sake of convenience. For instance, many subsequent synthesizers have been able to produce both East and West Coast-type sounds (Ibid.), or otherwise hybridized the two paradigms.
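The subtractive signal path described above (harmonically rich oscillators, mixed and then low-pass filtered) can be illustrated numerically. The following is only a minimal sketch under stated assumptions: the function names are hypothetical, the sawtooth is naive (not band-limited), and a simple first-order digital low-pass stands in for the far more elaborate voltage-controlled filters of the actual instruments.

```python
import math
from typing import List


def sawtooth(freq_hz: float, num_samples: int, sample_rate: int) -> List[float]:
    """Naive (non-band-limited) sawtooth in the range -1.0 to 1.0."""
    return [2.0 * ((freq_hz * n / sample_rate) % 1.0) - 1.0
            for n in range(num_samples)]


def mix(a: List[float], b: List[float]) -> List[float]:
    """Equal-weight mix of two signals of the same length."""
    return [0.5 * (x + y) for x, y in zip(a, b)]


def one_pole_lowpass(signal: List[float], cutoff_hz: float,
                     sample_rate: int) -> List[float]:
    """First-order low-pass: each output moves a fraction of the way to the input."""
    coeff = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, state = [], 0.0
    for x in signal:
        state += coeff * (x - state)
        out.append(state)
    return out


if __name__ == "__main__":
    fs = 44100
    # Two slightly detuned oscillators, mixed, then filtered (values are arbitrary).
    raw = mix(sawtooth(110.0, 2000, fs), sawtooth(110.5, 2000, fs))
    filtered = one_pole_lowpass(raw, 500.0, fs)
    print(len(raw), len(filtered))
```

Lowering the hypothetical `cutoff_hz` value smooths the sharp edges of the sawtooth further, which is the sense in which the filter, rather than the oscillator, does most of the timbral shaping in this paradigm.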

Perhaps the most significant difference of all, however, was in the choice of performance interface. Whereas Moog adopted the familiar keyboard, Buchla offered the more open-ended Model 112 and 114, both of which featured touch-sensitive plates, as well as the Model 123 Sequential Voltage Source.

The Model 112 (Fig. 3) featured twelve touch plates and four outputs. Each plate could be tuned to produce any of twelve preselected voltages at the first two outputs. The third output was proportional to the area of fingertip in contact with the plate, providing an approximation of pressure sensitivity, while the fourth provided a pulse output whenever a plate was touched (Buchla 1966, 1). The Model 114 was closely related to the 112 but featured ten independent touch plates, each able to produce a different voltage when touched. The voltages were not fixed or quantized and could be freely tuned by the user. Again, a separate pulse output was also provided (Ibid.). As the first analogue sequencer to feature in a commercial instrument, the Model 123 positioned the 100 Series even further away from the notion of a keyboard instrument. Sequence length could be varied from two to eight steps, and three simultaneous user-determined voltages were output per step (Ibid., 2).
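The behaviour attributed to the Model 123 (two to eight steps, three user-set voltages per step) can be modelled in a few lines. This is a purely illustrative sketch and not a description of Buchla's circuitry: the class name, the `advance` method and the idea of stepping once per call are assumptions made for the example, and the voltages are arbitrary.

```python
from typing import List, Sequence, Tuple

Step = Tuple[float, float, float]


class StepSequencer:
    """Hypothetical model of a sequencer with 2-8 steps and 3 voltages per step."""

    def __init__(self, steps: Sequence[Sequence[float]]) -> None:
        if not 2 <= len(steps) <= 8:
            raise ValueError("sequence length must be between 2 and 8 steps")
        if any(len(step) != 3 for step in steps):
            raise ValueError("each step needs exactly three voltages")
        self.steps: List[Step] = [tuple(step) for step in steps]
        self.position = 0

    def advance(self) -> Step:
        """Return the three voltages of the current step, then move on (wrapping)."""
        voltages = self.steps[self.position]
        self.position = (self.position + 1) % len(self.steps)
        return voltages


if __name__ == "__main__":
    # Freely chosen, unquantized voltages: nothing ties them to a 12-note scale.
    seq = StepSequencer([(1.0, 0.2, 3.3), (1.37, 0.9, 0.0), (2.05, 0.5, 1.1)])
    for _ in range(5):
        print(seq.advance())
```

Because each step stores raw voltages rather than note names, the same three outputs could be patched to pitch, filter or any other voltage-controlled parameter.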

The Minimoog as Archetype

Despite some success, by 1969 Moog had concluded that his modular synthesizer was not only prohibitively expensive, but also too complex to appeal to many musicians. After four prototypes, the Minimoog Model D (Fig. 4) was launched in summer 1970 (Holmes 2015). A portable keyboard synthesizer, it embodied Moog’s desire to create a simpler and more accessible instrument, but also resulted in a relinquishment of modularity and corresponding loss of flexibility. In contrast to the relatively open-ended programmability of the Moog Modular, the Minimoog Model D featured a far smaller number of routing options. Additionally, these options were selectable by rocker switches on the front panel rather than by patching (Holmes 2008, 200).

While sales of the Moog Modular declined after late 1969 and by 1971 had dropped by ninety percent, the Minimoog Model D was an immediate success (Holmes 2013). By the end of 1970, the R.A. Moog Co. was selling three times more instruments than its nearest competitor Buchla (Holmes 2015), and by the time production ceased in 1981 approximately 40,000 Model D units had been sold in total (Holmes 2013). The unprecedented popularity of the instrument served not only to solidify the keyboard-synthesizer connection, but also to reinforce the keyboard-synthesizer format in the minds of users and designers. As Trevor Pinch and Frank Trocco put it, “In the end, keyboards won out, at least for most users” (Pinch and Trocco 2002, 2). Indeed, in the decades that followed, the simpler East Coast paradigm assumed by the Moog synthesizers was generally more influential than the ideas of Buchla, although the latter’s influence can be identified in the instruments of Serge Tcherepnin.

MIDI and the Entrenchment of the Keyboard

While the number of synthesizers and synthesizer manufacturers increased throughout the 1970s, there remained no standard or consistent way for synthesizers produced by different manufacturers to communicate (Manning 2004, 263). The most widely adopted approach, however, was the volts per octave (V/oct) scheme developed by Moog. For Moulton, this had the advantage of being intuitively understood (Moulton 1995). Moreover, multiple octaves could be represented using a relatively small voltage range. For example, if 1 V is chosen to represent the note C1, 2 V would then represent C2, 3 V would represent C3, 4 V would represent C4, and so on. The main alternative to this was the volts per hertz (V/Hz) scheme developed by Buchla. However, the exponential scaling of V/Hz meant that fewer octaves could be “fitted” into a given voltage range. For instance, while C1 and C2 equal 1 V and 2 V, respectively (the same as V/oct), C3 equals 4 V and C4 equals 8 V (Curtis 2010).
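The difference between the two scalings is easy to verify numerically. The sketch below simply reproduces the illustrative figures given above, assuming that C1 sits at 1 V in both schemes; the function names are hypothetical and real instruments differ in their reference points and tolerances.

```python
def volts_per_octave(octaves_above_c1: float, c1_volts: float = 1.0) -> float:
    """Linear-in-pitch scheme: each octave adds one volt."""
    return c1_volts + octaves_above_c1


def volts_per_hertz(octaves_above_c1: float, c1_volts: float = 1.0) -> float:
    """Linear-in-frequency scheme: the voltage doubles with each octave."""
    return c1_volts * 2.0 ** octaves_above_c1


if __name__ == "__main__":
    for octave, name in enumerate(["C1", "C2", "C3", "C4"]):
        print(name, volts_per_octave(octave), volts_per_hertz(octave))
```

Under these assumptions, a hypothetical 10 V ceiling would accommodate nine octaves above C1 in the V/oct scheme, but only a little over three in the V/Hz scheme, since the voltage reaches 8 V at C4 and would need 16 V for C5.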

In a concerted attempt to resolve these incompatibilities, the first version of the Musical Instrument Digital Interface (MIDI) specification was ratified in August 1982 (Hunt 1999). The protocol was well supported by most major manufacturers of the time, and the decision was made to license it freely, thereby further increasing its spread and adoption. MIDI also promised advantages over CV beyond greater cross-compatibility. For instance, Joseph Rothstein and Andrew Swift argue that CV is too rudimentary to describe expressive musical parameters, resulting in dull and lifeless sounds (Rothstein 1992; Swift 1997). MIDI, on the other hand, allows for the specification of exact notes at a precise location in time, and also other layers of data that are necessary for expressive performance. There are also new possibilities that result from the ability to accurately store, edit and play back multiple channels of data simultaneously.

The flexibility and ubiquity of MIDI are such that it may be tempting to consider it as a neutral or universal solution. However, the success of the keyboard synthesizer in the period leading up to MIDI’s design meant that a keyboard interface was assumed, and this assumption became built into the protocol. Specifically, it led to a rigid description of music that was articulated in discrete, keyboard-centric commands for pitch, note-on, note-off, velocity, duration, and assigned sound (Phelps et al. 2004).

The bias towards the keyboard is immediately apparent in MIDI’s Note On and Note Off status bytes. The Note On status byte instructs a MIDI-compatible device to start a note. Two more bytes — pitch and velocity — are also required as part of the Note On command. From the perspective of a keyboard, there is a neat efficiency to this: a key press is not only a simple and economical way to produce these three bytes, but these three bytes also offer a reasonably comprehensive description of that particular musical event. In contrast, if other types of musical interface are used then the act of sound production may be less readily or fully described in three bytes, thereby leading to a loss of information (i.e. there is a less complete description of the action).

To end a note once started, a Note On command must be followed by a separate Note Off command. Without this, a note will continue indefinitely. A Note Off command is usually produced by the release of a previously held key, and requires the same two extra bytes used for the Note On command.
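The three-byte structure of these commands can be made concrete. The sketch below builds raw Note On and Note Off messages using the status values of the MIDI 1.0 specification (0x90 and 0x80, combined with a channel number from 0 to 15); the helper names are hypothetical and no MIDI library is assumed.

```python
NOTE_OFF = 0x80  # upper nibble of the status byte for Note Off
NOTE_ON = 0x90   # upper nibble of the status byte for Note On


def _message(status: int, channel: int, pitch: int, velocity: int) -> bytes:
    """Assemble a three-byte channel voice message: status, pitch, velocity."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in the range 0-15")
    for value in (pitch, velocity):
        if not 0 <= value <= 127:
            raise ValueError("data bytes must be in the range 0-127")
    return bytes([status | channel, pitch, velocity])


def note_on(channel: int, pitch: int, velocity: int) -> bytes:
    return _message(NOTE_ON, channel, pitch, velocity)


def note_off(channel: int, pitch: int, velocity: int = 0) -> bytes:
    return _message(NOTE_OFF, channel, pitch, velocity)


if __name__ == "__main__":
    # A single key press and release of note number 60 on the first channel.
    print(note_on(0, 60, 100).hex(" "))
    print(note_off(0, 60).hex(" "))
```

Everything the protocol knows about the gesture is contained in those six bytes: which key, how fast it went down, and when it came back up. A gesture on a touch plate or ribbon has no equally compact equivalent.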

The specter of the keyboard is also found in the restriction of MIDI data to 127 steps as per the original MIDI specification. For instance, 127 steps can reproduce the discrete pitches of the keyboard quite adequately, producing more than 10 octaves of usable range. However, the horizontal block of a MIDI note 2[2. See, for example, a typical DAW software representation of a MIDI note.] cannot directly represent glissandi or other smoothly time-varying pitches. 127 steps are also a significant limitation for other parameters such as dynamics. There, the coarseness of the transcription can not only result in audible steps and inaccurate notation, but also affect related musical aspects (Ibid., 256). That the keyboard typically sounds groups of notes (i.e. several notes simultaneously) may mask these limitations, but they are more apparent in monophonic instruments where the focus is more overtly on the expressive qualities of individual sounds (Jordà 2005).
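The effect of this resolution limit can be sketched in a few lines. The example assumes 7-bit data bytes (values 0 to 127, as in the original specification) and uses hypothetical helper names; it shows a continuous control value being reduced to a data byte, and a smooth pitch glide collapsing onto whole note numbers.

```python
from typing import List


def to_7bit(value: float) -> int:
    """Quantize a normalized control value (0.0-1.0) to a 7-bit data byte."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 127)


def glissando_note_numbers(start: float, end: float, points: int) -> List[int]:
    """Sample a linear pitch glide and round each point to a whole note number."""
    step = (end - start) / (points - 1)
    return [round(start + i * step) for i in range(points)]


if __name__ == "__main__":
    print(128 / 12)                             # octaves spanned by 128 note numbers
    print(to_7bit(0.300), to_7bit(0.303))       # nearby values share one data byte
    print(glissando_note_numbers(60, 62, 9))    # the glide becomes a staircase
```

However finely the glide is sampled, only the note numbers between its end points can be produced. This is the sense in which a MIDI note cannot directly represent a glissando without resorting to additional messages.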

The difficulties involved in transferring the MIDI protocol to non-keyboard interfaces arguably led to compromised or not-entirely-satisfactory designs. These efforts in turn provided little impetus or compelling reason for users to move away from the familiar keyboard (Hunt 1999). Thus, while some alternative interfaces did appear, from relatively mundane wind controllers to more radical interfaces such as The Hands by Michel Waisvisz and Sound=Space by Rolf Gehlhaar, they did not achieve commercial success (Ibid.). By contrast, MIDI keyboards became an omnipresent feature of music technology in the 1980s and 1990s, equally likely to be found in the bedroom studios of aspiring producers as in professional studios and the labs of schools and universities. Nevertheless, it is notable that MIDI keyboards are not necessarily all alike in terms of their user experience. At one end of the spectrum there are mass-produced units, typically made of cheap plastic. Their keys may essentially be simple binary switches. At the other end of the spectrum are more carefully crafted and expensive interfaces that feature velocity sensitivity, aftertouch and weighted keys, in an attempt to offer the user a sensorially richer, more physical and piano-like experience.

Affordances of the Keyboard

Affordances provide one way to consider how the keyboard might influence those who use it and, correspondingly, their musical output. Initially proposed in 1977 and further developed in the context of visual perception in 1979, psychologist James Gibson’s Theory of Affordances states that affordances are exclusively properties of the environment, independent of an actor’s perception (i.e. the ability to perceive these affordances was not considered). The term was then appropriated by the designer Donald Norman to mean the action possibilities of artefacts. Moreover, affordances can imply how something should or could be used:

[T]he term affordance refers to the perceived and actual properties of the thing, primarily those fundamental properties that determine just how the thing could possibly be used… Affordances provide strong cues to the operation of things. (Norman 1988, 9)

As a simple example, if a rubber ball is held in the hands, it gives perceptual clues that it can be bounced or thrown, and also that it might be tripped over if left on the floor. With the realization that he had seemingly excluded the virtual, Norman updated the concept in 1999 to encompass the perceived action possibilities of software. In this formulation, an affordance can only exist when it is both permitted by the environment and perceivable by the actor.

Perhaps the most immediately apparent affordance of the organ-type keyboard is that it strongly pushes the user towards the 12-tone equal tempered scale common to a large body of Western music. Thus, if not impossible (particularly in the electronic and digital domains), the use of microtonal and non-Western tunings is strongly discouraged and hidden to all but the most dedicated users. Moreover, synthesizers (and especially modular synthesizers) make multiple (and often many) control parameters available simultaneously. However, many of these parameters are continuous in nature, for example filter cut-off frequency, and the stepped / quantized voltages produced by the rigid divisions of the keyboard are ill suited to these applications. The implication is that the keyboard is used primarily (and in some cases only) to control pitch. At best this relegates the manipulation of timbral parameters to being of secondary importance. If the keyboard is played with one hand, the other hand is at least free to manipulate the other available parameters. 3[3. Even if this is in itself a limitation.] However, if organ-style, two-handed keyboard technique is adopted, it may result in other parameters being neglected or even left entirely untouched. In a modular synthesizer that makes many control parameters and patch points available on the front panel for user manipulation, to focus so heavily on just one of these would seem to be terribly inefficient and unnecessarily restrictive. Indeed, the keyboard’s focus on pitch can also be seen as oppositional to numerous broader 20th-century musical tropes. For example, in the past century, unpitched sounds and noise have been accepted into Western art music, and strongly rhythmic (i.e. beat-orientated) musical forms, from hip hop and techno to drum and bass, and Intelligent Dance Music (IDM), have experienced exponential growth worldwide. It is therefore possible to speculate that the specific affordances of the keyboard-synthesizer may have inadvertently limited or marginalized its use.

The affordances of the synthesizer’s keyboard can be contrasted with those of the Buchla 100 Series performance interfaces. Freed from the fixed and equal divisions of a conventional keyboard, its touch plates did not exclude tonal music creation, but rendered microtonal, non-Western and other unconventional scales more visible and accessible, and therefore more likely to be adopted or explored by users (Holmes 2008, 222). Additionally, these raw, unquantized voltages imply that they may be used to manipulate not only pitch, but also any parameter desired. The musical affordances of the Buchla sequencer differ still further from those of a conventional keyboard in that aspects of a performance can be automated. This means that complex or otherwise unplayable sequences can be programmed outside the timeframe of playback, set in motion and then left to run indefinitely. Concurrently, the automation not only reduces the cognitive load on the user, but crucially also leaves both hands free to modify the sequence (or other parameters) as it plays.

The perceived aspect of Norman’s notion of affordance implies that there may be differences in how (and how successfully) action possibilities are communicated to a user. William Gaver makes this explicit by separating affordances into three distinct categories. A perceptible affordance is an instance where an action possibility not only exists, but also is able to be perceived and therefore acted on. A hidden affordance is an action possibility that is not perceivable by the user and therefore cannot be acted on and exploited. Finally, a false affordance is one for which the user perceives that a particular action possibility exists, but in reality it is not possible (Gaver 1991).

The keyboard of a synthesizer is curious in that it may appear superficially identical to a keyboard from the acoustic domain but, even setting aside the differences brought about by programmability, does not necessarily provide the same affordances. That is, the interfaces of acoustic instruments are integrated into and must physically act on the means of sound generation. On one hand, this imposes significant limitations in terms of form and material, even if these have been partially overcome by many years of constant refinement. On the other hand, the necessarily tight connection between the two aspects of the instrument passes rich acoustic vibration back to the user. This haptic feedback is vitally important to many musicians who use it to continuously monitor the response of their instrument and, if necessary, subtly adapt their actions (Marshall 2009). The situation in electronic instruments is fundamentally different. The performance interface is physically separate from the means of sound generation and the two are only loosely connected by cables carrying electrical signals. This means that acoustic vibrations from the instrument’s sound output are no longer passed back to the performer, resulting in an emphatic loss of direct tactile information. From a haptic perspective, the synthesizer keyboard is therefore largely inert. While this does not result in false affordances per se, the subsequent reliance on audible feedback means that the performer-instrument interaction loop, and therefore also the actions of the performer, are modified. For example, with reduced perceptual information available to the performer, it can be more difficult for the performer to comprehend the state of the instrument and make musical decisions in the moment. Thus, in extreme cases, it is conceivable that the performer may resort to simple input so as to reaffirm their effect on the system.

Analogue Revival

It is generally accepted that by the late 1980s analogue synthesizers had reached their nadir, rendered obsolete by more convenient digital synthesizers and samplers. By the early 1990s few manufacturers of modular synthesizers remained and, so the myth goes, analogue keyboard and drum synthesizers could be found in garage sales and thrift stores (Russ 2008, 356). Their low cost made them accessible to a new generation of electronic musicians such as Richard James (Aphex Twin), Richie Hawtin (Plastikman), Robin Rimbaud (Scanner), Daniel Pemberton (Witts 2004), the Chemical Brothers, Orbital, Leftfield and the Prodigy. This proliferation of apparently inimitable analogue sounds did much to revive demand for equipment such as the Roland SH-101, TB-303 (Fig. 5) and TR-808, Korg MS-20 and ARP Odyssey. Indeed, the ensuing fetishization of, and nostalgia for, analogue gear started an upward curve in second-hand prices that continues to the present day. In parallel, new manufacturers such as Novation started to release new analogue synthesizers in an attempt to meet this renewed demand.

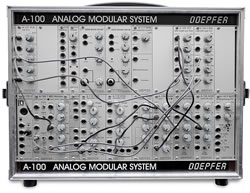

With the release of the Roland TB-303-inspired MS-404 (1994), Dieter Doepfer initially appeared a somewhat minor figure in this analogue revival. The introduction of the Doepfer A-100 modular synthesizer (Fig. 6) in 1995 changed this dramatically (Schneider 2015). It was not only a then-rare example of a new modular synthesizer, but also a tangible manifestation of Doepfer’s newly created Eurorack format (Doepfer 2015). In addition to specifying signal and connector types, power supply and a system bus, the Eurorack standard also set the panel height at 3U (5.25 inches), significantly smaller than traditional modular synthesizers. Just as importantly, Doepfer made the standard freely available, thereby making it possible for others to produce compatible modules.

For three years Doepfer remained the only exponent of the format, but continued to add modules to the A-100 system — some simple utility functions, others more unusual or esoteric. This Eurorack monopoly started to change in 1998. First, the London-based Analogue Systems released the RS Integrator modules that updated classic designs by Moog and EMS. However, these were only loosely compatible with the Eurorack format. Specifically, the RS Integrator power connector and mounting holes required fiddly modification by the user in order to fit in cases produced by Doepfer. Also located in the UK, Analogue Solutions released their first modules later the same year (bouzoukijoe1 2012). Significantly, rather than adopt the conventions of Analogue Systems or propose his own, their proprietor Tom Carpenter chose to closely follow Doepfer’s Eurorack standard.

Encouraged and enabled by the distribution of Doepfer and Analogue Solutions in the United States by Analog Haven, the mid-2000s saw several small American manufacturers produce their first Eurorack modules. Perhaps the most notable were Plan B (2005), Livewire (2005) and The Harvestman (2006), soon followed by Make Noise (2008), Tip Top Audio (2008) and Toppobrillo (2008) [bouzoukijoe1 2012]. Their adherence to the Doepfer specification meant that it became relatively easy to mix modules from different manufacturers in a single system. Nevertheless, the ubiquity of the laptop computer in almost all forms of music meant that Eurorack synthesizers continued to be a niche concern, of interest to only a relatively small number of people worldwide. 4[4. Largely but not exclusively located in Europe and the United States.]

However, the rise of the laptop and its uncritical acceptance has also bred discontent in some quarters. As Kim Cascone presciently commented:

With electronic commerce now a natural part of the business fabric of the Western world and Hollywood cranking out digital fluff by the gigabyte, the medium of digital technology holds less fascination for composers in and of itself. (Cascone 2002)

This reaction against the digital, and the reliance on the computer in musical contexts more specifically, has spurred an assortment of different but related movements. These include hacked toys and other hardware (Ghazala 2005), David Tudor-esque handmade circuits (Collins 2006), the physical interfaces of the New Interfaces for Musical Expression (NIME) community, and also modular synthesizers in general and the Eurorack format in particular. The appeal of Eurorack synthesizers is not necessarily the same for all users. For some it is the tangibility of the user-instrument interaction or the ability to manipulate synthesis parameters directly (i.e. one knob per parameter). For other users it is flexibility or customizability, the diversity of modules available or that the smaller size of the format lends itself to portable systems that can be carried as hand luggage to a gig or while travelling.

In the last five or so years, the increased interest in Eurorack has led to rapid growth in the size of the market and an almost gold rush mentality as more manufacturers clamour to get onboard. Thus, from only 3 manufacturers in early 2004 and 18 at the start of 2010 (bouzoukijoe1 2012), by November 2015 there were 175 different Eurorack manufacturers listed on the popular ModularGrid synthesizer planning resource. These initially tended to be small operations run by one or two dedicated people, but in recent times they have been joined by established music equipment industry manufacturers such as Roland and, most recently, Moog Music Inc.

Surveying the contemporary Eurorack scene, it is notable that the Moog-type subtractive synthesis paradigm remains well represented. However, the influence of Buchla and Serge is also widespread, for instance in the modules of Make Noise, Toppobrillo and (to a lesser extent) Doepfer, as well as in the explicitly Buchla-derived modules of Verbos Electronics and Sputnik Modular. If there is noticeably little emphasis on the keyboard, it is more that the keyboard is no longer privileged; only one of a number of input options, rather than entirely rejected. That is, some users have displaced certain control functions that would likely have been previously assigned to the keyboard by default to alternative interfaces such as joysticks, touch controllers, sequencer programmers and generative elements (Scott 2015).

As interest in the Eurorack format has grown, there have also been attempts to integrate and reconcile modular synthesizers and computers into a single cohesive workflow. In particular, there has been a revival of the hybrid, computer-controlled analogue synthesizer model developed in the late 1960s (Mathews and Moore 1970). For instance, the likes of the Expert Sleepers ES-1, ES-3 and ES-4 audio-to-CV interfaces (Expert Sleepers 2015), as well as numerous new multi-channel MIDI-to-CV interfaces, greatly simplify the clocking and sequencing of a modular synthesizer by the computer. On the computer side, both popular Digital Audio Workstation (DAW) software such as Ableton Live and more open-ended musical programming environments such as Max, Pure Data and SuperCollider can be used to this end. Indeed, the Beap library for Max 7 and Max for Live is explicitly intended to ease this kind of communication:

Beap modules emulate the 1 V/octave signal standard used by most analog modulars. This means that the output of any Beap signal is compatible with the input of any Beap signal. You don’t have to worry about unit conversion or signal scaling. You also don’t have to worry about resolution or stair stepping because every Beap signal is a full resolution, audio rate signal. You can even send a Beap signal to the output of DC-coupled audio interface to control a hardware modular. (Cycling74 2015)

Less common are the Expert Sleepers ES-2 and ES-6 interfaces, which enable communication in the other direction, so that control voltages from a modular synthesizer can be recorded and manipulated in a DAW (Expert Sleepers 2015).
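
To make the 1 V/octave convention mentioned in the Beap description more concrete, the following is a minimal Python sketch of the arithmetic involved when a computer drives a modular synthesizer through a DC-coupled audio interface. It is not taken from Beap or from any Expert Sleepers software. The function names, the choice of MIDI note 36 as the 0 V reference and the 10 V full-scale output are all assumptions made for illustration; real interfaces differ and need to be calibrated.

```python
def note_to_volts(midi_note: float, zero_volt_note: float = 36.0) -> float:
    """Convert a MIDI note number to a 1 V/octave control voltage.

    One octave (12 semitones) equals 1 V, so one semitone equals 1/12 V.
    zero_volt_note is an assumed reference: the note that maps to 0 V.
    """
    return (midi_note - zero_volt_note) / 12.0


def volts_to_hz(volts: float, hz_at_zero_volts: float) -> float:
    """Frequency of an ideal 1 V/octave oscillator: each volt doubles it."""
    return hz_at_zero_volts * 2.0 ** volts


def volts_to_sample(volts: float, full_scale_volts: float = 10.0) -> float:
    """Scale a voltage to a normalized audio sample in [-1.0, 1.0].

    full_scale_volts is an assumed property of the DC-coupled interface:
    the voltage it produces for a sample value of 1.0. Out-of-range
    values are clipped rather than wrapped.
    """
    sample = volts / full_scale_volts
    return max(-1.0, min(1.0, sample))


if __name__ == "__main__":
    # One octave above the reference note corresponds to 1 V.
    cv = note_to_volts(48)
    print(cv, volts_to_sample(cv))
```

Under these assumptions, a constant sample value written to one output channel holds the oscillator at a fixed pitch, and a stream of changing values acts as a sequence or modulation source.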

Future Prospects

The future directions and trajectory of the Eurorack format are not easy to predict, but one possibility would be a crossover with NIME researchers, based on a shared interest in alternative means of interaction. The roots of the NIME field date back at least as far as the Generated Real-time Output Operations on Voltage-controlled Equipment (GROOVE) system created by Max Mathews and Richard Moore (1970), but the field was only formalized in 2001 with an annual conference series. Since then, hundreds of new designs have been presented (Marshall 2009). Most are one-off creations or personal projects used only by their creator, and only a handful have been commercially released. Examples of the latter include the grid-based Yamaha TENORI-ON (now discontinued), the ReacTable multi-touch table and tangible object system (Jordà 2005), and the TouchKeys multi-touch augmentation for piano keyboards.

In addition to a general lack of mainstream adoption, a main criticism levelled at the NIME community is that its focus on the interface is blinkered and leads to other parts of the instrument being neglected. For instance, NIME researchers have only rarely considered the synthesis-related developments presented by Digital Audio Effects (DAFx) researchers (Jordà 2005). However, the control demands of synthesis techniques vary enormously, in the same way that different types of interface each have their own interaction possibilities. Thus, there exists the potential for incompatibility between performance interface and sound generation technique (Ibid.). For example, the discrete triggers of the organ-type keyboard may be a poor fit for the multi-dimensional parameter spaces of granular synthesis.

The potential for future crossover is twofold. Firstly, Eurorack synthesizers may offer NIME researchers a portable and sympathetically tangible sound generation platform for their explorations of musical interaction and input-output mapping. To this end, the flexibility and interoperability afforded by the likes of the Expert Sleepers computer-to-modular interfaces may be particularly useful. For instance, the user interface and sound generation patch can be kept constant while completely changing the mappings of the computer-based intermediary layer. Similarly, the same mapping and sound generation patch can be tried with a wide range of different interfaces.
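
The idea of holding the interface and the patch constant while swapping the intermediary mapping layer can be sketched in a few lines of Python. This is an illustrative sketch only: the control names (`x`, `y`), the patch inputs (`pitch_cv`, `filter_cv`) and the 0 to 5 V range are hypothetical, and the step that would actually send the voltages to a computer-to-modular interface is deliberately left out.

```python
from typing import Callable, Dict

Controls = Dict[str, float]  # normalized interface readings, 0.0 to 1.0
Voltages = Dict[str, float]  # control voltages destined for the patch
Mapping = Callable[[Controls], Voltages]

CV_RANGE_VOLTS = 5.0  # assumed control voltage range of the patch inputs


def one_to_one(controls: Controls) -> Voltages:
    """Each interface dimension drives exactly one patch parameter."""
    return {
        "pitch_cv": CV_RANGE_VOLTS * controls["x"],
        "filter_cv": CV_RANGE_VOLTS * controls["y"],
    }


def one_to_many(controls: Controls) -> Voltages:
    """A single gesture (x) drives both parameters in opposite directions."""
    return {
        "pitch_cv": CV_RANGE_VOLTS * controls["x"],
        "filter_cv": CV_RANGE_VOLTS * (1.0 - controls["x"]),
    }


def apply_mapping(controls: Controls, mapping: Mapping) -> Voltages:
    """Intermediary layer: the interface and the patch never see each other."""
    return mapping(controls)


if __name__ == "__main__":
    gesture = {"x": 0.5, "y": 0.25}  # the same gesture under two mappings
    print(apply_mapping(gesture, one_to_one))
    print(apply_mapping(gesture, one_to_many))
```

Because the interface only produces normalized readings and the patch only receives voltages, either side can be replaced without touching the other. That separation is what would allow one mapping to be compared across several interfaces, or several mappings across one interface.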

Secondly, non-keyboard interfaces for Eurorack have largely been based on historical designs. These include the theremin-inspired Doepfer A-178, the Synthwerks PGM-4x4, Make Noise Pressure Points, and Verbos Touchplate Keyboard. However, the unique aspects of the Eurorack context are not well understood. For instance, what does it mean to transfer a Buchla-style touch plate interface over to the smaller 3U format? There are a few more radically novel examples (in the sense of radical rather than incremental innovation), such as the Voltage Memory by Meng Qi Music, an 84x6 sequencer-programmer able to fulfil a wide variety of functions.

However, there remains little consensus as to what constitutes a successful interface for a Eurorack synthesizer, let alone any guidelines or best practices for the design of such interfaces. It therefore seems pertinent that NIME researchers have not only already proposed seemingly more radical designs, but also explored frameworks for their design and evaluation that could be applied to the Eurorack domain.

Bibliography

Barton, Todd. “Buchla Architecture.” Music, Composition, Buchla Synthesis. February 2014. Available online at http://toddbarton.com/2014/02/buchla-architecture [Last Accessed 24 November 2015].

Battier, Marc. “Electronic Music and Gesture.” In Trends in Gestural Control in Music. CD-ROM edition. Edited by Marcelo Wanderley and Marc Battier. Paris: IRCAM.

Bode, Harald. “History of Electronic Sound Modification.” Journal of the Audio Engineering Society 32/10 (October 1984) pp. 730–739.

Brice, Richard. Music Engineering. Oxford: Newnes, 2001.

Buchla, Donald. The Modular Electronic Music System. San Francisco: Buchla Music Associates, 1966.

bouzoukijoe1. “Eurorack Timeline 1995–2012.” Last updated 21 October 2012. Available online at https://www.flickr.com/photos/85441876@N03/8108965017/sizes/k/in/photostream [Last Accessed 17 November 2015].

Cascone, Kim. “‘Post-Digital’ Tendencies in Contemporary Computer Music.” Computer Music Journal 24/4 (Winter 2000), pp. 12–18.

Collins, Nicolas. Handmade Electronic Music: The Art of Hardware Hacking. New York: Routledge, 2006.

Curtis, Andrew. “Modular Synth Design: V/Hz vs. V/Octave.” 16 July 2010. Available online at http://groupdiy.com/index.php?topic=40066.0 [Last Accessed 11 November 2015].

Cycling74. “Max 7 is Patching Reimagined.” 2015. Available online at http://cycling74.com/max7 [Last Accessed 19 December 2015].

Doepfer, Dieter. A-100 Manual. Berlin: Doepfer Musikelektronik GmbH, 2015.

Dunn, David. “A History of Electronic Music Pioneers.” Exhibition catalogue for “Eigenwelt der Apparatewelt, Pioneers of Electronic Arts.” Linz: Ars Electronica, 1992.

Expert Sleepers. “Module Overview.” 2015. Available online at http://www.expert-sleepers.co.uk/moduleoverview.html [Last Accessed 19 December 2015].

Fjellestad, Hans (Dir.). Moog. 2004.

Gaver, William. “Technology Affordances.” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New Orleans, Louisiana, United States: 27 April – 2 May 1991).

Ghazala, Reed. Build Your Own Alien Instruments. Indianapolis: Wiley Publishing Inc., 2005.

Gibson, James. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979.

Holmes, Thom. Electronic and Experimental Music. 3rd edition. New York: Routledge, 2008.

_____. “The Greatest Moog Albums of 1970.” Noise and Notations. 14 January 2013. Available online at http://thomholmes.com/Noise_and_Notations/Noise_and_Notations_Blog/Entries/2013/1/14_The_Greatest_Moog_Albums_of_1970.html [Last Accessed 30 October 2015].

_____. “Reflection on the Moog Influence.” Bob Moog Foundation. 20 August 2015. Available online at http://moogfoundation.org/thom-holmes-reflection-on-the-moog-influence [Last Accessed 21 October 2015].

Howe, Hubert. User’s Guide to the Buchla Modular Electronic Music System. New York: CBS Musical Instruments, 1969.

Hunt, Andy. “Radical User Interfaces for Real-Time User Musical Control.” Unpublished doctoral dissertation, University of York, 1999.

Jordà, Sergi. “Digital Lutherie: Crafting musical computers for new musics’ performance and improvisation.” Unpublished doctoral dissertation, Universitat Pompeu Fabra, 2005.

Leon, Richard. “Dr Robert & His Modular Moogs, 1964–1981.” Sound on Sound (October 2003). Available online at https://www.soundonsound.com/sos/oct03/articles/moogretro.htm [Last Accessed 30 October 2015].

Manning, Peter. Electronic and Computer Music. 2nd edition. New York: Oxford University Press, 2004.

Marshall, Mark. “Physical Interface Design for Digital Musical Instruments.” Unpublished doctoral dissertation, McGill University, 2009.

Mathews, Max and Richard Moore. “GROOVE: A Program to Compose, Store and Edit Functions of Time.” Communications of the Association for Computing Machinery 13/12 (December 1970), pp. 715–721.

ModularGrid [website]. http://www.modulargrid.net [Last Accessed 22 November 2015].

Moulton, Dave. “Looking at MIDI Through the Wrong End of the Telescope.” Moulton Laboratories. January 1995. Available online at http://www.moultonlabs.com/more/looking_at_midi_through_the_wrong_end_of_the_telescope [Last Accessed 3 November 2015].

Norman, Donald A. The Psychology of Everyday Things. New York: Basic Books, 1988.

_____. “Affordance, Conventions and Design.” Interactions 6/3 (May 1999), pp. 38–43.

Phelps, Roger, Lawrence Ferrara, Ronald H. Sadoff and Edward C. Warburton. A Guide to Research in Music Education. 5th edition. Lanham MD: Scarecrow Press, 2004.

Pinch, Trevor and Frank Trocco. Analog Days: The invention and impact of the Moog Synthesizer. Cambridge MA: Harvard University Press, 2002.

Richter, Grant. “East Coast vs West Coast.” Mamonu Labs. 7 June 2011. Available online at http://mamonu.weebly.com/east-coast-vs-west-coast.html [Last Accessed 19 November 2015].

Rothstein, Joseph. MIDI: A Comprehensive Introduction. Middleton WI: A-R Editions, 1992.

Russ, Martin. Sound Synthesis and Sampling. 3rd edition. London: Focal Press, 2008.

Schneider, Andreas. 20 Years of Löten. Berlin: SchneidersBeuro, 2015.

Scott, Richard. Email Exchange. 4 October 2015.

Swift, Andrew. “An Introduction to MIDI.” Surprise 1 (May–June 1997). Available online at http://www.doc.ic.ac.uk/~nd/surprise_97/journal/vol1/aps2 [Last Accessed 26 October 2015].

Witts, Richard. “Advice to Clever Children: Interview with Karlheinz Stockhausen, Aphex Twin and others.” In Audio Culture: Readings in modern music. Edited by Christopher Cox and Daniel Warner. London: Continuum International Publishing Group, 2004.
