Spectralism and Microsound

One of the defining characteristics of spectral music is its engagement with psychoacoustic phenomena, particularly those involving relationships among the stable frequency components that make up distinctive timbres. The spectral approach is firmly grounded in a scientific understanding of sound, having emerged alongside advances in computerized signal analysis; the Fast Fourier Transform (FFT) was a particularly important source of inspiration for spectral composers. This method of analysis aligns well with human physiology, since the FFT parses a complex signal into frequency components in a way that is analogous to how the structure of the ear responds to them. Although the scope of the label “spectral” is much debated, we can take the term to refer to music that bears the signature of FFT-oriented thinking: viewing sonic phenomena in terms of a frequency/amplitude/time graph, and considering complex sounds as a set of stable sinusoidal partials combined with various unstable noise and transient components. Furthermore, spectral music engages explicitly with the perception of these physical phenomena, evaluating the perceptual relevance of a spectrum’s partials and considering, among other psychoacoustic phenomena, how the ear combines various components into unified percepts or differentiates between disparate sources.

In his book Microsound, Curtis Roads discusses a conception of sound that, in contrast to the FFT, decomposes sonic phenomena into short perceptual units, or “sound grains”. This approach was developed by the British physicist Dennis Gabor, who drew upon ideas from quantum physics in formulating a general, invertible method of signal analysis that can represent any sound as a collection of elementary particles: sinusoids shaped by Gaussian envelopes. Gabor contrasts this conception with Fourier analysis:

Fourier analysis is a timeless description in terms of exactly periodic waves of infinite duration. On the other hand it is our most elementary experience that sound has a time pattern as well as a frequency pattern… A mathematical description is wanted which ab ovo takes account of this duality. (Gabor 1947, 591)

From this perspective, complex sounds are viewed as collections of short sonic events whose durations and temporal arrangement are of great importance. Roads invokes Gabor’s concepts primarily for their application in the electroacoustic technique of granular synthesis; his approach to microsound composition is strongly informed by studies of the many perceptual implications of variations in the temporal features of sound grains.
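To make Gabor’s elementary signal concrete, the following Python sketch generates a single grain: a sinusoid multiplied by a Gaussian envelope. It is a minimal illustration, not Roads’ or Gabor’s own formulation. The function name `gabor_grain`, the use of a real-valued sine (Gabor’s signals are usually written in complex form), and the choice of a standard deviation equal to one sixth of the grain duration are all assumptions made for the example.

```python
import math

def gabor_grain(freq_hz, duration_s, sample_rate=44100, amp=1.0):
    """Return the samples of one Gaussian-windowed sinusoid (a Gabor-style grain).

    Assumption: the Gaussian's standard deviation is one sixth of the grain
    length, so the envelope falls to about 1% of its peak at both ends.
    """
    n = max(1, int(round(duration_s * sample_rate)))
    centre = (n - 1) / 2
    sigma = n / 6
    samples = []
    for i in range(n):
        envelope = math.exp(-0.5 * ((i - centre) / sigma) ** 2)
        samples.append(amp * envelope * math.sin(2 * math.pi * freq_hz * i / sample_rate))
    return samples
```

For example, `gabor_grain(500, 0.02)` returns 882 samples of a 20 ms grain at 500 Hz. Summing many such grains at chosen onset times is the basic operation of granular synthesis.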

Like spectralism, microsound composition is founded in the scientific exploration of acoustics and psychoacoustics, engaging these phenomena as a fundamental aspect of its musical discourse. Since spectralism and microsound composition are primarily considered to be instrumental and electroacoustic genres respectively, the commonalities between the two paradigms are not often exploited; in composition for mixed ensembles, however, concepts and techniques from each may be readily employed. The following discussion will first examine selected psychoacoustic issues from both compositional approaches, and then proceed to explore ways in which these may interact with one another.

Composition with Spectral Materials

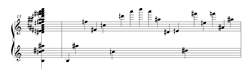

The following series of media examples traces a process of deriving musical materials from a source sound, illustrating some of the concerns inherent in the spectral approach. In this example, the source sound is that of a struck metal plate (Audio 1). Analysis of this sound by the “iana~” Max object yields a set of frequency/amplitude pairs, represented in staff notation in Figure 1. This object applies Ernst Terhardt’s algorithm to an FFT analysis, reducing what is typically an unwieldy quantity of data to a concise set; this is achieved by calculating the masking effects on partial frequencies and amplitudes, then using this information to rank partials according to perceptual relevance. The second measure of Figure 1 presents the pitches of the iana~ analysis ordered in this ranking.
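The idea of ranking partials by how strongly the others mask them can be sketched in a few lines. This sketch is a much simplified stand-in: it is neither Terhardt’s full algorithm nor what iana~ computes internally. It converts frequencies to the Bark scale with the Zwicker and Terhardt (1980) approximation and spreads each partial’s masking with fixed, illustrative slopes. The function `rank_partials` and its slope values are hypothetical.

```python
import math

def bark(f_hz):
    # Zwicker & Terhardt (1980) approximation of critical-band rate in Bark
    return 13 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500) ** 2)

def rank_partials(partials, lower_slope=27.0, upper_slope=12.0):
    """Rank (freq_hz, level_db) partials by how far each one exceeds the
    combined masking produced by all the others. Fully masked partials
    are discarded. Slopes are in dB per Bark and are illustrative only."""
    ranked = []
    for i, (f_i, l_i) in enumerate(partials):
        masking_power = 0.0
        for j, (f_j, l_j) in enumerate(partials):
            if i == j:
                continue
            dz = bark(f_i) - bark(f_j)
            # Masking spreads further upward in frequency, so a masker lying
            # below the target (dz > 0) uses the shallower slope.
            slope = upper_slope if dz > 0 else lower_slope
            masking_power += 10 ** ((l_j - slope * abs(dz)) / 10)
        masking_db = 10 * math.log10(masking_power) if masking_power > 0 else -math.inf
        excess = l_i - masking_db  # dB by which the partial clears the masking
        if excess > 0:
            ranked.append((f_i, l_i, excess))
    ranked.sort(key=lambda p: p[2], reverse=True)
    return ranked
```

Given `[(440, 80), (460, 50), (2000, 60)]`, the 460 Hz partial falls below the masking produced by its loud neighbour and is dropped. The isolated 2000 Hz partial ranks first, even though it is quieter than the 440 Hz partial.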

Re-synthesis of the iana~ data using percussive sinusoids (Audio 2) illustrates the aspects of the original sound that are captured by this analysis. While this re-creation bears a clear aural connection to the source sound, distinctive characteristics of the original are omitted: timbral qualities that result from the frequency and amplitude relationships among stable partials are present, whereas those resulting from differences among individual partial envelopes as well as unstable transient vibrations are not. This example serves to illustrate Gabor’s characterization of the FFT as a “timeless description” of sound; the focus is on the frequencies and amplitudes of stable partials found within a short time frame, while temporal features are de-emphasized.
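A re-synthesis of this kind can be sketched as a sum of exponentially decaying sinusoids, one per frequency/amplitude pair. The sketch below is an assumption about how such a re-synthesis might be built, not the patch used for Audio 2. In particular, it gives every partial the same decay time, which reproduces the omission described above: the individual partial envelopes and transients of the source are absent.

```python
import math

def resynthesize(partials, duration_s=2.0, sample_rate=44100, decay_s=0.5):
    """Additive re-synthesis of (freq_hz, amplitude) pairs as percussive
    sinusoids: each starts at full amplitude and decays exponentially
    (decay_s is the time constant, where amplitude has fallen to 1/e).
    All partials share one decay time, so the source's individual
    partial envelopes and transients are deliberately left out."""
    n = int(round(duration_s * sample_rate))
    out = [0.0] * n
    for freq, amp in partials:
        w = 2 * math.pi * freq / sample_rate
        for i in range(n):
            out[i] += amp * math.exp(-i / (decay_s * sample_rate)) * math.sin(w * i)
    peak = max((abs(x) for x in out), default=0.0) or 1.0
    return [x / peak for x in out]  # normalize to a peak of 1.0
```
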

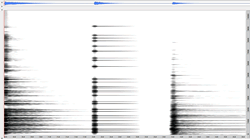

A piano re-creation of the analysis data produces a hybrid sound that combines qualities of the original sound with those of the piano (Audio 3). Although partials above C8 (the highest key on the piano) are omitted, frequencies have been rounded to equal temperament, and amplitude relationships have been approximated, the piano chord captures a distinctive timbral colour of the metal plate; the aural connection between the two is particularly apparent if the listener’s ear has been primed with the original sound. The piano was chosen for this instrumental re-synthesis because of its similarities to the metal plate: both sounds exhibit percussive amplitude envelopes, similar attack noise, and quickly fading high partials above long-ringing low partials. Since each piano note introduces its own harmonic spectrum, transposed copies of the original spectrum appear at integer multiples of its component frequencies; this multiplication gives the resultant timbre its hybrid quality, somewhere between a metal plate sound and a piano chord. Figure 2 depicts FFT analyses of the first three audio examples: source sound, sinusoidal re-synthesis, and piano re-synthesis.
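The translation from analysis data to piano keys involves two mechanical steps: rounding each frequency to the nearest equal-tempered note, and discarding notes outside the keyboard, from A0 (MIDI 21) to C8 (MIDI 108). The sketch below shows one way to do this. The helper names are hypothetical, and keeping the louder amplitude when two partials round to the same key is an assumed policy.

```python
import math

A4_HZ = 440.0
PIANO_LOW, PIANO_HIGH = 21, 108  # MIDI numbers of A0 and C8

def to_piano_chord(partials):
    """Round (freq_hz, amplitude) partials to the nearest equal-tempered
    MIDI note, drop anything outside the piano's range, and keep the
    louder amplitude when two partials round to the same key."""
    chord = {}
    for freq, amp in partials:
        midi = round(69 + 12 * math.log2(freq / A4_HZ))
        if PIANO_LOW <= midi <= PIANO_HIGH:
            chord[midi] = max(amp, chord.get(midi, 0.0))
    return dict(sorted(chord.items()))

def midi_to_hz(midi):
    return A4_HZ * 2 ** ((midi - 69) / 12)
```

Each key that is kept then contributes its own harmonic series, for example `[k * midi_to_hz(m) for k in range(1, 6)]`. This series is the source of the spectral multiplication described above.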

Psychoacoustic Dynamics of Spectralism

Central to the spectral attitude is the idea that timbre and harmony are interrelated phenomena: sounding bodies generate chord-like collections of pitches within their spectra. Spectral music therefore often explores degrees of cohesion within an instrumental ensemble, drawing upon knowledge of psychoacoustics to achieve sonorities that lie within liminal regions between timbre and harmony.

While frequency relationships play a primary role in unifying spectral harmonies, the cohesiveness of a set of pitches relies in large part on “common fate regularity”, described by Daniel Pressnitzer and Stephen McAdams:

The ear tends to group together partials that start together. If several partials evolve over time in similar fashion (this is called common fate regularity), they will have a high probability of coming from the same source: as a matter of fact, a modulation of the amplitude or of the fundamental frequency of a natural sound affects all of its partials in the same way. (Pressnitzer and McAdams 2000, 50)

The synchronized attacks in Audio 3 therefore help to unify the collection of pitches into a cohesive sonority. In the musical applications below, this phenomenon will be exploited in explorations of spectral cohesion.

Psychoacoustic Dynamics of Microsound

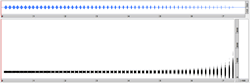

Many of the perceptual concerns engaged by spectral composers bear similarities to those that arise in microsound composition. In a series of sound grains that all share the same pitch, the rate and duration of the grains interact to create varying degrees of temporal cohesion, ranging from the perception of individual pulses to a continuous tone (Audio 4). In traversing this continuum we find an area of ambiguity, where pulses are too rapid to be heard as discrete events, but not fast enough to create a continuous pitch. This recalls the liminal regions between timbre and harmony described above, here in the temporal domain rather than the frequency domain. This ambiguity relies on the phenomenon of forward masking, whereby one impulse tends to mask a second impulse that follows it by less than 200 ms.
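A stream that traverses this continuum can be described by its onset times alone. The sketch below generates a synchronous grain stream whose rate glides exponentially between two values, then flags every gap shorter than the 200 ms forward-masking window mentioned above. The exponential glide and the function names are assumptions made for illustration.

```python
def grain_onsets(start_rate_hz, end_rate_hz, total_s):
    """Onset times (s) of a synchronous grain stream whose rate glides
    exponentially from start_rate_hz to end_rate_hz over total_s seconds."""
    onsets, t = [], 0.0
    ratio = end_rate_hz / start_rate_hz
    while t < total_s:
        onsets.append(t)
        rate = start_rate_hz * ratio ** (t / total_s)  # current grains per second
        t += 1.0 / rate
    return onsets

def forward_masked(onsets, window_s=0.2):
    """Flag each inter-onset gap shorter than window_s (200 ms by default),
    i.e. each grain that falls inside the forward-masking window of the
    previous one."""
    return [b - a < window_s for a, b in zip(onsets, onsets[1:])]
```

With `grain_onsets(2, 100, 3.0)`, the first gap is 500 ms and the last gaps are roughly 10 ms, so `forward_masked` shows where the stream crosses from discrete pulses into the masked region.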

A similar area of perceptual ambiguity arises when successive grains have different pitches. At a low pulse rate, the ear is able to trace a line between grains, constructing auditory streams analogous to melodies. As the rate increases, however, the texture transforms into a cloud of sound grains, perceived as a stochastic mass of an indeterminate number of elements (Audio 5).

By adjusting the parameters of individual grains, microsound composers can explore a range of other psychoacoustic phenomena. Our ability to perceive the pitch of a sound grain is affected by the grain’s duration: shortened below a particular threshold, a clearly pitched grain transforms into a percussive click (Audio 6). (This threshold varies with the grain’s frequency; a pitch of 500 Hz requires 26 ms to be perceived.) As well, the amplitude envelopes applied in granulation will alter the sound grains’ frequency spectra, since any variation in envelope will introduce amplitude modulation effects. This results in a degree of bandwidth widening, the extent of which depends on envelope shape. The aural result of this widening is an increased perception of noisiness; Audio 7 contrasts Gaussian, percussive, and square envelopes.
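The link between envelope shape and bandwidth can be checked numerically. When a sinusoid is multiplied by an envelope, the grain’s spectrum is approximately the envelope’s spectrum shifted to the carrier frequency. Measuring how much of the envelope’s energy lies outside a band around 0 Hz therefore indicates how far the grain spreads. The three envelope definitions below, the band width, and the function names are assumptions made for the sketch. The spectrum is evaluated by direct summation, so no FFT library is needed.

```python
import cmath
import math

def envelope(shape, n):
    """Three illustrative grain envelopes of n samples (shapes are assumptions)."""
    if shape == "gaussian":
        centre, sigma = (n - 1) / 2, n / 6
        return [math.exp(-0.5 * ((i - centre) / sigma) ** 2) for i in range(n)]
    if shape == "percussive":  # instant attack, exponential decay to about -43 dB
        return [math.exp(-5 * i / n) for i in range(n)]
    if shape == "square":
        return [1.0] * n
    raise ValueError(f"unknown shape: {shape}")

def fraction_outside_band(env, sample_rate, band_hz, step_hz=5.0):
    """Approximate share of the envelope's energy lying more than band_hz
    from 0 Hz. A larger share means a wider, noisier grain."""
    total = sum(x * x for x in env) / sample_rate  # Parseval's theorem
    steps = int(round(band_hz / step_hz))
    inside = 0.0
    for m in range(-steps, steps + 1):
        f = m * step_hz
        spectrum = sum(x * cmath.exp(-2j * math.pi * f * i / sample_rate)
                       for i, x in enumerate(env)) / sample_rate
        inside += abs(spectrum) ** 2 * step_hz  # Riemann sum over the band
    return max(0.0, 1.0 - inside / total)
```

For a 20 ms grain at 8 kHz and a ±200 Hz band, the Gaussian envelope leaves almost no energy outside the band. The square and percussive envelopes, with their abrupt edges, leave noticeably more.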

In the domain of instrumental composition, the physical limitations of many instruments put a number of interesting microsonic phenomena out of reach; for example, a pianist may not be able to re-articulate a note faster than 12 Hz, nor shorten notes below 200 ms. However, a surprising number of microsonic concepts may be exploited using acoustic instruments, particularly in combination with electroacoustic sound production.

Musical Applications — “The Damages of Gravity”

I approached the composition of my piece The Damages of Gravity (2014) with the intent of exploring some of the ways that the dynamics discussed above could interact. Scored for two pianos, two percussionists and live electronics, the piece explores spectral and temporal cohesion, liminal regions of pitch and attack perception, and masking effects that obscure the identification of sound sources. The following discussion will first demonstrate these concepts using abstract examples, and then show how they are applied in the piece.

The first example transforms the percussive sound of a struck wooden plank into a metallic sound by sending the signal from a contact microphone through a set of resonant filters tuned to the partials of a source spectrum (Audio 8). The decay times of the resonant filters gradually increase, creating a transition towards continuous pitch. Similarly, as the attack rate accelerates, the percussive aspects of the passage move towards temporal fusion.
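The filter design used in the piece is not documented here. The sketch below substitutes a bank of parallel two-pole resonators, one per partial, whose 60 dB decay time moves linearly across the signal. This lets the ringing lengthen, as in the transition towards continuous pitch described above. The function name, the linear ramp, and the fixed input gain are assumptions.

```python
import math

def resonator_bank(signal, freqs_hz, t60_start_s, t60_end_s,
                   sample_rate=44100, gain=0.01):
    """Feed signal through parallel two-pole resonators, one per frequency.
    The 60 dB decay time of every resonator moves linearly from
    t60_start_s to t60_end_s over the signal, so ringing can lengthen."""
    n = len(signal)
    out = [0.0] * n
    for f in freqs_hz:
        cos_w = math.cos(2 * math.pi * f / sample_rate)
        y1 = y2 = 0.0  # previous two output samples of this resonator
        for i, x in enumerate(signal):
            t60 = t60_start_s + (t60_end_s - t60_start_s) * i / max(1, n - 1)
            # Pole radius r satisfies r ** (t60 * sample_rate) == 10 ** -3 (-60 dB)
            r = 10 ** (-3.0 / (t60 * sample_rate))
            y = gain * x + 2 * r * cos_w * y1 - r * r * y2
            out[i] += y
            y2, y1 = y1, y
    return out
```

An impulse (standing in for a single contact-microphone strike) sent through the bank rings at each tuned frequency. Raising `t60_end_s` above `t60_start_s` makes later strikes ring longer than earlier ones.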

In the second example, the source spectrum used in the first example is granulated, progressively slowing the attack rate and shortening grain durations. This transforms a continuous sound into a series of discrete sound objects, while at the same time exploiting the temporal limit of pitch perception to arrive at a percussive sound (Audio 9).
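This granulation can be sketched as an overlap-add of windowed grains read from a source buffer, with the grain rate and grain duration ramping over the passage. The Hann window, the linear ramps, and the read position that advances through the source in step with the output are assumptions. The example does not reproduce the patch used for Audio 9.

```python
import math

def granulate(source, sample_rate, start_rate_hz, end_rate_hz,
              start_dur_s, end_dur_s, total_s):
    """Overlap-add Hann-windowed grains read from source. Grain rate and
    grain duration are interpolated linearly from start to end values;
    each grain is read from the matching relative position in source."""
    n_out = int(round(total_s * sample_rate))
    out = [0.0] * n_out
    t = 0.0
    while t < total_s:
        frac = t / total_s
        rate = start_rate_hz + (end_rate_hz - start_rate_hz) * frac
        dur = start_dur_s + (end_dur_s - start_dur_s) * frac
        g_len = max(2, int(dur * sample_rate))
        onset = int(t * sample_rate)
        read = int(frac * max(0, len(source) - g_len))
        for k in range(g_len):
            if onset + k >= n_out or read + k >= len(source):
                break
            w = 0.5 - 0.5 * math.cos(2 * math.pi * k / (g_len - 1))  # Hann window
            out[onset + k] += w * source[read + k]
        t += 1.0 / rate  # slowing rate = growing gap to the next grain
    return out
```

Calling it with a falling rate (for instance 50 Hz to 5 Hz) and shrinking durations (50 ms to 5 ms) turns a continuous tone into overlapping grains at first. By the end, the output is a sparse series of short clicks separated by silence.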

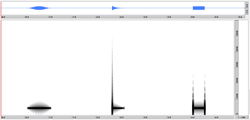

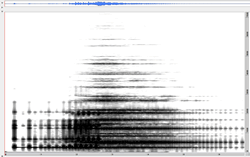

Combining the two preceding examples thus creates a kind of palindrome, where discrete percussive attacks transform to a unified metallic spectrum that in turn transforms into a series of percussive sound grains (Audio 10). Figure 5 shows a spectrogram of this progression; the widening of the bandwidths around each partial towards the end of the passage is a result of the granulation process, and aligns well with our perception of noisy attacks.

Whereas the first set of examples maintains frequency domain cohesion by treating each element in a spectrum equally, the following example takes a step away from such unity. In a technique that I call a “dynamic roll”, a pianist rapidly re-articulates an FFT-based chord while shifting the dynamic weight of each note. This represents an area of transition between a cohesive spectral re-construction and clear differentiation of spectral components. As in an electronic filter sweep, the partials’ independent amplitude envelopes allow them to be perceived individually, without necessarily breaking the impression of a unified spectrum.
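The dynamic roll is a performance technique, but the shifting dynamic weights can be planned numerically, much as one would automate a filter sweep. The hypothetical sketch below is not the notation used in the piece. It computes a weight between a floor value and 1 for every chord note on every stroke, with a Gaussian peak that travels from the lowest to the highest note. The parameters `width` and `floor` are assumptions.

```python
import math

def dynamic_roll(n_notes, n_strokes, width=1.0, floor=0.2):
    """Per-stroke dynamic weights (floor..1) for each note of a chord.
    A weight peak sweeps from the lowest note (index 0) to the highest
    over the roll, loosely imitating an electronic filter sweep."""
    rows = []
    for s in range(n_strokes):
        centre = (n_notes - 1) * s / max(1, n_strokes - 1)
        rows.append([floor + (1 - floor) * math.exp(-0.5 * ((k - centre) / width) ** 2)
                     for k in range(n_notes)])
    return rows
```

Each row can then be mapped to notated dynamics or, for a sampled mock-up, to MIDI velocities, for example `round(127 * w)`.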

Additionally, the dynamic roll helps to disassociate the stable partials from the sound of the attacks. Since these attacks remain relatively constant while the ringing strings change, these two aspects of the sound can be heard to divide into two separate perceptual streams. In Audio 11, an electroacoustic extension of a dynamic roll (divided between two pianos) serves to continue this differentiation: the attack noise is granulated with an accelerating pulse rate and shortening grain duration, removing pitch content as in the first example; at the same time, the sustaining sound of the piano chord is granulated with a decelerating pulse rate and lengthening grain duration, allowing grains to cohere into a continuous sound. In imitation of the dynamic roll, a filter sweep is applied to the continuous stream. To further connect the sustained sound to the source spectrum, the piano chord used in the granulation is passed through a bank of resonant filters tuned to those partials that are beyond the piano’s range.

The third example represents a fully disintegrated source spectrum, using two pianos to generate a cloud of pitches drawn from the set of partials (Audio 12). The players are instructed to create a rapid, randomized texture from a repository of pitches; since they are not synchronized, attacks are likely to occur in rapid succession, often less than 20 ms apart, the time frame within which order confusion may occur (Roads 2000, 5). By touching upon this perceptual boundary, the texture creates the Gestalt effect characteristic of a sound cloud, whereby the impression of individual elements recedes behind a global impression of a scintillating harmonic entity.
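How often unsynchronized players land within 20 ms of each other can be estimated with a small simulation. The sketch assumes that each pianist’s attacks form an independent random (Poisson) process at a hypothetical average of 8 attacks per second. It then measures the share of successive attacks in the merged texture that are closer than 20 ms.

```python
import random

def player_onsets(rate_hz, total_s, rng):
    """Onsets for one player with random exponential gaps averaging 1/rate_hz."""
    onsets, t = [], rng.expovariate(rate_hz)
    while t < total_s:
        onsets.append(t)
        t += rng.expovariate(rate_hz)
    return onsets

def share_within(onsets, window_s=0.020):
    """Fraction of successive onset pairs closer together than window_s."""
    merged = sorted(onsets)
    gaps = [b - a for a, b in zip(merged, merged[1:])]
    return sum(g < window_s for g in gaps) / len(gaps) if gaps else 0.0
```

Under these assumptions, a single player produces sub-20 ms gaps about 15% of the time, while the two players combined produce them about 27% of the time. Adding a second unsynchronized player therefore pushes the texture further into the region of order confusion.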

The physical constraints of the piano limit how far this texture can be modified: the notes cannot be played without attack noise, nor can their lengths or envelopes be manipulated. Audio 13 extends and modifies the piano passage with a texture generated in OpenMusic, removing attack noises, increasing the grain rate, smoothing the amplitude envelopes, and extending the durations of the individual grains. These transformations allow the individual notes to gradually fuse together, moving from a cloud of discrete pitches to a continuous texture. Audio 14 combines Audio 12 and Audio 13, then continues to unify the texture by granulating the sound as a whole, exploiting common fate regularity to give the impression that the components originate from the same source. This passage therefore effects a re-construction of a fully disintegrated source spectrum, manipulating microsonic parameters to facilitate a transition to temporal and spectral cohesion.

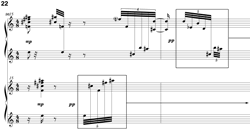

The next two examples draw on passages from The Damages of Gravity to show how the concepts described above have been integrated into a musical texture. The first passage (Fig. 6) treats melodic gestures in a way that recalls the increasingly dense stochastic cloud demonstrated earlier. The passage begins with rapid, disjunct lines that lie somewhere between a melodic gesture and a pitch cloud. These gestures draw progressively nearer to one another, finally reaching a relatively dense texture that is more likely to be perceived as a Gestalt than as separate lines. The passage is punctuated by synchronous (and nearly synchronous) instrumental re-syntheses of the source spectrum, followed by dissolution of this spectrum using the dynamic roll technique. These instrumental ideas are expanded by electronic elements: the repeated piano pitches recall granular pulses and are extended via granular synthesis streams that hover around the boundary between continuous pitch and a series of individual pulses; as well, the dynamic roll gesture is taken over by the electronics, which shorten the series of pulses to transform the pitch content into a series of percussive attacks (Audio 15).

The final example uses stochastic textures to create a transition from the pitches of one spectral source to another. This passage (Fig. 7) begins and ends with synchronous instrumental re-syntheses of the origin and goal spectra, respectively. A series of intermediary pitch collections was generated to interpolate between these two spectra; these pitch collections provided the basis for the randomized boxed gestures that form the centre of the passage. The passage therefore achieves a motion from one spectrum to another, beginning with a unified sound object and decomposing it into drifting fragments that eventually re-assemble themselves into a new sound spectrum. This evolution is reinforced by the electronic elements: the source spectra are presented as unified timbres around the origin and goal piano chords, granular streaming begins to fragment the piano chord, and a percussive layer adds spectral pitches that lie above the piano’s range.
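The method used to generate the intermediary collections is not specified here. The sketch below shows one possible approach. Partials of the two spectra are paired in ascending order, each pair is interpolated linearly in pitch (log frequency), and the results are rounded to equal-tempered MIDI notes. The pairing rule, the interpolation space, and the function names are all assumptions; any partials left without a partner are simply ignored.

```python
import math

def hz_to_midi(f):
    return 69 + 12 * math.log2(f / 440.0)

def interpolate_spectra(origin_hz, goal_hz, steps):
    """Return steps + 1 pitch collections (MIDI note numbers) moving from
    the origin spectrum to the goal spectrum. Partials are paired in
    ascending order; unpaired extras are ignored. Interpolation is linear
    in pitch, i.e. in log frequency."""
    a = sorted(hz_to_midi(f) for f in origin_hz)
    b = sorted(hz_to_midi(f) for f in goal_hz)
    pairs = list(zip(a, b))
    collections = []
    for s in range(steps + 1):
        t = s / steps
        collections.append(sorted({round(x + (y - x) * t) for x, y in pairs}))
    return collections
```

For example, three steps from A3, A4 and A5 to C4, C5 and C6 yield two intermediate collections in which each pitch has moved one semitone closer to its goal.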

Conclusion

The above examples have aimed to illustrate ways in which spectral, FFT-oriented concepts can interact with the temporally oriented concerns of microsound composition. Both paradigms engage issues surrounding cohesion, pitch perception and multi-event textures, and investigate the nature of sound by exploring perceptual boundaries. Although the two approaches have not yet seen significant interaction in musical works, it is my hope that the preceding discussion will suggest directions for further creative investigation in hybrid electroacoustic composition.

Bibliography

McAdams, Stephen. “The Effects of Spectral Fusion on the Perception of Pitch for Complex Tones.” The Journal of the Acoustical Society of America 68 (1980), pp.

McAdams, Stephen and Albert Bregman. “Hearing Musical Streams.” Computer Music Journal 3/4 (Winter 1979), pp. 26–43.

Pressnitzer, Daniel and Stephen McAdams. “Acoustics, Psychoacoustics and Spectral Music.” Contemporary Music Review 19/2 (2000) “Music: Techniques, Aesthetics and Music,” pp. 33–59.

Roads, Curtis. Microsound. Cambridge MA: MIT Press, 2001.

Terhardt, Ernst, Gerhard Stoll and Manfred Seewann. “Algorithm for Extraction of Pitch and Pitch Salience from Complex Tonal Signals.” The Journal of the Acoustical Society of America 71/3 (March 1982), pp. 679–688.

Todoroff, Todor, Eric Daubresse and Joshua Fineberg. “IANA: A Real-Time Environment for Analysis and Extraction of Frequency Components of Complex Orchestral Sounds and its Application within a Musical Realization.” ICMC 1995. Proceedings of the International Computer Music Conference (Banff AB, Canada: Banff Centre for the Arts, 1995).
