Affective Computing, Biofeedback and Psychophysiology as New Ways for Interactive Music Composition and Performance

This text gives an overview of a promising new field of work that uses human emotional responses, captured by means of psychophysiology, biofeedback and affective computing, in music composition and performance. The techniques described here open up a vast number of unexplored possibilities for creating, in a radically new way, personalized interactive musical compositions or performances for which the emotional reactions of listeners are used as input. The text is organized in five sections. The first section contains a characterization of the concept of interactivity in the arts. The second section presents an overview of the fields of psychophysiology, biofeedback and affective computing and of how they can be used in the practice of music composition and performance. Two of the most widely used existing methods of integrating direct human emotional response into performance art, indicated by the terms “sonification” and “interpretation”, are dealt with in the third section, while the fourth describes a historical selection of installations and projects in which biofeedback, psychophysiology and/or affective computing play a central role. These include Music for Solo Performer by Alvin Lucier, On Being Invisible by David Rosenboom and the author’s own EMO-Synth project. The fifth and final section deals with fundamental questions and paradigms that arise from the techniques and concepts described in the paper. The focus there is on how composers and performers can extend or complement their own creative process by using and integrating human emotions quantitatively, as well as on how the use of direct emotional reactions changes the boundaries between artist, audience and artwork. Questions related to authorship and artistic authenticity are also discussed in this concluding section.

Interactivity

Background

Before giving a characterisation of the term “interactivity” we find it important to briefly discuss its position in the context of the current juxtaposition between the open source community and the commercial market systems. In every commercial market, terms such as “innovation”, “interactivity” and “multimedia” are widely used. 1[1. For more background information on topics covered in this section see Beyls 2003, Grau 2007, Packer and Jordan 2002.] But it seems that they function merely as magical marketing terms. Upon closer inspection, the level of innovation or interactivity is in many cases not at all satisfactory. Since the introduction of the Xerox Alto, one of the first personal computers, it seems that on a conceptual level time has been at a standstill in the commercial circuit. Old ideas and methodologies are more often than not simply transformed and presented in new ways for a vast range of different products. It seems that commercial interests are not compatible with the renewing and creative approach of an open source community towards innovation and interactivity. Through their strategies and policies, large corporations have made it clear that they tend to hold on to the heritage of an old model of new media originating in the previous century. This heritage includes the belief in a hierarchical structure of multimedia, the utmost importance of authorship and various royalty systems, not to mention the assumption that multimedia content is always spread via central sources over controlled channels. It should come as no surprise that the contrast with the open source-driven approach is striking. New ways of spreading digital media rhizomatically are being developed, tested and used worldwide, software is being developed as a community practice and integrated interactivity is thoroughly elaborated. For the remainder of this text we will talk a lot about “new media” and “interactivity”. But what exactly do we mean by those terms?

Key Components in New Media or Multimedia Art

With regard to new media or multimedia art, we take the view that it can be characterized by the presence of one or more of the following five characteristics: integration, interactivity, hypermedia, immersion and narrativity.

Integration

The production of most works classified in the field of new media involves the integration of different disciplines and knowledge fields. These fields and disciplines can be both artistic and scientific in origin.

Interactivity

One common characteristic of new media art is that it allows the audience to interact with the work. Within this component of interactivity different levels can be distinguished, from simple responsive systems to systems that adapt themselves to and interact with the behaviour of the audience.

Hypermedia

Upon a closer look one finds that new media art often involves complex nonlinear communication or information networks. Such networks are often designed based on the associative nature of the human thought process. The initial idea of hypermedia was proposed in the pioneering work of Vannevar Bush in the 1940s and of Douglas Engelbart and Ted Nelson in the 1960s. Their work finally culminated in the rise of the World Wide Web, originally proposed in 1989 by Tim Berners-Lee.

Immersion

This characteristic refers to the aspect of “alternate reality” that a lot of new media systems offer spectators, which may diverge, to varying degrees, from their own everyday experience. This alternate reality can be constructed on an auditory as well as a visual level.

Narrativity

A final defining characteristic of new media art is the narrativity of the work. New media art often questions the classical concept of linear narrativity. As a result of this, experiments are being carried out in the field of new media with alternative narrative structures, such as associative or other nonlinear narrative systems.

Towards a Characterization of Interactivity

For this characterization we follow the approach of the Belgian multimedia artist Peter Beyls, whereby interactivity entails four crucial elements: engagement, recursion and reflection, memory, and complexity (Beyls 2003).

Engagement

This feature pertains to the fact that interactivity implies that the participant and the work are, in one way or another, physically connected. The interactive work can be thought of as a network in which the spectator navigates; his or her trajectory may or may not have a fixed beginning or end. The engagement that interactivity implies requires some commitment from the audience. The aesthetics of such works will in most cases reside in an active triangular relationship involving the physical action of the spectator, his or her cognitive inference and the qualitative feedback that is provided during the interaction. Engagement implies that interactivity must be thought of in terms of a circular process wherein both the piece of art and the interactor play an equal and important role in the artistic and æsthetic experience the work provides.

Recursion and Reflection

It is crucial, for a work to be considered interactive, that at least part of the system is of a circular nature. This can translate into a recursive property whereby circular processes feed back into each other. Related and linked to the recursive dimension is the reflective quality that interactivity entails — the spectator will at one point or another be confronted with his or her own position in relation to the work of art. This can be on the physical as well as on the conceptual level.

Memory

A third characteristic feature of interactive systems is the fact that most of them imply the notion of a memory. This memory can refer to a database of audiovisual material that is used to offer the spectator an interactive experience with sound and/or image.

Complexity

As a fourth and final characterizing element of interactivity we consider the presence of complexity that can be scaled on several levels. At the most basic level of complexity interactivity emerges under the form of a so-called responsive system; that is, one that merely responds to input that is provided intentionally or unintentionally by the spectator. The second major level in complexity is formed by adaptive systems. Unlike responsive systems, such systems will adapt their behaviour to that of the user or spectator. The design and implementation of adaptivity often entails the use of dedicated artificial intelligence techniques such as reinforcement learning.
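The difference between the two levels can be made concrete with a minimal sketch. The class names, the numeric input and the simple error-correcting update rule below are assumptions chosen for illustration only; in particular, this is not reinforcement learning, merely the simplest rule that shows a system changing its own behaviour in response to the user.

```python
class ResponsiveSystem:
    """Hypothetical responsive system: the same input always gives the same output."""

    def __init__(self, gain=1.0):
        self.gain = gain

    def react(self, user_input):
        return self.gain * user_input


class AdaptiveSystem:
    """Hypothetical adaptive system: it changes its own gain after every interaction."""

    def __init__(self, target, gain=1.0, rate=0.1):
        self.target = target  # output level the system tries to reach
        self.gain = gain
        self.rate = rate      # how strongly each interaction changes the system

    def react(self, user_input):
        output = self.gain * user_input
        # Adapt: nudge the gain in the direction that reduces the error.
        error = self.target - output
        self.gain += self.rate * error * user_input
        return output


responsive = ResponsiveSystem()
adaptive = AdaptiveSystem(target=2.0)
responsive_outputs = [responsive.react(1.0) for _ in range(60)]
adaptive_outputs = [adaptive.react(1.0) for _ in range(60)]
```

With a constant input, the responsive system keeps returning the same value, whereas the output of the adaptive system drifts towards its target over successive interactions.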

For our present needs, the term “interactive multimedia system” is understood to refer to a system that falls into the category of new media and can be characterized as interactive according to the definitions above.

Affective Computing, Psychophysiology and Biofeedback

Affective Computing

In the 1990s, Rosalind Picard pioneered a subdomain of artificial intelligence known as “affective computing”. In this promising new field, researchers are looking for new ways to realize human-machine interactions by means of human emotions. The term was coined in 1995 by Picard in a ground-breaking paper. Not long after this first publication, Picard founded the Affective Computing Research Group at MIT, and two years later she published an authoritative book on the subject.

The domain of affective computing was greatly influenced by the work of Manfred Clynes, a renowned scientist and musician who conducted ground-breaking research in neuroscience and musical interpretation. With his revolutionary “sentic theory” (Clynes 1977 and 1980) he showed the existence of universal properties in human emotions that can be detected by means of a sentograph, a device that measures the pressure a user exerts with his or her finger on a specially designed sensor. Clynes went on to develop a touch art application called Sentic Cycle, which links the user’s emotional state and finger pressure. Over the years, sentic cycles have had many applications, most of which are situated in the therapeutic field.

Current research in affective computing is situated in various fields, including the detection, recognition, processing and use of human emotions by means of speech, facial recognition, body language and bodily reactions. Even the domain of visual æsthetics does not escape the broad scope of affective computing. At Penn State University, for example, researchers are trying to develop a system that can automatically determine the level of universal beauty of visual material. Here of course the fundamental question arises whether something such as universal beauty exists at all.

Psychophysiology

Working with emotions in a scientific environment implies that knowledge is being used and developed to quantitatively measure such emotions. This is where the fields of psychophysiology and the related field of biofeedback play a key role. Psychophysiology is the subdomain of psychology where researchers are working to better understand the biological base of human behaviour and, more specifically, human emotions. To this end, certain human conditions of test subjects are manipulated in a laboratory setting. Examples of such conditions are stress, attention or emotional state. At the same time, certain psychophysiological reactions or parameters are measured; these refer to bodily reactions that can be correlated to the human psychological state. Examples of such parameters are heart rate (electrocardiogram, or ECG), galvanic skin response (GSR) and brain activity (electroencephalogram, or EEG). Measurement of such parameters is done using appropriate devices, called biosensors. During the experiments, researchers seek to find causal links between the change in the measurements and the change in the subjects’ condition.

Biofeedback

Psychophysiology is closely related to the third field we will briefly describe, namely the field of biofeedback. In the narrow sense, biofeedback pertains to a process by which the user can gain more insight into and learn to control certain (psycho)physiological reactions inside his or her own body. The ultimate aim of practitioners of biofeedback is to be able to manipulate these reactions at will. Biofeedback has proven useful in several therapeutic contexts. 2[2. David Rosenboom’s 1974 publication is the standard reference for the use of biofeedback in artistic contexts. More recently, an entire issue of eContact! was devoted to the subject of Biotechnological Performance Practice (July 2012).]

New Possibilities for Music Composition and Performance

Affective computing, psychophysiology and biofeedback provide promising new ways to extend the process of music composition, as well as performance. These fields open up new possibilities of registering and using human emotions in a very direct and visible way. The communication or expression of emotion is a crucial factor in both music composition and performance: the composer or performer creates or presents a work with particular emotional intentions, while the listener receives these emotional intentions, wholly or in part, through an unconscious process of internalization. Traditionally, this process goes in one direction, from the composer or performer to the audience. It is only after the musical piece has been written or performed that feedback from the audience towards the composer or performer can occur. With new technological advances by which emotions can be assessed and processed by computers, new modalities of emotional communication between composer, performer and audience arise. The concept of a one-way communication from the composer or performer to the audience can be replaced or extended by a fascinating new concept, that of a two-way communication. By measuring and processing the emotional reactions of the audience members (Fig. 4), musical pieces or performances can respond or be adapted to these reactions in real time. The effects of such a process include a greatly enhanced emotional impact of the work on the audience. Moreover, the emotional reactions of the audience can be integrated into a musical composition or performance in ways that were not possible until very recently. Such practices clearly raise very interesting questions about the role and authorship of the composer, or the autonomy of the performer. We will discuss these issues in the final section, below.

Sonification and Interpretation

Classical View on Sonification

With regard to the use of psychophysiological data in the process of music composition or performance, two predominant and different approaches can be distinguished, which we refer to as sonification and interpretation. Before getting into the details of these two approaches, we will briefly clarify the general theoretical background of the field of sonification. As we will come to see, the term “interpretation” as used in this paper can be considered a special case of sonification if we follow the general classical theory as explained in the standard reference on the topic, The Sonification Handbook (Hermann, Hunt and Neuhoff 2011). The classical definition of sonification was proposed by Thomas Hermann in 2010:

Sonification is the use of nonspeech audio to convey information. More specifically, sonification is the transformation of data relations into perceived relations in an acoustic signal for the purpose of facilitating communication or interpretation.

In this definition we see that a lot of stress has been put on the fact that in a sonification process an alternative presentation of information is created by the means of non-speech audio. If we are using psychophysiological information as the main information source, then sonification will thus be any process that transforms this data into non-speech sound and/or that expresses the relations that exist in the data in an audio signal. Note that this definition does not say anything about the nature of the processes or techniques that could be involved. But we will come to this later.

As to the functions that sonification may have, there are four that can be readily and commonly distinguished:

- Alarm, alerts or warnings;

- Monitoring of data or messages;

- Exploration of data;

- Art, leisure, sports and entertainment.

Sonification of data captured using biosensors can fall into any of these four categories. It can be used, for example, to alert someone about or monitor his or her current bodily state. As psychophysiological data, such as brain wave activity (EEG, electroencephalogram), can demonstrate a very high degree of complexity, sonification can help to gain more insight into measured brainwaves. In this way, sonification of brainwaves can be used to both monitor and explore EEG data. The cost of biosensors has decreased drastically in recent years. Moreover, it is becoming easier to measure certain bodily reactions by means of ubiquitous digital devices such as smartphones. This evolution has clearly propelled the sonification of data gathered using biosensors, especially in the fourth category: sonification of psychophysiological data in art and in entertainment environments (e.g., games), for a better experience while doing sports, or in our daily leisure time (e.g., systems that use biometrical data to enhance the experience of listening to music).

As to the techniques used in sonification we will use the classification and characterization as proposed by Hermann. According to this theoretical foundation, five distinct techniques can be distinguished in sonification (Hermann 2011):

- Audification;

- Auditory icons;

- Earcons;

- Parameter mapping sonification;

- Model-based sonification.

This leads into a discussion of the two approaches we distinguish here for the use of biometrical data in musical composition or performance. But before doing so, we describe the five different sonification techniques.

Audification

This is the oldest technique of sonification. Audification is used when the data involved in the sonification process are time ordered or sequential, such as seismic data or the signal coming from an electrocardiogram. A generic example of audification is the process by which inaudible waveforms such as those residing in seismic data are frequency- and/or time-shifted such that they become audible to the human ear.
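The time-shifting step can be illustrated with a minimal sketch, assuming a hypothetical 10 Hz oscillation sampled 250 times per second. Reinterpreting the same samples at an audio rate of 44,100 samples per second compresses time and raises every frequency by the ratio of the two rates. The function name and all numeric values are illustrative assumptions, not taken from any particular audification tool.

```python
import math


def audify(samples, source_rate, playback_rate):
    """Return peak-normalised samples and the resulting frequency scaling factor.

    The samples themselves are not altered apart from normalisation; the
    shift into the audible range comes purely from playing them back faster
    than they were recorded.
    """
    peak = max(abs(s) for s in samples) or 1.0  # avoid dividing by zero on silence
    normalised = [s / peak for s in samples]
    speedup = playback_rate / source_rate
    return normalised, speedup


# Hypothetical recording: a 10 Hz oscillation, 60 seconds at 250 samples per second.
source_rate = 250
recording = [0.3 * math.sin(2 * math.pi * 10 * n / source_rate)
             for n in range(60 * source_rate)]

audio, speedup = audify(recording, source_rate, playback_rate=44100)
audible_frequency = 10 * speedup      # frequency heard on playback, in Hz
audible_duration = len(audio) / 44100  # playback duration, in seconds
```

Under these assumptions the inaudible 10 Hz oscillation is heard well inside the audible range, and one minute of data is compressed into a fraction of a second.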

Auditory Icons

With this form of sonification, non-speech sounds from our everyday lives are mimicked. A typical example of an auditory icon is the sound a computer operating system plays when the trashcan containing deleted files is emptied. Because these sounds mimic natural sounds, their meaning is easily understood when used.

Earcons

In this approach to sonification, artificial sounds are used to warn or inform the listener about something specific but without these sounds having a direct relationship to their intended meaning (e.g., electronic beeps on the computer). As earcons are typically abstract sounds, their meaning must be learned by the target population that will make use of them.

Parameter Mapping Sonification

This sonification technique is what is commonly referred to when researchers or adherents talk about “sonification”. In parameter mapping sonification, certain values arising from the data are mapped in a one-to-one relation with certain parameters that influence acoustic events. In a classical example, values arising from the data are mapped onto MIDI data, which are then used to control synthesized sounds.
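As a minimal sketch of such a mapping, the following maps heart-rate values onto MIDI note numbers with a simple linear rule. The function name, the input range of 50 to 140 beats per minute, the note range and the sample readings are all assumptions made for illustration.

```python
def map_to_midi(value, lo, hi, note_lo=48, note_hi=84):
    """Linearly map a data value in [lo, hi] onto a MIDI note number.

    Values outside the expected range are clamped so that the result always
    stays between note_lo and note_hi.
    """
    clamped = min(max(value, lo), hi)
    position = (clamped - lo) / (hi - lo)  # 0.0 at lo, 1.0 at hi
    return round(note_lo + position * (note_hi - note_lo))


# Hypothetical heart-rate readings in beats per minute.
heart_rates = [58, 62, 75, 90, 120, 140]
notes = [map_to_midi(bpm, lo=50, hi=140) for bpm in heart_rates]
```

The resulting note numbers could then be sent to any synthesizer that accepts MIDI. Other acoustic parameters, such as loudness or tempo, can be driven in the same way by changing the output range.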

Model-Based Sonification

In this approach, the data to be sonified are first transferred into pre-designed dynamical models that produce a certain output. It is then by the use of this output that sounds are generated. This process also offers the listener the opportunity to be involved interactively. The audience can be invited to excite the models to explore the data or listeners can be attached to these models in an unintentional way, thus influencing the models as well as the sound that is being generated.
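A minimal sketch can make the idea tangible, assuming a very simple dynamical model: each data value defines one damped resonator, and striking the model produces the summed response of all resonators. The function names, the frequency and decay ranges and the sample data are illustrative assumptions; actual model-based sonification designs are typically far richer.

```python
import math


def build_model(data, base_freq=220.0, span=440.0):
    """Turn each data value in [0, 1] into one damped resonator (frequency, decay)."""
    return [(base_freq + span * x, 2.0 + 6.0 * x) for x in data]


def excite(model, strength=1.0, duration=1.0, rate=8000):
    """Strike the model once and return the summed response of all resonators.

    The sound is produced by the model's output, not by the data directly;
    `strength` stands in for the interactive excitation by a listener.
    """
    n_samples = int(duration * rate)
    out = []
    for n in range(n_samples):
        t = n / rate
        sample = sum(math.exp(-decay * t) * math.sin(2 * math.pi * freq * t)
                     for freq, decay in model)
        out.append(strength * sample / len(model))
    return out


# Hypothetical normalised data values, for example three biosensor features.
model = build_model([0.1, 0.5, 0.9])
sound = excite(model, strength=1.0)
```

Different data sets yield models that ring differently when excited, which is what allows a listener to explore the data by ear.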

Sonification versus Interpretation

Having described these five different techniques, we can give a precise description of the terms “sonification” and “interpretation” that will stand as a reference for the remainder of this text. By sonification of psychophysiological data we mean the classical techniques of audification and parameter mapping sonification. It is clear from the nature of the data that both possibilities exist and that some overlap between the two concepts may occur. When we discuss the technique of interpretation to integrate biometric data in a musical context, we mean a subclass of the model-based sonification techniques. More specifically, with interpretation we target model-based sonification in which psychophysiological data are interpreted. By “interpreted” we mean that a certain kind of semantic metastructure is attached to the processed data.

Examples of sonification are numerous. Some of them even date back to the beginning of the twentieth century. One of the first real sonification projects can be traced back to 1932, when the German sound artist and pioneer of the soundtrack Oskar Fischinger started to experiment with transforming painted patterns into sound.

On Three Different Interactive Musical Performances and Installations Using Psychophysiology, Affective Computing and/or Biofeedback

In this section, we look at three different musical works in which the use of biometrical data plays a fundamental role: Music for Solo Performer (1965) by Alvin Lucier, On Being Invisible (1977) by David Rosenboom and the “EMO-Synth project” (2009) by the author. 3[3. For more information on Music for Solo Performer, see Austin 2011. More information about On Being Invisible can be found in Rosenboom 1997. More detailed information on the EMO-Synth can be found in previous publications by the author, notably his article in eContact! 14.2, Biotechnological Performance Practice (July 2012), “The EMOSynth: An emotion-driven music generator.”] These examples are also discussed in light of the characterization of interactivity elaborated above and the discussion of sonification versus interpretation.

Alvin Lucier — Music for Solo Performer (1965)

Music for Solo Performer was the first composition and performance that made use of brainwaves. It was composed in the winter of 1964–65 by Alvin Lucier with the assistance of physicist Edmond Dewan, and its first performance was on 5 May 1965 at the Rose Art Museum, Brandeis University. Lucier himself was the performer for the occasion, with assistance by John Cage and electronics by Nicolas Collins. Since then, the piece has been performed many times in concerts by various performers in Europe and America.

At the beginning of the performance, an assistant affixes three Grass Instrument silver electrodes on the solo performer’s left and right temple and one hand using electrical conductive paste, after these areas have been cleaned with alcohol and gauze. The electrodes capture the alpha wave activity of the solo performer. Alpha waves are the low frequency brainwaves of 7.5–12.5 Hz that arise in a state of relaxation with closed eyes (but remaining awake). Several percussion instruments are positioned in the area surrounding the performer, and a loudspeaker is attached to the membrane or playing surface of each instrument. During the performance, streams of alpha waves are triggered when the performer’s eyes are closed and interrupted when he opens his eyes. These are captured by the electrodes and highly amplified by means of a powerful amplifier before being sent to the loudspeakers, so that they provoke sympathetic resonance of the percussion instruments. The choice of which loudspeakers are sent the audio signal at any time is made by the technical assistant.
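The piece itself amplifies the captured signal directly. As a side illustration of what activity in the alpha band means numerically, the following minimal sketch estimates which fraction of a signal's power lies between 7.5 and 12.5 Hz, using a plain discrete Fourier transform. The function name, the sampling rate and the two synthetic test signals are assumptions made for illustration and are not part of Lucier's setup.

```python
import cmath
import math


def band_power_fraction(samples, rate, lo=7.5, hi=12.5):
    """Fraction of the signal's (non-DC) power that lies between lo and hi Hz."""
    n = len(samples)
    total = 0.0
    in_band = 0.0
    for k in range(1, n // 2):  # skip the DC bin, stop below the Nyquist bin
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                    for i, s in enumerate(samples))
        power = abs(coeff) ** 2
        total += power
        if lo <= k * rate / n <= hi:
            in_band += power
    return in_band / total if total else 0.0


# Two seconds of synthetic signal at a hypothetical 250 samples per second.
rate = 250
alpha_like = [math.sin(2 * math.pi * 10 * i / rate) for i in range(500)]  # 10 Hz
faster = [math.sin(2 * math.pi * 30 * i / rate) for i in range(500)]      # 30 Hz

alpha_fraction = band_power_fraction(alpha_like, rate)
faster_fraction = band_power_fraction(faster, rate)
```

For the synthetic 10 Hz signal nearly all power falls inside the band, whereas for the 30 Hz signal almost none does. A real EEG recording would of course contain a mixture of many frequencies and noise.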

If we look at this piece in terms of the characterization of interactivity, we find that from the soloist’s perspective two components are present: engagement and recursion/reflection. Engagement is a crucial condition for Music for Solo Performer, as the soloist is physically connected to the sound-generating system. There is a triangular relation between the soloist, the sound that is produced and the feedback mechanism between the soloist and the music. The feedback system between the sound that is produced as a result of the performer’s activity and his or her subsequent reaction to it provides the performance with a clear recursive quality. Music for Solo Performer also invites the performer to develop an awareness of his or her own brain activity in response to sound that is generated by earlier brain activity, giving it a highly reflective nature. With regard to the discussion of sonification versus interpretation, Music for Solo Performer is a classical example of sonification. More specifically, when viewed from within the classical taxonomy of sonification, the piece is a straightforward example of audification: periodic brainwaves are amplified and played through a set of loudspeakers; the signal emanating from the loudspeakers is then translated into sound via the sympathetic resonance of the percussion instruments upon which the speakers are placed.

David Rosenboom — On Being Invisible (1977)

David Rosenboom’s On Being Invisible was first performed on 12 February 1977 at The Music Gallery in Toronto. The piece was the result of a series of prior projects in which Rosenboom experimented with the use of EEG (electroencephalogram) in a musical context. Central to the work is a self-organizing, dynamic system that generates sounds and music; the performer does not play a classical musical instrument. The title of the work reflects the role of the performer within the piece. On the one hand the performer can act as an initiator of musical events in the piece, while on the other he or she can decide to let his or her dynamic state evolve alongside the dynamics of the system as a whole. At the core of each performance of On Being Invisible lies a software and hardware system that consists of five different components:

- A mechanism, attached to a sound synthesis system, that generates musical structure;

- A model of human musical perception that detects and predicts shifts in attention of the performer;

- The performer that acts as a perceiving and interacting entity;

- An input analysis system for detecting and analyzing incoming EEG data;

- A structure-controlling mechanism that directs the musical structure-generating mechanism and updates the model of human perception in response to the information it gets from the input analysis system.

For a performance of On Being Invisible, the performer is attached via a set of EEG electrodes to the central system that lies at the heart of the work (Fig. 5). During the performance, the system simultaneously generates sounds and music, and attempts to predict the shift in attention of the perceiving and interacting entity — namely, the human performer — using a predictive module. Using this setup, a truly dynamic musical system arises that can evolve between different states. These states can be understood as different configurations of the system that will produce different kinds of musical output. To enhance the contribution of the performer, Rosenboom extended later versions of the system with an option that allowed the performer to manipulate certain parametric sequences used in the music-generating process.

With On Being Invisible, Rosenboom created a pioneering interactive multimedia system, certainly the first of its kind. He had even implemented predecessors of certain techniques commonly used today in the field of artificial intelligence. The work is characterized by all four components of interactivity, as discussed above. Engagement is clearly present if one considers the performer to be at the same time a spectator. He or she is physically connected to the performance and navigates in a network conceived by the dynamic nature of the system. By using EEG signals to align the attention and interest of the performer with the music that is generated, the performer and machine create a very intriguing æsthetic dimension. As the performer’s reaction to the output is sent back to the input for evaluation, recursion is also obviously one of the key concepts. Moreover, the performance allows the performer to be confronted with his or her own inner state, so that a reflective component also characterizes the work. The memory component of On Being Invisible can be found in the design of the central system that is used. By its very construction, this system includes various self-organising systems, each of which includes a memory component that can be temporary or permanent. As these self-organising systems are interconnected, they provide On Being Invisible with a global memory capacity. Ultimately, On Being Invisible is an adaptive system that tries to align its musical output with the reactions of the user. As such, the installation can be considered to exhibit a very high level of complexity.

EMO-Synth Project

In short, the EMO-Synth project is based on an interactive multimedia system, called the EMO-Synth, that is capable of automatically generating and manipulating sound and music in order to bring the user into pre-determined emotional states of varying intensity. Heart rate measuring devices (ECG, electrocardiogram) and stress level metering devices (GSR, Galvanic Skin Response) provide data representing these emotional states. To quantitatively decode emotional reactions of the user, the classical model of the psychology of emotions — which states that emotions can be characterized by the two dimensions of valence and arousal — provides the theoretical base. 4[4. For more on the theory, see the author’s research in Leman et al. 2005 and Leman et al. 2004.] Valence pertains to the positivity or negativity of an emotion, whereas arousal pertains to the intensity of the emotion. With classical biosensors such as ECG and GSR it is only possible to measure the arousal of an emotion. This is also the reason that the quantitative aspect of the emotional response that will be used in the EMO-Synth only relates to this aspect of the response. For ease of processing and calculations, emotional states with four different levels of arousal states are used: low arousal, low average arousal, high average arousal and high arousal.
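The reduction to four arousal levels can be sketched as a simple quantization step. The function name, the equal-width bins and the sample readings below are assumptions made for illustration; the thresholds actually used in the EMO-Synth are not stated here.

```python
LEVELS = ("low", "low average", "high average", "high")


def arousal_level(value, baseline_min, baseline_max):
    """Map a raw biosensor reading onto one of four arousal levels.

    The reading is first normalised against a per-user baseline range
    (assumed to have been measured beforehand) and then split into four
    equal-width bins.
    """
    span = baseline_max - baseline_min
    position = (value - baseline_min) / span
    position = min(max(position, 0.0), 1.0)  # clamp readings outside the baseline
    index = min(int(position * len(LEVELS)), len(LEVELS) - 1)
    return LEVELS[index]


# Hypothetical skin conductance readings with an assumed baseline range of 2 to 10.
readings = [2.0, 5.0, 7.0, 10.0]
levels = [arousal_level(r, baseline_min=2.0, baseline_max=10.0) for r in readings]
```

Working with a per-user baseline rather than absolute values reflects the fact that resting heart rate and skin conductance differ considerably between individuals.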

Using the EMO-Synth involves two phases: a learning phase and a performance phase. During the learning phase the user is connected to the EMO-Synth using biosensors. The system subsequently learns how to bring the user into the four different arousal states with the music and sounds it generates by means of genetic algorithms (Goldberg 1989; Koza 1992 and 1994). With this approach, statistical artificial intelligence models that reside in the EMO-Synth, and that contain the emotional response profile of the user, can be trained. Once trained, these models contain information concerning the kinds of sound or music that can bring the user into a particular type of aroused emotional state. In the genetic algorithm the artificial intelligence models are the individuals in an evolutionary pool that evolves under the Darwinistic rules of survival, mutation and crossover.
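The evolutionary loop of survival, crossover and mutation can be sketched in a few lines. This is a toy version under strong assumptions: the individuals are plain lists of hypothetical sound parameters rather than the statistical models of the EMO-Synth, and the user's measured reaction is replaced by a stand-in function, since no biosensor is available in a code example. All names and numeric settings are illustrative.

```python
import random


def simulated_arousal(genes):
    """Stand-in for a biosensor measurement: a made-up response in [0, 1]."""
    return sum(genes) / len(genes)


def evolve(target, pop_size=20, n_genes=4, generations=40, seed=0):
    """Evolve sound parameters whose (simulated) arousal response matches target."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]

    def fitness(genes):
        # Higher is better: zero means the response matches the target exactly.
        return -abs(simulated_arousal(genes) - target)

    for _ in range(generations):
        # Survival: keep the better half of the pool.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, n_genes)
            child = mother[:cut] + father[cut:]  # crossover
            i = rng.randrange(n_genes)
            child[i] = min(1.0, max(0.0, child[i] + rng.gauss(0, 0.1)))  # mutation
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)


best = evolve(target=0.75)
```

In an actual learning phase, the fitness of each individual would be derived from the user's measured reaction to the generated sound, which makes every evaluation slow and noisy compared with this simulation.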

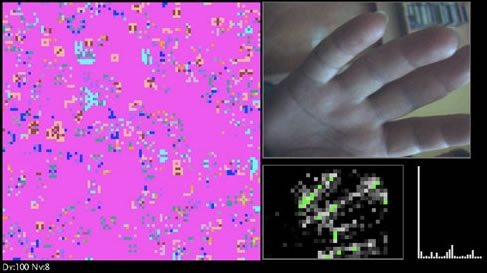

During the learning phase, the emotional feedback of the user is matched to the sounds that are generated by the EMO-Synth. Once the learning phase has been completed, the EMO-Synth is ready to be used as a real-time multimedia performance tool. During performance, the artificial intelligence models that were constructed in the learning phase are used to produce personalized soundtracks for live visuals in real time. The live visuals consist of sequences of video fragments that have been entered into a database in the EMO-Synth beforehand. Each video fragment is annotated according to the arousal component of the emotional impact it is intended to have on the viewer. The emotional impact of the soundtrack generated by the EMO-Synth underneath the video fragments will then match with the annotated emotional intensity of each video fragment. To make this possible the EMO-Synth uses the artificial intelligence model that was developed in the learning phase for the same user. An audiovisual experience then arises in which the arousal component of the emotional impact for the user of the visual material is maximally reinforced by the generated sounds and music. The soundtracks of the individual performances involve not only digital audio but also live musicians (Fig. 6). The live audiovisual concerts that result from this procedure tend to be unique and are entirely based on the emotional response profile of the user.
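The matching step of the performance phase can be sketched as a lookup from the annotated arousal level of each video fragment to the kind of sound that the trained model associates with that level for a given user. The data structure, the fragment names and the contents of the user model below are hypothetical placeholders, not the EMO-Synth's actual data format.

```python
from dataclasses import dataclass


@dataclass
class VideoFragment:
    name: str
    arousal: str  # annotated level: "low", "low average", "high average" or "high"


def soundtrack_for(fragments, user_model):
    """Pair every fragment with the sound the user's model links to its arousal level."""
    return [(fragment.name, user_model[fragment.arousal]) for fragment in fragments]


# Hypothetical result of a learning phase for one user.
user_model = {
    "low": "slow drone",
    "low average": "soft pulse",
    "high average": "dense rhythm",
    "high": "loud clusters",
}

# Hypothetical annotated video database.
fragments = [
    VideoFragment("opening", "low"),
    VideoFragment("chase", "high"),
    VideoFragment("aftermath", "low average"),
]

cues = soundtrack_for(fragments, user_model)
```

Because the user model differs from person to person, the same sequence of video fragments would be paired with different sounds for different users.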

If we consider the user to be a spectator, the EMO-Synth can be understood as a truly interactive multimedia installation. It has a recursive and reflective nature, although its recursivity is mainly found in the learning phase. The artificial intelligence models used have an implicit memory component and the use of genetic programming implies that a high degree of complexity is integrated into the system. In terms of engagement, during the learning phase the user navigates a network formed by the genetic algorithm. During performances the user and the EMO-Synth play an equal role in providing an artistic and emotionally thriving audiovisual experience. The EMO-Synth project offers a classical example of model-based sonification using statistical artificial intelligence models containing the emotional response profiles of the users of the EMO-Synth.

Questions and Paradigms

The first interesting new paradigm we wish to discuss is the new view on artistic creativity that results from the use of psychophysiological data in an artistic context. Such practices offer numerous inventive ways of extending and broadening the artistic creative process. This of course depends on how we characterize and look at the concept of human creativity. My own experiences as both mathematician and artist have given me very different but complementary perspectives on “creativity”. Working in the field of mathematics requires, on the one hand, a certain rational skill set, and on the other hand, a non-rational intuition. The creative process then arises from the interplay of the two. When looking at the artistic creative process, a similar reasoning applies: there is a set of skills the artist has to master as well as an irrational component that is the driving force behind the creation of new work. However, there is an important distinguishing factor between creativity in mathematics and creativity in artistic practice: at the risk of generalizing, we might state that at its core every piece of art, be it music, multimedia, painting or another art form, is an attempt by its creator to communicate on an emotional level with the audience. Thus, emotion is inherently present in the artistic creative process, whereas in a mathematical context this is very seldom the case. With the use of affective computing, biofeedback and/or psychophysiology, new ways can thus be designed to complement and even enhance the emotional artistic experience of the audience. This gives rise to new possibilities to extend or complement the way in which the artist communicates on an emotional level through the work, and to new ways of characterizing that communication (Fig. 7).

A second very interesting issue raised by the use of bodily reactions in the process of composing or performing music is the concept of authorship. In many systems using psychophysiology, bodily responses determine part of the compositional or performative process. The question thus arises of who the author of this component of the final composition or performance might be: is it the person from whom these bodily responses originate, or is it the person who designed the system to integrate these bodily reactions as a sort of virtual composer or performer?

And this brings us to a third paradigm, which offers huge potential for extending and rethinking the design of interactive systems. The techniques described here redefine the triangular relationship between the audience, the artist and the music that is composed or performed. By integrating the direct emotional responses of the audience into the creative act, the boundaries between these three agents begin to dissolve. Central to this process is the imposition of feedback mechanisms that integrate the emotions of these three agents. As a consequence, the roles that each has within the relationship become not only interchangeable, but also redefined. Moreover, from a classical point of view, there is a certain temporal order in this triangular relationship. There is first the artist who creates a work that is presented to the audience. After the presentation of the work, the feedback of the audience can be used in a subsequent creative process for the creation of a new work. Using biosensors offers a new way of extending this process in a very direct way. The intimate and physical connection between the work and the audience allows the audience to influence the work in a direct and interactive manner. The use of bodily reactions radically alters the classical temporal order such that the audience can simultaneously experience and influence the artistic work in real time. As the psychophysiological reactions of the audience will determine part of the artistic output and creation, the artist is no longer required to present a completely finished and immutable work; the final form of the piece can now be determined during its presentation or performance.

Biofeedback techniques also open up new possibilities for the artist to compose or perform new works. For example, in Third Hand, Stelarc uses psychophysiological reactions to extend his creative process. Using biosensors, the artist can get more insight into his or her own creative process and gain new artistic inspiration guided by his or her own emotions. Note that the artist’s psychophysiological reaction is a reaction to what he or she is creating and is used at the same time to create the art work. In this way, an intriguing feedback mechanism arises that shows a lot of similarities with the feedback mechanism often found in biofeedback methods. It is in this way that we can even see the use of bodily reactions in an artistic context as an extension of the classical use of biofeedback as a therapeutic method. It can bring certain unconscious mechanisms of the artist to the surface, where they can be used in his or her performance.

As a final note, I wish to express my belief that the use of affective computing and related techniques is by no means a plea for the replacement of the artist by a computer. Crucial for me in this discussion is the so-called cognitive paradigm that has been around in artificial intelligence research for over two decades (De Mey 1992). At the core of this paradigm lies the desire to recreate human intelligence via an artificial system, such as a computer or other digital system. The failure to realize this paradigm showed that every programmable machine that is discrete and finite clearly has its limitations. But if we look at human thought, behaviour and the dynamics of emotions, research shows more and more that continuous, infinite and chaotic processes are involved (Strogatz 2000). And it is these processes that I believe could never be modelled using any machine. By this statement I place myself amongst the adherents of the weak artificial intelligence movement (Searle 1980). In short, the weak artificial intelligence group rejects the claim that true intelligence can be created by machines or computers. One of the arguments used by this movement is Gödel’s incompleteness theorem, which showed that even for something as simple as the natural numbers with their usual arithmetic there exists no axiomatic system that is both consistent and complete. Every consistent axiomatic system one tries to develop to describe the natural numbers will always lead to a theory containing mathematical statements that are true but cannot be proved within that system. The argument that Gödel’s theorem provides for the weak artificial intelligence movement is that if something as simple as the concept of natural numbers cannot be modelled by a computer, how could we realistically expect that the human brain could ever be modelled by a computer?

Opposed to the weak artificial intelligence movement are the adherents of the strong artificial intelligence movement (Goertzel and Wang 2007), who simply believe that matters of computational power and software limitations are all that currently prevent us from designing truly intelligent artificial machines. And it is one small step further to suppose that if one succeeded in making such a machine, one could also succeed in giving it an emotional consciousness. With such a rationally and emotionally intelligent agent, one could presume that building an artificial artist comparable to a real human artist would not be far off.

Bibliography

Austin, Larry, Douglas Kahn and Nilendra Gurusinghe (Eds.). Source: Music of the Avant-garde, 1966–1973. Oakland CA: University of California Press, 2011.

Beyls, Peter. “Interactiviteit in context / Interactivity in context.” KASK Cahiers 4 (2003).

Clynes, Manfred. Sentics: The Touch of Emotions. Anchor Press/Doubleday, 1977.

_____. The Communication of Emotion: The theory of sentics. New York NY: Academia Press, 1980.

De Mey, Marc. The Cognitive Paradigm. University of Chicago Press, 1992.

Goertzel, Ben and Pei Wang (Eds.). Advances in Artificial General Intelligence. IOS Press, 2007.

Goldberg, David E. Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley, 1989.

Grau, Oliver (Ed.). Media Art Histories. Cambridge MA: MIT Press, 2007.

Hermann, Thomas. “Sonification — A Definition.” 3 November 2010. Available online at http://sonification.de/son/definition [Last accessed 23 January 2014]

Hermann, Thomas, Andy Hunt and John G. Neuhoff (Eds.). The Sonification Handbook. Berlin: Logos Verlag, 2011.

Koza, John R. Genetic Programming: On the programming of computers by means of natural selection. Cambridge MA: MIT Press, 1992.

_____. Genetic Programming II: Automatic discovery of reusable programs. Cambridge MA: MIT Press, 1994.

Leman, Marc, Valery Vermeulen, Liesbeth De Voogdt, Dirk Moelants and Micheline Lesaffre. “Prediction of Musical Affect Attribution Using a Combination of Structural Cues Extracted from Musical Audio.” Journal of New Music Research 34/1 (2005) “Expressive Gesture in Performing Arts and New Media,” pp. 37–67.

Leman, Marc, Valery Vermeulen, Liesbeth De Voogdt, Johannes Taelman, Dirk Moelants and Micheline Lesaffre. “Correlation of Gestural Musical Audio Cues and Perceived Expressive Qualities.” In Gesture-Based Communication in Human-Computer Interaction. Lecture Notes in Artificial Intelligence 2915. Berlin: Springer Verlag, 2004, pp. 40–54.

Packer, Randall and Ken Jordan. Multimedia: From Wagner to Virtual Reality. W.W. Norton and Co., Inc., 2002.

Picard, Rosalind W. “Affective Computing.” MIT Technical Report 321. Cambridge MA: MIT, 1995.

_____. Affective Computing. Cambridge MA: MIT Press, 1997.

Rosenboom, David. Biofeedback and the Arts: Results of early experiments. Vancouver: Aesthetic Research Centre of Canada, 1974.

_____. Extended Musical Interface with the Human Nervous System: Assessment and Prospectus. Leonardo Monograph Series No. 1. Cambridge MA: MIT Press, 1997.

Searle, John R. “Minds, Brains and Programs.” Behavioral and Brain Sciences 3/3 (1980), pp. 417–457.

Strogatz, Steven H. Nonlinear Dynamics and Chaos: With applications to physics, biology, chemistry and engineering. Studies in Nonlinearity. New York NY: Perseus Publishing, 2000.

Vermeulen, Valery. “The EMOSynth: An emotion-driven music generator.” eContact! 14.2 — Biotechnological Performance Practice / Pratiques de performance biotechnologique (July 2012). https://econtact.ca/14_2/vermeulen_emosynth.html

_____. “The EMO-Synth, an Intelligent Music and Image Generator Driven by Human Emotion.” GA2012. Proceedings of the 15th Generative Art Conference (Lucca, Italy: 2012). Available online at http://www.generativeart.com/GA2012/valery.pdf [Last accessed 23 January 2014]
