LANdini

A networking utility for wireless LAN-based laptop ensembles

Background and Motivation

Wireless Routers Are Good

The two laptop ensembles of which the authors are a part — the Princeton Laptop Ensemble (PLOrk) and Sideband — both use wireless routers for group networking. This is a decision based on convenience and logistics: not having to worry about cables dramatically reduces the setup and takedown time for performances and rehearsals, and makes it easy to scale the ensemble up or down in size. In the case of PLOrk, which has at times exceeded 30 simultaneous performers, these are extremely valuable features. Performing without the need for networking cables also affords significant freedom in the performance positioning of both individual performers (up in balconies, spread out around the audience, etc.) and the ensemble (such as playing outdoors or in non-traditional venues). Even confined to on-stage setups, the presence of cables can impede fluid re-arrangement of performer stations from piece to piece, which is a common element in both PLOrk and Sideband concerts. In order to stay as flexible as possible, both PLOrk and Sideband intend to use wireless routers for the foreseeable future.

Wireless Routers Are Bad

As a trade-off for all of the logistic convenience afforded by wireless routers, PLOrk and Sideband have had to deal with the less reliable performance of UDP over wireless systems for OSC communication (Wright, Freed and Momeni 2003), as well as the propensity of routers to occasionally drop users from the network. Latency and dropped packets have been a constant source of trouble for any piece in our repertoire that uses networking and, while some pieces can tolerate a dropped packet or timing inconsistencies, others are structured such that enough networking errors cause the piece to fail. This is a well-known problem amongst laptop-ensemble musicians and has been the subject of other academic inquiries (Cerqueria 2010). Because of this, ensembles such as Louisiana State University’s Laptop Orchestra of Louisiana (LOL) and Virginia Tech’s L2Ork choose to make the trade-off in the other direction and perform with a wired router and a mass of Ethernet cables (Beck 2011; Bukvic 2011).

Previous Solutions Have Left Us Unsatisfied

Composers who have worked with PLOrk and Sideband have approached the problems of wireless unreliability in different ways from piece to piece.

To deal with dropped packets, some pieces take a “shotgun approach” by sending redundant OSC messages in short bursts in hopes that one of them will make it to its destination. This method is not guaranteed to work and has the potential to create a lot of overhead, depending on the number and density of messages that need to be transmitted in this fashion.

For network sync, some pieces have tried a simple server-side broadcast that either gets picked up or not on the client computers, with predictable results in terms of reliability and timing. Other pieces have employed versions of Cristian’s Algorithm to coordinate execution time across different computers; these solutions are often paired with the shotgun approach to set up timed messages in a network, again with imperfect results.

Pieces requiring a specific spatial ordering of the players often require the players to select their user number manually before launching the patch — in one memorable case, a guest composer had written a piece that required everyone to change the network sharing name of their laptop to an integer and re-login in order to run the piece!

All of the methods above (with the exception of the username change) have worked well enough for composers to keep using them, but the current situation has three main drawbacks:

- Composers are wasting time solving problems of user-list maintenance, reliable message delivery and useable timing anew with each piece. PLOrk and Sideband have both experienced lost rehearsal time and significant individual coding time because of these problems.

- In addition to regular idiosyncratic behaviour within the piece itself, each composer’s approach to solving the above-mentioned networking issues potentially introduces another layer of erratic behaviour.

- Composers sometimes avoid certain networking strategies altogether for fear of failure in a live situation. While pragmatic, this is obviously bad for the state of the art as a whole.

A Proposed Solution

A solution to the above situation is to relegate all networking duties to a separate application, which would run in the background for the duration of a rehearsal or concert. This modular approach directly addresses the three problems listed at the end of the previous section:

- Composers would no longer need to worry about how to deal with networking problems.

- Faults with basic networking issues would be easily traceable back to one common application. Assuming a stable enough application, this would make networking problems both rarer and easier to address as they come up.

- Composers could feel free to explore uses of networking that had hitherto felt too risky or complicated.

Our current attempt at addressing these problems is a software utility we are developing, named LANdini. (The name LANdini was coined by Sideband member Lainie Fefferman as a play on local area networks (LANs) and the famous 14th-century composer of the same name.) It is still in an early stage of development, but has to date been used in performance five times with encouraging results. It addresses issues with delivery and timing, and implements some extra features that we thought would be useful, such as the “stage map” (see the “Stage Map” section below).

Pre-Existing Solutions

There are other laptop ensembles who have also worked on solutions. One prominent example is OSCthulhu (McKinney and McKinney 2012), a similarly motivated application developed by the group Glitch Lich (in particular Curtis McKinney). Its lack of clear documentation was an initial hurdle to our adopting it, and it is built around a state-based model of information flow that we did not feel drawn to. It also lacked some of the features that we envisioned, such as the “stage map” feature (described in the following section).

Neil Cosgrove’s LNX Studio (2012) is quite a different type of application, being a collaborative music-making environment that can work over LANs and Internet connections. It is extremely well implemented, but it is not designed to be a networking utility. What it does have is an impressively resilient network sync and message delivery system and excellent network time synchronization. The code is open source and many of the features of LANdini were the result of studying and adapting solutions that were used in LNX Studio.

Ross Bencina’s OSCGroups (2005) is a core component of LNX Studio, but in that context is used for Internet connections, which it was primarily designed for. Cosgrove’s code for LAN connections uses classes of his own making which feature the same API. Since the authors of this paper are interested in LAN-based music, we elected to follow Cosgrove’s example and implement our own solutions, leaving OSCGroups to those who are working over Internet connections.

LANdini’s Implementation

Here we describe a series of features that we felt would be reasonable demands to make of any networking utility that was going to be truly useful, and explain how they are currently implemented in LANdini. It should be noted that while LANdini was developed primarily for wireless networks, some of the features described could be useful for laptop ensembles on wired networks as well.

Self-Contained

LANdini is a simple double-clickable application that does not require any extra installs. At the moment, LANdini is implemented in SuperCollider (McCartney 2002) to run on Mac OS 10.6+. SuperCollider was used because it was the language Narveson knew best, it is expected to work cross-platform (though this has not been implemented and tested, due to a lack of Linux and PC machines) and it allows the user to easily create stand-alone applications. SuperCollider does not currently support sending OSC via TCP, so UDP was used, and TCP-style behaviour was implemented directly. Narveson suspects this is better than the built-in latency that comes with TCP, but a parallel version in a TCP-enabled language would need to be tested to be sure.

No Client / Server Differentiation

For simplicity and flexibility, LANdini is the same on each computer in the ensemble. In this way, members of the group can simply start LANdini at the beginning of a session and then let it run without worrying about having an extra server laptop on hand.

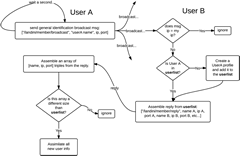

Dynamic User List

Each running copy of LANdini maintains a dynamically updated list of users (Fig. 1). This is achieved by each user broadcasting their name, IP address and port number once per second, and using incoming messages from other users to assemble a list of active LAN participants. Each user who receives a broadcast replies with a copy of their entire list, so that the sender of the original broadcast message can compare and add any users they have not detected yet.

Once connected, each user creates local user profiles of everyone on the network and sends regular status pings to everyone on their user list. These pings contain positional information as well as info pertinent to the “guaranteed delivery” and “ordered guaranteed delivery” protocols (more on these below). The most recent ping time is also stored and used for removing that user from the list if a predetermined amount of time elapses without an update. The currently implemented default is two seconds but this can be adjusted by the user as needed.

Local applications can ask LANdini for a copy of the current user list either as a simple set of names or as a “stage map”.
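The bookkeeping described in this section can be sketched in Python as follows. This is only an illustration of the idea, not LANdini's SuperCollider code: the class and method names are hypothetical, and the only values taken from the text are the name/IP/port contents of a broadcast and the two-second default timeout.

```python
from dataclasses import dataclass


@dataclass
class UserProfile:
    name: str
    ip: str
    port: int
    last_ping: float  # time (in seconds) this user was last heard from


class UserList:
    """Tracks active LAN participants and drops those that go quiet."""

    def __init__(self, timeout=2.0):
        self.timeout = timeout  # default taken from the text; adjustable
        self.users = {}

    def heard_from(self, name, ip, port, now):
        """Record a broadcast or ping; create the profile on first contact."""
        profile = self.users.get(name)
        if profile is None:
            self.users[name] = UserProfile(name, ip, port, now)
        else:
            profile.last_ping = now

    def merge(self, entries, now):
        """Add users from a peer's replied list that we have not detected yet."""
        for name, ip, port in entries:
            if name not in self.users:
                self.users[name] = UserProfile(name, ip, port, now)

    def prune(self, now):
        """Remove and return users whose last ping is older than the timeout."""
        stale = [n for n, p in self.users.items()
                 if now - p.last_ping > self.timeout]
        for n in stale:
            del self.users[n]
        return stale

    def names(self):
        return sorted(self.users)
```

Passing the current time in as `now` (rather than reading a clock inside the class) keeps the sketch easy to test; a real implementation would call `prune` on a timer.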

Minimal Change to Pre-Existing OSC Messages

In order to facilitate the updating of old pieces and the happy adoption of LANdini into new pieces, we wanted to change as little as possible about the way OSC messages were sent and received by composers’ patches. Incoming OSC messages are, from the perspective of the receiving patch, completely unchanged, requiring no updating in the composer’s code. Outgoing OSC messages are sent through LANdini and are simply prefaced with two extra strings: a protocol and a destination. The protocol tells LANdini how to send the message; the destination tells LANdini where to send it. Other outgoing OSC messages are sent to LANdini to request specific information, such as the current network time or user list.
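As a rough illustration of the prefixing idea, the Python sketch below represents an OSC message as a plain list and adds the two extra strings in front of it. The exact wire layout LANdini uses is not specified here, so the ordering is an assumption, and the function names, the destination "alice" and the address "/beepsh/pitch" are hypothetical.

```python
# Protocol names as given in this paper.
PROTOCOLS = ("/send", "/send/GD", "/send/OGD")


def wrap_for_landini(protocol, destination, address, *args):
    """Preface an ordinary OSC message with a protocol and a destination.

    Assumed layout: [protocol, destination, original address, original args...].
    """
    if protocol not in PROTOCOLS:
        raise ValueError(f"unknown protocol: {protocol}")
    return [protocol, destination, address, *args]


def unwrap(message):
    """Split a wrapped message back into its routing info and original message."""
    protocol, destination, address, *args = message
    return protocol, destination, [address, *args]


# A patch that used to send ["/beepsh/pitch", 60, 0.5] directly would
# instead hand this to the local LANdini instance:
outgoing = wrap_for_landini("/send/GD", "alice", "/beepsh/pitch", 60, 0.5)
```

The receiving patch only ever sees the original message, which is the last element returned by `unwrap`.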

Message Protocols for Different Tasks

LANdini uses three message protocols that are based on a subset of those found in Neil Cosgrove’s LNX Studio, mentioned above. In adopting the code, changes were made to accommodate the different internal organization of LANdini’s data structures, but the general strategies are similar. Each protocol is referred to by a name that is used as a prefix in outgoing OSC messages from locally running applications:

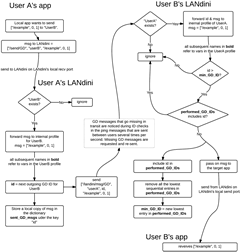

- /send — This is just normal OSC.

- /send/GD — The “guaranteed delivery” method, which indexes outgoing messages and stores local copies in a look-up dictionary in order to re-send upon request. Messages known to have been safely received are deleted on the sending computer in order to save memory. A more detailed summary can be seen in Figure 2.

- /send/OGD — The “ordered guaranteed delivery” method, which works in a manner similar to “/send/GD”, with the addition that incoming messages are stored in an intermediate queue and passed on to the local application strictly in order. As above, the sending computer deletes messages that are known to have arrived safely, and the receiving computer deletes messages as they are performed and leave the queue.
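The two guaranteed-delivery protocols above can be sketched in Python as a sending side that keeps copies until they are confirmed, and a receiving side that releases messages strictly in order. This is a simplified model under stated assumptions, not LANdini's implementation: the class names are hypothetical, IDs are assumed to start at 1 and increase by one, and the network itself is left out.

```python
class GDSender:
    """Indexes outgoing messages and keeps copies for re-sending on request."""

    def __init__(self):
        self.next_id = 1
        self.stored = {}  # id -> message, kept until known to have arrived

    def send(self, message):
        msg_id = self.next_id
        self.next_id += 1
        self.stored[msg_id] = message
        return msg_id, message  # what would be sent over the network

    def resend(self, msg_ids):
        """Return the stored copies for the requested IDs."""
        return [(i, self.stored[i]) for i in msg_ids if i in self.stored]

    def confirm_up_to(self, msg_id):
        """Delete copies of messages known to have been safely received."""
        for i in [k for k in self.stored if k <= msg_id]:
            del self.stored[i]


class OGDReceiver:
    """Holds incoming messages in a queue and performs them strictly in order."""

    def __init__(self):
        self.last_performed = 0
        self.queue = {}  # id -> message waiting for earlier IDs to arrive

    def receive(self, msg_id, message):
        """Queue a message; return every message that can now be performed."""
        if msg_id > self.last_performed:
            self.queue[msg_id] = message
        ready = []
        while self.last_performed + 1 in self.queue:
            self.last_performed += 1
            ready.append(self.queue.pop(self.last_performed))
        return ready
```

If message 2 of three is dropped, the receiver performs message 1, holds message 3, and releases 2 and 3 together once the re-sent copy of 2 arrives.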

Status Pings

The network’s current state is maintained through frequent status pings that are sent between all active users who are running LANdini. At the time of writing, these pings are sent 3 times per second by default. A breakdown of the contents of the ping and how they are used is provided below:

- Name

The sender’s name is used so that the receiver can put the rest of the info into the appropriate user profile. All variables mentioned below are stored in the receiver’s user profile for that particular sender and appear in bold; analogous ones exist for every separate user profile on the receiver’s machine. The underscores used in the variable names have been omitted for formatting purposes.

- Update position

The sender sends an updated current x/y location on the stage map.

- Check “/send/GD” IDs

The sender includes the ID of the last outgoing “/send/GD” message they sent the receiver. This is compared against the receiver’s **last incoming GD ID** variable: if the sender’s last outgoing ID is bigger, **last incoming GD ID** is updated to equal it.

The sender also includes the ID of the “/send/GD” message below which all earlier messages from the receiver have been safely received, so that the receiver can delete its locally stored copies of the messages it sent the sender. This keeps memory usage to a minimum.

The receiver looks at all the IDs in the range from (**min GD** + 1) to **last incoming GD ID**, and asks for re-sends of the ones whose IDs do not appear in the **performed GD IDs** list.

- Check “/send/OGD” IDs

The sender includes the ID of the last “/send/OGD” from the receiver that they performed, allowing the receiver to delete locally stored copies of those messages.

The ID of the last “/send/OGD” the sender sent the receiver is compared to the receiver’s **last performed OGD ID**. As above, all IDs between the receiver’s **last performed OGD ID** and the sender’s last sent “/send/OGD” ID are collected, and ones that do not appear in the receiver’s **msg queue for OGD** are requested to be re-sent.

- Check network time server

Every status ping message includes the name of the current network timeserver. In the event that the network timeserver leaves the group, the next user in alphabetical order is automatically chosen to take up the role. There is no deep reason for choosing alphabetical order as the organizing principle for this role: better methods will be explored and implemented in future versions of LANdini.
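The “/send/GD” check in the list above amounts to a small range computation on each incoming ping. The Python sketch below is one way to write it; the function and parameter names are hypothetical stand-ins for the variables described above (min GD, last incoming GD ID, performed GD IDs), and it is not taken from LANdini's source.

```python
def missing_gd_ids(min_gd, last_incoming_gd, performed_gd_ids, sender_last_outgoing):
    """Work out which "/send/GD" messages to ask the sender to re-send.

    Returns the (possibly updated) last incoming ID and the list of IDs
    in (min_gd, last_incoming_gd] that have not been performed yet.
    """
    # If the sender has sent more than we knew about, catch up.
    if sender_last_outgoing > last_incoming_gd:
        last_incoming_gd = sender_last_outgoing
    missing = [i for i in range(min_gd + 1, last_incoming_gd + 1)
               if i not in performed_gd_ids]
    return last_incoming_gd, missing


# Example: everything up to ID 3 is accounted for, 4, 5 and 7 have been
# performed, and the ping reports that the sender has sent up to ID 8.
last_id, to_request = missing_gd_ids(3, 5, {4, 5, 7}, 8)
# to_request is [6, 8]
```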

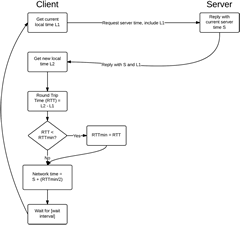

Synched Network Time

LANdini automatically establishes a shared network time on boot. At the moment, this is a simple implementation of Cristian’s Algorithm (Fig. 3), with the commonly added refinement of using the shortest recorded round-trip time. Once more than one player is on the network, the user with the first username in alphabetical order is assigned the role of network time server. If this server goes offline, the next user in alphabetical order takes over with minimal interruption. Network time can be polled and used as a reference when sending messages that need to be executed at a certain point in the future.
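A minimal Python sketch of the two ideas in this section follows: a clock-offset estimate in the style of Cristian's Algorithm that keeps the sample with the shortest round trip, and the alphabetical choice of time server. The function names and the sample format are assumptions made for illustration, and how LANdini treats upper and lower case in usernames is not specified, so plain string comparison is used.

```python
def clock_offset(samples):
    """Estimate (server clock - local clock) in seconds.

    Each sample is (t_sent, server_time, t_received): the local time the
    request was sent, the time reported by the server, and the local time
    the reply arrived.
    """
    # Refinement: trust the sample with the shortest round-trip time.
    t_sent, server_time, t_received = min(samples, key=lambda s: s[2] - s[0])
    round_trip = t_received - t_sent
    # Cristian's estimate: the reply spent about half the round trip in
    # flight, so the server's clock now reads server_time + round_trip / 2.
    return server_time + round_trip / 2 - t_received


def pick_timeserver(names):
    """Choose the first username in alphabetical order."""
    return min(names)
```

A client would then compute network time as its local clock reading plus the estimated offset. When the current time server disappears from the user list, calling `pick_timeserver` on the remaining names yields the next user in alphabetical order.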

Stage Map

This feature is, to our knowledge, unique to LANdini. Up until this point, composers have required players to choose a player number at the start-up of their patch; this is clearly vulnerable to human error and can result in lost rehearsal time or incorrect performances with missing and/or redundant parts. Moreover, requiring players to choose a player number does not necessarily scale well — some patches are “hard wired” to expect n number of players, sometimes with no better reason than the server needing to know ahead of time how many players there are.

LANdini’s stage map window (when opened) represents each user on the LAN with a simple named square. Imagining the window to represent the stage or area they are in, users arrange themselves in the window in relation to the other LAN members. This information is updated through the regular ping messages, and is therefore always current. Local applications can request a copy of the stage map as an OSC message containing ordered triples of name/x/y for each user. Once this data is received, it can be sorted and used to send commands to the ensemble in whatever order the local application specifies: left-to-right, front-to-back, or other, more subtle constructions, such as those found in Gil Weinberg’s paper on network topologies (Weinberg 2005).
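To show how a local application might use this data, the Python sketch below parses a stage map delivered as a flat sequence of name/x/y values and derives two orderings. The flat layout, the coordinate orientation (smaller x is further left, smaller y is nearer the front) and the function names are all assumptions for the sake of the example.

```python
def parse_stage_map(flat):
    """Turn [name, x, y, name, x, y, ...] into a list of (name, x, y) triples."""
    if len(flat) % 3 != 0:
        raise ValueError("stage map must contain name/x/y triples")
    return [(flat[i], float(flat[i + 1]), float(flat[i + 2]))
            for i in range(0, len(flat), 3)]


def left_to_right(stage_map):
    """Names ordered by x position, using y to break ties."""
    return [name for name, x, y in sorted(stage_map, key=lambda u: (u[1], u[2]))]


def front_to_back(stage_map):
    """Names ordered by y position, using x to break ties."""
    return [name for name, x, y in sorted(stage_map, key=lambda u: (u[2], u[1]))]


# Hypothetical stage map for three players.
stage = parse_stage_map(["dan", 0.8, 0.2, "jascha", 0.1, 0.5, "lainie", 0.4, 0.9])
```

A piece that passes material across the stage could then address its messages to the names returned by `left_to_right(stage)`, one after another.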

Asking users to arrange themselves on the stage map is also vulnerable to human error, of course; the thinking here is that having the ensemble do this at the start of a performance is safer and more convenient than choosing player numbers for every piece that requires a specific order.

Traffic Monitoring Windows

LANdini has windows for monitoring incoming OSC traffic on both the local and LAN ports. Text fields at the top of the windows allow users to filter the displayed messages by typing the text of the messages they want to see, which makes it easy to search for specific messages when debugging.

Performance

At the moment, LANdini has been used in several rehearsals and five concerts, with encouraging results. Background network traffic for the upkeep of the user lists and user status peaked at about 17 kbps for a group of 8 laptops, which is acceptably light background usage. As of this writing there has been no chance to test LANdini with a large group of 20 or more laptops, although this is a necessary and planned future step.

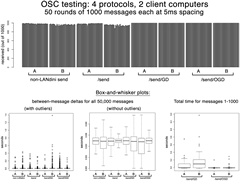

Narveson did run a smaller-scale test (Fig. 4), which consisted of four rounds: one for regular OSC without LANdini and one each for LANdini’s “/send”, “/send/GD” and “/send/OGD” protocols. The tests were performed with a D-Link Dir655 router (802.11n, 2.4 GHz), a 15” MacBook Pro from 2011 running OS 10.8.2 as the server, and two 13” white plastic MacBooks from 2009 running OS 10.6.8 as the clients. Each round consisted of 50 tests of 1000 messages from a server laptop to two client laptops. The tests were simple patches written in SuperCollider; successive tests of 1000 messages were separated by 3 seconds, and the messages themselves used a 5 ms spacing. The test patches recorded message ID, protocol and arrival time.

The results show a clear performance advantage to using the “/send/GD” and “/send/OGD” protocols, which managed a 100% arrival rate without any appreciable cost in terms of average message spacing or total time between the arrival of messages 1 and 1000 in a given test. As expected, regular non-LANdini OSC and LANdini’s “/send” protocol were matched in performance, with an arrival rate in the mid-to-high 90% range, depending on the machine.

The chart in the bottom left of Figure 4 shows outliers for the between-message deltas in these tests, reaching 1.4 seconds for the “/send/OGD” protocol on machine B in the worst case. This points to further work that needs to be done to make timing more reliable.

A positive side-effect of the LANdini GUI is that rehearsals and concerts are sped up by making it easy to confirm that everybody is on the network simply by looking at the user list. Previously, connection problems only showed up once we started noticing unresponsive behaviour while playing.

Example Implementations

To date, three pieces in our repertoire have been converted to make use of LANdini; below are short summaries of how this has worked so far.

Jascha Narveson — Beepsh (2009), for laptop orchestra, 2 synthesizers and 4 percussionists

Narveson’s piece Beepsh involves the group passing around pitch and rhythm sequences. The pre-LANdini implementation used its own method for establishing the player list and for establishing group pulse synchronization. The new version uses LANdini to get the list of available participants, and uses the simple “/send” protocol and network time for sending out the rapid pulse metronome messages. Pulses are scheduled for half a pulse of latency using LANdini’s network time, and this provides good synchronization. The “/send/GD” protocol is used for players to update the server about the beginning and end of their sequences, since these are more important pieces of information. The stage map functionality makes it easy to arrange the player messages in order so that sequences can be heard travelling around the group from one side of the stage to the other.

Jascha Narveson — In Line (2011) for multiple laptops with tether controllers

Narveson’s other piece, In Line, also involves group synch. In this case, a regular metronomic pulse at 1 pulse/second is sent to the entire group using the “/send” protocol with a scheduling latency of 100 ms, which performs well. Earlier versions of this piece relied on setting up an internal metronome which would be constantly corrected by “/pulse” messages from the server as they came in, which resulted in occasional jitter. Other, crucial messages about state-change in certain players are handled using the “/send/GD” protocol, again with very encouraging results; earlier versions of the piece relied on a “shotgun” approach to ensuring the arrival of these messages, with occasional glitches. This piece has a history of crashing the router, which the current LANdini-enabled version has not done to date.
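The scheduling used in these two pieces (half a pulse ahead in Beepsh, 100 ms ahead in In Line) can be sketched in Python as a timestamp computed by the sender and a wait computed by each receiver. The function names are hypothetical, and the sketch assumes both sides already share a network time in seconds.

```python
def schedule(network_now, latency):
    """Network time at which a message sent now should be performed."""
    return network_now + latency


def seconds_until(execute_at, network_now):
    """How long a receiver should still wait; zero if the message is late."""
    return max(0.0, execute_at - network_now)


# In Line style: a pulse sent at network time 12.0 with 100 ms of latency.
execute_at = schedule(12.0, 0.1)

# Beepsh style: half a pulse of latency, here for a 0.25 s pulse period.
half_pulse_ahead = schedule(12.0, 0.25 / 2)
```

Because every receiver waits until the same network time, messages that arrive at slightly different moments are still performed together, as long as they arrive before `execute_at`.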

Dan Trueman — Clapping Machine Music Variations [CMMV] (2010) for laptop duo and any number of acoustic instrumentalists

Trueman’s piece CMMV also uses a mixture of “/send” and “/send/GD” protocols, the former for time-sensitive beat information and the latter for less time-sensitive but musically important pitch information. Again, the performance of both has been very encouraging, with noticeable savings in rehearsal time due to the absence of networking problems.

Linked List Test

Trueman has long tried to implement a simple “linked list” style OSC test in ChucK (Wang and Cook 2003) in which two (or more) computers on a LAN pass a “play” message around a list: computers wait for the message and then, upon receiving it, play a simple sound and send a “play” message to the next user on the list. This deceptively simple test has so far failed to work, likely due to dropped packets. Using LANdini’s “/send/GD” protocol solves this particular issue and enables the test to run smoothly.
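The logic of this test is small enough to sketch in Python. The version below only simulates the passing order and leaves out sound and networking; ordering the list alphabetically is an assumption, as are the function names. It is not Trueman's ChucK code.

```python
def next_user(users, me):
    """The user after `me` in the (alphabetically ordered) list, wrapping around."""
    ordered = sorted(users)
    return ordered[(ordered.index(me) + 1) % len(ordered)]


def pass_play(users, start, hops):
    """Simulate the "play" message being handed on `hops` times.

    Returns the names of the users who played, in order.
    """
    played = []
    current = start
    for _ in range(hops):
        played.append(current)  # this user plays a sound...
        current = next_user(users, current)  # ...and passes "play" along
    return played
```

A single dropped "play" message stops the chain for good, which is why a guaranteed-delivery protocol matters for this test.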

Future Work

LANdini is currently being developed in SuperCollider, though porting it to other languages and environments is something that could be done if the need arose. One imminent future application is a port to iOS for Daniel Iglesia’s new mobile music platform MobMuPlat.

There is currently a SuperCollider class, LANdiniWatcher.sc, that takes care of regularly polling LANdini for network time and user list info. This saves composers working in SuperCollider from having to set up their own OSC loops to and from LANdini to access network information, thus cutting down on time and potential problems with implementation from piece to piece. Skeletal classes for Max and ChucK exist which currently handle polling for network time, but need to be fleshed out to handle the “stage map” information.

While LANdini has been used in performance with 8–9 players, it has yet to be carefully tested with larger groups. Testing large group sizes is important because LANdini’s background traffic currently grows quadratically with group size: a group of size n sends n*(n-1) ping messages every ping interval, and there are 3 ping intervals per second by default. For a group of 8 people this results in 8*7*3 = 168 pings per second; for 10 people that number goes up to 270 pings/sec, and, for 30 people, it would be 2610 pings/sec (!). Alternatives that get LANdini closer to n log n growth are clearly required, either in the form of automatic or manual throttles to the status ping frequency, a dynamic centralized server model (as is currently the case with network time, as explained above), or something else entirely. In general, more tests related to timing and latency need to be done, as well.
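The figures above follow directly from the n*(n-1) formula, as the short Python sketch below shows. The second function is purely hypothetical: it estimates the traffic for one possible centralized layout, in which every user exchanges pings only with a single server, and is included only to illustrate how much the growth rate matters.

```python
def mesh_pings_per_second(n, rate=3):
    """Pings per second when every user pings every other user."""
    return n * (n - 1) * rate


def star_pings_per_second(n, rate=3):
    """Hypothetical centralized layout: each of the other n - 1 users
    exchanges pings with one server (one ping in each direction)."""
    return 2 * (n - 1) * rate


for size in (8, 10, 30):
    print(size, mesh_pings_per_second(size), star_pings_per_second(size))
```

For 30 users the full mesh produces 2610 pings per second, while the hypothetical star layout would produce 174.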

At the time of writing, “/send/GD” looks for missing messages at each status ping, whereas “/send/OGD” looks for missing messages at the receipt of each new message. This should be changed since “/send/GD” currently shows marginally slower total completion times than “/send/OGD” (see Fig. 4).

Improvements to the implementation of synced network time include possible tweaks to the algorithm and the addition of visual feedback on the GUI about which machine is currently the timeserver. A manual override of this function should be implemented in the event that the current timeserver machine is faulty in some way. As already stated, using alphabetical order as an organizing principle for determining the time server is an ad hoc solution, and better methods need to be explored and implemented.

Bibliography

Beck, Stephen David. Personal Interview. August 2011.

Bencina, Ross. “Oscgroups.” Software utility. First version from 2005. http://www.rossbencina.com/code/oscgroups [Last accessed 9 November 2013]

Bukvic, Ivica Ico. Personal Interview. August 2011.

Cerqueria, Mark. “Synchronization over Networks for Live Laptop Performance.” Unpublished thesis, Princeton University, 2010. Available online at http://lorknet.cs.princeton.edu/cerqueira_thesis.pdf [Last accessed 9 November 2013]

Cosgrove, Neil. “LNX Studio, version 1.4.” Open source software. http://lnxstudio.sourceforge.net [Last accessed 9 November 2013]

Iglesia, Dan. “MobMuPlat.” Mobile music platform for iOS. First version 2013. http://mobmuplat.com [Last accessed 9 November 2013]

McCartney, James. “Rethinking the Computer Music Language: SuperCollider.” Computer Music Journal 26/4 (Winter 2002) “Language Inventors on the Future of Music Software,” pp. 61–68.

McKinney, Curtis and Chad McKinney. “Oscthulhu: Applying video game state-based synchronization to network computer music.” ICMC 2012: “Non-Cochlear Sound”. Proceedings of the 2012 International Computer Music Conference (Ljubljana, Slovenia: IRZU — Institute for Sonic Arts Research, 9–14 September 2012). http://www.icmc2012.si

Wang, Ge and Perry Cook. “ChucK: A concurrent, on-the-fly, audio programming language.” ICMC 2003. Proceedings of the International Computer Music Conference (Singapore: National University of Singapore, 29 September – 4 October 2003).

Weinberg, Gil. “Interconnected Musical Networks: Toward a theoretical framework.” Computer Music Journal 29/2 (Summer 2005) “Networks for Interdependent Music Performances,” pp. 23–39.

Wright, Matthew, Adrian Freed and Ali Momeni. “OpenSound Control: State of the art 2003.” NIME 2003. Proceedings of the 3rd International Conference on New Instruments for Musical Expression (Montréal: McGill University — Faculty of Music, 22–23 May 2003). Available online at http://www.nime.org/2003 http://www.nime.org/proceedings/2003/nime2003_153.pdf [Last accessed 9 November 2013]
