Space and Computer Music

A Survey of Methods, Systems and Musical Implications

Abstract

Spatialization is arguably one of the most important indicators of technical development in 20th-century music. With the systematic inclusion of space in musical composition, music finally escapes the old conceptual constraint of being a purely temporal art. The computer holds a special place in this development: it puts into effect new concepts that would have been inconceivable 50 years ago. The present article deals with the four most important indicators of spatial music, namely: Time Delay; The Role of Dynamics in the Simulation of Distance; Inner Sound Space Organization; and Movement in Space.

Introduction

It is through the nexus of space and time in 20th-century physics that musical space again captured attention after its brief success in the Venetian polychoral music of the 16th century. Although numerous examples show that musical space was never entirely forgotten after the 16th century, we must admit that it was never included so extensively in the arts, and particularly in music, until the 20th century. The rediscovery of the spatial dimension in music is found in the 1950s in the spatial separation of the sound sources, most noticeably instruments. Many instrumental works that show considerable interest in space as a new musical dimension can be mentioned: Universe Symphony (1911–51) by Charles Ives, Antiphony I (1953) by Henry Brant, Déserts (1954) and Poème électronique (1958) by Edgard Varèse, Gruppen (1955–57) by Karlheinz Stockhausen and Terretektorh (1965–66) by Iannis Xenakis. It is particularly important to cite the works of American avant-garde artists such as Charles Ives, Edgard Varèse and Henry Brant, who not only gave space an identity as a new dimension, but also created the theory behind sound spatialization based on the principles of psychoacoustic perception.

The contributions of the American avant-garde, the inheritance of the Viennese school, the emancipation of noise within the futurist movement, together with the development of new acoustic technologies, led the developments in music composition in the post-war period. Even elektronische Musik and musique concrète, two important musical directions of the time, consider space as the fifth independent dimension in music after tone color, duration, dynamics and pitch. While spatialization in instrumental music meant, at different times, varying the position of the instruments, the movements of the instrumentalists during playing and, in certain cases, a change of sound color (Gustav Mahler), in computer music it reaches even the innermost sound structures. The use of the computer as a modern tool in musical production began with far-reaching experiments and productions at the electronic music studio of Westdeutscher Rundfunk (WDR) in Cologne and at Radiodiffusion Française (RDF) in Paris. Initially, computer-assisted technology provided excellent solutions to the practical problems of live performances. Later on, it permitted the production of musical qualities that were not possible with earlier techniques such as additive sound synthesis or mechanical manipulation of the tape recorder.

The Four Most Important Indicators of Spatial Music

1. Time Delay

The process of spatializing sound gives rise to special sound qualities that lend the sound a physical volume. Time delay is one of the most important tools for producing these qualities. Different applications of time delay in computer music can accomplish the following goals: changing the tone color, the dynamic and spatial character of the sound (e.g. echo effects) and the spatial perception of the listener (i.e. sound localization).

The effects produced by time delay are not only experiments with acoustic phenomena for the examination of auditory perception, but also the creation of a separate musical language. An early example of the musical application of time delay in the 20th century is a Dada performance in 1920 at which a Beethoven symphony was played fast and slowly simultaneously. These sound effects are best discussed in terms of the behavior of the delay itself. If the delay does not change with time, i.e. if it remains constant while the original and the delayed signal are played, different effects can be obtained depending on its duration. A delay of up to 10 ms colors the sound so strongly that the effect can be compared with that of a low-pass filter, while delays between 10 and 40 ms produce a new envelope, i.e. a new dynamic course. If the delay is longer than 40 ms, a number of discrete recurring signals are perceived, which can be compared with the echo effect formed by a comb filter and can be used to form virtual spaces. If the delay changes with time, i.e. if it takes on different values according to a certain time pattern, certain frequency bands are cancelled or emphasized, resulting in effects such as flanging/phasing up to 10 ms, chorus between 10 and 40 ms, and echo above 40 ms.
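The delay ranges described above can be summarized in a short Python sketch. It is only an illustration: the function names `classify_delay` and `mix_with_delay` are hypothetical, the thresholds are the 10 ms and 40 ms boundaries given in the text, and the mixing function is a plain feedforward comb (original plus one delayed copy), not the method of any particular studio or composer.

```python
def classify_delay(delay_ms: float, modulated: bool = False) -> str:
    """Name the effect the text associates with a given delay time.

    modulated=False means a constant delay, modulated=True a delay
    that changes over time (illustrative labels, not a standard API).
    """
    if delay_ms < 0:
        raise ValueError("delay must be non-negative")
    if delay_ms <= 10:
        return "flanging/phasing" if modulated else "coloration"
    if delay_ms <= 40:
        return "chorus" if modulated else "envelope change"
    return "echo"


def mix_with_delay(signal, delay_samples, gain=1.0):
    """Feedforward comb: y[n] = x[n] + gain * x[n - delay_samples]."""
    out = []
    for n in range(len(signal) + delay_samples):
        dry = signal[n] if n < len(signal) else 0.0
        k = n - delay_samples
        wet = signal[k] if 0 <= k < len(signal) else 0.0
        out.append(dry + gain * wet)
    return out
```

At a sampling rate of 44.1 kHz (an assumed value), a delay of 40 ms corresponds to 1764 samples, so `mix_with_delay(x, 1764)` sits at the boundary between the envelope range and the echo range.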

The perception of distance and direction conveyed by spatial sound makes sound localization in space possible. Right-left, front-back and elevation perception are the most important factors in recognizing sound direction. Right-left perception depends directly on temporal relations. With a lateral sound source position, time differences in the signal arise between the ears in the azimuth plane, which, together with the level differences in the horizontal plane, form the main parameters of direction in the perception of a sound (“Duplex Theory” [1] in Begault 1994, 39). The effects of the run-time differences and level differences become apparent, for example, in the work Vanishing Point (1989) by Chris Chafe, in which four audio channels, differing both in dynamic level and temporal characteristics, are mixed down to two tracks. The spatial effects of temporal delay had already been used by the American composer Pauline Oliveros at the beginning of the 1960s. Oliveros recorded sounds on tape at two or three different points in time to gain control over the spatial behavior of the sounds, a technique she used directly in her first compositions such as The Bath (1966), I of IV (1966) and Bye Bye Butterfly (1965).
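To give a sense of the magnitude of the interaural time difference, the following sketch uses Woodworth's spherical-head approximation, ITD = (r / c)(θ + sin θ). This formula, the head radius of 8.75 cm and the speed of sound of 343 m/s are assumptions brought in for illustration; they do not come from the works discussed here, and the level difference is left out because it depends strongly on frequency.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # assumed, m/s in air at about 20 degrees C


def itd_seconds(azimuth_deg: float) -> float:
    """Approximate interaural time difference for a distant source.

    azimuth_deg runs from -90 (one side) through 0 (front) to +90
    (the other side); the sign of the result follows the azimuth.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie between -90 and 90 degrees")
    sign = 1.0 if azimuth_deg >= 0 else -1.0
    theta = math.radians(abs(azimuth_deg))
    return sign * HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))
```

Under these assumptions a fully lateral source yields a difference of roughly two thirds of a millisecond, which is well inside the 10 ms range in which a delay is heard as coloration or direction rather than as an echo.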

Since the repetitions of a signal in a reverberant space can be defined and simulated by their temporal structure, a similar temporal structure can in turn be associated with a reverberant space. Furthermore, the simulation of a space can be translated into the simulation of its reverberation time. In this context, the experiments of Gary Kendall and William Martens in the 1980s on the precise elaboration and inclusion of space simulation in music must also be mentioned. Kendall and Martens criticize the earlier methods of space simulation and try to design a system that takes the temporal and spectral factors of spatial hearing into account, so that the listener can grasp the sounds more precisely and even perceive them as real sound sources.

One of the most common methods of spatialization is the duplication of a mono input signal into stereophonic, quadraphonic or larger formats with the help of time delay. The duplication of the audio channels not only multiplies the number of sound sources and disperses them in the listening space, but also influences the impression of space considerably. Since this method starts from the splitting of the sound in space, i.e. a reorganization of the spatial components of the sound, it is described with the term decorrelation. A decorrelated mono signal is a signal which remains faithful to its spectral, temporal and dynamic character but behaves differently spatially. Decorrelation has a considerable influence on spatial hearing and offers control over the geometric design of the sound in space.

Decorrelation is noticeable in a performance system known as Ambisonics Surround Sound (ASS). ASS describes a number of methods developed in the 1970s for the recording, processing and playback of audio. Unlike quadraphonic sound, all loudspeakers contribute to the playback of the audio here. The number of loudspeakers in Ambisonics is usually not fixed and depends on the size of the space. However, a minimum of four loudspeakers is required for playback in the horizontal plane. The number of loudspeakers can be increased without having to provide additional information. At least six or eight loudspeakers are recommended for periphony, i.e. three-dimensional playback. The weakest point of ASS is that no phantom localization is possible in this system. In other words, it is not possible to isolate a loudspeaker or place a sound between two loudspeakers as is done in stereophonic and quadraphonic sound systems. This is because, with ASS, all loudspeakers are involved in the playback of the produced sound. Although selective localization causes difficulties in Ambisonics, the realization of sound movement, particularly a circular sound path in space, is very simple. Among the greatest advantages of Ambisonics are its independence from the listening space and the unspecified number of loudspeakers. Malham and Myatt define three types of sound movement which work particularly well with Ambisonics. These movements are three different circular sound paths: Rotation (a rotation around the z-axis), Tilt (a rotation around the x-axis) and Tumble (a rotation around the y-axis) (Malham and Myatt, 1995). Musical examples of Ambisonics are, for instance, Pyrotechnics (1996) by Ambrose Field, VOX 1 (1982) by Trevor Wishart and What a Difference a Day Makes (1997) by Tim Ward. Another interesting work is John Richards’ Spherical Construction (1997). The composition is based on a sculpture of different rings constructed by Aleksander Rodchenko. To translate these rings into sound, Richards uses two programs: Csound Orchestras and Scores by Richard Furse, and LAmb by Dylan Menzies-Gow, both developed at the University of York in England. Here the physical and static rings of Rodchenko’s sculpture are transformed into audible and dynamic rotations in time. This is achieved through rotational panning with different diameters, changing pitch and speed over time and modifying the axes and directions of the rotations.
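Why circular movement is so simple in Ambisonics can be seen in a small sketch of first-order encoding and the Rotation movement around the z-axis. The sketch assumes the traditional first-order B-format convention (four channels W, X, Y, Z with W scaled by 1/√2, azimuth measured counterclockwise from the front); the function names are hypothetical and the code is not taken from the Csound instruments or from LAmb.

```python
import math


def encode_bformat(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample into first-order B-format (W, X, Y, Z)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front-back component
    y = sample * math.sin(az) * math.cos(el)   # left-right component
    z = sample * math.sin(el)                  # up-down component
    return (w, x, y, z)


def rotate_z(bformat, angle_deg):
    """Rotate an encoded sound field around the vertical (z) axis."""
    w, x, y, z = bformat
    a = math.radians(angle_deg)
    return (
        w,                                     # W is unaffected by rotation
        x * math.cos(a) - y * math.sin(a),
        x * math.sin(a) + y * math.cos(a),
        z,                                     # z-axis rotation leaves Z alone
    )
```

Rotating the whole field is a 2 × 2 matrix applied to two channels, independent of the number or position of the loudspeakers; increasing the angle a little on every sample block would produce a circular sound path. Tilt and Tumble follow the same pattern with the channel pairs (Y, Z) and (X, Z).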

2. The Role of Dynamics in the Simulation of Distance

The composers of the 19th century succeeded in delivering imaginary and real spaces with acoustic sound sources (instruments). It was only possible in the 20th century to do the same with imaginary sound sources. The fact that loudspeakers do not offer the same flexibility of movement as the instrumentalists must have been the origin of the idea in the history of computer music that loudspeakers should not be put into movement in order to effect change on the position of the sound in space. Instead, the indicators of the spatial perception must be simulated. An important aspect here is the treatment of the dynamic relationships of the sound components in a musical event which had been known in instrumental music for a long period of time.

Dynamics is a contrast-forming factor, but in instrumental music it is also a tool for placing a certain sound in the foreground or background. In computer music, dynamics accomplishes the first task and is also used to create a real spatial dimension. Dynamics is therefore often mentioned in connection with the simulation of distance. The reason is that in everyday hearing experience, weak sounds give an impression of distance while loud sounds seem nearer. With the help of simulated dynamics, it is thus possible to let sounds come closer to or move farther from the listener. Additional factors should not be forgotten in this context. The spectral character, as it unfolds over the time course of the sound, plays a decisive role here. The interaction of dynamics and the spectral character of the sound is comparable with that of size and color changes in the visual domain, in which a change of size also generates an impression of distance. Understanding the impression of distance is actually so complicated that Begault describes distance perception as inaccurate and distinguishes between relative and absolute distance: “This is because distance perception is multidimensional, involving the interaction of several cues that can even be contradictory.” (Begault 1994, 25).
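The link between loudness and distance can be sketched with the inverse distance law for a point source in a free field, in which amplitude falls in proportion to 1/d. This law is an assumption added here for illustration; as the Begault quotation makes clear, real distance perception involves further cues (spectrum, reverberation) that this sketch ignores. The function names are hypothetical.

```python
import math


def distance_gain(distance_m: float, reference_m: float = 1.0) -> float:
    """Amplitude gain of a source at distance_m relative to reference_m.

    Assumes a point source in a free field (amplitude proportional to 1/d).
    """
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return reference_m / distance_m


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)
```

Under this assumption every doubling of the distance lowers the level by about 6 dB, so a sound can be made to approach or recede simply by scaling its amplitude over time.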

The importance of dynamic relations in the configuration of space is particularly noticeable in works in which loudspeakers are combined with instruments. Numerous works in the history of electroacoustic music, from Kontakte (1959–60) by Stockhausen to Répons (1981–84) and Dialogue de l’ombre double (1985) by Boulez, are based on this idea. Another piece worth mentioning is by the Texan composer Larry Austin and is composed exclusively for a single loudspeaker and a viola. The work is called Catalogo Sonoro for Viola and Tape. The piece is subtitled Narcisso, an allusion to the moment when the viola player looks into the surface of the water, here the only loudspeaker, and plays and admires his viola, which is heard repeatedly on the tape. The piece was performed at the International Computer Music Conference at Northwestern University in Chicago, Illinois in 1978, and it aroused both curiosity and astonishment since Austin insisted on using a single small loudspeaker instead of a quadraphonic loudspeaker system.

The history of using distance impressions musically can be traced back to the Romantic period. It was discovered relatively early in the 19th century how the control of dynamics (one of the inheritances of the Baroque period) and of the spectral character of a sound can produce a wide range of variation in musical perception. The technique was simple. Reducing or multiplying the number of instruments, regulating the dynamic course of a musical event and even manipulating the physical distance of the instruments were part of the compositional technique in Romantic music.

The compositional application of the distance parameter, i.e. the simulation of distance, is, however, part of the daily work routine of every composer of computer music today. Michael McNabb’s Dreamsong is one example in which the distance parameter plays a central role in the composition. Dreamsong is a stereo composition and was composed in 1977/78 at CCRMA. McNabb designs a spatial organization of both synthetic and concrete sounds with a frontal depth of 15 meters. Technically, distance is used here in two ways. The most interesting sound starts 29 seconds after the beginning of the piece and describes a star and a circle.

3. Inner Sound Space Organization

In view of the important role of the inner organization of sound and the dependence of auditory perception on the arrangement of frequencies, sound localization will be discussed here at length. Consonant-rich or vowel-rich languages, male or female voices and synthetic or natural sounds are examples of contrasting sounds which trace the identity of a sound back to its spectral distinctiveness. Acousticians are fond of saying that sound localization by “normal hearing” of “normal sounds” is easier in daily situations than in concert situations because natural sounds contain phase information at low frequencies and spectral information and impulse components at high frequencies. Although the spatial arrangement of sound components is regarded as an important factor in this context, the question would turn on pitch if the sound were reduced to a single tone. As a result, sound key set and pitch seem to be the most important acoustic features of a sound regarding sound localization, in addition to the spatial and dynamic configuration of sound components.

While the basic prerequisites of azimuthal sound localization are binaural, because of the importance of run-time differences and level differences, the prerequisites for the perception of elevation indicate monaural hearing. The impression of elevation is simulated mainly by additional loudspeakers; tests that tried to simulate this perception with loudspeakers arranged only in the azimuth plane first appeared at the beginning of the 1970s. This technology has been used largely in popular music since then, but in the composition buzzingreynold’sdreamland (1994) by Henry Gwiazda, which uses Bo Gehring’s Focal Point software, the stereophonic loudspeaker system is used for the first time for the creation of three-dimensional music in real space.

Although today’s concert halls play an important role in the development and dissemination of 20th-century music, it is clear that portable music was a turning point in the history of music listening. The increase in binaural production and in the simulation of spatial features through the Head-Related Transfer Function (HRTF) in recent years is a sign of the success of this kind of spatial music. To be mentioned here are the CD productions of the company Abadone: the recordings of G. Silberman-Organ, Ensemble Oriol Berlin and an interesting CD by a Russian vocal ensemble, ALEKO, in which the spatial sound positioning is designed so that:

you can readily determine which voices belong to short men and which to tall ones, and, if you close your eyes or turn down the lights, you will be able to judge exactly how far you’d need to reach to touch the man on the left. [2]

Headphone compositions are a relatively new form of computer music which, since the 1980s, points to an increase in the use of 3D technology. For example, the works Audioearotica (1986) by Gordon Mumma, Duet (1995) by Ian Chuprun and thefLuteintheworLdthefLuteistheworLd (1995) by Henry Gwiazda are clear examples of this trend.

The partitioning of sound into different frequency ranges in order to diffuse it over spatially separated or differently directed loudspeakers is carried out with the concept of the Gmebaphone. This performance instrument was conceived by Christian Clozier, though the actual instrument was realized by Jean-Claude Le Duc at the beginning of the 1970s in L’Atelier de Recherches Technologiques Appliquées au Musical (ARTAM) at the Groupe de musique expérimentale de Bourges (GMEB). The original was further developed and improved, and new versions were introduced in 1975 and 1979. The splitting of sound in space with the Gmebaphone is used not only for the spatialization of sound components, but also for the creation of an acoustic space, which is made exceptionally vigorous and dynamic by the transformation of small, inaudible movements in sound space into marked and clear movements in real space. The Gmebaphone is a hybrid system in which the loudspeakers are arranged both frontally and ambiently. Low frequencies are projected via a single loudspeaker on the stage, while high frequencies are diffused over the ambient loudspeakers.
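The principle of routing frequency ranges to different loudspeakers can be sketched with a two-way split. The sketch is a deliberate simplification and not a description of the Gmebaphone's actual filters: it assumes a one-pole low-pass filter for the stage loudspeaker and takes the remainder of the signal as the high band for the ambient loudspeakers. The function name and the `smoothing` parameter are hypothetical.

```python
def split_low_high(signal, smoothing=0.1):
    """Split a signal into complementary low and high bands.

    low  : one-pole low-pass output (feed for the stage loudspeaker)
    high : signal minus low (feed for the ambient loudspeakers)
    A smaller smoothing value means a lower crossover frequency.
    """
    if not 0.0 < smoothing <= 1.0:
        raise ValueError("smoothing must lie in (0, 1]")
    low, high = [], []
    state = 0.0
    for x in signal:
        state += smoothing * (x - state)   # one-pole low-pass update
        low.append(state)
        high.append(x - state)             # complementary high band
    return low, high
```

Because the two bands are complementary, adding them restores the original signal, so the split only redistributes the sound in space without changing its content. A system with more bands would repeat the same idea with further filters.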

The distinction of timbres as a spatializing tool is also reflected in the 8-track tape work Mortuos Plango, Vivos Voco by Jonathan Harvey. The work was realized at IRCAM in 1980. The sound material underlying the composition consists of the sounds of the bells of Winchester Cathedral in England and the voice of Harvey’s young son. The analytical treatment of the spectrum of the bells extends over both temporal and spatial dimensions here. The composition, which lasts 8 minutes and 58 seconds, is divided into eight sections. For each section, a low sound component of the bells is central, i.e. the eight lowest sound components of the bells determine the spectral character of the eight sections. The spatial extension of the sound components appears in different ways in the composition, often by partitioning the sound components of the bell sound among eight loudspeakers.

When considering inner sound movement, one must mention the experiments of Roger Shepard, Ken Knowlton and particularly those of Jean-Claude Risset. In the 1960s, at Bell Laboratories, Risset developed a means of manipulating the dynamic, spatial and temporal configuration of sound components to produce imaginary movements. He speaks of Pitch-Effect, Rhythm-Effect and Spatial Effect and of how he used them in his composition Moment Newtoniens in late 1977. Pitch-Effect, for example, involves moving a solid block of ten sound components, which lie one octave apart from each other, along the x-axis. The envelope also moves in the same direction if the amplitude of every component remains unchanged. However, this is not the case here. By reinforcing the dynamics of the high components, Risset moves the envelope in the opposite direction. Risset enacts these paradoxical movements, i.e. becoming lower and at the same time louder, in his works Trois Mouvements Newtoniens (1978), Voilements (1987), Attracteurs Etranges (1988) and at the beginning of Passages (1982).
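The block of ten octave-spaced components under a movable envelope can be sketched as follows. The Gaussian envelope over log-frequency, the base frequency of 20 Hz and all names are assumptions made for illustration; the sketch computes only frequencies and amplitudes and is not a reconstruction of Risset's own programs.

```python
import math


def shepard_components(shift_octaves, n_components=10, base_hz=20.0,
                       center_octave=5.0, width_octaves=2.0):
    """Return sorted (frequency, amplitude) pairs for octave-spaced partials.

    shift_octaves : how far the block of partials has glided (negative = down);
                    partials leaving one end re-enter at the other.
    center_octave : position of the amplitude envelope above base_hz;
                    moving it is independent of the glide of the partials.
    """
    components = []
    for k in range(n_components):
        position = (k + shift_octaves) % n_components   # octaves above base
        freq = base_hz * 2.0 ** position
        amp = math.exp(-0.5 * ((position - center_octave) / width_octaves) ** 2)
        components.append((freq, amp))
    return sorted(components)
```

With a fixed envelope, a glide of exactly one octave reproduces the starting spectrum, which is the circularity behind the endless glissando. Letting `shift_octaves` fall while `center_octave` rises moves the partials and the envelope in opposite directions, which is the kind of paradoxical movement described above.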

4. Movement in Space

Sound movement is one of the most important features of spatial music. Sound movement refers to sound structures in both inner and real space which, in the transition from one position to another, go through an audible change in at least one of their musical dimensions: tone color, rhythm, dynamics or spatial parameters. Relating sound movement to subjective auditory perception can be problematic, particularly in computer-assisted music, because some sound movements do not fulfill any function in the musical event, especially the minimal movements produced by subtle sound modifications, e.g. in granular sound synthesis, which are hardly audible. Although the change of sound in different dimensions of music is the core of musical movement, the temporal behavior of these changes plays a decisive role in whether the changes can be regarded as movement at all. They can, in some cases, move the music into a definite static state. Musical sound movement, as is the case with mechanical physical movement, should be, from the point of view of time, a sustained sequence of sound structures. The musical continuum, in which the movement is embodied, plays an important role here for the temporal construction of sound movement.

Moving a sound in space and including such movement in musical composition has been of interest since the emergence of musique concrète and elektronische Musik. Stereophonic technology permitted a fairly linear sound movement in the space between the loudspeakers, but a sound movement which covers the whole azimuth plane, though not elevation, is possible only with electronic simulation. The computer-assisted simulation of sound movement, however, is not possible without a better understanding of the dynamic behavior of sound in space, as John Chowning explained in The Simulation of Moving Sound Sources in the 1970s. Movement is one of the four parameters, together with reverberation, distance and direction angle, which Chowning includes in the outline of his quadraphonic system to produce a virtual space. Chowning emphasizes the virtuality of this space. According to him, distance and movement are only psychoacoustic impressions which could not exist outside the imagination of the listener. Chowning’s most important contribution to the simulation of sound movement is the inclusion of the Doppler effect (Doppler shift) in his work Turenas (1972).

Turenas was not the first instance of use of Doppler Shift because I did a lot of experiments to develop the technique and it took me some while because there was a lot it was not known. I think the distance cue particularly was well known and may be at the acoustic community, but it was not terribly prominent. It was no data that I could latch onto easily to build into a computer program, so I had to do some number of experiments and of course in doing of experiments one makes lots of mistakes for example I thought, well I use a kind of source tone that has a sharp attack with exponential envelope. If that was over exponentially modulated sound this way, you can’t hear the reverberation very clearly with that kind of signal, because there is no pitch variation. You can hear repeated attack, but the idea of full reverberation is not apparent, because I didn’t know enough. All the things that are natural tones were not easily done in the early days of computers. (Chowning interviewed by Zelli, 1998)
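The Doppler shift that Chowning refers to can be illustrated with the textbook relation for a moving source and a stationary listener, f' = f · c / (c − v), where v is the speed of the source toward the listener. The sketch below only evaluates this relation; the speed of sound of 343 m/s and the function name are assumptions, and nothing here claims to reproduce the program used for Turenas.

```python
SPEED_OF_SOUND = 343.0  # assumed, m/s in air


def doppler_frequency(source_hz, radial_velocity_ms,
                      speed_of_sound=SPEED_OF_SOUND):
    """Frequency heard by a stationary listener.

    radial_velocity_ms > 0 : source approaches, pitch rises
    radial_velocity_ms < 0 : source recedes, pitch falls
    """
    if abs(radial_velocity_ms) >= speed_of_sound:
        raise ValueError("source speed must stay below the speed of sound")
    return source_hz * speed_of_sound / (speed_of_sound - radial_velocity_ms)
```

For a simulated trajectory, the radial velocity is the rate at which the distance between source and listener changes, so the pitch change follows directly from the same distance values that control the amplitude.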

Trevor Wishart is perhaps the only composer who not only includes movement as a main element in his compositional work, but also tries to categorize it theoretically. Movement categories become a musical language in the work VOX 1 (1982) from the six-part cycle VOX, which he composed between 1982 and 1988. Both VOX 1 and VOX 5 are encoded for ambisonic and quadraphonic sound systems. Electroacoustic music has produced numerous forms of spatial sound movement in its 50-year existence. An interesting work is Alchemy by the American composer Elizabeth Hoffman, composed in 1996 in the USA. Hoffman investigates a temporal variation of sound metamorphoses or, as she describes it, “a chemical transformation of sounds.” Throughout this process, however, the wind sound remains an important sound substance which spreads in stereophonic space. In this way, the essential sound transfiguration is distinguished from the untransformed wind sound and creates a contrast between transformed and unchanged, and between moving and silent.

For Annette Vande Gorne and her first 8-track work, Terre, movement is an inseparable part of sound, marking not only the development of sound material over the course of time but also in a double-sided relation to it. Terre is the last part of the cycle Tao, which was realized in 1991 at INA/GRM and is a good example of how sound art and dynamic elements can stand by each other in intimate relation, a relation which is dominated by the dynamic character of the sound movements and also determines the order of the sounds.

Sound movement is also a decisive point in the work Cycle de l’errance by Francis Dhomont. The cycle was composed over a period of eight years from 1981 to 1989 and commissioned by Claude Schryer from The Banff Centre for the Arts in Canada. The journey through the approximately 76-minute cycle can be summarized with one word: movement.

Conclusions

The inclusion of space in computer-assisted music has been examined. It was shown how the spatial dimension was made possible by computer-assisted technology. The roles of time delay, dynamics, inner sound space organization and movement were discussed and illustrated with examples from acoustics, psychoacoustics and music. The following conclusions can be drawn: firstly, time delay is the most important factor in the production of spatial qualities. Secondly, dynamic differences are decisive for distance impressions. Thirdly, inner sound space organization is an important starting point for sound projection in the performance space and for the simulation of elevation with a small number of loudspeakers. Fourthly, sound movement is the most distinct way of articulating space.

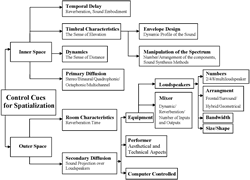

In conclusion the following diagram illustrates an outline of the most important cues for using spatialization as compositional and performing tools.

Acknowledgements

My gratitude is extended to all the artists mentioned in this article, especially Mr. John Chowning for the interviews and sharing of materials with me, and Prof. Dr. Helga de la Motte-Haber, whose support and guidance were a great help in writing the article. Many thanks to Mrs. Allison Brimmer, Mr. Kevin Austin and Ms. Mehri Barabari for their editorial and technical help and commentary.

Notes

[1] A theory proposed by Lord Rayleigh which states that there are two cues for human binaural sound localization: Interaural Time Difference (ITD) and Interaural Level Difference (ILD).

[2] Fanfare, The Magazine for Serious Record Collectors, January/February 1997, reprinted online in The Binaural Bulletin. http://www.binaural.com/binbb.html

Bibliography

Begault, Durand R. 3D Sound for Virtual Reality and Multimedia. San Diego: Academic Press, 1994.

Malham, David G. and Anthony Myatt. “3D Sound Spatialization using Ambisonic Techniques.” Computer Music Journal 19/4 (1995).

Zelli, Bijan. Interview with John Chowning. 3 June 1998.
